jugghm / penet_icra2021 Goto Github PK

View Code? Open in Web Editor NEWICRA 2021 "Towards Precise and Efficient Image Guided Depth Completion"

License: MIT License

ICRA 2021 "Towards Precise and Efficient Image Guided Depth Completion"

License: MIT License

Hello, I am still training on PENet with NYU dataset, please help me to take a look. The third one in this graph is the predicted result, right? I think this also proves that this network can run on the NYU dataset, is that correct? Because I want to know if this network is suitable for a densely labeled dataset, thanks. Looking forward to your recovery.

Hi,

Thanks for the code, I am using 4 GPU's to train the model and training pass goes well. However when It starts validation i receive an error given below.

RuntimeError: Caught RuntimeError in replica 0 on device 0.

Original Traceback (most recent call last):

File "/opt/conda/lib/python3.6/site-packages/torch/nn/parallel/parallel_apply.py", line 60, in _worker

output = module(*input, **kwargs)

File "/opt/conda/lib/python3.6/site-packages/torch/nn/modules/module.py", line 545, in __call__

result = self.forward(*input, **kwargs)

File "/home/nazir/PENet_ICRA2021/model.py", line 440, in forward

sparsed_feature3 = self.depth_layer3(sparsed_feature2_plus, geo_s2, geo_s3) # b 64 88 304

File "/opt/conda/lib/python3.6/site-packages/torch/nn/modules/module.py", line 545, in __call__

result = self.forward(*input, **kwargs)

File "/home/nazir/PENet_ICRA2021/basic.py", line 312, in forward

x = torch.cat((x, g1), 1)

RuntimeError: invalid argument 0: Sizes of tensors must match except in dimension 1. Got 176 and 160 in dimension 2 at /tmp/pip-req-build-akjifb_7/aten/src/THC/generic/THCTensorMath.cu:71

I assume this is due to the multi-gpu training. I see that you have also used 2 GPUs for training, did you see this issue while training?

Thanks,

Hello

I now want to use the PENet network architecture to complete depth completion, because I only need the dataset at hand, so I found the HandNet dataset, which contains depth data and image data collected by the realsense series of depth cameras. I especially want to know where do I need to modify the code? Thank you in advance!

Looking forward to your reply!

I'm so sorry to bother you again. I watched the NLSPN network a few days ago, but I still want to use the NYU dataset on PENet to see the effect. I especially want to know why the prediction error is so large. Because I have been reading this article before. So I may still need to ask you a few questions:

Hi!

I just want to know whether the released pre-trained models the best models which can reproduce the results presented on the paper?

Hi, recently I've been through your great work, I would like to train the network , but which classes should I to download, you know that, KITTI-raw dataset is too big, so please guide me which classes in KITTI-raw dataset for training. Thanks in advance!

Hi there,

thank you very much for your excellent work and for publishing it.

I am trying to implement a "light weight" version of the ENet which aims to be faster in computation.

In order to have the runtime of the ENet on my hardware (Tesla V100 GPU) as a benchmark, I was trying to measure it. Being aware of this issue #4, I took account of the torch.cuda.synchronize() command.

Measuring the time this way, I obtained 10,5 ms runtime for processing a single image.

However, I realized that I can not compute more than 6 depth images per second (while having a GPU load of 100 %), which indicated to me that something was wrong.

Doing further investigations, I came across the PyTorch profiler which seems to be the official tool for correct GPU time measurement . Measuring the time that way I got 150 ms, which is in accordance with my maximum frame rate, as data preprocessing on the CPU comes on top.

Is it possible, that the times you measured are still not the proper execution times of the network, but rather the kernel launch times ?

Hi,

I just had a few questions regarding using our own data and running inference using PENet pretrained weights.

How sparse can the depth map be?

Currently, my inference image is from the Kitti360 dataset which is quite similar to the previous kitti that the network was trained on. But there is no GT depth to sample the depth from. So my sparse depth map is quite sparse.

When I run inference on this image, the prediction is also sparse i.e I have prediction only in the regions covered by the sparse depth map. Is this an expected behaviour?

What should my input be for 'positions' (i.e the cropped image), I don't want to crop the images for running inference, so should I just set input['positions'] = input['rgb']?

It would be great if you can answer these questions when time permits :)

Regards,

Shrisha

I want to infer using your model on kitti .bin files but your model take .png files. So if it's possible to provide the code for this conversion.

Thanks in advance.

Line 216 in ee4318a

How long it takes to train the model one epoch?

Hi!I need to infer image with size 370*1226 without cropping. Is there any args in the code that I can change to directly conduct it?

Hi and thank you very much for your great repo.

As it was mentioned in #43 before, you did not normalize the input data. However, as far as I know it is common practice to do so for a more efficient training and keeping weights and biases small. May I ask why you decided to not normalize your data?

Hello,

I'm now working on the cross-modality detection tasks in 3D space.

Since the SFDNet uses this method as their depth completion way, so I try this repo as well.

The following is the output of PENet and the outcome I obtained when I project them back.

Does it look correct?

I know that the recovered 3d position from the depth map is suffering from the artifacts as u discuss in this issue: #3.

But it looks more severe far beyond my expectation.

Thanks for any help

Good day,

First of all, congratulations on your work and paper. The idea of separating depth-dominant and color-dominant branches is interesting. Also, thank you for releasing the source code to the public. I have been replicating your code the past few days, and so far inferencing has been straightforward (I am getting RMSE scores at around ~760).

However, correct me if I'm wrong but I think there might be a mistake in the inference time computation. In main.py line 213/216, this is where the predictions are generated from the ENet/PENet models, after which gpu_time is computed. I tried adding a print(pred) function call (see in the image below).

I got very different inference times with and without the print(pred) function call. I ran this on a machine with RTX 2080Ti, i7-9700k, CUDA 11.2, torch==1.3.1, torchvision==0.4.2. Below are my runtimes:

original code - a bit faster than your official runtime presumably due to my newer CUDA version(?)

modified code - much slower when print(pred) was added

My understanding is that calling pred = model(batch_data) does not yet run the model prediction; the model inference only actually runs when you call result.evaluate() in line 268 (i.e. lazy execution):

This results in a nearly x10 increase in inference time (i.e. 151ms vs 17ms). Can you confirm that this also happens in your environment?

Hi,

I am trying to load the e.pth.tar model and I am not able to do it. All I am doing is

import torch

torch.load('e.pth.tar', 'cuda:0')

and it gives me this error:

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/home/core_uc/.local/lib/python3.6/site-packages/torch/serialization.py", line 608, in load

return _legacy_load(opened_file, map_location, pickle_module, **pickle_load_args)

File "/home/core_uc/.local/lib/python3.6/site-packages/torch/serialization.py", line 787, in _legacy_load

result = unpickler.load()

ModuleNotFoundError: No module named 'metrics'

Would you know what could be the issue? I didpip3 install metrics just in case but it did not work.

Hi, I am wondering to use 3 inputs like as if now you are using 2 inputs like Sparse depth map and corresponding RGB, Is that possible to use one more input like Coarse dense depth map got from hand crafted methods with corresponding to sparse depth map ? please let me know if its possible, Thanks in advance .

Thank you for your amazing work!

I have an interest to use the ENet depth completion model. It is working very well with the KITTI database, but when I try to feed it with images of different size (640x360) instead of (1216x352) I am facing this error:

RuntimeError: Given groups=1, weight of size [32, 4, 5, 5], expected input[1, 5, 360, 640] to have 4 channels, but got 5 channels instead.

Is the input layer dedicated to this form factor? What is your recommendation to adapt to input image of size 360x640 or 720p?

Opening the link for the PE model leads to the google drive link e.pth.tar, wheres the link for the E model leads to the drive link for pe.pth.tar.

Thanks for your code!

I have a question about the KITTI raw data. Do I need to download all the raw data? I know that in the monocular depth estimation, generally only a part of it needs to be downloaded. Is there a download list that I can refer to? thanks!

Thank you very much for sharing this great work~

In the process of modifying your network, I found a small problem. I just increased the network parameters from 131762888 to 131763344. Why did the saved .Pth.tar file triple from about 500m to 1.5G

Hello,

Thank you very much for such an amazing work.

I have a question about input of ENet. As I understand from method __getitem__ of class KittiDepth, images represented by variable rgb do not get normalized to have pixel intensity in the range [0, 1.0], instead the intesity range is [0, 255]. Is it correct?

Looking forward to your answer.

The number of epochs to train the model (the three stages) is 100 ?

And how long to train the whole model ?

Thank you for your excellent work, network design gives me a lot of inspiration.

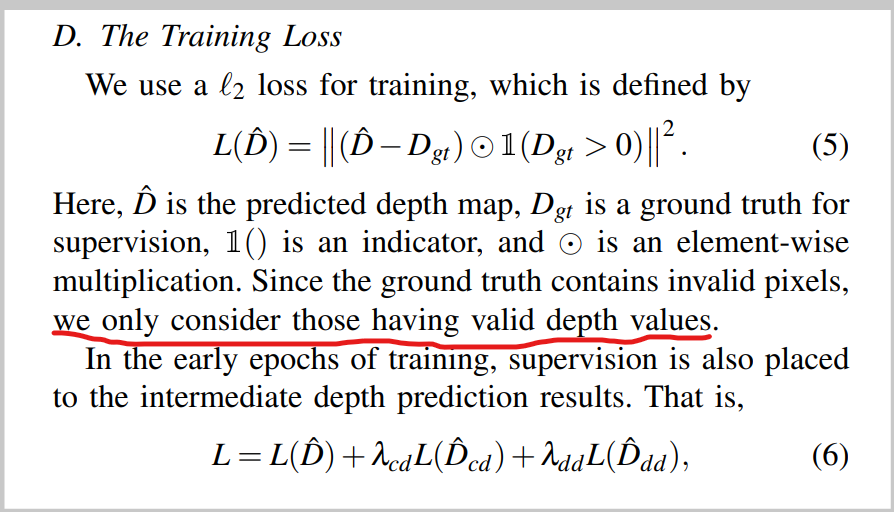

But there's one thing I never understood. I noticed that the training loss function in the paper only focuses on pixels with valid depth(sparse),so why can networks generate dense depth maps? How to ensure that pixels are accurate without supervision?

Hi,

When I read the code about the implementation of DE-CSPN++. I notice that during the iteration you continuously update the depth3, depth5 and depth7. However, the depth3, depth5, depth7 are stored in the list without cloning the underlying data. I am wondering if it is incorrect, since the assignment operation in pytorch is only a reference assignment, so the depth stored in the list would also change when the iteration continues.

Thanks for your reply.

Hi, recently I've been through your great work, I would like to train the network but with less Kitti_Raw data, is that possible? if so please guide me on how to reduce Kitt-raw data for training. Thanks in advance!

Display file corruption

Thank you for your outstanding contribution!

I want to know how the Color-dominant Branch is combined with the point cloud and sent to the network. Does the radar point cloud only take effective points, and does the color image also take the same effective points as the point cloud? How can we get a dense depth map in this way?

This question has been bothering me. I hope you can answer it for me. Thank you again!

Hello,

Thank you for the great work. I want to ask one small query regarding the iRMSE calculation. In your code iRMSE is calculated as follows

# convert from meters to km

inv_output_km = (1e-3 * output[valid_mask])**(-1)

inv_target_km = (1e-3 * target[valid_mask])**(-1)

abs_inv_diff = (inv_output_km - inv_target_km).abs()

irmse = math.sqrt((torch.pow(abs_inv_diff, 2)).mean())`

I want to ask two things

Thanks for helping out.

Thank you very much for a very interesting paper.

I have run the PENet pre-trained model you've provided in evaluation mode on the cropped image.

in the results the code provided (val.csv under results) I got RMSE=757.197 MAE=209.001, compared to RMSE=730.08 MAE=210.55 as it appears in the Kitti benchmark page.

IIs there a different PENet model that matches the submitted results? or there is something in the parameters that I put wrong (I kept the parameters as is in this repository).

Thanks a lot,

Mani

Hi, thanks for your good work!

I found that cspn++ only accelerate in inference, can it accelerate during training?

Thanks!

Hello!

I encountered some difficulties while training the mode. I run it on sigle NVIDIA GTX 2080 GPU, but an error occurred

RuntimeError: CUDA out of memory. Tried to allocate 38.00 MiB (GPU 0; 7.79 GiB total capacity; 5.85 GiB already allocated; 87.56 MiB free; 136.18 MiB cached)

I think memory should be sufficient, but it only run successfully when the batch size is reduced to 1, and the gradient jitter is very severe. Do you have any advise for this ? :(

Thank you for your good work!

I notice that in both CD-branch and DD-branch, confidence maps(concatenated with CD-depth and DD-depth respectively) are generated by the last convolutional layer, which are comprized of ordinary conv+bn+relu layer.

self.rgb_decoder_output = deconvbnrelu(in_channels=32, out_channels=2, kernel_size=3, stride=1, padding=1, output_padding=0)

rgb_output = self.rgb_decoder_output(rgb_feature0_plus)

rgb_depth = rgb_output[:, 0:1, :, :]

rgb_conf = rgb_output[:, 1:2, :, :]self.decoder_layer6 = convbnrelu(in_channels=32, out_channels=2, kernel_size=3, stride=1, padding=1)

depth_output = self.decoder_layer6(decoder_feature5)

d_depth, d_conf = torch.chunk(depth_output, 2, dim=1)

rgb_conf, d_conf = torch.chunk(self.softmax(torch.cat((rgb_conf, d_conf), dim=1)), 2, dim=1)I wonder how convbnrelu layer can output a neat confidence map and a depth map without confidence supervision. Would you please provide me with some relavant works or papers to see? Thanks.

In "kitti_loader.py" file, the function load_calib() changes the principal point of image by the code

"""

K[0, 2] = K[0, 2] - 13; # from width = 1242 to 1216, with a 13-pixel cut on both sides

K[1, 2] = K[1, 2] - 11.5; # from width = 375 to 352, with a 11.5-pixel cut on both sides

"""

but I find the data is croped from the bottom. In this case, I think the change of the principal point should be calculated as

"""

K[0, 2] = K[0, 2] - 13; # from width = 1242 to 1216, with a 13-pixel cut on both sides

K[1, 2] = K[1, 2] - 23; # from width = 375 to 352, with a 23-pixel cut from the bottom

"""

Hi, I would like to estimate the dense depth for the Kitti 3D Object detection dataset which also includes Images and its corresponding point cloud but it's in 360 views, not only front view. so how to convert Raw point cloud to the front view depth map and need to save in .png so that I can estimate dense depth map with that. If you provide code for this conversion, I would be very happy to use that code. Thanks in advance.

Hi,

Torch have officially removed version 1.3.1 from their website and I am unable to load the pretrained weights using torch versions 1.4 >. I get this error: ModuleNotFoundError: No module named 'metrics', which is then followed by AttributeError: Can't get attribute 'Result' on <module 'metrics' from '/home/lib/python3.8/site-packages/metrics/__init__.py'> after I pip install metrics.

All of these errors arise when I try torch.load('pe.pth.tar').

I searched for some issues and this maybe because PyTorch has changed the way they save and load the models. Would it be possible to save the model using this: _use_new_zipfile_serialization=True as a flag?

Or did you face this error aswell? The latest version of metrics is 0.3.3

Hi, I am just curious to know whether it's possible to access tensrboard logs during training like others. Because it helps to see how convergence our loss function is! Thanks in advance

Thank you for your outstanding contribution!

I want to know how the Color-dominant Branch is combined with the point cloud and sent to the network. Does the radar point cloud only take effective points, and does the color image also take the same effective points as the point cloud? How can we get a dense depth map in this way?

This question has been bothering me. I hope you can answer it for me. Thank you again!

Hi!

I have a question for you!

Recently I changed PENet's backbone network ENet to attention-unet, and I only used part of Kitti's dataset. I felt that the training was a little slow, and then I modified the learning rate when training the backbone network so that the network could learn faster.

It's ok when I train ENet. (Blue is the training set, yellow is the validation set)

But when I used the trained backbone network for the second stage of training, the network experienced severe overfitting. I wonder if this has something to do with the learning rate?

We can see that cspn++ is trained very poorly. The validation set error is large.

What is the reason for this?

Below is the learning rate when I train ENet.

Hi thanks for your impressive work! My future research interests is on the depth completion so I would like to start with your work!

Just one minor question on downloading the kitti raw data: should I download the rectified or uncertified data? And Are all the branches (road, campus, city, person) are used?

Thanks again for your great work and looking forward to your reply!

Thanks for your great work !

I have a question about data augmentation part. You applied random crop on images and I notice that you didn't apply same augmentation on camera intrinsic matrix. When you crop the images, then the parameters in camera intrinsic matrix like cx,cy will mismatch. Do you guys think fixing this will get some improvement or you leave this on purpose ?

Thanks again for your open-source !

PENet is efficient and the output depth image seems very nice, but the point cloud transformed from the depth inferred by PENet looks plausible and noisy. Is there something wrong with my matlab code used for transformation? Thank you.

depth2cloud.zip

Thanks for the nice work!

Is there any chance that the training loss log can be released? That can greatly help my debugging process.

Hi, thanks for your great work! I am wondering why during the training, the original CSPN is used. Because as you mentioned in the paper, the new implemented one is much faster.

Thank you for the great work.

I am currently running stage 3 training to further refine the depth maps. However, the RMSE is not so great (till 15 epochs), It's still lingering around 860-890. After how many epochs do you generally experience a drop of RMSE especially in the third stage? and the hyperparameters used in the code repository for stage three is the same as you used in the stage 3 experiment?

A declarative, efficient, and flexible JavaScript library for building user interfaces.

🖖 Vue.js is a progressive, incrementally-adoptable JavaScript framework for building UI on the web.

TypeScript is a superset of JavaScript that compiles to clean JavaScript output.

An Open Source Machine Learning Framework for Everyone

The Web framework for perfectionists with deadlines.

A PHP framework for web artisans

Bring data to life with SVG, Canvas and HTML. 📊📈🎉

JavaScript (JS) is a lightweight interpreted programming language with first-class functions.

Some thing interesting about web. New door for the world.

A server is a program made to process requests and deliver data to clients.

Machine learning is a way of modeling and interpreting data that allows a piece of software to respond intelligently.

Some thing interesting about visualization, use data art

Some thing interesting about game, make everyone happy.

We are working to build community through open source technology. NB: members must have two-factor auth.

Open source projects and samples from Microsoft.

Google ❤️ Open Source for everyone.

Alibaba Open Source for everyone

Data-Driven Documents codes.

China tencent open source team.