NiiVue is a web-based visualization tool for neuroimaging that runs on any operating system and any web device (phone, tablet, computer). This repository contains only the core NiiVue package, which can be embedded into other projects. We have additional repositories that wrap NiiVue for use in Jupyter notebooks, VS Code, and Electron applications.
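As a rough illustration of what embedding the core package might look like, the sketch below wraps a viewer behind a small interface. It assumes the viewer object exposes `attachTo` (bind to a canvas element by id) and `loadVolumes` (load a list of `{ url }` entries), mirroring methods on the `Niivue` class exported by the `@niivue/niivue` package. The `ViewerLike` interface, the `showVolume` helper, the canvas id `gl`, and the image path are hypothetical names chosen for this example.

```typescript
// Minimal shape of the viewer this sketch relies on (an assumption, not the full NiiVue API).
interface ViewerLike {
  attachTo(canvasId: string): Promise<unknown>;
  loadVolumes(volumes: { url: string }[]): Promise<unknown>;
}

// Hypothetical helper: bind the viewer to a canvas, then load a single voxel image.
async function showVolume(viewer: ViewerLike, canvasId: string, url: string): Promise<void> {
  await viewer.attachTo(canvasId);
  await viewer.loadVolumes([{ url }]);
}

// In a web page this might be called roughly as follows
// (assumes a bundler and a <canvas id="gl"> element; the path is a placeholder):
//   import { Niivue } from "@niivue/niivue";
//   showVolume(new Niivue(), "gl", "./images/example.nii.gz");
```

Keeping the helper dependent only on a narrow interface means it can be exercised with a stand-in object outside the browser, while the real viewer is supplied in the page.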

Click here to see NiiVue live demos

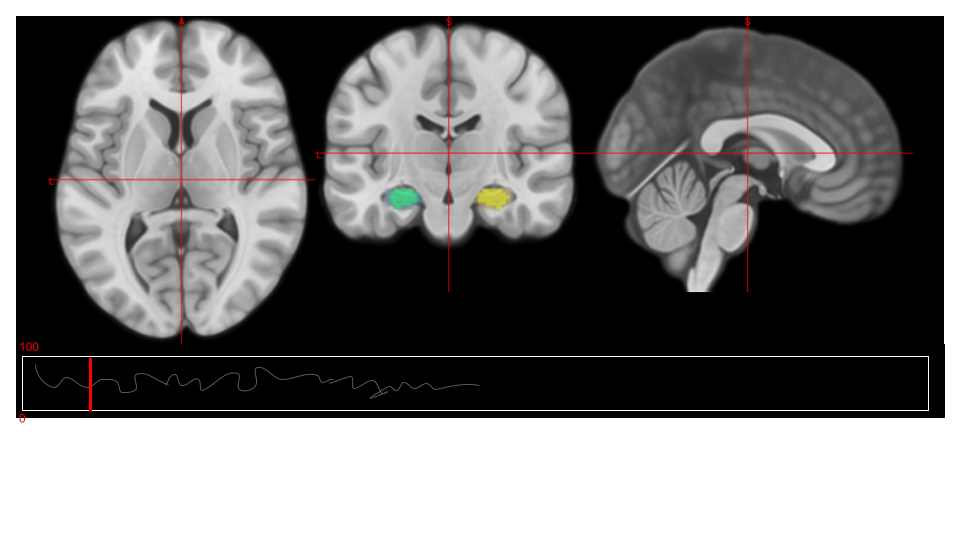

What makes NiiVue unique is its ability to simultaneously display all the data types popular in neuroimaging: voxels, meshes, tractography streamlines, statistical maps, and connectomes. Alternative voxel-based web tools include ami, BioImage Suite Web, BrainBrowser, nifti-drop, OHIF DICOM Viewer, Papaya, VTK.js, and slicedrop.

To run a hot-loading development server that updates whenever you save changes to any source file, run:

git clone git@github.com:niivue/niivue.git

cd niivue

npm install

npm run dev

The command npm run demo will minify the project and locally host all of the live demos. The DEVELOP.md file provides more details for developers.

Click here for the docs web page

Projects and groups that use or contribute to NiiVue:

- Analysis of Functional NeuroImages (AFNI) Scientific and Statistical Computing Core (National Institutes of Health)

- ChRIS Research Integration System (ChRIS) from Boston Children's Hospital uses NiiVue

- Center for the Study of Aphasia Recovery (University of South Carolina)

- FMRIB's Software Library (FSL) Wellcome Centre for Integrative Neuroimaging (University of Oxford)

- FreeSurfer Laboratories for Computational Neuroimaging (Massachusetts General Hospital) uses NiiVue for FreeBrowse

- OpenNeuro.org uses NiiVue to visualize datasets

- BrainLife.io integrates NiiVue into ezbids

- nilearn is extending ipyniivue

- neurodesk uses NiiVue for their QSMxT Quantitative Susceptibility Mapping toolbox

- niivue-vscode is a VSCode extension for displaying images

- Neuroinformatics Research and Development Group embeds NiiVue in tractoscope

- CACTAS from Daniel Haehn's team is extending NiiVue drawing and segmentation capabilities

- Digital Brain Bank for navigating MRI datasets

Funding:

- 2021-2022 P50 DC014664 NIH NIDCD NOT-OD-21-091

- 2023-2026 RF1 MH133701 NIH NIMH

NiiVue supports many popular brain imaging formats:

- Voxel-based formats: NIfTI, NRRD, MRtrix MIF, AFNI HEAD/BRIK, MGH/MGZ, ITK MHD, ECAT7

- Mesh-based formats: GIfTI, ASC, BYU/GEO/G, BrainSuite DFS, ICO/TRI, PLY, BrainNet NV, BrainVoyager SRF, FreeSurfer, MZ3, OFF, Wavefront OBJ, STL, Legacy VTK, X3D

- Mesh overlay formats: GIfTI, CIfTI-2, MZ3, SMP, STC, FreeSurfer (CURV/ANNOT)

- Tractography formats: TCK, TRK, TRX, TSF, TT, VTK, AFNI .niml.tract

- DICOM: DICOM and DICOM Manifests
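When building around NiiVue it can help to know which category a file falls into before deciding how to load it. The sketch below guesses the category from the file extension. It is not how NiiVue itself detects formats; `guessFormatKind` and `EXTENSION_KINDS` are hypothetical names, and the table covers only an illustrative subset of the formats above. Formats that appear in more than one category (for example GIfTI, MZ3, and VTK) are deliberately left out because the extension alone does not disambiguate them.

```typescript
type FormatKind = "voxel" | "mesh" | "tractography";

// Illustrative subset: extensions commonly associated with some of the listed formats.
// Longer extensions come first so ".nii.gz" is matched before ".nii".
const EXTENSION_KINDS: [string, FormatKind][] = [
  [".nii.gz", "voxel"],
  [".nii", "voxel"],
  [".nrrd", "voxel"],
  [".mif", "voxel"],
  [".mgh", "voxel"],
  [".mgz", "voxel"],
  [".mhd", "voxel"],
  [".ply", "mesh"],
  [".obj", "mesh"],
  [".stl", "mesh"],
  [".off", "mesh"],
  [".x3d", "mesh"],
  [".tck", "tractography"],
  [".trk", "tractography"],
  [".trx", "tractography"],
  [".tsf", "tractography"],
];

// Return the guessed category, or undefined when the extension is not in the table.
function guessFormatKind(filename: string): FormatKind | undefined {
  const lower = filename.toLowerCase();
  const hit = EXTENSION_KINDS.find(([ext]) => lower.endsWith(ext));
  return hit ? hit[1] : undefined;
}
```

For example, `guessFormatKind("tracts.trk")` yields `"tractography"`, while an unrecognized name such as `"notes.txt"` yields `undefined`, leaving the caller to fall back to another strategy.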