Homepage: https://cloudcompare.org

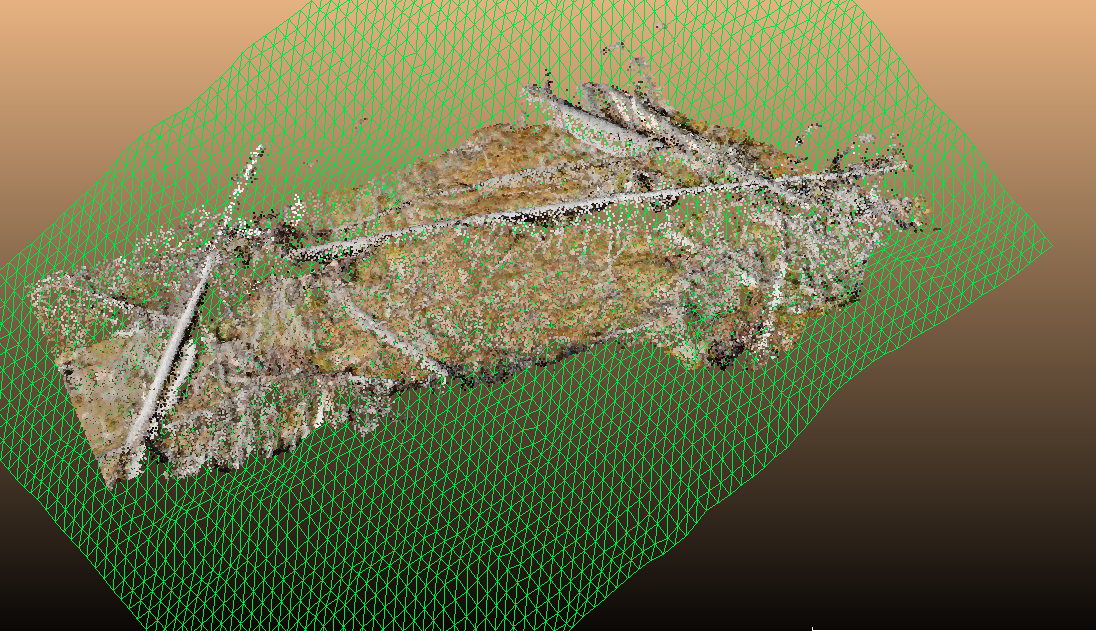

CloudCompare is 3D point cloud (and triangular mesh) processing software. It was originally designed to compare two 3D point clouds (such as those obtained with a laser scanner) or a point cloud and a triangular mesh. It relies on an octree structure that is highly optimized for this particular use case. It was also meant to handle huge point clouds (typically more than 10 million points, and up to 120 million with 2 GB of memory).

More on CloudCompare here

This project is under the GPL license: https://www.gnu.org/licenses/gpl-3.0.html

This means that you can use it as is for any purpose. However, if you want to distribute it, or reuse all or part of its code in a project you distribute, you must comply with the GPL license. In effect, all the code you mix or link with CloudCompare's code must be made public as well. This code cannot be used in closed-source software.

Linux:

- Flathub: https://flathub.org/apps/details/org.cloudcompare.CloudCompare

flatpak install flathub org.cloudcompare.CloudCompare

Supports: Windows, Linux, and macOS

Refer to the BUILD.md file for up-to-date information.

Basically, you have to:

- clone this repository

- install mandatory dependencies (OpenGL, etc.) and optional ones if you really need them (mainly to support particular file formats, or for some plugins)

- launch CMake (from the trunk root)

- enjoy!
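As a rough illustration of the steps above, here is a minimal shell sketch. It is not taken from BUILD.md, which remains the authoritative reference. The repository URL, the `build` directory name, and the `Release` build type are assumptions, and the `-S`/`-B` options assume a reasonably recent CMake (3.13 or later). The `run` helper is a hypothetical convenience: by default the script only prints the commands, and setting `DRY_RUN=0` executes them.

```shell
#!/bin/sh
# Sketch of a typical out-of-source CMake build; check BUILD.md before relying on it.
set -e

# Assumption: the upstream GitHub repository; replace with your fork if needed.
REPO_URL="https://github.com/CloudCompare/CloudCompare.git"
SRC_DIR="CloudCompare"
BUILD_DIR="$SRC_DIR/build"   # assumed build directory name

# Print commands by default; set DRY_RUN=0 to actually execute them.
DRY_RUN="${DRY_RUN:-1}"
run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# 1. Clone the repository together with its submodules.
run git clone --recursive "$REPO_URL" "$SRC_DIR"

# 2. Install the mandatory dependencies with your package manager (not shown here,
#    since package names differ between platforms).

# 3. Configure with CMake from the repository root into a separate build directory.
run cmake -S "$SRC_DIR" -B "$BUILD_DIR" -DCMAKE_BUILD_TYPE=Release

# 4. Compile.
run cmake --build "$BUILD_DIR"
```

Optional features and plugins are usually switched on with extra `-D` options at the configure step; their exact names are listed in BUILD.md and are deliberately not guessed here.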

If you want to help us improve CloudCompare or create a new plugin you can start by reading this guide

If you want to help us in another way, you can make donations via

Thanks!