Website: https://pushpin.org/

Forum: https://community.fastly.com/c/pushpin/12

Pushpin is a reverse proxy server written in Rust & C++ that makes it easy to implement WebSocket, HTTP streaming, and HTTP long-polling services. The project is unique among realtime push solutions in that it is designed to address the needs of API creators. Pushpin is transparent to clients and integrates easily into an API stack.

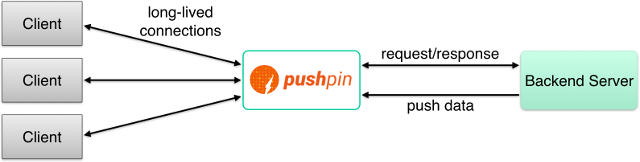

Pushpin is placed in the network path between the backend and any clients:

Pushpin communicates with backend web applications using regular, short-lived HTTP requests. This allows backend applications to be written in any language and use any webserver. There are two main integration points:

- The backend must handle proxied requests. For HTTP, each incoming request is proxied to the backend. For WebSockets, the activity of each connection is translated into a series of HTTP requests[1] sent to the backend. Pushpin's behavior is determined by how the backend responds to these requests.

- The backend must tell Pushpin to push data. Regardless of how clients are connected, data may be pushed to them by making an HTTP POST request to Pushpin's private control API (http://localhost:5561/publish/ by default). Pushpin will inject this data into any client connections as necessary.

To assist with integration, there are libraries for many backend languages and frameworks. Pushpin has no libraries on the client side because it is transparent to clients.

To create an HTTP streaming connection, respond to a proxied request with the special headers Grip-Hold and Grip-Channel[2]:

```http
HTTP/1.1 200 OK
Content-Type: text/plain
Content-Length: 22
Grip-Hold: stream
Grip-Channel: test

welcome to the stream
```

When Pushpin receives the above response from the backend, it will process it and send an initial response to the client that instead looks like this:

```http
HTTP/1.1 200 OK
Content-Type: text/plain
Transfer-Encoding: chunked
Connection: Transfer-Encoding

welcome to the stream
```

Pushpin eats the special headers and switches to chunked encoding (notice there's no Content-Length). The request between Pushpin and the backend is now complete, but the request between the client and Pushpin remains held open. The request is subscribed to a channel called test.
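The header rewrite described above can be modeled in a few lines of Python. This is only an illustrative sketch of the visible before/after behavior, not Pushpin's actual implementation; the function name `to_client_headers` is hypothetical.

```python
def to_client_headers(backend_headers):
    """Model the headers a client sees for a held stream response.

    Illustrative only: drops the Grip-* instructions and Content-Length,
    then adds the chunked-encoding headers shown in the example above.
    """
    client_headers = [
        (name, value)
        for name, value in backend_headers
        if not name.lower().startswith("grip-")
        and name.lower() != "content-length"
    ]
    client_headers.append(("Transfer-Encoding", "chunked"))
    client_headers.append(("Connection", "Transfer-Encoding"))
    return client_headers


# Headers from the backend response in the example above
backend_headers = [
    ("Content-Type", "text/plain"),
    ("Content-Length", "22"),
    ("Grip-Hold", "stream"),
    ("Grip-Channel", "test"),
]
client_headers = to_client_headers(backend_headers)
```

The client never sees the Grip-* headers, which is why no client-side library is needed.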

Data can then be pushed to the client by publishing data on the test channel:

```bash
curl -d '{ "items": [ { "channel": "test", "formats": { "http-stream":
    { "content": "hello there\n" } } } ] }' \
    http://localhost:5561/publish
```

The client would then see the line "hello there" appended to the response stream. Ta-da, transparent realtime push!
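The same publish call can be made from code without any Pushpin library. Below is a minimal sketch using only the Python standard library; the helper name `build_publish_request` is hypothetical, and sending a JSON Content-Type header is an assumption rather than a documented requirement.

```python
import json
import urllib.request


def build_publish_request(channel, content, control_uri="http://localhost:5561"):
    """Build (but do not send) an HTTP POST for Pushpin's publish endpoint."""
    payload = {
        "items": [
            {"channel": channel, "formats": {"http-stream": {"content": content}}}
        ]
    }
    return urllib.request.Request(
        control_uri.rstrip("/") + "/publish",
        data=json.dumps(payload).encode("utf-8"),
        # Assumption: a JSON content type is harmless; curl -d sends a form type
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_publish_request("test", "hello there\n")

# To actually send it (requires a running Pushpin instance):
# urllib.request.urlopen(request)
```

Separating request construction from sending keeps the payload easy to inspect and test without a running proxy.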

For more details, see the HTTP streaming section of the documentation. Pushpin also supports HTTP long-polling and WebSockets.

Using a library on the backend makes integration even easier. Here's another HTTP streaming example, similar to the one shown above, except using Pushpin's Django library. Please note that Pushpin is not Python/Django-specific and there are backend libraries for other languages/frameworks, too.

The Django library requires configuration in settings.py:

```python
MIDDLEWARE_CLASSES = (
    'django_grip.GripMiddleware',
    ...
)

GRIP_PROXIES = [{'control_uri': 'http://localhost:5561'}]
```

Here's a simple view:

```python
from django.http import HttpResponse
from django_grip import set_hold_stream

def myendpoint(request):
    if request.method == 'GET':
        # subscribe every incoming request to a channel in stream mode
        set_hold_stream(request, 'test')
        return HttpResponse('welcome to the stream\n', content_type='text/plain')
    ...
```

Here, the set_hold_stream() function flags the request as needing to turn into a stream, bound to channel test. The middleware will see this and add the necessary Grip-Hold and Grip-Channel headers to the response.

Publishing data is easy:

```python
from gripcontrol import HttpStreamFormat
from django_grip import publish

publish('test', HttpStreamFormat('hello there\n'))
```

Pushpin supports WebSockets by converting connection activity/messages into HTTP requests and sending them to the backend. For this example, we'll use Pushpin's Express library. As before, please note that Pushpin is not Node/Express-specific and there are backend libraries for other languages/frameworks, too.

The Express library requires configuration and setting up a middleware handler:

```javascript
const express = require('express');
const { ServeGrip } = require('@fanoutio/serve-grip');

var app = express();

// Instantiate the middleware and register it with Express
const serveGrip = new ServeGrip({
    grip: { 'control_uri': 'http://localhost:5561', 'key': 'changeme' }
});
app.use(serveGrip);

// Instantiate the publisher to use from your code to publish messages
const publisher = serveGrip.getPublisher();

app.get('/hello', (req, res) => {
    res.send('hello world\n');
});
```

With that structure in place, here's an example of a WebSocket endpoint:

```javascript
const { WebSocketMessageFormat } = require('@fanoutio/grip');

app.post('/websocket', async (req, res) => {
    const { wsContext } = req.grip;

    // If this is a new connection, accept it and subscribe it to a channel
    if (wsContext.isOpening()) {
        wsContext.accept();
        wsContext.subscribe('all');
    }

    while (wsContext.canRecv()) {
        var message = wsContext.recv();

        // If return value is null then connection is closed
        if (message == null) {
            wsContext.close();
            break;
        }

        // broadcast the message to everyone connected
        await publisher.publishFormats('all', new WebSocketMessageFormat(message));
    }

    res.end();
});
```

The above code binds all incoming connections to a channel called all. Any received messages are published out to all connected clients.

What's particularly noteworthy is that the above endpoint is stateless. The app doesn't keep track of connections, and the handler code only runs whenever messages arrive. Restarting the app won't disconnect clients.

The while loop is deceptive. It looks like it's looping for the lifetime of the WebSocket connection, but what it's really doing is looping through a batch of WebSocket messages that was just received via HTTP. Often this will be one message, and so the loop performs one iteration and then exits. Similarly, the wsContext object only exists for the duration of the handler invocation, rather than for the lifetime of the connection as you might expect. It may look like socket code, but it's all an illusion. 🎩
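To make the "batch of messages" idea concrete, here is a rough Python sketch of decoding one such HTTP request body. It assumes the event framing used by the WebSocket-Over-HTTP protocol as we understand it (an event type, optionally followed by a hexadecimal content size, then CRLF-delimited content); check the spec before relying on it. The function name `parse_events` is hypothetical, and real backend libraries do this for you.

```python
def parse_events(body: bytes):
    """Decode a batch of WebSocket-over-HTTP events into (type, content) pairs.

    Assumed framing: "TYPE\r\n" for events without content, or
    "TYPE <hex size>\r\n<content>\r\n" for events with content.
    """
    events = []
    pos = 0
    while pos < len(body):
        line_end = body.index(b"\r\n", pos)
        header = body[pos:line_end].decode("ascii")
        pos = line_end + 2
        if " " in header:
            event_type, size_hex = header.split(" ", 1)
            size = int(size_hex, 16)
            content = body[pos:pos + size]
            pos += size + 2  # skip the content and its trailing CRLF
        else:
            event_type, content = header, None
        events.append((event_type, content))
    return events


# One HTTP request body carrying two text messages from the same connection
batch = b"TEXT 5\r\nhello\r\nTEXT B\r\nhello there\r\n"
events = parse_events(batch)
```

Each handler invocation sees only the events in that one request body, which is why the loop in the endpoint above ends as soon as the batch is drained.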

For details on the underlying protocol conversion, see the WebSocket-Over-HTTP Protocol spec.

Pushpin can also connect to backend servers via ZeroMQ instead of HTTP. This may be preferred for writing lower-level services where a real webserver isn't needed. The messages exchanged over the ZeroMQ connection contain the same information as HTTP, encoded as TNetStrings.
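For readers unfamiliar with TNetStrings, the following Python sketch shows the general shape of the encoding: a decimal length, a colon, the payload, and a one-character type tag. It is a simplified illustration covering only a few types, not the `tnetstring` package, and the function name `tnet_dumps` is hypothetical.

```python
def tnet_dumps(value) -> bytes:
    """Encode a value as a TNetString: <length>:<payload><type tag>."""
    if value is None:
        payload, tag = b"", b"~"
    elif isinstance(value, bool):  # check bool first: bool is a subclass of int
        payload, tag = (b"true" if value else b"false"), b"!"
    elif isinstance(value, int):
        payload, tag = str(value).encode("ascii"), b"#"
    elif isinstance(value, bytes):
        payload, tag = value, b","
    elif isinstance(value, str):
        payload, tag = value.encode("utf-8"), b","
    elif isinstance(value, (list, tuple)):
        payload, tag = b"".join(tnet_dumps(v) for v in value), b"]"
    elif isinstance(value, dict):
        # A dict payload is the concatenation of encoded key/value pairs
        payload = b"".join(tnet_dumps(k) + tnet_dumps(v) for k, v in value.items())
        tag = b"}"
    else:
        raise TypeError(f"unsupported type: {type(value).__name__}")
    return str(len(payload)).encode("ascii") + b":" + payload + tag


encoded = tnet_dumps({"code": 200, "reason": "OK"})
```

Because every element carries its own length prefix, a decoder can read nested structures without scanning for delimiters.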

To use a ZeroMQ backend, first make sure there's an appropriate route in Pushpin's routes file:

```
* zhttpreq/tcp://127.0.0.1:10000
```

The above line tells Pushpin to bind a REQ-compatible socket on port 10000 that handlers can connect to.

Activating an HTTP stream is as easy as responding on a REP socket:

```python
import zmq
import tnetstring

zmq_context = zmq.Context()
sock = zmq_context.socket(zmq.REP)
sock.connect('tcp://127.0.0.1:10000')

while True:
    # messages are prefixed with 'T' to indicate TNetString encoding
    req = tnetstring.loads(sock.recv()[1:])

    resp = {
        'id': req['id'],
        'code': 200,
        'reason': 'OK',
        'headers': [
            ['Grip-Hold', 'stream'],
            ['Grip-Channel', 'test'],
            ['Content-Type', 'text/plain']
        ],
        'body': 'welcome to the stream\n'
    }

    sock.send('T' + tnetstring.dumps(resp))
```

Pushpin is an ambitious project with two primary goals:

- Make realtime API development easier. There are many other solutions out there that are excellent for building realtime apps, but few are useful within the context of APIs. For example, you can't use Socket.io to build Twitter's streaming API. A new kind of project is needed in this case.

- Make realtime push behavior delegable. The reason there isn't a realtime push CDN yet is because the standards and practices necessary for delegating to a third party in a transparent way are not yet established. Pushpin is more than just another realtime push solution; it represents the next logical step in the evolution of realtime web architectures.

To really understand Pushpin, you need to think of it as more like a gateway than a message queue. Pushpin does not persist data and it is agnostic to your application's data model. Your backend provides the mapping to whatever that data model is. Tools like Kafka and RabbitMQ are complementary. Pushpin is also agnostic to your API definition. Clients don't necessarily subscribe to "channels" or receive "messages". Clients make HTTP requests or send WebSocket frames, and your backend decides the meaning of those inputs. Pushpin could perhaps be awkwardly described as "a proxy server that enables web services to delegate the handling of realtime push primitives".

On a practical level, there are many benefits to Pushpin that you don't see anywhere else:

- The proxy design allows Pushpin to fit nicely within an API stack. This means it can inherit other facilities from your REST API, such as authentication, logging, throttling, etc. It can be combined with an API management system.

- As your API scales, a multi-tiered architecture will become inevitable. With Pushpin you can easily do this from the start.

- It works well with microservices. Each microservice can have its own Pushpin instance. No central bus needed.

- Hot reload. Restarting the backend doesn't disconnect clients.

- In the case of WebSocket messages being proxied out as HTTP requests, the messages may be handled statelessly by the backend. Messages from a single connection can even be load balanced across a set of backend instances.

Check out the Install guide, which covers how to install and run Pushpin. There are packages available for Linux (Debian, Ubuntu, CentOS, Red Hat) and Mac (Homebrew), or you can build from source.

By default, Pushpin listens on port 7999 and requests are handled by its internal test handler. You can confirm the server is working by browsing to http://localhost:7999/. Next, you should modify the routes config file to route requests to your backend webserver. See Configuration.

Pushpin is horizontally scalable. Instances don’t talk to each other, and sticky routing is not needed. Backends must publish data to all instances to ensure clients connected to any instance will receive the data. Most of the backend libraries support configuring more than one Pushpin instance, so that a single publish call will send data to multiple instances at once.
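If you are not using a backend library, publishing to every instance amounts to repeating the same POST against each control URI. The sketch below shows one way to do that in Python; the function names and the injectable `send` callable are hypothetical, and the error handling policy (continue on failure) is an assumption you may want to change.

```python
import json
import urllib.request


def http_send(url, body):
    """Send one publish request over HTTP (needs a reachable Pushpin instance)."""
    request = urllib.request.Request(url, data=body, method="POST")
    with urllib.request.urlopen(request, timeout=5) as response:
        response.read()


def publish_to_all(control_uris, channel, content, send=http_send):
    """Publish the same item to every Pushpin instance.

    Returns a dict mapping each control URI to None on success or to the
    exception raised, so one unreachable instance does not block the rest.
    """
    body = json.dumps({
        "items": [
            {"channel": channel, "formats": {"http-stream": {"content": content}}}
        ]
    }).encode("utf-8")
    results = {}
    for uri in control_uris:
        try:
            send(uri.rstrip("/") + "/publish", body)
            results[uri] = None
        except OSError as exc:  # urllib's URLError is a subclass of OSError
            results[uri] = exc
    return results
```

Passing a different `send` callable makes the fan-out logic testable without a network, and the returned mapping lets the caller decide whether to retry failed instances.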

Optionally, ZeroMQ PUB/SUB can be used to send data to Pushpin instead of using HTTP POST. When this method is used, subscription information is forwarded to each publisher, such that data will only be published to instances that have listeners.

As for vertical scalability, Pushpin has been tested with up to 1 million concurrent connections running on a single DigitalOcean droplet with 8 CPU cores. In practice, you may want to plan for fewer connections per instance, depending on your throughput. The new connection accept rate is about 800/sec (though this also depends on the speed of your backend), and the message throughput is about 8,000/sec. The important thing is that Pushpin is horizontally scalable, so total capacity can keep growing by adding instances.
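These figures can be turned into rough planning numbers. The Python sketch below is back-of-the-envelope arithmetic only; the helper names and the per-instance target in the usage note are hypothetical, and real capacity depends on your workload and backend.

```python
import math

ACCEPT_RATE_PER_SEC = 800            # approximate accept rate quoted above
TESTED_MAX_CONNECTIONS = 1_000_000   # tested ceiling for a single instance


def seconds_to_accept(connections, accept_rate=ACCEPT_RATE_PER_SEC):
    """Time for one instance to accept the given number of new connections."""
    return connections / accept_rate


def instances_needed(total_connections, per_instance_target):
    """Instances required if each one is planned for per_instance_target."""
    return math.ceil(total_connections / per_instance_target)


# Filling one instance to the tested ceiling at the quoted accept rate
fill_seconds = seconds_to_accept(TESTED_MAX_CONNECTIONS)  # 1250.0 seconds
```

For example, `instances_needed(2_500_000, 250_000)` gives 10 instances. The fill time of roughly 21 minutes is one reason to plan for fewer connections per instance, since it bounds how quickly clients can reconnect after an instance restarts.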

The name Pushpin means to "pin" connections open for "pushing".

Pushpin is offered under the Apache License, Version 2.0. See the LICENSE file.

1: Pushpin can communicate WebSocket activity to the backend using either HTTP or WebSockets. Conversion to HTTP is generally recommended as it makes the backend easier to reason about.

2: GRIP (Generic Realtime Intermediary Protocol) is the name of Pushpin's backend protocol. More about that here.