❌ I have stopped maintaining this repo. For fine-tuning ResNet, I suggest using the Torch version from the Facebook repo.

This repository is a Matconvnet re-implementation of "Deep Residual Learning for Image Recognition", Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun. You can train a Deep Residual Network on ImageNet from scratch or fine-tune a pre-trained model on your own dataset. This repo was created by Hang Zhang.

The code relies on vlfeat and matconvnet, which should be downloaded and built before running the experiments. You can use the following command to download them:

`git clone -b v1.0 --recurse-submodules https://github.com/zhanghang1989/ResNet-Matconvnet.git`

If you have problems with compiling, please refer to the link.


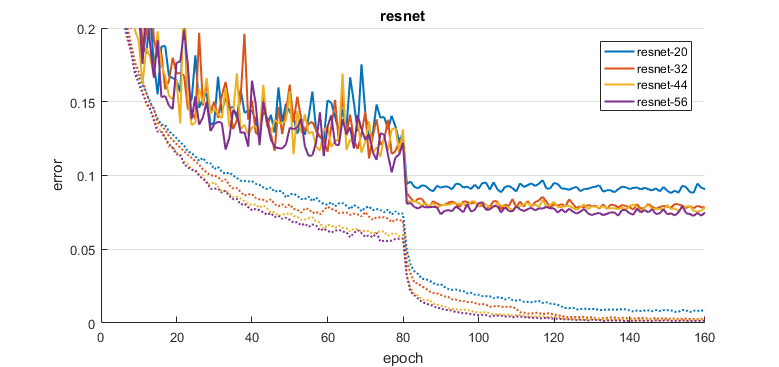

Cifar. Reproducing Figure 6 from the original paper.

`run_cifar_experiments([20 32 44 56 110], 'plain', 'gpus', [1]);`

`run_cifar_experiments([20 32 44 56 110], 'resnet', 'gpus', [1]);`
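The depths passed to `run_cifar_experiments` follow the CIFAR-10 design of the paper: a network has 6n + 2 weighted layers, made of a first 3x3 conv, three stages of 2n 3x3 conv layers (feature maps 32, 16, 8 with 16, 32, 64 filters), and a final fully connected layer. As a quick check, the hypothetical Python helper below (not part of this repo) recovers n and the per-stage layout from a depth.

```python
def cifar_resnet_layout(depth):
    """Return n and (feature_map_size, filters, conv_layers) per stage for a
    CIFAR ResNet with depth = 6n + 2 (hypothetical helper, not from the repo)."""
    if depth < 8 or (depth - 2) % 6 != 0:
        raise ValueError(f"depth {depth} is not of the form 6n + 2")
    n = (depth - 2) // 6
    # Three stages, each with n residual units of two 3x3 conv layers (2n layers).
    stages = [(32, 16, 2 * n), (16, 32, 2 * n), (8, 64, 2 * n)]
    return n, stages


for d in [20, 32, 44, 56, 110]:
    n, stages = cifar_resnet_layout(d)
    print(d, n, stages)
```

For the five depths above this gives n = 3, 5, 7, 9 and 18; the same layouts are used for both the `'plain'` and `'resnet'` runs, which differ only in the shortcut connections.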

Cifar Experiments

Reproducing the experiments in the Facebook blog: after removing the ReLU layer at the end of each residual unit, we observe a small but significant improvement in test performance, and convergence becomes smoother.

`res_cifar(20, 'modelType', 'resnet', 'reLUafterSum', false, 'expDir', 'data/exp/cifar-resNOrelu-20', 'gpus', [2])`

`plot_results_mix('data/exp','cifar',[],[],'plots',{'resnet','resNOrelu'})`
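The `'reLUafterSum', false` option changes where the activation sits in each unit: the original unit computes y = ReLU(x + F(x)), while the variant computes y = x + F(x). A minimal Python sketch, assuming a toy elementwise `toy_residual` in place of the real conv-BN-ReLU-conv-BN branch (`residual_unit`, `toy_residual` and `relu_after_sum` are hypothetical names, not this repo's code):

```python
def relu(v):
    """Elementwise ReLU on a list of floats."""
    return [max(0.0, a) for a in v]


def residual_unit(x, residual_fn, relu_after_sum=True):
    """Original unit: y = ReLU(x + F(x)).
    With relu_after_sum=False (the Facebook-blog variant): y = x + F(x)."""
    s = [a + b for a, b in zip(x, residual_fn(x))]
    return relu(s) if relu_after_sum else s


def toy_residual(v):
    # Hypothetical stand-in for the conv-BN-ReLU-conv-BN branch F(x).
    return [0.5 * a - 1.0 for a in v]


x = [2.0, -1.0, 0.5]
print(residual_unit(x, toy_residual, relu_after_sum=True))   # [2.0, 0.0, 0.0]
print(residual_unit(x, toy_residual, relu_after_sum=False))  # [2.0, -2.5, -0.25]
```

Without the final ReLU, negative values are no longer clipped at the end of the unit, so the shortcut path carries the signal to the next unit unchanged.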


Imagenet2012. Download the dataset to `data/ILSVRC2012` and follow the instructions in `setup_imdb_imagenet.m`.

`run_experiments([50 101 152], 'gpus', [1 2 3 4 5 6 7 8]);`
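The ImageNet depths 50, 101 and 152 correspond to the bottleneck architectures in Table 1 of the paper: each unit stacks 1x1 (reduce), 3x3 and 1x1 (expand) convs, so depth = 3 x (total units) + 2. The Python below is a hypothetical sanity check of those numbers, not code from this repo.

```python
# Bottleneck units per stage (conv2_x, conv3_x, conv4_x, conv5_x), per Table 1 of He et al.
BOTTLENECK_STAGES = {
    50: [3, 4, 6, 3],
    101: [3, 4, 23, 3],
    152: [3, 8, 36, 3],
}

# (bottleneck width, output channels) of each stage: 1x1 reduce -> 3x3 -> 1x1 expand.
STAGE_WIDTHS = [(64, 256), (128, 512), (256, 1024), (512, 2048)]


def bottleneck_depth(units_per_stage):
    """Weighted layers: 3 convs per bottleneck unit, plus the 7x7 stem conv and the final fc."""
    return 3 * sum(units_per_stage) + 2


for depth, units in BOTTLENECK_STAGES.items():
    layout = [(n, mid, out) for n, (mid, out) in zip(units, STAGE_WIDTHS)]
    print(depth, bottleneck_depth(units), layout)
```

The three models differ only in how many units sit in conv3_x and conv4_x; all end in a 2048-channel feature map.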


Your own dataset.

`run_experiments([18 34], 'datasetName', 'minc', 'datafn', @setup_imdb_minc, 'nClasses', 23, 'gpus', [1 2]);`
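ResNet-18 and ResNet-34 use basic units of two 3x3 convs (Table 1 of the paper), so depth = 2 x (total units) + 2, and both end in a 512-dimensional pooled feature. With `'nClasses', 23`, the final fully connected layer would then presumably map 512 features to 23 classes. The hypothetical Python below only checks this arithmetic; it does not call any Matconvnet code.

```python
# Basic (two 3x3 conv) units per stage for the shallower ImageNet models, per Table 1 of He et al.
BASIC_STAGES = {18: [2, 2, 2, 2], 34: [3, 4, 6, 3]}
FEATURE_DIM = 512  # channels of conv5_x, i.e. the pooled feature fed to the classifier


def basic_depth(units_per_stage):
    """Weighted layers: 2 convs per basic unit, plus the 7x7 stem conv and the final fc."""
    return 2 * sum(units_per_stage) + 2


def classifier_params(feature_dim, n_classes):
    """Weights plus biases of a fully connected layer feature_dim -> n_classes."""
    return feature_dim * n_classes + n_classes


for depth, units in BASIC_STAGES.items():
    print(depth, basic_depth(units), classifier_params(FEATURE_DIM, 23))
```

For minc this is 512 x 23 + 23 = 11799 classifier parameters, a small fraction of the network.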


Download

- the models to `data/models`: imagenet-resnet-50-dag, imagenet-resnet-101-dag, imagenet-resnet-152-dag
- the datasets to `data/`: Material in Context Database (minc)

Fine-tuning

`res_finetune('datasetName', 'minc', 'datafn', @setup_imdb_minc, 'gpus', [1 2]);`
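Fine-tuning usually keeps the pre-trained layers and replaces the 1000-way ImageNet classifier with one sized for the new dataset (23 classes for minc). This README does not say whether `res_finetune` does exactly this internally. The Python below is a hypothetical sketch on a plain dict of parameters, with made-up keys `fc_w`/`fc_b`, an assumed (classes x features) weight layout, and toy sizes.

```python
import random


def replace_classifier(params, n_classes, seed=0):
    """Return a copy of `params` whose classifier ('fc_w', 'fc_b') is re-initialised
    for `n_classes` outputs; every other (pre-trained) entry is reused unchanged."""
    feat_dim = len(params["fc_w"][0])  # input width of the old classifier
    rng = random.Random(seed)
    new_params = dict(params)  # shallow copy: backbone weights are shared, not duplicated
    new_params["fc_w"] = [
        [rng.gauss(0.0, 0.01) for _ in range(feat_dim)] for _ in range(n_classes)
    ]
    new_params["fc_b"] = [0.0] * n_classes
    return new_params


# Toy "pre-trained" model: one backbone tensor and a 5-way classifier on 8-dim features.
pretrained = {
    "conv1_w": [[0.1, 0.2], [0.3, 0.4]],
    "fc_w": [[0.0] * 8 for _ in range(5)],
    "fc_b": [0.0] * 5,
}
finetune_init = replace_classifier(pretrained, n_classes=23)
print(len(finetune_init["fc_w"]), len(finetune_init["fc_w"][0]))
```

Only the new head starts from random values; the backbone starts from the pre-trained weights, which is why fine-tuning needs far fewer iterations than training from scratch.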


06/21/2016:

- Supported the pre-activation model described in "Identity Mappings in Deep Residual Networks", Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun.
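In the pre-activation unit from "Identity Mappings in Deep Residual Networks", BN and ReLU move in front of each conv (BN-ReLU-conv-BN-ReLU-conv), and nothing follows the addition, so the shortcut is a pure identity. The Python below contrasts the two orderings on a 1-D "batch" of scalars. It is a hypothetical sketch: `conv` is a stand-in (a single-channel 1x1 conv reduces to a scalar multiply) and `bn` uses batch statistics with gamma = 1, beta = 0.

```python
import math


def bn(v, eps=1e-5):
    """Stand-in batch norm over a 1-D batch of scalars (gamma=1, beta=0)."""
    mean = sum(v) / len(v)
    var = sum((a - mean) ** 2 for a in v) / len(v)
    return [(a - mean) / math.sqrt(var + eps) for a in v]


def relu(v):
    return [max(0.0, a) for a in v]


def conv(w):
    """Stand-in conv: a single-channel 1x1 convolution is a scalar multiply."""
    return lambda v: [w * a for a in v]


def run(branch, x):
    for op in branch:
        x = op(x)
    return x


def original_unit(x, w1, w2):
    # He et al. (2015): conv-BN-ReLU-conv-BN on the branch, add identity, ReLU after the sum.
    f = run([conv(w1), bn, relu, conv(w2), bn], x)
    return relu([a + b for a, b in zip(x, f)])


def preact_unit(x, w1, w2):
    # Pre-activation (He et al., 2016): BN-ReLU-conv-BN-ReLU-conv, then a plain addition.
    f = run([bn, relu, conv(w1), bn, relu, conv(w2)], x)
    return [a + b for a, b in zip(x, f)]


x = [-1.0, 0.0, 1.0, 2.0]
print(original_unit(x, 1.0, 0.5))
print(preact_unit(x, 1.0, 0.5))
```

The original unit's output is always non-negative, whereas the pre-activation unit can pass negative inputs straight through the shortcut, which is the "identity mapping" the paper argues eases optimization of very deep networks.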


05/17/2016:

- Reproduced the experiments in the Facebook blog, removing the ReLU layer at the end of each residual unit.


05/02/2016:

- Supported the official Matconvnet version.

- Added Cifar experiments and plots.


04/27/2016: Re-implementation of Residual Network:

- The code benefits from Hang Su's implementation.

- The generated models are compatible with the converted models.