sergio0694 / neuralnetwork.net

A TensorFlow-inspired neural network library built from scratch in C# 7.3 for .NET Standard 2.0, with GPU support through cuDNN

License: GNU General Public License v3.0

Hey Sergio0694,

Really nice library. I love it.

Now for my question:

Am I able to train my network with CUDA, save it, and then load it on the CPU only, just to call the "Forward" function?

Thanks in advance.

Hi, I am Faruk. I found your repo via someone who was discussing a topic with me on GitHub. Special thanks to GitHub for this.

I have also been developing a deep learning library in .NET 5 for 9 months, and the project is now far enough along to be considered mature. I see you built NeuralNetwork.NET on your own. That's a huge effort, really. Thank you for that as well.

Your library seems to be similar to TensorFlow. Do you use a static or a dynamic computation graph in the background?

My network type is a dynamic graph, as in PyTorch. My aim is to give scientists the best experience and the most flexible library. To do so, I created multi-dimensional layers (unless you specify otherwise), multiple inputs for an RNN layer, and so on. Because RNN layers are processed dynamically, there are many ways to control the flow of the network while it goes forward. For example, the flow may depend on the output of the current layer, or on the index (the current time step value, which is also multi-dimensional).

The layers that are ready to be used:

I separated the project into two subprojects named DeepLearningFramework and PerformanceWork.

Tensors, memory management and kernel implementations are in PerformanceWork. Other things such as layers, terms and training procedures are in DeepLearningFramework.

The layers have terms inside them. Terms are the basic units of a graph and can be thought of as the individual boxes in the usual RNN diagram. So, for each time step in a layer, there is a term that corresponds to that time step. The time step is not just a number but an n-dimensional tuple, because the network is multi-dimensional.

Example code for XOR model creation:

Example code for MNIST model creation:

The training procedure and data loading are not ready for users yet, so I currently do both semi-manually.

There are many things I am considering for future development, such as Encoder-Decoder models and Multi-Task Learning. The library should be flexible enough to let users develop those models, and perhaps models that nobody has implemented yet.

Serialization is another topic I have been thinking about. There is a trade-off between how flexible the library is and how easy it is to reload a saved model.

To sum up, my aim is to create the most flexible library. Optimization is important, of course, but it is not the main target here. There are also distributed learning approaches for multi-GPU instances that I forgot to mention. Thinking about all of these together gets messy: serialization, multi-GPU, encoder-decoder, multi-task learning... They all have to be considered together while designing the library.

However, I don't have the strength to support my project alone. I believe I have gained a lot of experience while developing the library. I also want to finish it and present it to scientists, and maybe to my university. Do you have any idea how I should keep developing it, or whether I should? I'm trying to collaborate with people, but no one is coming. Every time, I write a long message like this to explain the library. How did you manage to develop your library on your own? I mean it, it's really a huge amount of work. Congrats! Also, if you have enough time, you can check out my project. If you'd like to help me, I will gladly accept 😄

While installing the NuGet package, I get a package reference error.

I suspect the package isn't online anymore.

The package source is nuget.org.

I'm finding the library very useful; however, I've noticed that TryLoad fails when loading a saved model and always returns null.

I'm training and saving the model with the code below:

    var result = NetworkManager.TrainNetwork(
        network,
        trainingDataSet,
        TrainingAlgorithms.AdaDelta(),
        _epochsToRun,
        _dropoutProb,
        null,
        (prog) =>
        {
            _currentTrainAccuracy = prog.Result.Accuracy;
            _currentTrainCost = prog.Result.Cost;
        },
        null,
        testingDataSet,
        cancellationToken.ShutdownToken);

    var fileInfo = new FileInfo(_settings.NeuralNetworkFilePath);
    network.Save(fileInfo);

And then loading with the following:

    var fileInfo = new FileInfo(_settings.NeuralNetworkFilePath);
    var model = NetworkLoader.TryLoad(fileInfo, ExecutionModePreference.Cpu);

However, the model is always null, regardless of the file I try to load.

I've taken a look at the serialization and deserialization and noticed the issue occurs in the FullyConnectedLayer's Deserialize function.

    public static INetworkLayer Deserialize([NotNull] Stream stream)
    {
        if (!stream.TryRead(out TensorInfo input)) return null;
        if (!stream.TryRead(out TensorInfo output)) return null;
        if (!stream.TryRead(out ActivationType activation)) return null;
        if (!stream.TryRead(out int wLength)) return null;
        float[] weights = stream.ReadUnshuffled(wLength);
        if (!stream.TryRead(out int bLength)) return null;
        float[] biases = stream.ReadUnshuffled(bLength);
        return new FullyConnectedLayer(input, output.Size, weights, biases, activation);
    }

I monitored the bytes written during serialization for the lengths of the weight and bias arrays. The wLength value is read back correctly from the stream, but the bytes read for bLength are entirely different from those that were written. An exception is then thrown because bLength becomes an absurdly large or small number, and an array of that size can't be created.

My assumption is that some issue with reading the weights ends up moving the stream position to the wrong place, but streams aren't something I'm very used to working with!

I'm aware this project is no longer maintained, but if anyone could suggest a workaround or fix, it would be helpful and very much appreciated!

I was able to quickly reproduce the problem by creating a new CLI project with the following Program.cs:

    using NeuralNetworkNET.APIs;
    using NeuralNetworkNET.APIs.Structs;

    var testNet = NetworkManager.NewSequential(TensorInfo.Linear(505),
        NetworkLayers.FullyConnected(14, NeuralNetworkNET.APIs.Enums.ActivationType.ReLU),
        NetworkLayers.Softmax(2));

    testNet.Save(new FileInfo("D:\\TestNet.nnet"));

    var file = "D:\\TestNet.nnet";
    var fInfo = new FileInfo(file);
    var model = NetworkLoader.TryLoad(fInfo, NeuralNetworkNET.APIs.Enums.ExecutionModePreference.Cpu);
    var res = model != null;
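One possible explanation for the misplaced stream position described above, offered as a guess and not a confirmed diagnosis: `Stream.Read` is allowed to return fewer bytes than requested, and compression streams such as `GZipStream` do this more often since .NET 6. If the weights are read with a single `Read` call that assumes the buffer gets filled, the next value would be read from the wrong offset. A minimal sketch of a read loop that avoids this follows. `StreamReadHelpers`, `TryReadExactly` and `ReadFloats` are hypothetical names, not part of NeuralNetwork.NET, and the sketch does not reproduce whatever unshuffling `ReadUnshuffled` performs.

```csharp
using System;
using System.IO;

public static class StreamReadHelpers
{
    // Hypothetical helper: keeps calling Stream.Read until `count` bytes
    // have arrived, or returns false if the stream ends first.
    public static bool TryReadExactly(Stream stream, byte[] buffer, int count)
    {
        int total = 0;
        while (total < count)
        {
            // Read may legally return fewer bytes than requested.
            int read = stream.Read(buffer, total, count - total);
            if (read == 0) return false; // end of stream reached too early
            total += read;
        }
        return true;
    }

    // Hypothetical helper: reads `length` floats stored as raw bytes.
    public static float[] ReadFloats(Stream stream, int length)
    {
        byte[] bytes = new byte[length * sizeof(float)];
        if (!TryReadExactly(stream, bytes, bytes.Length))
            throw new EndOfStreamException("Stream ended before all floats were read.");

        float[] values = new float[length];
        Buffer.BlockCopy(bytes, 0, values, 0, bytes.Length);
        return values;
    }
}
```

If this is the cause, the fix would have to go into the library's own stream extension methods, so it needs a local build of the source, not the NuGet package.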

Hi!

Sorry, but I still don't understand how to run the neural network on new inputs once training is over.

Should I use:

network.ExtractDeepFeatures(input); ?

I cannot run the DigitsCudaTest project. The error thrown is 'Couldn't open CUDA library 'cudnn64_5''.

Should I use cuDNN 5.1 or cuDNN 5.0 with CUDA 8.0?

Thanks for the library, Sergio. I have a question similar to @quequiere's: I am trying to create a simple example (the XOR problem) to help me understand your library, but I am struggling to get it to produce the correct results. Can you see what I'm doing incorrectly?

    var network = NetworkManager.NewSequential(TensorInfo.Linear(2),
        NetworkLayers.FullyConnected(2, ActivationType.Sigmoid),
        NetworkLayers.Softmax(1));

    IEnumerable<(float[] x, float[] u)> data = new List<(float[] x, float[] u)>
    {
        (new[] {0f, 0f}, new[] {0f}),
        (new[] {0f, 1f}, new[] {1f}),
        (new[] {1f, 0f}, new[] {1f}),
        (new[] {1f, 1f}, new[] {0f})
    };

    var trainingData = DatasetLoader.Training(data, 10);

    var result = NetworkManager.TrainNetwork(
        network,
        trainingData,
        TrainingAlgorithms.StochasticGradientDescent(),
        100,
        0.5f,
        p => { Console.WriteLine($"Progress: {p.Percentage}%, Processed {p.ProcessedItems}"); },
        t => { Console.WriteLine($"Training: Iteration {t.Iteration}, Accuracy {t.Result.Accuracy}, Cost {t.Result.Cost}"); });

    Console.WriteLine(network.Forward(new float[] { 0, 0 })[0]);
    Console.WriteLine(network.Forward(new float[] { 0, 1 })[0]);
    Console.WriteLine(network.Forward(new float[] { 1, 0 })[0]);
    Console.WriteLine(network.Forward(new float[] { 1, 1 })[0]);
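A possible explanation for the XOR snippet above, offered as a sketch and not a confirmed diagnosis: softmax normalizes its outputs so that they sum to 1, so a softmax layer with a single output returns 1 for every input regardless of the weights. The standalone function below illustrates this. `SoftmaxDemo` and `Softmax` are hypothetical names and no NeuralNetwork.NET calls are used.

```csharp
using System;
using System.Linq;

public static class SoftmaxDemo
{
    // Plain softmax over a vector of raw scores.
    public static float[] Softmax(float[] logits)
    {
        // Subtract the maximum first so Math.Exp cannot overflow.
        float max = logits.Max();
        float[] exps = logits.Select(v => (float)Math.Exp(v - max)).ToArray();
        float sum = exps.Sum();
        return exps.Select(e => e / sum).ToArray();
    }
}
```

With one element, `SoftmaxDemo.Softmax(new[] { -3f })` yields `{ 1 }` whatever the input value is, while `SoftmaxDemo.Softmax(new[] { 0f, 0f })` yields `{ 0.5, 0.5 }`. If this is what is happening, one thing to try is encoding the XOR targets as two one-hot classes with a 2-output softmax layer. Whether other settings in the snippet also matter, such as the 0.5 dropout on such a small network, is not tested here.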

I am trying to learn about Graph Neural Networks and wanted to know whether they can be built with this library.

I see all of the basic building blocks, but I couldn't find how to create a layer whose connections follow a graph. I was looking for some way to provide an adjacency list but was unsuccessful.

Is there an example that shows how, or can you clarify whether it is possible with this library?

Thanks!

Brilliant library. Beyond brilliant.

However:

Without at least one comprehensive sample, having to use ESP to figure out the intended usage is ridiculous beyond belief.

Simple example:

How would we implement a tensor reshape operation between layers?

How would we implement a custom loss function?

etc...

This is such a brilliant library, and quite a lot of work has been put into it. It's a shame there is not one comprehensive sample, which results in hours and hours of wasted time trying to figure out how to use it.

As a matter of fact the only way to really figure it out is to download the master source. Then wade through the source code looking for a glimpse of maybe a unit test or some snippet of code from which the intended usage can be inferred.

@Sergio0694 Just because your IQ is way beyond most humans does not mean that you can expect the rest of us to operate at the same level. Why build and publish this library if you don't actually want anyone without Extra Sensory Perception and mind reading abilities to be able to use it?

I don't know if this project is actively maintained anymore, but just in case, I'd mention that I got it working fine with CPU but GPU/CUDA does not seem to work since the Alea file isn't found. Running in .NET Framework 7.0.

Is it possible to import TensorFlow models into this library? Is it a feature? If not, is it planned? And if not again xD, is there a way I can do that manually?

Dear Sergio,

After reloading the *.nnet file, the training result is different: 28% before reloading and 24% after. How come?

Great

Zeljko

Hello, I managed to train the network on my own dataset,

but I don't know how to predict or classify (or simulate, or whatever the name is) any input data.

For example, in MATLAB:

y=sim(net, x);

Thank you.

The instruction CuDnnNetworkLayers.IsCudaSupportAvailable should return True if Cuda is available, False otherwise

Exception is raised:

System.IO.FileNotFoundException: 'Could not load file or assembly 'Alea, Version=3.0.0.0, Culture=neutral, PublicKeyToken=ba52afc3c2e933d6'.

Gym.NET/examples/AtariDeepQLearner/AtariDeepQLearner/Trainer.cs line 32

Awesome library! I wanted to ask whether the library is thread-safe. We've been wanting to use it in an ASP.NET application to train (very small) NNs on the fly.

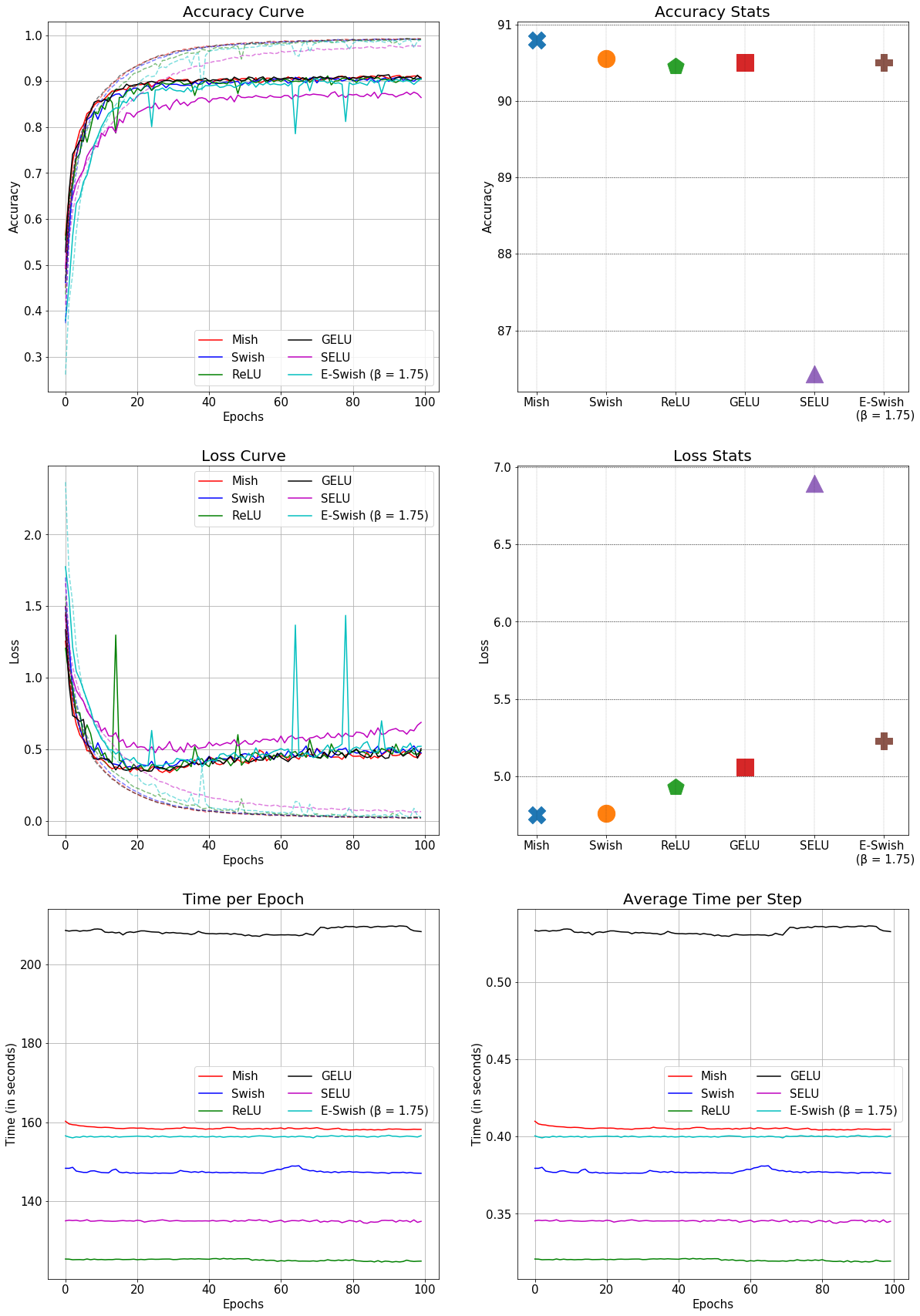

Mish is a novel activation function proposed in this paper.

It has shown promising results so far and has been adopted in several packages including:

All benchmarks, analysis and links to official package implementations can be found in this repository

It would be nice to have Mish as an option within the activation function group.

This is the comparison of Mish with other conventional activation functions in a SEResNet-50 for CIFAR-10: (Better accuracy and faster than GELU)
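For reference, Mish is usually defined as mish(x) = x * tanh(softplus(x)), where softplus(x) = ln(1 + e^x). A minimal standalone sketch of that formula follows. `MishActivation`, `Softplus` and `Mish` are hypothetical names, and the code is not tied to the library's activation function API.

```csharp
using System;

public static class MishActivation
{
    // softplus(x) = ln(1 + e^x), written in a form that avoids overflow
    // for large positive x: max(x, 0) + ln(1 + e^(-|x|)).
    public static double Softplus(double x) =>
        Math.Max(x, 0.0) + Math.Log(1.0 + Math.Exp(-Math.Abs(x)));

    // mish(x) = x * tanh(softplus(x))
    public static double Mish(double x) => x * Math.Tanh(Softplus(x));
}
```

As a quick sanity check, `MishActivation.Mish(0)` is exactly 0 and `MishActivation.Mish(1)` is approximately 0.8651. For large positive inputs the function approaches the identity, and for large negative inputs it approaches 0.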

I need a plain output layer. I could also implement it myself if you give me some hints.

I want to do time series forecasting, and it took me a while to realize I was misusing the softmax layer, which is not what I need.

I don't need the GPU version, so:

I think I should implement a subclass of OutputLayerBase that relies on some CpuDnn.SOMETHINGFORWARD.. correct?

I tried to install it from NuGet, but got this error:

Could not install package 'NeuralNetwork.NET 2.1.3'. You are trying to install this package into a project that targets '.NETFramework,Version=v4.6.1', but the package does not contain any assembly references or content files that are compatible with that framework.

Could anyone help me?

Thanks

Training often stops with TrainingStopReason.NumericOverflow. It happens quite a lot, but the settings have a huge impact on how often. Is there a way to mitigate this?

Brilliant work btw!


Nice library!

Is it possible to extract results on the training data?
