scikit-learn-contrib is a github organization for gathering high-quality scikit-learn compatible projects. It also provides a template for establishing new scikit-learn compatible projects.

With the explosion of the number of machine learning papers, it becomes increasingly difficult for users and researchers to implement and compare algorithms. Even when authors release their software, it takes time to learn how to use it and how to apply it to one's own purposes. The goal of scikit-learn-contrib is to provide easy-to-install and easy-to-use high-quality machine learning software. With scikit-learn-contrib, users can install a project by pip install sklearn-contrib-project-name and immediately try it on their data with the usual fit, predict and transform methods. In addition, projects are compatible with scikit-learn tools such as grid search, pipelines, etc.

If you would like to include your own project in scikit-learn-contrib, take a look at the workflow.

A simple-but-efficient density-based clustering algorithm that can find clusters of arbitrary size, shapes and densities in two-dimensions. Higher dimensions are first reduced to 2-D using the t-sne. The algorithm relies on a single parameter K, the number of nearest neighbors.

Maintained by: Mohamed Abbas

Large-scale linear classification, regression and ranking.

Maintained by Mathieu Blondel and Fabian Pedregosa.

Fast and modular Generalized Linear Models with support for models missing in scikit-learn.

Maintained by Mathurin Massias, Pierre-Antoine Bannier, Quentin Klopfenstein and Quentin Bertrand.

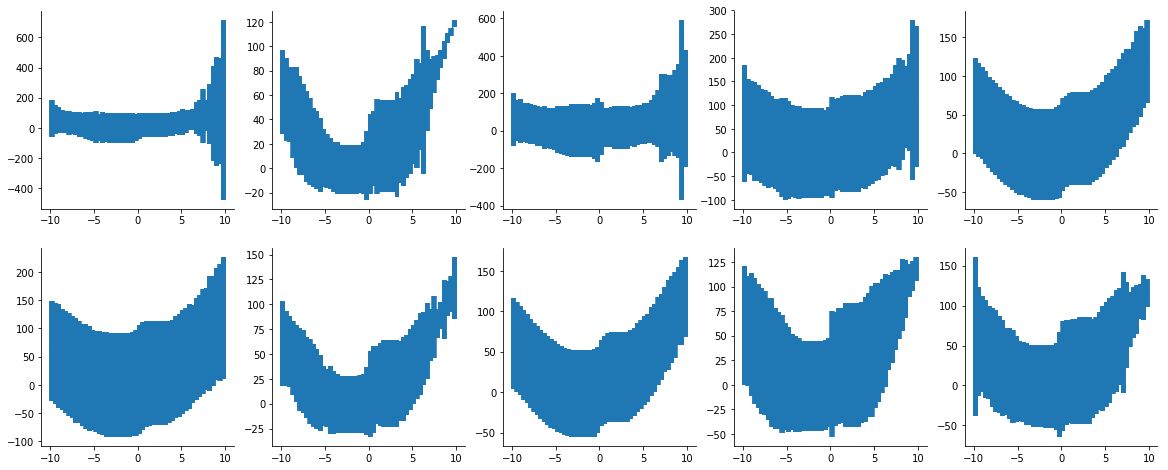

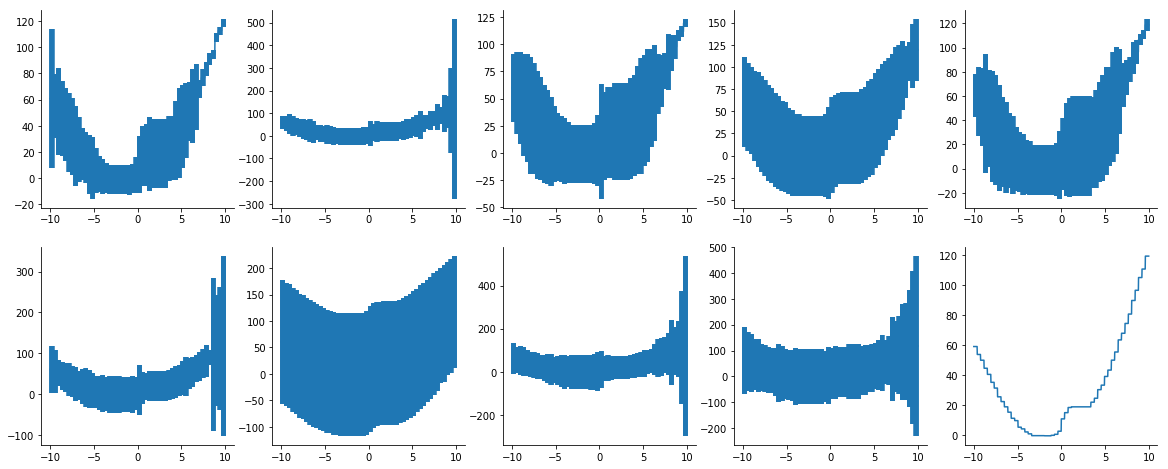

A Python implementation of Jerome Friedman's Multivariate Adaptive Regression Splines.

Maintained by Jason Rudy and Mehdi.

Python module to perform under sampling and over sampling with various techniques.

Maintained by Guillaume Lemaitre, Fernando Nogueira, Dayvid Oliveira and Christos Aridas.

Factorization machines and polynomial networks for classification and regression in Python.

Maintained by Vlad Niculae.

Confidence intervals for scikit-learn forest algorithms.

Maintained by Ariel Rokem, Kivan Polimis and Bryna Hazelton.

A high performance implementation of HDBSCAN clustering.

Maintained by Leland McInnes, jc-healy, c-north and Steve Astels.

A library of sklearn compatible categorical variable encoders.

Maintained by Will McGinnis and Paul Westenthanner

Python implementations of the Boruta all-relevant feature selection method.

Maintained by Daniel Homola

Pandas integration with sklearn.

Maintained by Israel Saeta Pérez

Machine learning with logical rules in Python.

Maintained by Florian Gardin, Ronan Gautier, Nicolas Goix and Jean-Matthieu Schertzer.

A Python implementation of the stability selection feature selection algorithm.

Maintained by Thomas Huijskens

Metric learning algorithms in Python.

Maintained by CJ Carey, Yuan Tang, William de Vazelhes, Aurélien Bellet and Nathalie Vauquier.