Sayan Deb Sarkar1, Ondrej Miksik2, Marc Pollefeys1,2, Daniel Barath1, Iro Armeni1

1ETH Zurich 2Microsoft Mixed Reality & AI Labs

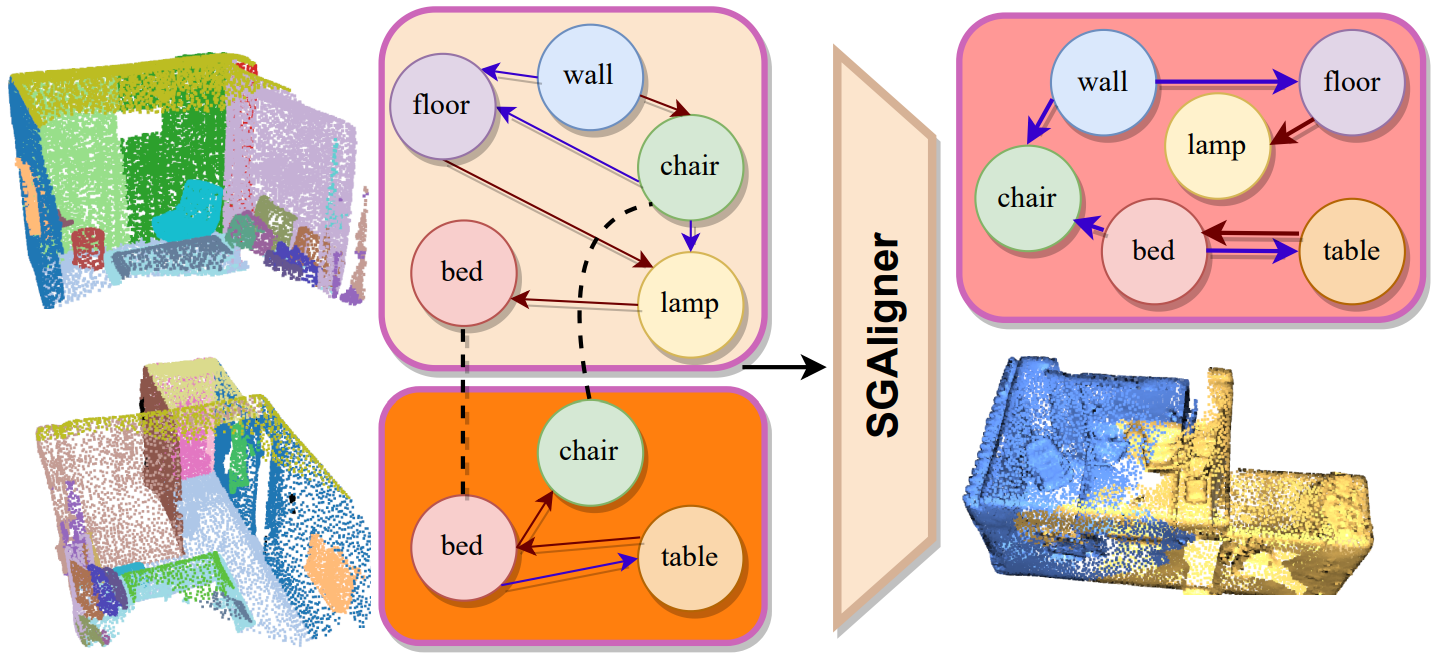

SGAligner aligns 3D scene graphs of environments using multi-modal learning and leverages the output for the downstream task of 3D point cloud registration.

[Project Webpage] [Paper]

- 14. July 2023: SGAligner accepted to ICCV 2023. 🔥

- 1. May 2023: SGAligner preprint released on arXiv.

- 10. April 2023: Code released.

├── sgaligner

│ ├── data-preprocessing <- subscan generation + preprocessing

│ ├── configs <- configuration files

│ ├── src

│ │ │── aligner <- SGAligner modules

│ │ │── datasets <- dataloader for 3RScan subscans

│ │ │── engine <- trainer classes

│ │ │── GeoTransformer <- geotransformer submodule for registration

│ │ │── inference <- inference files for alignment + downstream applications

│ │ │── trainers <- train + validation loop (EVA + SGAligner)

│ │── utils <- util functions

│ │── README.md

│ │── scripts <- bash scripts for data generation + preprocessing + training

│ └── output <- folder that stores models and logs

│

The main dependencies of the project are the following:

python: 3.8.15

cuda: 11.6

You can set up a conda environment as follows:

git clone --recurse-submodules -j8 git@github.com:sayands/sgaligner.git

cd sgaligner

conda env create -f req.yml

Please follow the submodule for additional installation requirements and setup of GeoTransformer.

The pre-trained model and other meta files are available here.

After installing the dependencies, we preprocess the datasets and provide the benchmarks.

Download 3RScan and 3DSSG. Move all files of 3DSSG to a new files/ directory within 3RScan. The structure should be:

├── 3RScan

│ ├── files <- all 3RScan and 3DSSG meta files (NOT the scan data)

│ ├── scenes <- scans

│ └── out <- Default output directory for generated subscans (created when running pre-processing)
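Before running the preprocessing, it can help to confirm that the layout above is in place. The snippet below is a minimal sketch, not part of the repository: the function name `missing_dirs` and the choice to check only `files` and `scenes` (since `out` is created during pre-processing) are assumptions made for illustration.

```python
from pathlib import Path
from typing import List

# Sub-directories expected to exist before preprocessing.
# "out" is omitted because it is created when pre-processing runs.
REQUIRED_SUBDIRS = ("files", "scenes")


def missing_dirs(root: str) -> List[str]:
    """Return the required sub-directories that are absent under `root`."""
    base = Path(root)
    return [name for name in REQUIRED_SUBDIRS if not (base / name).is_dir()]
```

Calling `missing_dirs("/path/to/3RScan")` returns an empty list when both directories are present.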

To generate labels.instances.align.annotated.v2.ply for each 3RScan scan, please refer to the repo from here.

Change the absolute paths in utils/define.py.

First, we create subscans from each 3RScan scan using the ground truth scene graphs from the 3DSSG dataset, and then calculate the pairwise overlap ratio for the subscans in a scan. Finally, we preprocess the data for our framework. The relevant code can be found in the data-preprocessing/ directory. You can use the following command to generate the subscans.

bash scripts/generate_data_scan3r_gt.sh

Note: To adhere to our evaluation procedure, please do not change the seed value in the files in the configs/ directory.
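To give an intuition for the pairwise overlap ratio mentioned above, here is a minimal sketch that approximates it with voxel occupancy. This is an assumption for illustration only: the repository's own overlap computation may differ, and the names `voxelize`, `overlap_ratio` and the `voxel_size` default are hypothetical.

```python
from typing import Iterable, Set, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def voxelize(points: Iterable[Point], voxel_size: float) -> Set[Voxel]:
    """Map each point to the integer index of the voxel containing it."""
    return {
        (int(x // voxel_size), int(y // voxel_size), int(z // voxel_size))
        for x, y, z in points
    }


def overlap_ratio(src: Iterable[Point], ref: Iterable[Point], voxel_size: float = 0.1) -> float:
    """Fraction of occupied source voxels that are also occupied in `ref`."""
    src_voxels = voxelize(src, voxel_size)
    if not src_voxels:
        return 0.0  # an empty subscan overlaps with nothing
    ref_voxels = voxelize(ref, voxel_size)
    return len(src_voxels & ref_voxels) / len(src_voxels)
```

Note that this ratio is asymmetric: it is measured relative to the source subscan, so swapping the arguments can give a different value.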

To generate overlapping and non-overlapping pairs, use:

python preprocessing/gen_all_pairs_fileset.py

This creates a fileset containing as many randomly chosen non-overlapping pairs from the generated subscans as there are overlapping pairs produced during subscan generation.
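The balancing idea (one random non-overlapping pair for every overlapping pair) can be sketched as follows. This is not the script's actual code: the function name, the `seed` default, and the assumption that any pair absent from the overlapping set counts as non-overlapping are all hypothetical.

```python
import random
from itertools import combinations
from typing import List, Sequence, Set, Tuple

Pair = Tuple[str, str]


def sample_non_overlapping(
    subscan_ids: Sequence[str],
    overlapping: Set[Pair],
    seed: int = 42,  # hypothetical default, not the value used in configs/
) -> List[Pair]:
    """Draw as many non-overlapping pairs as there are overlapping ones."""
    # Normalise pair order so (a, b) and (b, a) count as the same pair.
    known = {tuple(sorted(p)) for p in overlapping}
    candidates = [p for p in combinations(sorted(subscan_ids), 2) if p not in known]
    rng = random.Random(seed)  # fixed seed keeps the fileset reproducible
    return rng.sample(candidates, min(len(known), len(candidates)))
```

Using a dedicated `random.Random` instance keeps the sampling reproducible without touching the global random state.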

Usage on Predicted Scene Graphs : Coming Soon!

To train SGAligner on 3RScan subscans generated from here, you can use :

cd src

python trainers/trainval_sgaligner.py --config ../configs/scan3r/scan3r_ground_truth.yaml

We also provide training scripts for EVA, used as a baseline after being adapted for scene graph alignment. To train EVA in the same way as SGAligner on the same data, you can use:

cd src

python trainers/trainval_eva.py --config ../configs/scan3r/scan3r_eva.yaml

We provide config files for the corresponding data in the configs/ directory. To tune the hyper-parameters, change the parameters in these configuration files.

cd src

python inference/sgaligner/inference_align_reg.py --config ../configs/scan3r/scan3r_ground_truth.yaml --snapshot <path to SGAligner trained model> --reg_snapshot <path to GeoTransformer model trained on 3DMatch>

❗ Run Generating Overlapping and Non-Overlapping Subscan Pairs before.

To run the inference, you need to:

cd src

python inference/sgaligner/inference_find_overlapper.py --config ../configs/scan3r/scan3r_gt_w_wo_overlap.yaml --snapshot <path to SGAligner trained model> --reg_snapshot <path to GeoTransformer model trained on 3DMatch>

First, we generate the subscans per 3RScan scan using:

python data-preprocessing/gen_scan_subscan_mapping.py --split <the split you want to generate the mapping for>

Then, to run the inference, you need to:

cd src

python inference/sgaligner/inference_mosaicking.py --config ../configs/scan3r/scan3r_gt_mosaicking.yaml --snapshot <path to SGAligner trained model> --reg_snapshot <path to GeoTransformer model trained on 3DMatch>

We provide detailed results and comparisons here.

| Method | Mean Reciprocal Rank | Hits@1 | Hits@2 | Hits@3 | Hits@4 | Hits@5 |
|---|---|---|---|---|---|---|
| EVA | 0.867 | 0.790 | 0.884 | 0.938 | 0.963 | 0.977 |
|  | 0.884 | 0.835 | 0.886 | 0.921 | 0.938 | 0.951 |
|  | 0.897 | 0.852 | 0.899 | 0.931 | 0.945 | 0.955 |
|  | 0.911 | 0.861 | 0.916 | 0.947 | 0.961 | 0.970 |
| SGAligner | 0.950 | 0.923 | 0.957 | 0.974 | 0.9823 | 0.987 |
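For readers unfamiliar with the alignment metrics in this table, the sketch below shows the standard definitions of Mean Reciprocal Rank and Hits@K, given the 1-based rank at which the correct match was retrieved for each query node. The function name and input format are assumptions for illustration and are not taken from the repository's evaluation code.

```python
from typing import Dict, Sequence


def alignment_metrics(
    ranks: Sequence[int], ks: Sequence[int] = (1, 2, 3, 4, 5)
) -> Dict[str, float]:
    """Compute MRR and Hits@K from 1-based ranks of the correct match."""
    if not ranks:
        raise ValueError("ranks must not be empty")
    n = len(ranks)
    # MRR: average of the reciprocal rank of the correct match.
    metrics = {"mrr": sum(1.0 / r for r in ranks) / n}
    # Hits@K: fraction of queries whose correct match is within the top K.
    for k in ks:
        metrics[f"hits@{k}"] = sum(r <= k for r in ranks) / n
    return metrics
```

For example, ranks of `[1, 2, 4]` give Hits@1 of 1/3 and Hits@4 of 1.0.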

| Method | CD | RRE | RTE | FMR | RR |
|---|---|---|---|---|---|
| GeoTr | 0.02247 | 1.813 | 2.79 | 98.94 | 98.49 |
| Ours, K=1 | 0.01677 | 1.425 | 2.88 | 99.85 | 98.79 |
| Ours, K=2 | 0.01111 | 1.012 | 1.67 | 99.85 | 99.40 |
| Ours, K=3 | 0.01525 | 1.736 | 2.55 | 99.85 | 98.81 |
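As a reference for two of the registration metrics above, the sketch below implements the commonly used definitions of Relative Rotation Error (RRE) and Relative Translation Error (RTE). It assumes 3x3 rotation matrices given as nested sequences and returns RRE in degrees and RTE in the unit of the inputs; the units and exact formulation behind the table are not stated here, so treat this as illustrative rather than the repository's evaluation code.

```python
import math
from typing import Sequence

Matrix = Sequence[Sequence[float]]
Vector = Sequence[float]


def relative_rotation_error(r_est: Matrix, r_gt: Matrix) -> float:
    """Angle in degrees of the residual rotation between r_est and r_gt."""
    # trace(R_gt^T R_est) equals the sum of element-wise products.
    trace = sum(r_gt[i][j] * r_est[i][j] for i in range(3) for j in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # guard against round-off
    return math.degrees(math.acos(cos_angle))


def relative_translation_error(t_est: Vector, t_gt: Vector) -> float:
    """Euclidean distance between estimated and ground-truth translations."""
    return math.dist(t_est, t_gt)
```

Both functions return 0 for a perfect estimate; lower values indicate a better registration.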

- Add 3D Point Cloud Mosaicking

- Add Support For EVA

- Add usage on Predicted Scene Graphs

- Add scene graph alignment of local 3D scenes to prior 3D maps

- Add overlapping scene finder with a traditional retrieval method (FPFH + VLAD + KNN)

@article{sarkar2023sgaligner,

title={SGAligner : 3D Scene Alignment with Scene Graphs},

author={Sayan Deb Sarkar and Ondrej Miksik and Marc Pollefeys and Daniel Barath and Iro Armeni},

journal={Proceedings of the IEEE International Conference on Computer Vision (ICCV)},

year={2023}

}

In this project we use (parts of) the official implementations of the following works and thank the respective authors for open sourcing their methods:

- SceneGraphFusion (3RScan Dataloader)

- GeoTransformer (Registration)

- MCLEA (Alignment)