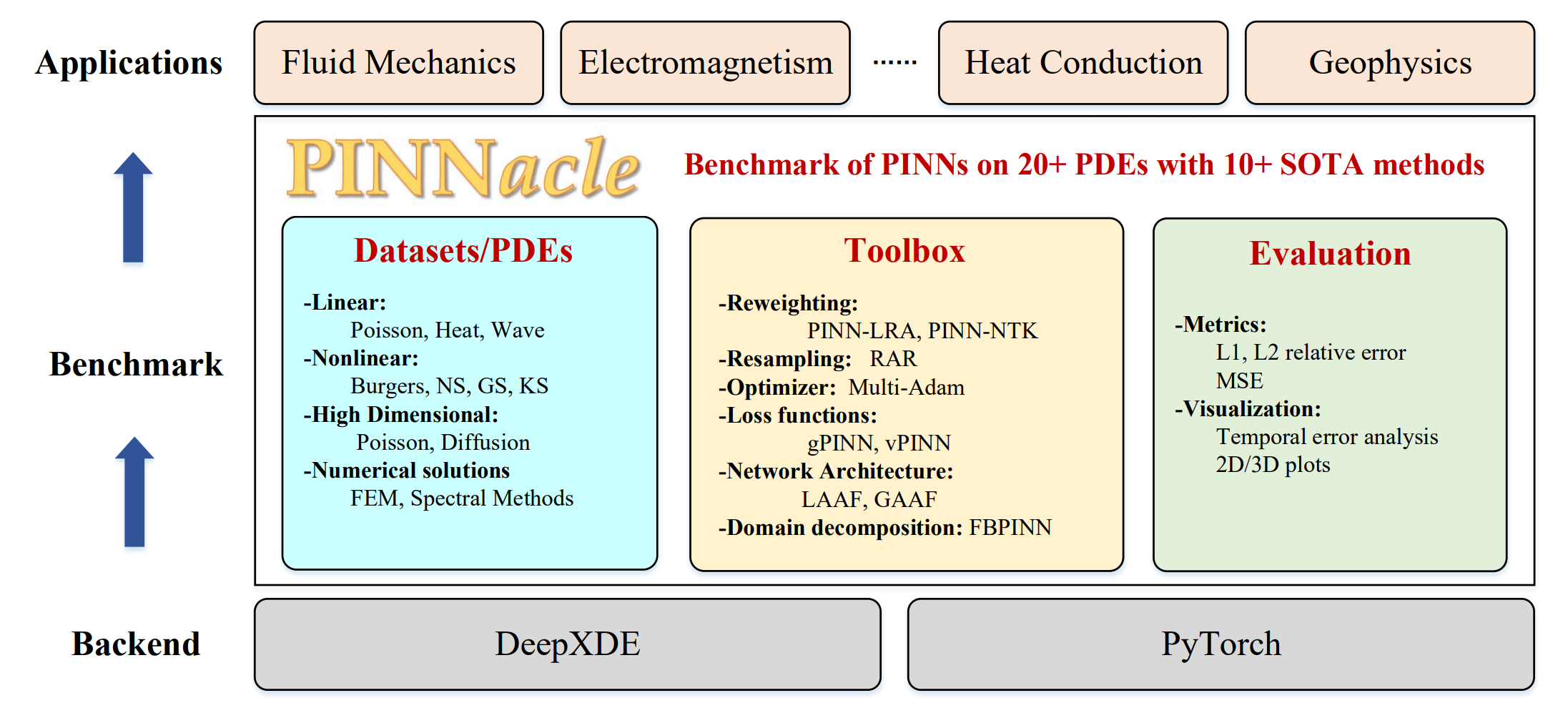

This repository contains the codebase for PINNacle: A Comprehensive Benchmark of Physics-Informed Neural Networks for Solving PDEs. Our paper is currently under review. We will provide a more detailed guide soon.

The benchmark implements the following PINN variants and introduces a new, challenging dataset for comparing them:

| Method | Type |
|---|---|
| PINN | Vanilla PINNs |
| PINNs(Adam+L-BFGS) | Vanilla PINNs |
| PINN-LRA | Loss reweighting |
| PINN-NTK | Loss reweighting |
| RAR | Collocation points resampling |
| MultiAdam | New optimizer |
| gPINN | New loss functions (regularization terms) |
| hp-VPINN | New loss functions (variational formulation) |
| LAAF | New architecture (activation) |
| GAAF | New architecture (activation) |
| FBPINN | New architecture (domain decomposition) |

See the following references for more details:

- Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations

- Understanding and mitigating gradient pathologies in physics-informed neural networks

- When and why PINNs fail to train: A neural tangent kernel perspective

- A comprehensive study of non-adaptive and residual-based adaptive sampling for physics-informed neural networks

- MultiAdam: Parameter-wise Scale-invariant Optimizer for Multiscale Training of Physics-informed Neural Networks

- Gradient-enhanced physics-informed neural networks for forward and inverse PDE problems

- Sobolev Training for Physics Informed Neural Networks

- Variational Physics-Informed Neural Networks For Solving Partial Differential Equations

- hp-VPINNs: Variational Physics-Informed Neural Networks With Domain Decomposition

- Locally adaptive activation functions with slope recovery for deep and physics-informed neural networks

- Adaptive activation functions accelerate convergence in deep and physics-informed neural networks

- Finite Basis Physics-Informed Neural Networks (FBPINNs): a scalable domain decomposition approach for solving differential equations

Clone the repository and install the dependencies:

    git clone https://github.com/i207M/PINNacle.git --depth 1
    cd PINNacle
    pip install -r requirements.txt

Run all 20 cases with default settings:

    python benchmark.py [--name EXP_NAME] [--seed SEED] [--device DEVICE]

If you find our work useful, please cite our paper:

    @article{hao2023pinnacle,
      title={PINNacle: A Comprehensive Benchmark of Physics-Informed Neural Networks for Solving PDEs},
      author={Hao, Zhongkai and Yao, Jiachen and Su, Chang and Su, Hang and Wang, Ziao and Lu, Fanzhi and Xia, Zeyu and Zhang, Yichi and Liu, Songming and Lu, Lu and others},
      journal={arXiv preprint arXiv:2306.08827},
      year={2023}
    }

We also recommend the survey paper Physics-Informed Machine Learning: A Survey on Problems, Methods and Applications, which covers PINNs, neural operators, and other paradigms of physics-informed machine learning (PIML).

    @article{hao2022physics,
      title={Physics-informed machine learning: A survey on problems, methods and applications},
      author={Hao, Zhongkai and Liu, Songming and Zhang, Yichi and Ying, Chengyang and Feng, Yao and Su, Hang and Zhu, Jun},
      journal={arXiv preprint arXiv:2211.08064},
      year={2022}
    }
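As a usage note for the `benchmark.py` command shown earlier: benchmark results are often reported over several random seeds, so it can help to script repeated runs. The following is a minimal sketch, not part of this repository. It uses only the `--name`, `--seed`, and `--device` flags listed above. The helper names `build_command` and `run_sweep`, and the `<base>_seed<N>` naming scheme, are hypothetical. It also assumes that `--name` accepts a free-form string and that the script is run from the repository root. The accepted format of `--device` is not documented here, so it is left unset by default.

```python
import shlex
import subprocess
import sys


def build_command(name, seed, device=None):
    """Build the argument list for one benchmark.py run.

    Only flags listed in this README are used. The value format of
    --device is an assumption, so it is omitted unless given.
    """
    cmd = [sys.executable, "benchmark.py", "--name", name, "--seed", str(seed)]
    if device is not None:
        cmd += ["--device", str(device)]
    return cmd


def run_sweep(base_name, seeds, device=None, dry_run=True):
    """Print (and optionally execute) one benchmark.py run per seed."""
    # Hypothetical naming scheme: "<base_name>_seed<seed>".
    commands = [build_command(f"{base_name}_seed{s}", s, device) for s in seeds]
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            # check=True stops the sweep if a run exits with an error.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    # Dry run: prints the three commands without launching any training.
    run_sweep("demo", seeds=[0, 1, 2], dry_run=True)
```

With `dry_run=True` the commands are only printed, which makes it easy to check them before committing to a full run of all 20 cases per seed. Set `dry_run=False` to execute them one after another.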