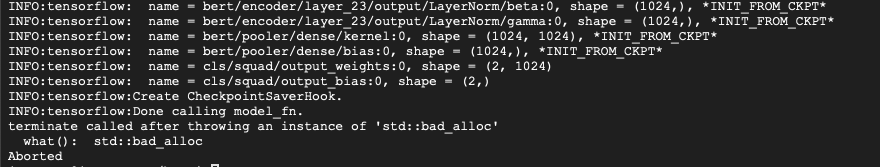

When I run the classification script "run_classifier.py" as follows:

python run_classifier.py --task_name=XNLI --do_train=true --do_eval=true --data_dir=$XNLI_DIR --vocab_file=$BERT_BASE_DIR/vocab.txt --bert_config_file=$BERT_BASE_DIR/bert_config.json --init_checkpoint=$BERT_BASE_DIR/bert_model.ckpt --max_seq_length=128 --train_batch_size=32 --learning_rate=5e-5 --num_train_epochs=0.01 --output_dir=/tmp/xnli_output/

I get the error below. I cannot find the file "bert_model.ckpt".

INFO:tensorflow:Error recorded from training_loop: Unsuccessful TensorSliceReader constructor: Failed to find any matching files for /path/chinese_L-12_H-768_A-12//bert_model.ckpt
INFO:tensorflow:training_loop marked as finished
WARNING:tensorflow:Reraising captured error
Traceback (most recent call last):
  File "run_classifier.py", line 838, in <module>
    tf.app.run()
  File "/Library/anaconda2/lib/python2.7/site-packages/tensorflow/python/platform/app.py", line 125, in run
    _sys.exit(main(argv))
  File "run_classifier.py", line 794, in main
    estimator.train(input_fn=train_input_fn, max_steps=num_train_steps)
  File "/Library/anaconda2/lib/python2.7/site-packages/tensorflow/contrib/tpu/python/tpu/tpu_estimator.py", line 2400, in train
    rendezvous.raise_errors()
  File "/Library/anaconda2/lib/python2.7/site-packages/tensorflow/contrib/tpu/python/tpu/error_handling.py", line 128, in raise_errors
    six.reraise(typ, value, traceback)
  File "/Library/anaconda2/lib/python2.7/site-packages/tensorflow/contrib/tpu/python/tpu/tpu_estimator.py", line 2394, in train
    saving_listeners=saving_listeners
  File "/Library/anaconda2/lib/python2.7/site-packages/tensorflow/python/estimator/estimator.py", line 356, in train
    loss = self._train_model(input_fn, hooks, saving_listeners)
  File "/Library/anaconda2/lib/python2.7/site-packages/tensorflow/python/estimator/estimator.py", line 1181, in _train_model
    return self._train_model_default(input_fn, hooks, saving_listeners)
  File "/Library/anaconda2/lib/python2.7/site-packages/tensorflow/python/estimator/estimator.py", line 1211, in _train_model_default
    features, labels, model_fn_lib.ModeKeys.TRAIN, self.config)
  File "/Library/anaconda2/lib/python2.7/site-packages/tensorflow/contrib/tpu/python/tpu/tpu_estimator.py", line 2186, in _call_model_fn
    features, labels, mode, config)
  File "/Library/anaconda2/lib/python2.7/site-packages/tensorflow/python/estimator/estimator.py", line 1169, in _call_model_fn
    model_fn_results = self._model_fn(features=features, **kwargs)
  File "/Library/anaconda2/lib/python2.7/site-packages/tensorflow/contrib/tpu/python/tpu/tpu_estimator.py", line 2470, in _model_fn
    features, labels, is_export_mode=is_export_mode)
  File "/Library/anaconda2/lib/python2.7/site-packages/tensorflow/contrib/tpu/python/tpu/tpu_estimator.py", line 1250, in call_without_tpu
    return self._call_model_fn(features, labels, is_export_mode=is_export_mode)
  File "/Library/anaconda2/lib/python2.7/site-packages/tensorflow/contrib/tpu/python/tpu/tpu_estimator.py", line 1524, in _call_model_fn
    estimator_spec = self._model_fn(features=features, **kwargs)
  File "run_classifier.py", line 575, in model_fn
    ) = modeling.get_assignment_map_from_checkpoint(tvars, init_checkpoint)
  File "/Users/xiaoqiugen/Project/tmp/bert/modeling.py", line 331, in get_assignment_map_from_checkpoint
    init_vars = tf.train.list_variables(init_checkpoint)
  File "/Library/anaconda2/lib/python2.7/site-packages/tensorflow/python/training/checkpoint_utils.py", line 95, in list_variables
    reader = load_checkpoint(ckpt_dir_or_file)
  File "/Library/anaconda2/lib/python2.7/site-packages/tensorflow/python/training/checkpoint_utils.py", line 64, in load_checkpoint
    return pywrap_tensorflow.NewCheckpointReader(filename)
  File "/Library/anaconda2/lib/python2.7/site-packages/tensorflow/python/pywrap_tensorflow_internal.py", line 314, in NewCheckpointReader
    return CheckpointReader(compat.as_bytes(filepattern), status)
  File "/Library/anaconda2/lib/python2.7/site-packages/tensorflow/python/framework/errors_impl.py", line 526, in __exit__
    c_api.TF_GetCode(self.status.status))
tensorflow.python.framework.errors_impl.NotFoundError: Unsuccessful TensorSliceReader constructor: Failed to find any matching files for /path/chinese_L-12_H-768_A-12//bert_model.ckpt
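
To narrow this down, it may help to check what actually exists on disk under that checkpoint prefix. As far as I understand, a TensorFlow checkpoint is not a single file named "bert_model.ckpt": the name is a prefix, and the data lives in files such as "bert_model.ckpt.index" and "bert_model.ckpt.data-00000-of-00001". The path in the error also starts with "/path/", which might mean `$BERT_BASE_DIR` still holds a placeholder value. Below is a minimal sketch in Python 3 using only the standard library; the helper name `describe_checkpoint` is hypothetical and not part of BERT or TensorFlow.

```python
import glob
import os


def describe_checkpoint(prefix):
    """Report which files exist on disk for a checkpoint prefix.

    `prefix` is the value passed to --init_checkpoint, for example
    $BERT_BASE_DIR/bert_model.ckpt. This helper is a hypothetical
    diagnostic, not part of BERT or TensorFlow.
    """
    # Match every file that starts with the prefix followed by a dot,
    # escaping any glob metacharacters in the path itself.
    files = sorted(glob.glob(glob.escape(prefix) + ".*"))
    return {
        "dir_exists": os.path.isdir(os.path.dirname(prefix)),
        "has_index": any(f.endswith(".index") for f in files),
        "has_data": any(".data-" in os.path.basename(f) for f in files),
        "files": files,
    }


if __name__ == "__main__":
    # Assumes BERT_BASE_DIR is set the same way as for run_classifier.py.
    base_dir = os.environ.get("BERT_BASE_DIR", ".")
    report = describe_checkpoint(os.path.join(base_dir, "bert_model.ckpt"))
    for key, value in report.items():
        print(key, "=", value)
```

If `dir_exists` is false, the directory in `$BERT_BASE_DIR` is probably wrong. If the directory exists but `has_index` or `has_data` is false, the model archive may not have been fully extracted there.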