This crate is intended to load glTF 2.0, a file format designed for the efficient transmission of 3D assets.

rustc version 1.61 or above is required.

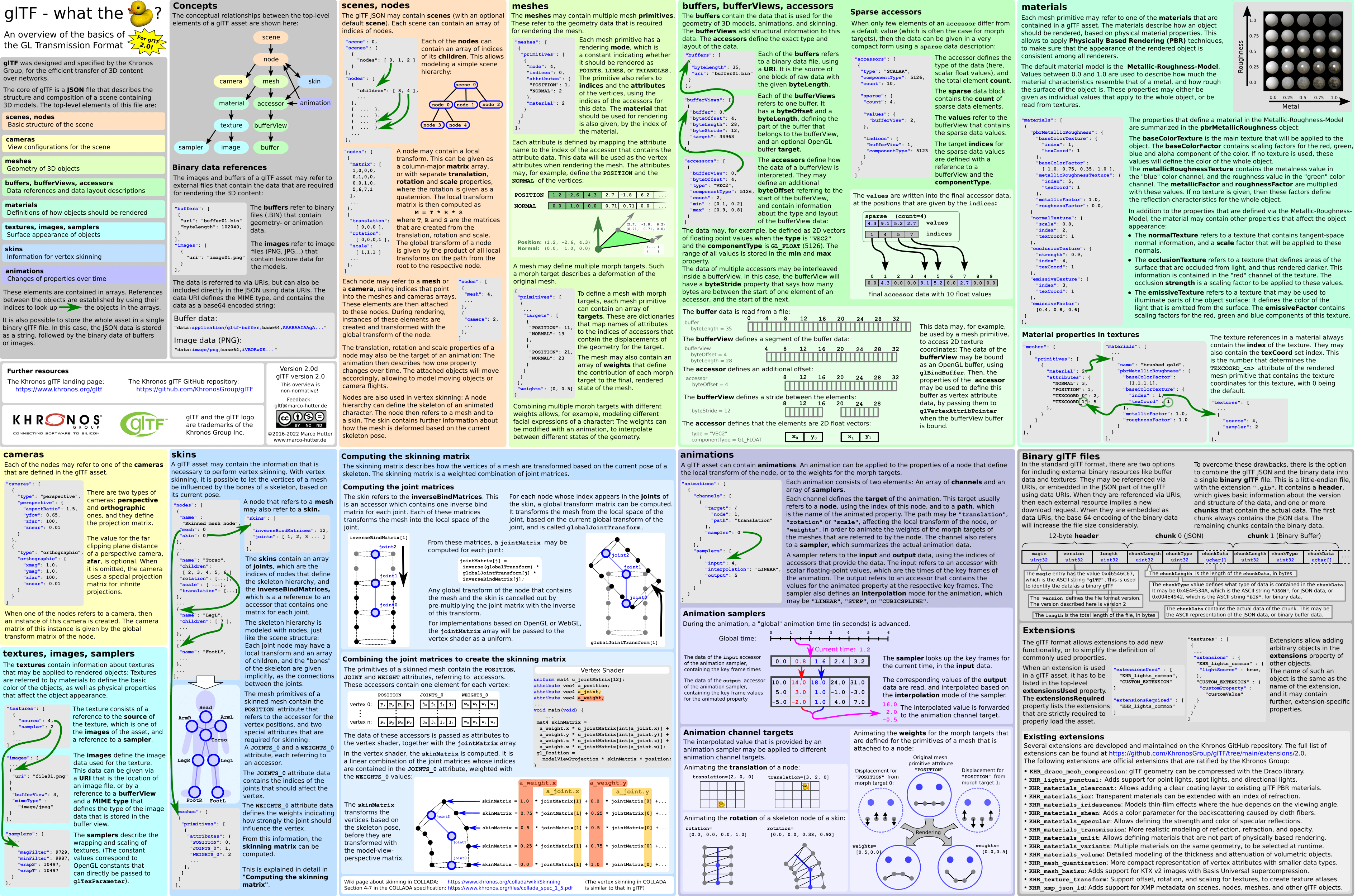

From javagl/gltfOverview

See the crate documentation for example usage.
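The crate does the parsing for you. The sketch below only illustrates one early step a glTF 2.0 loader performs: recognising the binary `.glb` container. Per the glTF 2.0 specification, a GLB file starts with a 12-byte header made of three little-endian `u32` fields: the ASCII magic `glTF`, the container version (2), and the total file length in bytes. `GlbHeader` and `parse_glb_header` are hypothetical names used only for this std-only sketch. They are not part of the gltf crate's API.

```rust
/// Hypothetical type for this sketch: the decoded fields of a GLB header.
#[derive(Debug, PartialEq, Eq)]
pub struct GlbHeader {
    /// Container version; glTF 2.0 binary files use 2.
    pub version: u32,
    /// Total length of the GLB file in bytes, header and chunks included.
    pub length: u32,
}

/// Hypothetical helper (not the gltf crate's API): validates and decodes
/// the 12-byte header at the start of a GLB buffer.
pub fn parse_glb_header(bytes: &[u8]) -> Result<GlbHeader, String> {
    if bytes.len() < 12 {
        return Err(format!("expected at least 12 bytes, got {}", bytes.len()));
    }
    // Bytes 0..4 must spell the ASCII magic "glTF".
    if !bytes.starts_with(b"glTF") {
        return Err("missing glTF magic".to_string());
    }
    // The remaining header fields are little-endian u32 values.
    let read_u32 =
        |at: usize| u32::from_le_bytes([bytes[at], bytes[at + 1], bytes[at + 2], bytes[at + 3]]);
    let version = read_u32(4);
    if version != 2 {
        return Err(format!("unsupported GLB container version {}", version));
    }
    // The declared length covers the header plus all chunks, so it can be
    // neither shorter than the header nor longer than the data we have.
    let length = read_u32(8);
    if length < 12 || length as usize > bytes.len() {
        return Err(format!(
            "declared length {} is invalid for {} available bytes",
            length,
            bytes.len()
        ));
    }
    Ok(GlbHeader { version, length })
}
```

A complete loader would then read the JSON chunk and the optional BIN chunk that follow the header. The crate handles this on your behalf.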

By default, gltf ignores all extras and names included with glTF assets. You can override this by enabling the `extras` and `names` features, respectively.

```toml
[dependencies.gltf]
version = "1.2"
features = ["extras", "names"]
```

The following glTF extensions are supported by the crate:

- `KHR_lights_punctual`
- `KHR_materials_pbrSpecularGlossiness`
- `KHR_materials_unlit`
- `KHR_texture_transform`
- `KHR_materials_variants`
- `KHR_materials_volume`
- `KHR_materials_specular`
- `KHR_materials_transmission`
- `KHR_materials_ior`
- `KHR_materials_emissive_strength`
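A glTF 2.0 asset lists the extensions it cannot be loaded without in its top-level `extensionsRequired` array. The std-only sketch below shows one way an application could compare that array against the extensions it has enabled as Cargo features, and report any that are missing before a load is attempted. `SUPPORTED` and `missing_extensions` are hypothetical names, not part of the gltf crate's API. The sketch also assumes the `extensionsRequired` strings have already been read from the asset's JSON.

```rust
/// Extension names listed as supported by the crate (each one is enabled
/// through a Cargo feature of the same name).
pub const SUPPORTED: &[&str] = &[
    "KHR_lights_punctual",
    "KHR_materials_pbrSpecularGlossiness",
    "KHR_materials_unlit",
    "KHR_texture_transform",
    "KHR_materials_variants",
    "KHR_materials_volume",
    "KHR_materials_specular",
    "KHR_materials_transmission",
    "KHR_materials_ior",
    "KHR_materials_emissive_strength",
];

/// Hypothetical helper: returns the entries of an asset's
/// `extensionsRequired` array that are not in `enabled`, in their original order.
pub fn missing_extensions<'a>(required: &[&'a str], enabled: &[&str]) -> Vec<&'a str> {
    required
        .iter()
        .copied()
        .filter(|name| !enabled.iter().any(|e| *e == *name))
        .collect()
}
```

Extensions that appear only in `extensionsUsed` are optional, so a check like this is usually applied to `extensionsRequired` alone.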

To use an extension, list its name in the `features` array of the `gltf` dependency.

```toml
[dependencies.gltf]
version = "1.2"
features = ["KHR_materials_unlit"]
```

The `gltf-display` example demonstrates how the glTF JSON is deserialized.

```sh
cargo run --example gltf-display path/to/asset.gltf
```

The `gltf-export` example demonstrates how glTF JSON can be built and exported using the `gltf-json` crate.

```sh
cargo run --example gltf-export
```

The `gltf-roundtrip` example deserializes and serializes the JSON part of a glTF asset.

```sh
cargo run --example gltf-roundtrip path/to/asset.gltf
```

The `gltf-tree` example visualises the scene hierarchy of a glTF asset, which is a strict tree of nodes.
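The hierarchy is a strict tree: there are no cycles, and no node has more than one parent. That means a plain recursive depth-first walk is enough to print it, and every node under a given root is reached exactly once. The std-only sketch below shows such a walk. `Node` is a hypothetical stand-in for a glTF node (an optional name plus the indices of its children in the asset's flat node array) and is not the crate's `Node` type. `render_tree` is likewise a hypothetical helper, not the code of the `gltf-tree` example itself.

```rust
/// Hypothetical stand-in for a glTF node: an optional name and the indices
/// of its children in the asset's flat node array.
pub struct Node {
    pub name: Option<String>,
    pub children: Vec<usize>,
}

/// Appends one indented line per node in the subtree rooted at `index`,
/// depth first. Recursion terminates because a strict tree has no cycles.
pub fn render_tree(nodes: &[Node], index: usize, depth: usize, out: &mut Vec<String>) {
    let node = &nodes[index];
    // Unnamed nodes get a placeholder label.
    let label = node.name.as_deref().unwrap_or("<unnamed>");
    out.push(format!("{}{} (node {})", "  ".repeat(depth), label, index));
    for &child in &node.children {
        render_tree(nodes, child, depth + 1, out);
    }
}
```

The `<unnamed>` fallback matters in practice: as noted earlier, the crate ignores names unless the `names` feature is enabled.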

```sh
cargo run --example gltf-tree path/to/asset.gltf
```

Running tests locally requires cloning the glTF-Sample-Models repository first.

```sh
git clone https://github.com/KhronosGroup/glTF-Sample-Models.git
```