This repository hosts the code underlying Geocomputation with R, a book by Robin Lovelace, Jakub Nowosad, and Jannes Muenchow. If you find the contents useful, please cite it as follows:

Lovelace, Robin, Jakub Nowosad and Jannes Muenchow (2019). Geocomputation with R. The R Series. CRC Press.

The first version of the book has been published by CRC Press in the R Series and can be viewed online at bookdown.org. Read the latest version at r.geocompx.org.

Summary of the changes

Since commencing work on the Second Edition in September 2021 much has changed, including:

- Replacement of raster with terra in Chapters 1 to 7 (see commits related to this update here)

- Update of Chapter 7 to include mention of alternative ways of reading-in OSM data in #656

- Refactor build settings so the book builds on Docker images in the geocompr/docker repo

- Improve the experience of using the book in Binder (ideal for trying out the code before installing or updating the necessary R packages), as documented in issue #691 (thanks to yuvipanda)

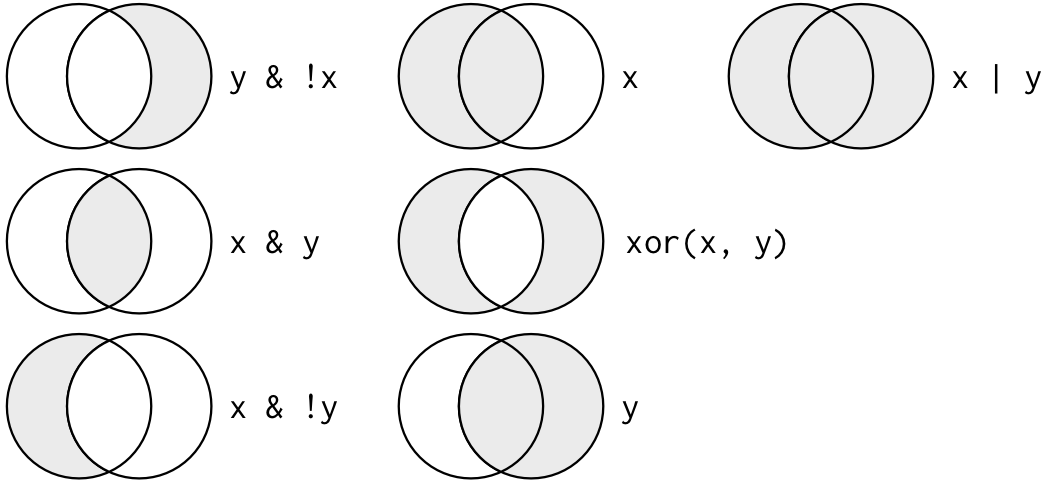

- Improved communication of binary spatial predicates in Chapter 4 (see #675)

- New section on the links between subsetting and clipping (see #698) in Chapter 5

- New section on the dimensionally extended 9 intersection model (DE-9IM)

- New chapter on raster-vector interactions split out from Chapter 5

- New section on the sfheaders package

- New section in Chapter 2 on spherical geometry engines and the s2 package

- Replacement of code based on the old mlr package with code based on the new mlr3 package, as described in a huge pull request

See https://github.com/geocompx/geocompr/compare/1.9…main for a continuously updated summary of the changes to date. At the time of writing (April 2022), more than 10k lines of code/prose have been added, along with lots of refactoring!

Contributions at this stage are very welcome.

We encourage contributions on any part of the book, including:

- improvements to the text, e.g., clarifying unclear sentences, fixing typos (see guidance from Yihui Xie);

- changes to the code, e.g., to do things in a more efficient way;

- suggestions on content (see the project’s issue tracker);

- improvements to and alternative approaches in the Geocompr solutions booklet hosted at r.geocompx.org/solutions (see a blog post on how to update solutions in files such as _01-ex.Rmd here)

See our-style.md for the book’s style.

Many thanks to all contributors to the book so far via GitHub (this list will update automatically): prosoitos, florisvdh, babayoshihiko, katygregg, tibbles-and-tribbles, Lvulis, rsbivand, iod-ine, KiranmayiV, cuixueqin, defuneste, zmbc, erstearns, FlorentBedecarratsNM, dcooley, darrellcarvalho, marcosci, appelmar, MikeJohnPage, eyesofbambi, krystof236, nickbearman, tylerlittlefield, giocomai, KHwong12, LaurieLBaker, MarHer90, mdsumner, pat-s, sdesabbata, ahmohil, ateucher, annakrystalli, andtheWings, kant, gavinsimpson, Himanshuteli, yutannihilation, howardbaek, jimr1603, jbixon13, olyerickson, yvkschaefer, katiejolly, kwhkim, layik, mpaulacaldas, mtennekes, mvl22, ganes1410, richfitz, VLucet, wdearden, yihui, adambhouston, chihinl, cshancock, e-clin, ec-nebi, gregor-d, jasongrahn, p-kono, pokyah, schuetzingit, tim-salabim, tszberkowitz, vlarmet.

During the project we aim to contribute ‘upstream’ to the packages that make geocomputation with R possible. This impact is recorded in our-impact.csv.

The recommended way to get the source code underlying Geocomputation with R on your computer is by cloning the repo. You can do that on any computer with Git installed with the following command:

git clone https://github.com/geocompx/geocompr.git

An alternative approach, which we recommend for people who want to contribute to open source projects hosted on GitHub, is to install the gh CLI tool. From there, cloning a fork of the source code that you can change and share (including with Pull Requests to improve the book) can be done with the following command:

gh repo fork geocompx/geocompr # (gh repo clone geocompx/geocompr # also works)

Both of those methods require you to have Git installed. If not, you can download the book’s source code from the URL https://github.com/geocompx/geocompr/archive/refs/heads/main.zip . Download/unzip the source code from the R command line to increase reproducibility and reduce time spent clicking around:

u = "https://github.com/geocompx/geocompr/archive/refs/heads/main.zip"

f = basename(u)

download.file(u, f) # download the file

unzip(f) # unzip it

file.rename("geocompr-main", "geocompr") # rename the unzipped directory

rstudioapi::openProject("geocompr") # or open the folder in vscode / other IDE

To ease reproducibility, we created the geocompkg package. Install it with the following commands:

install.packages("remotes")

# To reproduce the first Part (chapters 1 to 8):

install.packages('geocompkg', repos = c('https://geocompr.r-universe.dev', 'https://cloud.r-project.org'), dependencies = TRUE, force = TRUE)

Installing geocompkg will also install core packages required for reproducing Part 1 of the book (chapters 1 to 8). Note: you may also need to install system dependencies if you’re running Linux (recommended) or Mac operating systems. You also need to have the remotes package installed, as in the first command above.

To reproduce the book in its entirety, run the following commands (which install additional ‘Suggests’ packages; this may take some time to run!):

# Install packages to fully reproduce book (may take several minutes):

options(repos = c(

geocompx = 'https://geocompx.r-universe.dev',

cran = 'https://cloud.r-project.org/'

))

# From geocompx.r-universe.dev (recommended):

install.packages("geocompkg", dependencies = TRUE)

# Alternatively from GitHub:

remotes::install_github("geocompr/geocompkg", dependencies = TRUE)

You need a recent version of the GDAL, GEOS, PROJ and udunits libraries installed for this to work on Mac and Linux. See the sf package’s README for information on that. After the dependencies have been installed you should be able to build and view a local version of the book with:

# Change this depending on where you have the book code stored:

rstudioapi::openProject("~/Downloads/geocompr")

# or code /location/of/geocompr in the system terminal

# or cd /location/of/geocompr then R in the system terminal, then:

bookdown::render_book("index.Rmd") # to build the book

browseURL("_book/index.html") # to view it

# Or, to serve a live preview of the book and observe the impact of changes:

bookdown::serve_book()

A great feature of VS Code is devcontainers, which allow you to develop in an isolated Docker container. If you have VS Code and the necessary dependencies installed on your computer, you can build Geocomputation with R in a devcontainer as shown below (see #873 for details):

For many people the quickest way to get started with Geocomputation with R is in your web browser via Binder. To see an interactive RStudio Server instance click on the following button, which will open mybinder.org with an R installation that has all the dependencies needed to reproduce the book:

You can also have a play with the repository in RStudio Cloud by clicking on this link (requires log-in):

See the geocompx/docker repository for details.
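Whichever setup you use, it can help to confirm which versions of the geospatial system libraries R is actually linked against. The following is a minimal sketch, assuming the sf package is already installed; `report_geo_libs()` is a hypothetical helper name introduced here for illustration, while `sf::sf_extSoftVersion()` is the sf function that reports the linked library versions. udunits is not covered by this check.

```r
# report_geo_libs() is a hypothetical helper, not part of sf or geocompkg.
# It returns the versions of GDAL, GEOS and PROJ that sf was built against.
report_geo_libs = function() {
  if (!requireNamespace("sf", quietly = TRUE)) {
    message("The sf package is not installed; install it first.")
    return(invisible(NULL))
  }
  versions = sf::sf_extSoftVersion() # named character vector of versions
  # Keep only the libraries named in this README, if they are reported
  versions[intersect(c("GDAL", "GEOS", "PROJ"), names(versions))]
}

report_geo_libs()
```

If the reported versions look old, the sf package’s README is the place to check how to update them on your platform.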

To reduce the book’s dependencies, scripts to be run infrequently to generate input for the book are run on creation of this README.

The additional packages required for this can be installed as follows:

source("code/extra-pkgs.R")

With these additional dependencies installed, you should be able to run the following scripts, which create content for the book that we’ve removed from the main book build to reduce package dependencies and the book’s build time:

source("code/01-cranlogs.R")

source("code/sf-revdep.R")

source("code/09-urban-animation.R")

source("code/09-map-pkgs.R")

Note: the .Rproj file is configured to build a website, not a single page. To reproduce this README use the following command:

rmarkdown::render("README.Rmd", output_format = "github_document", output_file = "README.md")

The main packages used in this book are cited from packages.bib. Other citations are stored online using Zotero.

If you would like to add to the references, please use Zotero, join the open group, and add your citation to the open geocompr library.

We use the following citation key format:

[auth:lower]_[veryshorttitle:lower]_[year]

This can be set from inside Zotero desktop with the Better BibTeX plugin installed (see github.com/retorquere/zotero-better-bibtex) by selecting the following menu options (with the shortcut Alt+E followed by N), and as illustrated in the figure below:

Edit > Preferences > Better Bibtex

Zotero settings: these are useful if you want to add references.

When you export the citations as a .bib file from Zotero, use the Better BibTeX (not BibLaTeX) format.

We use Zotero because it is a powerful open source reference manager that integrates well with citation tools in VS Code and RStudio.
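To make the citation key format above concrete, here is a rough sketch in R of how a key of the form [auth:lower]_[veryshorttitle:lower]_[year] is put together. It only approximates what Better BibTeX does: `make_citation_key()` and its short list of skipped words are assumptions made for illustration, and the plugin applies its own, more complete rules (for example for non-ASCII names).

```r
# make_citation_key() is a hypothetical helper; it approximates, but does not
# reproduce, the key generation done by the Better BibTeX plugin.
make_citation_key = function(author_surname, title, year) {
  # Illustrative list of words to skip; the plugin uses its own, longer list
  skip_words = c("a", "an", "the", "of", "on", "in", "and", "for", "with", "to")
  # Split the title into lower-case words
  words = tolower(unlist(strsplit(title, "[^[:alnum:]]+")))
  words = words[nzchar(words) & !words %in% skip_words]
  # Lower-case surname, keeping ASCII letters only (a simplification)
  auth = gsub("[^a-z]", "", tolower(author_surname))
  # First remaining title word stands in for [veryshorttitle]
  paste(auth, words[1], year, sep = "_")
}

make_citation_key("Lovelace", "Geocomputation with R", 2019)
# returns "lovelace_geocomputation_2019"
```

Keys generated by Zotero itself remain the reference; the sketch is only meant to show what each part of the pattern contributes.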