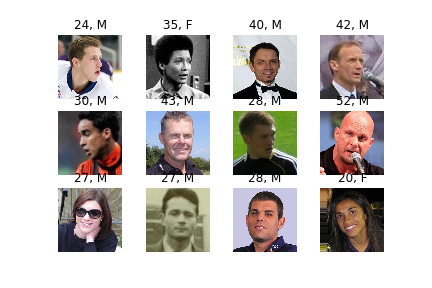

This is a Keras implementation of a CNN for estimating age and gender from a face image [1, 2]. In training, the IMDB-WIKI dataset is used.

- [Aug. 21, 2020] Refactored; use tensorflow.keras

- [Jun. 30, 2019] Another PyTorch-based project was released

- [Nov. 12, 2018] Enable Adam optimizer; seems to be better than momentum SGD

- [Sep. 23, 2018] Demo from directory

- [Aug. 11, 2018] Add age estimation sub-project here

- [Jul. 5, 2018] The UTKFace dataset became available for training.

- [Apr. 10, 2018] Evaluation result on the APPA-REAL dataset was added.

- Python 3.6+

Tested on:

- Ubuntu 16.04, Python 3.6.9, TensorFlow 2.3.0, CUDA 10.01, cuDNN 7.6

Run the demo script (requires a webcam).

You can use the `--image_dir [IMAGE_DIR]` option to use images in the `[IMAGE_DIR]` directory instead.

`python demo.py`

The trained model will be automatically downloaded to the `pretrained_models` directory.

First, download the dataset.

The dataset is downloaded and extracted to the `data` directory by:

`./download.sh`

Secondly, filter out noisy data and serialize the labels into a .csv file.

Please check check_dataset.ipynb for the details of the dataset.

The training data is created by:

`python create_db.py --db imdb`

`usage: create_db.py [-h] [--db DB] [--min_score MIN_SCORE]`

This script cleans up noisy labels and creates the database for training.

optional arguments:

- `-h, --help`: show this help message and exit

- `--db DB`: dataset; wiki or imdb (default: imdb)

- `--min_score MIN_SCORE`: minimum face_score (default: 1.0)

The resulting files with default parameters are included in this repo (`meta/imdb.csv` and `meta/wiki.csv`), so there is no need to run this yourself.
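As a rough illustration of what this kind of filtering involves, the sketch below keeps only records whose face score reaches `min_score` and whose age label is plausible. The record keys (`face_score`, `age`) and the 0 to 100 age range are assumptions made for this example; `create_db.py` defines the actual criteria.

```python
def filter_records(records, min_score=1.0, min_age=0, max_age=100):
    """Keep records with a confident face detection and a plausible age.

    `records` is a list of dicts with the hypothetical keys
    `face_score` and `age` (assumed names, not taken from the repo).
    """
    kept = []
    for rec in records:
        if rec["face_score"] < min_score:
            continue  # face detector was not confident enough
        if not (min_age <= rec["age"] <= max_age):
            continue  # age label is probably noise
        kept.append(rec)
    return kept


# Made-up sample records, for illustration only.
samples = [
    {"name": "a.jpg", "face_score": 3.2, "age": 31},
    {"name": "b.jpg", "face_score": 0.4, "age": 25},   # low score: dropped
    {"name": "c.jpg", "face_score": 2.1, "age": 140},  # implausible age: dropped
]
print([rec["name"] for rec in filter_records(samples)])
```

Raising `min_score` trades dataset size for cleaner labels, which is the purpose of the `--min_score` option.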

First, download the images from the website of the UTKFace dataset.

`UTKFace.tar.gz` can be downloaded from the Aligned&Cropped Faces link in the Datasets section.

Then, extract the archive.

`tar zxf UTKFace.tar.gz UTKFace`

Finally, run the following script to create the training data:

`python create_db_utkface.py -i UTKFace -o UTKFace.mat`

[NOTE]: Because the face images in the UTKFace dataset are tightly cropped (there is no margin around the face region), faces should also be tightly cropped in `demo.py` if weights trained on the UTKFace dataset are used.

Please set the margin argument to 0 for tight cropping:

`python demo.py --weight_file WEIGHT_FILE --margin 0`

The pre-trained weights can be found here.
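To make the effect of the margin argument concrete, here is a minimal sketch of how a detected face box might be enlarged by a relative margin and clipped to the image. The function name `expand_box` and the exact arithmetic are assumptions for illustration, not necessarily what `demo.py` does.

```python
def expand_box(x1, y1, x2, y2, margin, img_w, img_h):
    """Enlarge a face box by `margin` times its size on every side.

    Hypothetical helper: coordinates are pixel indices, and the result
    is clipped so it stays inside an image of size img_w x img_h.
    """
    w = x2 - x1
    h = y2 - y1
    nx1 = max(int(round(x1 - margin * w)), 0)
    ny1 = max(int(round(y1 - margin * h)), 0)
    nx2 = min(int(round(x2 + margin * w)), img_w - 1)
    ny2 = min(int(round(y2 + margin * h)), img_h - 1)
    return nx1, ny1, nx2, ny2


# A margin of 0.4 adds 40% of the box size on each side.
print(expand_box(100, 100, 200, 200, 0.4, 640, 480))
# A margin of 0 keeps the tight crop, matching UTKFace-style training data.
print(expand_box(100, 100, 200, 200, 0.0, 640, 480))
```

The point of matching the margin to the training data is that the network sees faces at the same scale and with the same amount of context at inference time as during training.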

Train the model architecture using the training data created above:

`python train.py`

Trained weight files are stored as `checkpoints/*.hdf5` at each epoch in which the validation loss reaches a new minimum.

You can change the default setting(s) from the command line:

`python train.py model.model_name=EfficientNetB3 model.batch_size=64`

Available models can be found here.
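The `key.subkey=value` arguments above address entries in a nested configuration. The sketch below shows one way such dotted overrides could be applied to a nested dictionary. It is only an illustration of the idea: the helper names and the default values are hypothetical, and the training script's actual configuration handling may differ.

```python
def parse_value(raw):
    """Interpret a raw string as int or float when possible, else keep it."""
    for cast in (int, float):
        try:
            return cast(raw)
        except ValueError:
            pass
    return raw


def apply_overrides(config, overrides):
    """Apply 'a.b=value' style overrides to a nested dict, in place."""
    for item in overrides:
        dotted_key, raw_value = item.split("=", 1)
        *parents, leaf = dotted_key.split(".")
        node = config
        for name in parents:
            node = node.setdefault(name, {})  # create missing levels
        node[leaf] = parse_value(raw_value)
    return config


# Placeholder defaults, not the repository's real configuration.
config = {"model": {"model_name": "PlaceholderModel", "batch_size": 32}}
apply_overrides(config, ["model.model_name=EfficientNetB3", "model.batch_size=64"])
print(config)
```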

The training logs can be visualized via wandb as follows:

- create an account from here

- create a new project in wandb (e.g. "age-gender-estimation")

- run `wandb login` in a terminal and authorize

- run the training script with the `wandb.project=age-gender-estimation` argument

- check the dashboard!

`python demo.py`

`usage: demo.py [-h] [--weight_file WEIGHT_FILE] [--margin MARGIN] [--image_dir IMAGE_DIR]`

This script detects faces from webcam input and estimates age and gender for the detected faces.

optional arguments:

- `-h, --help`: show this help message and exit

- `--weight_file WEIGHT_FILE`: path to weight file (e.g. weights.28-3.73.hdf5) (default: None)

- `--margin MARGIN`: margin around detected face for age-gender estimation (default: 0.4)

- `--image_dir IMAGE_DIR`: target image directory; if set, images in image_dir are used instead of webcam (default: None)

Please use the best model among `checkpoints/*.hdf5` for `WEIGHT_FILE` if you use your own trained models.
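Picking the best checkpoint can be automated if the file names encode the validation loss. The sketch below assumes the pattern `weights.<epoch>-<val_loss>.hdf5`, inferred from the example name `weights.28-3.73.hdf5` in the help text. That interpretation of the two numbers is an assumption, so check it against your own checkpoint names before relying on it.

```python
import re

# Assumed pattern: weights.<epoch>-<val_loss>.hdf5, e.g. weights.28-3.73.hdf5
CHECKPOINT_PATTERN = re.compile(r"weights\.(\d+)-(\d+(?:\.\d+)?)\.hdf5$")


def best_checkpoint(filenames):
    """Return the name with the lowest encoded loss, or None if none match."""
    best_name, best_loss = None, float("inf")
    for name in filenames:
        match = CHECKPOINT_PATTERN.search(str(name))
        if match is None:
            continue  # skip files that do not follow the assumed pattern
        loss = float(match.group(2))
        if loss < best_loss:
            best_name, best_loss = name, loss
    return best_name


# Made-up file names, for illustration only.
names = [
    "weights.05-4.10.hdf5",
    "weights.12-3.95.hdf5",
    "weights.28-3.73.hdf5",
    "notes.txt",
]
print(best_checkpoint(names))
```

With real files, the list could come from `pathlib.Path("checkpoints").glob("*.hdf5")`, and the result can be passed to `--weight_file`.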

Trained on imdb, tested on wiki.

You can evaluate a trained model on the APPA-REAL (validation) dataset by:

`python evaluate_appa_real.py --weight_file WEIGHT_FILE`

Please refer to here for the details of the APPA-REAL dataset.

The results of the trained model are:

MAE Apparent: 5.33

MAE Real: 6.22

The best result reported in [5] is:

MAE Apparent: 4.08

MAE Real: 5.30

Please note that the above result was achieved by finetuning the model using the training set of the APPA-REAL dataset.
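For reference, MAE here is the mean absolute error between predicted ages and the ground-truth labels, computed once against the apparent-age labels and once against the real-age labels. A minimal sketch follows; the numbers are made up for illustration and are not taken from the APPA-REAL dataset or from `evaluate_appa_real.py`.

```python
def mean_absolute_error(predicted, target):
    """Average of |prediction - label| over all samples."""
    if len(predicted) != len(target):
        raise ValueError("predicted and target must have the same length")
    if not predicted:
        raise ValueError("at least one sample is required")
    return sum(abs(p - t) for p, t in zip(predicted, target)) / len(predicted)


# Made-up values: one prediction per face, two kinds of labels.
predicted_ages = [30.0, 45.0, 22.0, 60.0]
apparent_ages = [28.0, 50.0, 22.0, 61.0]  # hypothetical apparent-age labels
real_ages = [34.0, 47.0, 20.0, 64.0]      # hypothetical real-age labels

print("MAE Apparent:", mean_absolute_error(predicted_ages, apparent_ages))
print("MAE Real:", mean_absolute_error(predicted_ages, real_ages))
```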

This project is released under the MIT license. However, the IMDB-WIKI dataset used in this project is originally provided under the following conditions.

Please notice that this dataset is made available for academic research purpose only. All the images are collected from the Internet, and the copyright belongs to the original owners. If any of the images belongs to you and you would like it removed, please kindly inform us, we will remove it from our dataset immediately.

Therefore, the pretrained model(s) included in this repository are restricted by these conditions (available for academic research purposes only).

[1] R. Rothe, R. Timofte, and L. V. Gool, "DEX: Deep EXpectation of apparent age from a single image," in Proc. of ICCV, 2015.

[2] R. Rothe, R. Timofte, and L. V. Gool, "Deep expectation of real and apparent age from a single image without facial landmarks," in IJCV, 2016.

[3] H. Zhang, M. Cisse, Y. N. Dauphin, and D. Lopez-Paz, "mixup: Beyond Empirical Risk Minimization," in arXiv:1710.09412, 2017.

[4] Z. Zhong, L. Zheng, G. Kang, S. Li, and Y. Yang, "Random Erasing Data Augmentation," in arXiv:1708.04896, 2017.

[5] E. Agustsson, R. Timofte, S. Escalera, X. Baro, I. Guyon, and R. Rothe, "Apparent and real age estimation in still images with deep residual regressors on APPA-REAL database," in Proc. of FG, 2017.