PyPOTS version: 0.0.6

Running test cases for BRITS...

Model initialized successfully. Number of the trainable parameters: 580976

ERunning test cases for BRITS...

Model initialized successfully. Number of the trainable parameters: 580976

ERunning test cases for LOCF...

LOCF test_MAE: 0.1712224306027283

.Running test cases for LOCF...

.Running test cases for SAITS...

Model initialized successfully. Number of the trainable parameters: 1332704

Exception: expected self and mask to be on the same device, but got mask on cpu and self on cuda:0

ERunning test cases for SAITS...

Model initialized successfully. Number of the trainable parameters: 1332704

Exception: expected self and mask to be on the same device, but got mask on cpu and self on cuda:0

ERunning test cases for Transformer...

Model initialized successfully. Number of the trainable parameters: 666122

epoch 0: training loss 0.7681, validating loss 0.2941

epoch 1: training loss 0.4731, validating loss 0.2395

epoch 2: training loss 0.4235, validating loss 0.2069

epoch 3: training loss 0.3781, validating loss 0.1914

epoch 4: training loss 0.3530, validating loss 0.1837

ERunning test cases for Transformer...

Model initialized successfully. Number of the trainable parameters: 666122

epoch 0: training loss 0.7826, validating loss 0.2820

epoch 1: training loss 0.4687, validating loss 0.2352

epoch 2: training loss 0.4188, validating loss 0.2132

epoch 3: training loss 0.3857, validating loss 0.1977

epoch 4: training loss 0.3604, validating loss 0.1945

E

======================================================================

ERROR: test_impute (pypots.tests.test_imputation.TestBRITS)

----------------------------------------------------------------------

Traceback (most recent call last):

File "mydirs(...)/python3.9/site-packages/pypots/tests/test_imputation.py", line 99, in setUp

self.brits.fit(self.train_X, self.val_X)

File "mydirs(...)/python3.9/site-packages/pypots/imputation/brits.py", line 494, in fit

training_set = DatasetForBRITS(train_X) # time_gaps is necessary for BRITS

File "mydirs(...)/python3.9/site-packages/pypots/data/dataset_for_brits.py", line 62, in __init__

forward_delta = parse_delta(forward_missing_mask)

File "mydirs(...)/python3.9/site-packages/pypots/data/dataset_for_brits.py", line 36, in parse_delta

delta.append(torch.ones(1, n_features) + (1 - m_mask[step]) * delta[-1])

RuntimeError: Expected all tensors to be on the same device, but found at least two devices, cuda:0 and cpu!

======================================================================

ERROR: test_parameters (pypots.tests.test_imputation.TestBRITS)

----------------------------------------------------------------------

Traceback (most recent call last):

File "mydirs(...)/python3.9/site-packages/pypots/tests/test_imputation.py", line 99, in setUp

self.brits.fit(self.train_X, self.val_X)

File "mydirs(...)/python3.9/site-packages/pypots/imputation/brits.py", line 494, in fit

training_set = DatasetForBRITS(train_X) # time_gaps is necessary for BRITS

File "mydirs(...)/python3.9/site-packages/pypots/data/dataset_for_brits.py", line 62, in __init__

forward_delta = parse_delta(forward_missing_mask)

File "mydirs(...)/python3.9/site-packages/pypots/data/dataset_for_brits.py", line 36, in parse_delta

delta.append(torch.ones(1, n_features) + (1 - m_mask[step]) * delta[-1])

RuntimeError: Expected all tensors to be on the same device, but found at least two devices, cuda:0 and cpu!
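Both TestBRITS errors stop at the same line in `parse_delta`. There, `torch.ones(1, n_features)` is allocated on the CPU by default, while `m_mask` appears to already be on `cuda:0`. The usual fix is to create every new tensor on the mask's device. Below is a minimal sketch, not PyPOTS's actual `parse_delta`. It assumes a float mask of shape `[n_steps, n_features]` (1 = observed, 0 = missing) and unit spacing between steps, and it follows the BRITS paper's time-gap definition. The library's own indexing may differ.

```python
import torch


def parse_delta(missing_mask: torch.Tensor) -> torch.Tensor:
    """Device-safe sketch of BRITS-style time gaps for a single sample.

    missing_mask: [n_steps, n_features] float tensor, 1 = observed, 0 = missing.
    Assumes a unit interval between consecutive time steps.
    """
    n_steps, n_features = missing_mask.shape
    # Allocate every new tensor on the mask's device so CPU and CUDA
    # tensors are never mixed in the arithmetic below.
    device = missing_mask.device
    delta = [torch.zeros(1, n_features, device=device)]
    for step in range(1, n_steps):
        # The gap keeps growing while the previous value was missing
        # and resets to one step right after an observation.
        prev_missing = 1 - missing_mask[step - 1]
        delta.append(torch.ones(1, n_features, device=device) + prev_missing * delta[-1])
    return torch.cat(delta, dim=0)
```

With a mask of `[[1, 0], [1, 0], [0, 1]]`, this returns gaps of `[[0, 0], [1, 1], [1, 2]]`. The result lands on whatever device the mask is on.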

======================================================================

ERROR: test_impute (pypots.tests.test_imputation.TestSAITS)

----------------------------------------------------------------------

Traceback (most recent call last):

File "mydirs(...)/python3.9/site-packages/pypots/imputation/base.py", line 83, in _train_model

results = self.model.forward(inputs)

File "mydirs(...)/python3.9/site-packages/pypots/imputation/saits.py", line 95, in forward

imputed_data, [X_tilde_1, X_tilde_2, X_tilde_3] = self.impute(inputs)

File "mydirs(...)/python3.9/site-packages/pypots/imputation/saits.py", line 62, in impute

enc_output, _ = encoder_layer(enc_output)

File "mydirs(...)/python3.9/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl

return forward_call(*input, **kwargs)

File "mydirs(...)/python3.9/site-packages/pypots/imputation/transformer.py", line 122, in forward

enc_output, attn_weights = self.slf_attn(enc_input, enc_input, enc_input, attn_mask=mask_time)

File "mydirs(...)/python3.9/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl

return forward_call(*input, **kwargs)

File "mydirs(...)/python3.9/site-packages/pypots/imputation/transformer.py", line 72, in forward

v, attn_weights = self.attention(q, k, v, attn_mask)

File "mydirs(...)/python3.9/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl

return forward_call(*input, **kwargs)

File "mydirs(...)/python3.9/site-packages/pypots/imputation/transformer.py", line 32, in forward

attn = attn.masked_fill(attn_mask == 1, -1e9)

RuntimeError: expected self and mask to be on the same device, but got mask on cpu and self on cuda:0

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "mydirs(...)/python3.9/site-packages/pypots/tests/test_imputation.py", line 35, in setUp

self.saits.fit(self.train_X, self.val_X)

File "mydirs(...)/python3.9/site-packages/pypots/imputation/saits.py", line 171, in fit

self._train_model(training_loader, val_loader, val_X_intact, val_X_indicating_mask)

File "mydirs(...)/python3.9/site-packages/pypots/imputation/base.py", line 123, in _train_model

raise RuntimeError('Training got interrupted. Model was not get trained. Please try fit() again.')

RuntimeError: Training got interrupted. Model was not get trained. Please try fit() again.

======================================================================

ERROR: test_parameters (pypots.tests.test_imputation.TestSAITS)

----------------------------------------------------------------------

Traceback (most recent call last):

File "mydirs(...)/python3.9/site-packages/pypots/imputation/base.py", line 83, in _train_model

results = self.model.forward(inputs)

File "mydirs(...)/python3.9/site-packages/pypots/imputation/saits.py", line 95, in forward

imputed_data, [X_tilde_1, X_tilde_2, X_tilde_3] = self.impute(inputs)

File "mydirs(...)/python3.9/site-packages/pypots/imputation/saits.py", line 62, in impute

enc_output, _ = encoder_layer(enc_output)

File "mydirs(...)/python3.9/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl

return forward_call(*input, **kwargs)

File "mydirs(...)/python3.9/site-packages/pypots/imputation/transformer.py", line 122, in forward

enc_output, attn_weights = self.slf_attn(enc_input, enc_input, enc_input, attn_mask=mask_time)

File "mydirs(...)/python3.9/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl

return forward_call(*input, **kwargs)

File "mydirs(...)/python3.9/site-packages/pypots/imputation/transformer.py", line 72, in forward

v, attn_weights = self.attention(q, k, v, attn_mask)

File "mydirs(...)/python3.9/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl

return forward_call(*input, **kwargs)

File "mydirs(...)/python3.9/site-packages/pypots/imputation/transformer.py", line 32, in forward

attn = attn.masked_fill(attn_mask == 1, -1e9)

RuntimeError: expected self and mask to be on the same device, but got mask on cpu and self on cuda:0

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "mydirs(...)/python3.9/site-packages/pypots/tests/test_imputation.py", line 35, in setUp

self.saits.fit(self.train_X, self.val_X)

File "mydirs(...)/python3.9/site-packages/pypots/imputation/saits.py", line 171, in fit

self._train_model(training_loader, val_loader, val_X_intact, val_X_indicating_mask)

File "mydirs(...)/python3.9/site-packages/pypots/imputation/base.py", line 123, in _train_model

raise RuntimeError('Training got interrupted. Model was not get trained. Please try fit() again.')

RuntimeError: Training got interrupted. Model was not get trained. Please try fit() again.
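The TestSAITS failures start one level down. `attn.masked_fill(attn_mask == 1, -1e9)` receives attention scores on `cuda:0` but a mask (`mask_time`) that still lives on the CPU. The second "Training got interrupted" error is only the wrapper re-raising that failure. A minimal sketch of masked scaled dot-product attention that moves the mask next to the scores before filling is shown below. The function name `masked_attention` and the tensor shapes are assumptions for illustration, not the PyPOTS code.

```python
import torch
import torch.nn.functional as F


def masked_attention(q, k, v, attn_mask=None):
    """Scaled dot-product attention tolerant of a mask on another device.

    q, k, v: [batch, n_heads, n_steps, d_k]
    attn_mask: [n_steps, n_steps]; positions equal to 1 are blocked.
    """
    temperature = q.size(-1) ** 0.5
    attn = torch.matmul(q / temperature, k.transpose(-2, -1))
    if attn_mask is not None:
        # .to() copies the mask to the scores' device (a no-op if it is
        # already there), so masked_fill never sees mixed devices.
        attn = attn.masked_fill(attn_mask.to(attn.device) == 1, -1e9)
    attn = F.softmax(attn, dim=-1)
    return torch.matmul(attn, v), attn
```

Passing `torch.eye(n_steps)` as `attn_mask` blocks each step from attending to itself, which is the setting SAITS's diagonally-masked self-attention targets. Creating the mask once with `device=` set avoids a copy on every forward pass. Calling `.to(attn.device)` is the more defensive option.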

======================================================================

ERROR: test_impute (pypots.tests.test_imputation.TestTransformer)

----------------------------------------------------------------------

Traceback (most recent call last):

File "mydirs(...)/python3.9/site-packages/pypots/tests/test_imputation.py", line 68, in setUp

self.transformer.fit(self.train_X, self.val_X)

File "mydirs(...)/python3.9/site-packages/pypots/imputation/transformer.py", line 257, in fit

self._train_model(training_loader, val_loader, val_X_intact, val_X_indicating_mask)

File "mydirs(...)/python3.9/site-packages/pypots/imputation/base.py", line 129, in _train_model

if np.equal(self.best_loss, float('inf')):

File "mydirs(...)/python3.9/site-packages/torch/_tensor.py", line 732, in __array__

return self.numpy()

TypeError: can't convert cuda:0 device type tensor to numpy. Use Tensor.cpu() to copy the tensor to host memory first.

======================================================================

ERROR: test_parameters (pypots.tests.test_imputation.TestTransformer)

----------------------------------------------------------------------

Traceback (most recent call last):

File "mydirs(...)/python3.9/site-packages/pypots/tests/test_imputation.py", line 68, in setUp

self.transformer.fit(self.train_X, self.val_X)

File "mydirs(...)/python3.9/site-packages/pypots/imputation/transformer.py", line 257, in fit

self._train_model(training_loader, val_loader, val_X_intact, val_X_indicating_mask)

File "mydirs(...)/python3.9/site-packages/pypots/imputation/base.py", line 129, in _train_model

if np.equal(self.best_loss, float('inf')):

File "mydirs(...)/python3.9/site-packages/torch/_tensor.py", line 732, in __array__

return self.numpy()

TypeError: can't convert cuda:0 device type tensor to numpy. Use Tensor.cpu() to copy the tensor to host memory first.

----------------------------------------------------------------------

Ran 8 tests in 20.239s

FAILED (errors=6)
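The two TestTransformer errors have a different cause. Training finishes all five epochs, and then `np.equal(self.best_loss, float('inf'))` makes NumPy convert `self.best_loss`, which is a tensor on `cuda:0`. As the traceback says, that conversion only works for CPU tensors. One way around it is to keep the best loss as a plain Python float via `.item()`. Another is to keep it as a tensor and test it with `torch.isinf`. A minimal sketch of the first option is below. `BestLossTracker` is a hypothetical helper, not part of PyPOTS.

```python
import math

import torch


class BestLossTracker:
    """Hypothetical helper: keeps the best validation loss as a plain float."""

    def __init__(self):
        self.best_loss = float("inf")

    def update(self, val_loss) -> bool:
        """Record a new validation loss; return True if it is the best so far."""
        # .item() copies a one-element tensor (CPU or CUDA) to a Python float,
        # so later comparisons never need NumPy or a device transfer.
        value = val_loss.item() if isinstance(val_loss, torch.Tensor) else float(val_loss)
        if value < self.best_loss:
            self.best_loss = value
            return True
        return False

    def has_trained(self) -> bool:
        # Plain-float form of the inf check from the traceback
        # (inverted: True once any finite loss has been recorded).
        return not math.isinf(self.best_loss)
```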

PyPOTS Research Team (pypots.com)

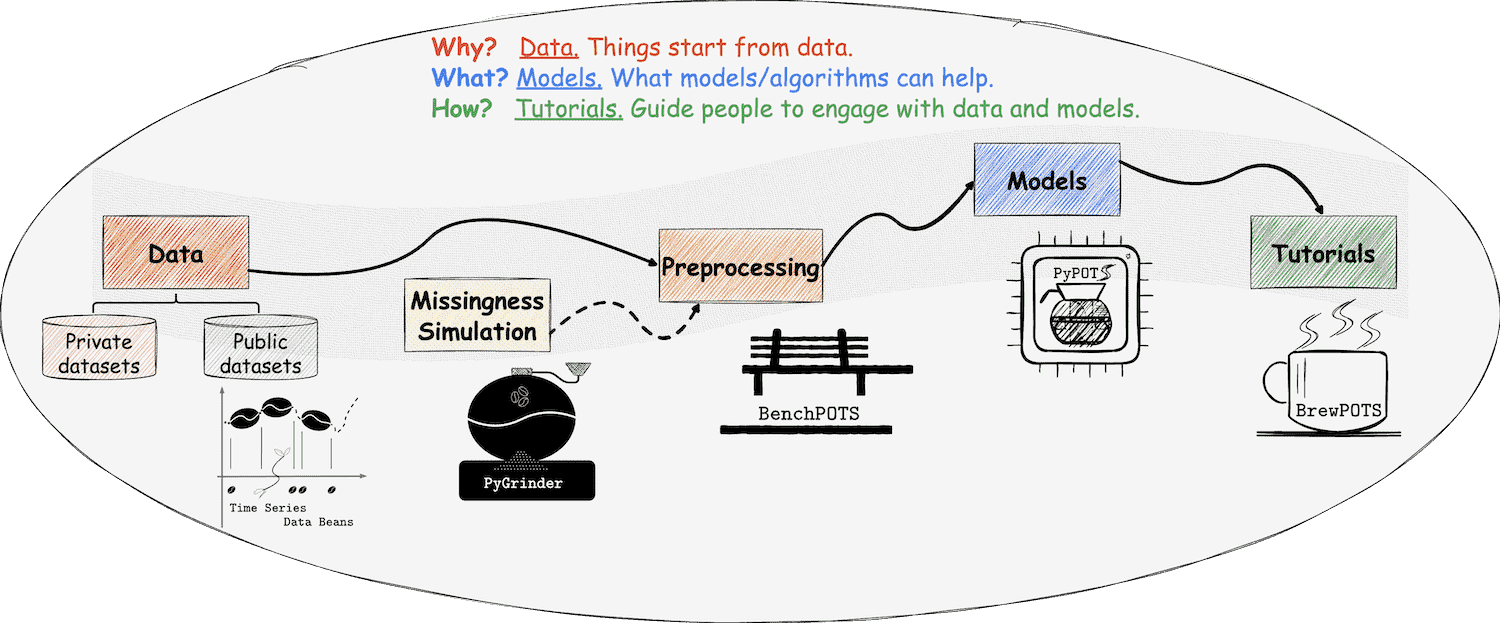

We aim to build a comprehensive Python toolkit ecosystem for POTS (partially-observed time series) modeling, including data preprocessing, neural net training, and benchmarking.

Stars🌟 on our repos are, of course, also very welcome if you like what we're trying to achieve with PyPOTS.