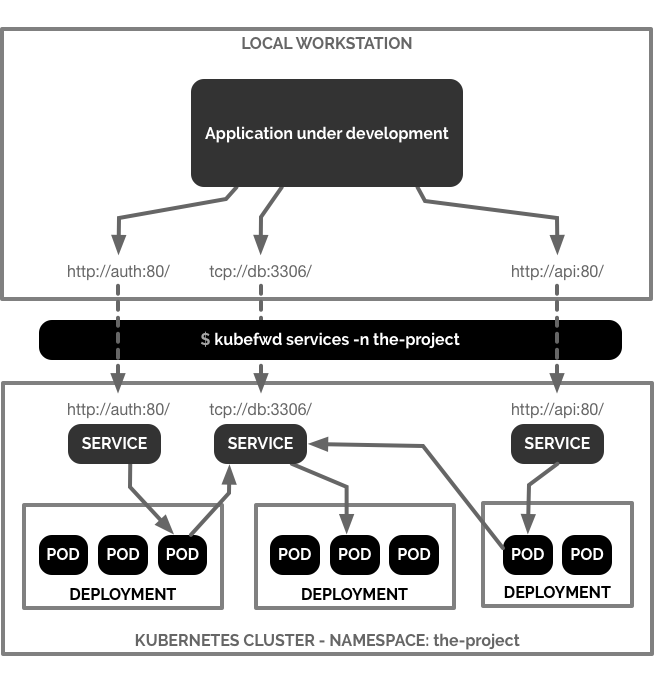

Kubernetes port forwarding for local development.

NOTE: Accepting pull requests for bug fixes, tests, and documentation only.

Read Kubernetes Port Forwarding for Local Development for background and a detailed guide to kubefwd. Follow Craig Johnston on Twitter for project updates.

kubefwd is a command-line utility built to port forward multiple services within one or more namespaces on one or more Kubernetes clusters. kubefwd uses the same port exposed by the service and forwards it from a loopback IP address on your local workstation. kubefwd temporarily adds domain entries to your /etc/hosts file with the service names it forwards.

When working on our local workstation, my team and I often build applications that access services through their service names and ports within a Kubernetes namespace. kubefwd allows us to develop locally with services available as they would be in the cluster.

Tested directly on macOS and Linux-based Docker containers.

kubefwd assumes you have kubectl installed and configured with access to a Kubernetes cluster. kubefwd uses the kubectl current context. It does not invoke kubectl itself, but it needs kubectl's configuration to access the cluster.
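These prerequisites can also be checked from a script before kubefwd starts. The following is a minimal sketch and not part of kubefwd: the function names `have_cmd` and `preflight` are hypothetical, and a POSIX shell is assumed.

```shell
#!/bin/sh
# Hypothetical helpers (not part of kubefwd) that check the prerequisites
# described above before kubefwd is started.

# have_cmd NAME: succeed if NAME is found on PATH.
have_cmd() {
  command -v "$1" >/dev/null 2>&1
}

# preflight: succeed only if kubectl is installed and a current context is set.
preflight() {
  if ! have_cmd kubectl; then
    echo "kubectl not found on PATH" >&2
    return 1
  fi
  # kubectl exits non-zero when no current context is configured.
  if ! ctx=$(kubectl config current-context 2>/dev/null) || [ -z "$ctx" ]; then
    echo "no current kubectl context is set" >&2
    return 1
  fi
  echo "using context: $ctx"
}
```

A wrapper script could call `preflight || exit 1` before invoking kubefwd.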

Ensure you have a context by running:

    kubectl config current-context

If you are running macOS and use Homebrew, you can install kubefwd directly from the txn2 tap:

    brew install txn2/tap/kubefwd

To upgrade:

    brew upgrade kubefwd

On Windows, you can install kubefwd with Scoop:

    scoop install kubefwd

To upgrade:

    scoop update kubefwd

Forward all services from the namespace the-project to a Docker container named the-project:

    docker run -it --rm --privileged --name the-project \
        -v "$HOME/.kube/":/root/.kube/ \
        txn2/kubefwd services -n the-project

Execute a curl call to an Elasticsearch service in your Kubernetes cluster:

    docker exec the-project curl -s elasticsearch:9200

Check out the releases section on GitHub for alternative binaries.

Fork kubefwd and build a custom version. Accepting pull requests for bug fixes, tests, stability and compatibility enhancements, and documentation only.

Forward all services for the namespace the-project. kubefwd finds the first Pod associated with each Kubernetes Service in the namespace and port forwards it, based on the Service spec, to a local IP address and port. A domain name pointing to the local IP is added to your /etc/hosts file.

Forwarding of headless Services is supported: kubefwd forwards all Pods for a headless Service. Namespace-level Service monitoring is also supported: when a Service is created or deleted in the namespace, kubefwd automatically starts or stops forwarding it. Pod-level monitoring is supported as well: when a forwarded Pod is deleted (for example, during a Deployment update), forwarding for the Service that the Pod belongs to is automatically restarted.

    sudo kubefwd svc -n the-project

Forward all services in the namespace the-project that are labeled system: wx:

    sudo kubefwd svc -l system=wx -n the-project

Forward a single service named my-service in the namespace the-project:

    sudo kubefwd svc -n the-project -f metadata.name=my-service
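Once a service such as my-service is forwarded, the hosts entry that kubefwd adds can be checked from a script. The following is a minimal sketch, assuming the entry follows the usual hosts-file layout of an IP address followed by one or more names. `hosts_ip_for` and `is_loopback` are hypothetical helper names, not part of kubefwd.

```shell
#!/bin/sh
# hosts_ip_for NAME [FILE]: print the IP address that FILE (default /etc/hosts)
# maps to NAME, using the first matching entry. Prints nothing if not found.
hosts_ip_for() {
  awk -v name="$1" '
    {
      sub(/#.*/, "")              # drop comments
      for (i = 2; i <= NF; i++)   # field 1 is the IP, the rest are names
        if ($i == name) { print $1; exit }
    }
  ' "${2:-/etc/hosts}"
}

# is_loopback IP: succeed if IP is in the IPv4 loopback range 127.0.0.0/8.
is_loopback() {
  case $1 in
    127.*.*.*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `ip=$(hosts_ip_for my-service) && is_loopback "$ip"` succeeds only if the hosts file maps my-service to a loopback address.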

Forward more than one service using the in clause:

    sudo kubefwd svc -l "app in (app1, app2)"

Display help for the services command:

    $ kubefwd svc --help
    INFO[00:00:48]  _          _           __             _
    INFO[00:00:48] | | ___   _| |__   ___ / _|_      ____| |
    INFO[00:00:48] | |/ / | | | '_ \ / _ \ |_\ \ /\ / / _  |
    INFO[00:00:48] |   <| |_| | |_) |  __/  _|\ V  V / (_| |
    INFO[00:00:48] |_|\_\\__,_|_.__/ \___|_|   \_/\_/ \__,_|
    INFO[00:00:48]
    INFO[00:00:48] Version 0.0.0
    INFO[00:00:48] https://github.com/txn2/kubefwd
    INFO[00:00:48]
    Forward multiple Kubernetes services from one or more namespaces. Filter services with selector.

    Usage:
      kubefwd services [flags]

    Aliases:
      services, svcs, svc

    Examples:
      kubefwd svc -n the-project
      kubefwd svc -n the-project -l app=wx,component=api
      kubefwd svc -n default -l "app in (ws, api)"
      kubefwd svc -n default -n the-project
      kubefwd svc -n default -d internal.example.com
      kubefwd svc -n the-project -x prod-cluster
      kubefwd svc -n the-project -m 80:8080 -m 443:1443
      kubefwd svc -n the-project -z path/to/conf.yml
      kubefwd svc -n the-project -r svc.ns:127.3.3.1
      kubefwd svc --all-namespaces

    Flags:
      -A, --all-namespaces          Enable --all-namespaces option like kubectl.
      -x, --context strings         specify a context to override the current context
      -d, --domain string           Append a pseudo domain name to generated host names.
      -f, --field-selector string   Field selector to filter on; supports '=', '==', and '!=' (e.g. -f metadata.name=service-name).
      -z, --fwd-conf string         Define an IP reservation configuration
      -h, --help                    help for services
      -c, --kubeconfig string       absolute path to a kubectl config file
      -m, --mapping strings         Specify a port mapping. Specify multiple mapping by duplicating this argument.
      -n, --namespace strings       Specify a namespace. Specify multiple namespaces by duplicating this argument.
      -r, --reserve strings         Specify an IP reservation. Specify multiple reservations by duplicating this argument.
      -l, --selector string         Selector (label query) to filter on; supports '=', '==', and '!=' (e.g. -l key1=value1,key2=value2).
      -v, --verbose                 Verbose output.

Apache License 2.0

Open source utility by Craig Johnston, imti blog and sponsored by Deasil Works, Inc.

Please check out my book Advanced Platform Development with Kubernetes: Enabling Data Management, the Internet of Things, Blockchain, and Machine Learning.

Source code from the book Advanced Platform Development with Kubernetes: Enabling Data Management, the Internet of Things, Blockchain, and Machine Learning by Craig Johnston (@cjimti) ISBN 978-1-4842-5610-7 Apress; 1st ed. edition (September, 2020)

Read my blog post Advanced Platform Development with Kubernetes for more info and background on the book.

Follow me on Twitter: @cjimti (Craig Johnston)

UNICEF is on the ground helping Ukraine's children, please donate to https://www.unicefusa.org/ <- "like" this project by donating.