❔Question

Hi there

I'm completely new to PyTorch and YOLO, so this is probably a very basic question.

I'm trying to load the yolo-pose model weights with this code:

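```python
import torch

# Pretrained YOLOv5s from PyTorch Hub
model = torch.hub.load('ultralytics/yolov5', 'yolov5s')

# Raw string so the backslashes in the Windows path are not treated as escapes
weight = torch.load(r'C:\Pose\yolov5s.pt')

model.load_state_dict(weight["model"].state_dict())
```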
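For context on what the last line compares: `load_state_dict` matches tensors by name, and PyTorch names every parameter and buffer by its dotted attribute path from the module the call is made on. The sketch below imitates that naming with the standard library only. The nested dicts are a hypothetical stand-in for modules, shaped like one YOLOv5 `Conv` block (`conv` plus `bn`), and `flatten_keys` is a hypothetical helper, not a PyTorch function. Wrapping the same tree under two more attributes called `model` changes every name.

```python
# Sketch only: nested dicts stand in for nn.Module objects (hypothetical, no torch needed).


def flatten_keys(tree, prefix=""):
    """Return dotted names for every leaf, the way state_dict() names tensors."""
    keys = []
    for name, child in tree.items():
        path = prefix + name
        if isinstance(child, dict):
            keys.extend(flatten_keys(child, path + "."))
        else:
            keys.append(path)
    return keys


# Stand-in for a detection model whose layer 0 is a Conv block (conv + BatchNorm).
detection_model = {"model": {"0": {"conv": {"weight": None}, "bn": {"weight": None}}}}

# The same model nested two more levels deep under attributes named "model".
wrapped = {"model": {"model": detection_model}}

print(flatten_keys(detection_model))  # ['model.0.conv.weight', 'model.0.bn.weight']
print(flatten_keys(wrapped))  # ['model.model.model.0.conv.weight', 'model.model.model.0.bn.weight']
```

Under this assumption, a state dict taken from the unwrapped model and a wrapper such as `AutoShape` (the class named in the error) would disagree on every key, even if the layers themselves were identical.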

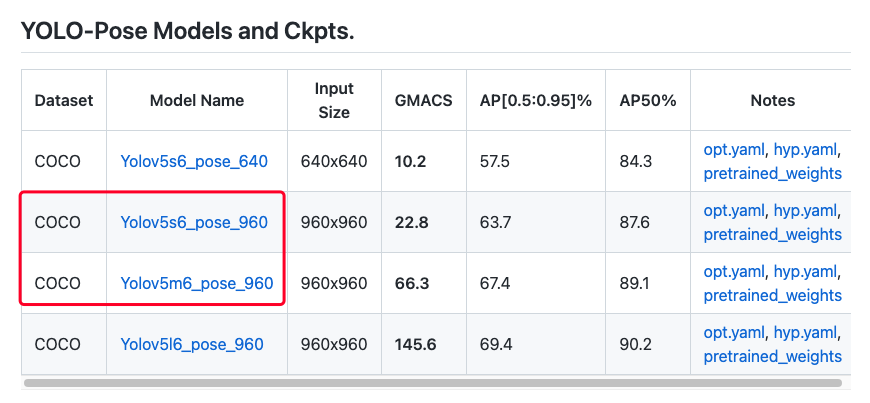

I downloaded the weights from: https://github.com/TexasInstruments/edgeai-yolov5/tree/yolo-pose/weights

But I got this error:

```
Traceback (most recent call last):
  File "C:\MVP\yolov5\venv\lib\site-packages\IPython\core\interactiveshell.py", line 3398, in run_code
    exec(code_obj, self.user_global_ns, self.user_ns)
  File "", line 3, in <cell line: 3>
    model.load_state_dict(weight["model"].state_dict())
  File "C:\MVP\yolov5\venv\lib\site-packages\torch\nn\modules\module.py", line 1497, in load_state_dict
    raise RuntimeError('Error(s) in loading state_dict for {}:\n\t{}'.format(
RuntimeError: Error(s) in loading state_dict for AutoShape:
Missing key(s) in state_dict: "model.model.model.0.conv.weight", "model.model.model.0.conv.bias", "model.model.model.1.conv.weight", "model.model.model.1.conv.bias", "model.model.model.2.cv1.conv.weight", "model.model.model.2.cv1.conv.bias", "model.model.model.2.cv2.conv.weight", "model.model.model.2.cv2.conv.bias", "model.model.model.2.cv3.conv.weight", "model.model.model.2.cv3.conv.bias", "model.model.model.2.m.0.cv1.conv.weight", "model.model.model.2.m.0.cv1.conv.bias", "model.model.model.2.m.0.cv2.conv.weight", "model.model.model.2.m.0.cv2.conv.bias", "model.model.model.3.conv.weight", "model.model.model.3.conv.bias", "model.model.model.4.cv1.conv.weight", "model.model.model.4.cv1.conv.bias", "model.model.model.4.cv2.conv.weight", "model.model.model.4.cv2.conv.bias", "model.model.model.4.cv3.conv.weight", "model.model.model.4.cv3.conv.bias", "model.model.model.4.m.0.cv1.conv.weight", "model.model.model.4.m.0.cv1.conv.bias", "model.model.model.4.m.0.cv2.conv.weight", "model.model.model.4.m.0.cv2.conv.bias", "model.model.model.4.m.1.cv1.conv.weight", "model.model.model.4.m.1.cv1.conv.bias", "model.model.model.4.m.1.cv2.conv.weight", "model.model.model.4.m.1.cv2.conv.bias", "model.model.model.5.conv.weight", "model.model.model.5.conv.bias", "model.model.model.6.cv1.conv.weight", "model.model.model.6.cv1.conv.bias", "model.model.model.6.cv2.conv.weight", "model.model.model.6.cv2.conv.bias", "model.model.model.6.cv3.conv.weight", "model.model.model.6.cv3.conv.bias", "model.model.model.6.m.0.cv1.conv.weight", "model.model.model.6.m.0.cv1.conv.bias", "model.model.model.6.m.0.cv2.conv.weight", "model.model.model.6.m.0.cv2.conv.bias", "model.model.model.6.m.1.cv1.conv.weight", "model.model.model.6.m.1.cv1.conv.bias", "model.model.model.6.m.1.cv2.conv.weight", "model.model.model.6.m.1.cv2.conv.bias", "model.model.model.6.m.2.cv1.conv.weight", "model.model.model.6.m.2.cv1.conv.bias", "model.model.model.6.m.2.cv2.conv.weight", "model.model.model.6.m.2.cv2.conv.bias",
"model.model.model.7.conv.weight", "model.model.model.7.conv.bias", "model.model.model.8.cv1.conv.weight", "model.model.model.8.cv1.conv.bias", "model.model.model.8.cv2.conv.weight", "model.model.model.8.cv2.conv.bias", "model.model.model.8.cv3.conv.weight", "model.model.model.8.cv3.conv.bias", "model.model.model.8.m.0.cv1.conv.weight", "model.model.model.8.m.0.cv1.conv.bias", "model.model.model.8.m.0.cv2.conv.weight", "model.model.model.8.m.0.cv2.conv.bias", "model.model.model.9.cv1.conv.weight", "model.model.model.9.cv1.conv.bias", "model.model.model.9.cv2.conv.weight", "model.model.model.9.cv2.conv.bias", "model.model.model.10.conv.weight", "model.model.model.10.conv.bias", "model.model.model.13.cv1.conv.weight", "model.model.model.13.cv1.conv.bias", "model.model.model.13.cv2.conv.weight", "model.model.model.13.cv2.conv.bias", "model.model.model.13.cv3.conv.weight", "model.model.model.13.cv3.conv.bias", "model.model.model.13.m.0.cv1.conv.weight", "model.model.model.13.m.0.cv1.conv.bias", "model.model.model.13.m.0.cv2.conv.weight", "model.model.model.13.m.0.cv2.conv.bias", "model.model.model.14.conv.weight", "model.model.model.14.conv.bias", "model.model.model.17.cv1.conv.weight", "model.model.model.17.cv1.conv.bias", "model.model.model.17.cv2.conv.weight", "model.model.model.17.cv2.conv.bias", "model.model.model.17.cv3.conv.weight", "model.model.model.17.cv3.conv.bias", "model.model.model.17.m.0.cv1.conv.weight", "model.model.model.17.m.0.cv1.conv.bias", "model.model.model.17.m.0.cv2.conv.weight", "model.model.model.17.m.0.cv2.conv.bias", "model.model.model.18.conv.weight", "model.model.model.18.conv.bias", "model.model.model.20.cv1.conv.weight", "model.model.model.20.cv1.conv.bias", "model.model.model.20.cv2.conv.weight", "model.model.model.20.cv2.conv.bias", "model.model.model.20.cv3.conv.weight", "model.model.model.20.cv3.conv.bias", "model.model.model.20.m.0.cv1.conv.weight", "model.model.model.20.m.0.cv1.conv.bias",
"model.model.model.20.m.0.cv2.conv.weight", "model.model.model.20.m.0.cv2.conv.bias", "model.model.model.21.conv.weight", "model.model.model.21.conv.bias", "model.model.model.23.cv1.conv.weight", "model.model.model.23.cv1.conv.bias", "model.model.model.23.cv2.conv.weight", "model.model.model.23.cv2.conv.bias", "model.model.model.23.cv3.conv.weight", "model.model.model.23.cv3.conv.bias", "model.model.model.23.m.0.cv1.conv.weight", "model.model.model.23.m.0.cv1.conv.bias", "model.model.model.23.m.0.cv2.conv.weight", "model.model.model.23.m.0.cv2.conv.bias", "model.model.model.24.anchors", "model.model.model.24.m.0.weight", "model.model.model.24.m.0.bias", "model.model.model.24.m.1.weight", "model.model.model.24.m.1.bias", "model.model.model.24.m.2.weight", "model.model.model.24.m.2.bias".
Unexpected key(s) in state_dict: "model.0.conv.weight", "model.0.bn.weight", "model.0.bn.bias", "model.0.bn.running_mean", "model.0.bn.running_var", "model.0.bn.num_batches_tracked", "model.1.conv.weight", "model.1.bn.weight", "model.1.bn.bias", "model.1.bn.running_mean", "model.1.bn.running_var", "model.1.bn.num_batches_tracked", "model.2.cv1.conv.weight", "model.2.cv1.bn.weight", "model.2.cv1.bn.bias", "model.2.cv1.bn.running_mean", "model.2.cv1.bn.running_var", "model.2.cv1.bn.num_batches_tracked", "model.2.cv2.conv.weight", "model.2.cv2.bn.weight", "model.2.cv2.bn.bias", "model.2.cv2.bn.running_mean", "model.2.cv2.bn.running_var", "model.2.cv2.bn.num_batches_tracked", "model.2.cv3.conv.weight", "model.2.cv3.bn.weight", "model.2.cv3.bn.bias", "model.2.cv3.bn.running_mean", "model.2.cv3.bn.running_var", "model.2.cv3.bn.num_batches_tracked", "model.2.m.0.cv1.conv.weight", "model.2.m.0.cv1.bn.weight", "model.2.m.0.cv1.bn.bias", "model.2.m.0.cv1.bn.running_mean", "model.2.m.0.cv1.bn.running_var", "model.2.m.0.cv1.bn.num_batches_tracked", "model.2.m.0.cv2.conv.weight", "model.2.m.0.cv2.bn.weight", "model.2.m.0.cv2.bn.bias", "model.2.m.0.cv2.bn.running_mean", "model.2.m.0.cv2.bn.running_var", "model.2.m.0.cv2.bn.num_batches_tracked", "model.3.conv.weight", "model.3.bn.weight", "model.3.bn.bias", "model.3.bn.running_mean", "model.3.bn.running_var", "model.3.bn.num_batches_tracked", "model.4.cv1.conv.weight", "model.4.cv1.bn.weight", "model.4.cv1.bn.bias", "model.4.cv1.bn.running_mean", "model.4.cv1.bn.running_var", "model.4.cv1.bn.num_batches_tracked", "model.4.cv2.conv.weight", "model.4.cv2.bn.weight", "model.4.cv2.bn.bias", "model.4.cv2.bn.running_mean", "model.4.cv2.bn.running_var", "model.4.cv2.bn.num_batches_tracked", "model.4.cv3.conv.weight", "model.4.cv3.bn.weight", "model.4.cv3.bn.bias", "model.4.cv3.bn.running_mean", "model.4.cv3.bn.running_var", "model.4.cv3.bn.num_batches_tracked", "model.4.m.0.cv1.conv.weight", "model.4.m.0.cv1.bn.weight",
"model.4.m.0.cv1.bn.bias", "model.4.m.0.cv1.bn.running_mean", "model.4.m.0.cv1.bn.running_var", "model.4.m.0.cv1.bn.num_batches_tracked", "model.4.m.0.cv2.conv.weight", "model.4.m.0.cv2.bn.weight", "model.4.m.0.cv2.bn.bias", "model.4.m.0.cv2.bn.running_mean", "model.4.m.0.cv2.bn.running_var", "model.4.m.0.cv2.bn.num_batches_tracked", "model.4.m.1.cv1.conv.weight", "model.4.m.1.cv1.bn.weight", "model.4.m.1.cv1.bn.bias", "model.4.m.1.cv1.bn.running_mean", "model.4.m.1.cv1.bn.running_var", "model.4.m.1.cv1.bn.num_batches_tracked", "model.4.m.1.cv2.conv.weight", "model.4.m.1.cv2.bn.weight", "model.4.m.1.cv2.bn.bias", "model.4.m.1.cv2.bn.running_mean", "model.4.m.1.cv2.bn.running_var", "model.4.m.1.cv2.bn.num_batches_tracked", "model.5.conv.weight", "model.5.bn.weight", "model.5.bn.bias", "model.5.bn.running_mean", "model.5.bn.running_var", "model.5.bn.num_batches_tracked", "model.6.cv1.conv.weight", "model.6.cv1.bn.weight", "model.6.cv1.bn.bias", "model.6.cv1.bn.running_mean", "model.6.cv1.bn.running_var", "model.6.cv1.bn.num_batches_tracked", "model.6.cv2.conv.weight", "model.6.cv2.bn.weight", "model.6.cv2.bn.bias", "model.6.cv2.bn.running_mean", "model.6.cv2.bn.running_var", "model.6.cv2.bn.num_batches_tracked", "model.6.cv3.conv.weight", "model.6.cv3.bn.weight", "model.6.cv3.bn.bias", "model.6.cv3.bn.running_mean", "model.6.cv3.bn.running_var", "model.6.cv3.bn.num_batches_tracked", "model.6.m.0.cv1.conv.weight", "model.6.m.0.cv1.bn.weight", "model.6.m.0.cv1.bn.bias", "model.6.m.0.cv1.bn.running_mean", "model.6.m.0.cv1.bn.running_var", "model.6.m.0.cv1.bn.num_batches_tracked", "model.6.m.0.cv2.conv.weight", "model.6.m.0.cv2.bn.weight", "model.6.m.0.cv2.bn.bias", "model.6.m.0.cv2.bn.running_mean", "model.6.m.0.cv2.bn.running_var", "model.6.m.0.cv2.bn.num_batches_tracked", "model.6.m.1.cv1.conv.weight", "model.6.m.1.cv1.bn.weight", "model.6.m.1.cv1.bn.bias", "model.6.m.1.cv1.bn.running_mean", "model.6.m.1.cv1.bn.running_var", "model.6.m.1.cv1.bn.num_batches_tracked",
"model.6.m.1.cv2.conv.weight", "model.6.m.1.cv2.bn.weight", "model.6.m.1.cv2.bn.bias", "model.6.m.1.cv2.bn.running_mean", "model.6.m.1.cv2.bn.running_var", "model.6.m.1.cv2.bn.num_batches_tracked", "model.6.m.2.cv1.conv.weight", "model.6.m.2.cv1.bn.weight", "model.6.m.2.cv1.bn.bias", "model.6.m.2.cv1.bn.running_mean", "model.6.m.2.cv1.bn.running_var", "model.6.m.2.cv1.bn.num_batches_tracked", "model.6.m.2.cv2.conv.weight", "model.6.m.2.cv2.bn.weight", "model.6.m.2.cv2.bn.bias", "model.6.m.2.cv2.bn.running_mean", "model.6.m.2.cv2.bn.running_var", "model.6.m.2.cv2.bn.num_batches_tracked", "model.7.conv.weight", "model.7.bn.weight", "model.7.bn.bias", "model.7.bn.running_mean", "model.7.bn.running_var", "model.7.bn.num_batches_tracked", "model.8.cv1.conv.weight", "model.8.cv1.bn.weight", "model.8.cv1.bn.bias", "model.8.cv1.bn.running_mean", "model.8.cv1.bn.running_var", "model.8.cv1.bn.num_batches_tracked", "model.8.cv2.conv.weight", "model.8.cv2.bn.weight", "model.8.cv2.bn.bias", "model.8.cv2.bn.running_mean", "model.8.cv2.bn.running_var", "model.8.cv2.bn.num_batches_tracked", "model.8.cv3.conv.weight", "model.8.cv3.bn.weight", "model.8.cv3.bn.bias", "model.8.cv3.bn.running_mean", "model.8.cv3.bn.running_var", "model.8.cv3.bn.num_batches_tracked", "model.8.m.0.cv1.conv.weight", "model.8.m.0.cv1.bn.weight", "model.8.m.0.cv1.bn.bias", "model.8.m.0.cv1.bn.running_mean", "model.8.m.0.cv1.bn.running_var", "model.8.m.0.cv1.bn.num_batches_tracked", "model.8.m.0.cv2.conv.weight", "model.8.m.0.cv2.bn.weight", "model.8.m.0.cv2.bn.bias", "model.8.m.0.cv2.bn.running_mean", "model.8.m.0.cv2.bn.running_var", "model.8.m.0.cv2.bn.num_batches_tracked", "model.9.cv1.conv.weight", "model.9.cv1.bn.weight", "model.9.cv1.bn.bias", "model.9.cv1.bn.running_mean", "model.9.cv1.bn.running_var", "model.9.cv1.bn.num_batches_tracked", "model.9.cv2.conv.weight", "model.9.cv2.bn.weight", "model.9.cv2.bn.bias", "model.9.cv2.bn.running_mean", "model.9.cv2.bn.running_var",
"model.9.cv2.bn.num_batches_tracked", "model.10.conv.weight", "model.10.bn.weight", "model.10.bn.bias", "model.10.bn.running_mean", "model.10.bn.running_var", "model.10.bn.num_batches_tracked", "model.13.cv1.conv.weight", "model.13.cv1.bn.weight", "model.13.cv1.bn.bias", "model.13.cv1.bn.running_mean", "model.13.cv1.bn.running_var", "model.13.cv1.bn.num_batches_tracked", "model.13.cv2.conv.weight", "model.13.cv2.bn.weight", "model.13.cv2.bn.bias", "model.13.cv2.bn.running_mean", "model.13.cv2.bn.running_var", "model.13.cv2.bn.num_batches_tracked", "model.13.cv3.conv.weight", "model.13.cv3.bn.weight", "model.13.cv3.bn.bias", "model.13.cv3.bn.running_mean", "model.13.cv3.bn.running_var", "model.13.cv3.bn.num_batches_tracked", "model.13.m.0.cv1.conv.weight", "model.13.m.0.cv1.bn.weight", "model.13.m.0.cv1.bn.bias", "model.13.m.0.cv1.bn.running_mean", "model.13.m.0.cv1.bn.running_var", "model.13.m.0.cv1.bn.num_batches_tracked", "model.13.m.0.cv2.conv.weight", "model.13.m.0.cv2.bn.weight", "model.13.m.0.cv2.bn.bias", "model.13.m.0.cv2.bn.running_mean", "model.13.m.0.cv2.bn.running_var", "model.13.m.0.cv2.bn.num_batches_tracked", "model.14.conv.weight", "model.14.bn.weight", "model.14.bn.bias", "model.14.bn.running_mean", "model.14.bn.running_var", "model.14.bn.num_batches_tracked", "model.17.cv1.conv.weight", "model.17.cv1.bn.weight", "model.17.cv1.bn.bias", "model.17.cv1.bn.running_mean", "model.17.cv1.bn.running_var", "model.17.cv1.bn.num_batches_tracked", "model.17.cv2.conv.weight", "model.17.cv2.bn.weight", "model.17.cv2.bn.bias", "model.17.cv2.bn.running_mean", "model.17.cv2.bn.running_var", "model.17.cv2.bn.num_batches_tracked", "model.17.cv3.conv.weight", "model.17.cv3.bn.weight", "model.17.cv3.bn.bias", "model.17.cv3.bn.running_mean", "model.17.cv3.bn.running_var", "model.17.cv3.bn.num_batches_tracked", "model.17.m.0.cv1.conv.weight", "model.17.m.0.cv1.bn.weight", "model.17.m.0.cv1.bn.bias", "model.17.m.0.cv1.bn.running_mean", "model.17.m.0.cv1.bn.running_var",
"model.17.m.0.cv1.bn.num_batches_tracked", "model.17.m.0.cv2.conv.weight", "model.17.m.0.cv2.bn.weight", "model.17.m.0.cv2.bn.bias", "model.17.m.0.cv2.bn.running_mean", "model.17.m.0.cv2.bn.running_var", "model.17.m.0.cv2.bn.num_batches_tracked", "model.18.conv.weight", "model.18.bn.weight", "model.18.bn.bias", "model.18.bn.running_mean", "model.18.bn.running_var", "model.18.bn.num_batches_tracked", "model.20.cv1.conv.weight", "model.20.cv1.bn.weight", "model.20.cv1.bn.bias", "model.20.cv1.bn.running_mean", "model.20.cv1.bn.running_var", "model.20.cv1.bn.num_batches_tracked", "model.20.cv2.conv.weight", "model.20.cv2.bn.weight", "model.20.cv2.bn.bias", "model.20.cv2.bn.running_mean", "model.20.cv2.bn.running_var", "model.20.cv2.bn.num_batches_tracked", "model.20.cv3.conv.weight", "model.20.cv3.bn.weight", "model.20.cv3.bn.bias", "model.20.cv3.bn.running_mean", "model.20.cv3.bn.running_var", "model.20.cv3.bn.num_batches_tracked", "model.20.m.0.cv1.conv.weight", "model.20.m.0.cv1.bn.weight", "model.20.m.0.cv1.bn.bias", "model.20.m.0.cv1.bn.running_mean", "model.20.m.0.cv1.bn.running_var", "model.20.m.0.cv1.bn.num_batches_tracked", "model.20.m.0.cv2.conv.weight", "model.20.m.0.cv2.bn.weight", "model.20.m.0.cv2.bn.bias", "model.20.m.0.cv2.bn.running_mean", "model.20.m.0.cv2.bn.running_var", "model.20.m.0.cv2.bn.num_batches_tracked", "model.21.conv.weight", "model.21.bn.weight", "model.21.bn.bias", "model.21.bn.running_mean", "model.21.bn.running_var", "model.21.bn.num_batches_tracked", "model.23.cv1.conv.weight", "model.23.cv1.bn.weight", "model.23.cv1.bn.bias", "model.23.cv1.bn.running_mean", "model.23.cv1.bn.running_var", "model.23.cv1.bn.num_batches_tracked", "model.23.cv2.conv.weight", "model.23.cv2.bn.weight", "model.23.cv2.bn.bias", "model.23.cv2.bn.running_mean", "model.23.cv2.bn.running_var", "model.23.cv2.bn.num_batches_tracked", "model.23.cv3.conv.weight", "model.23.cv3.bn.weight", "model.23.cv3.bn.bias", "model.23.cv3.bn.running_mean",
"model.23.cv3.bn.running_var", "model.23.cv3.bn.num_batches_tracked", "model.23.m.0.cv1.conv.weight", "model.23.m.0.cv1.bn.weight", "model.23.m.0.cv1.bn.bias", "model.23.m.0.cv1.bn.running_mean", "model.23.m.0.cv1.bn.running_var", "model.23.m.0.cv1.bn.num_batches_tracked", "model.23.m.0.cv2.conv.weight", "model.23.m.0.cv2.bn.weight", "model.23.m.0.cv2.bn.bias", "model.23.m.0.cv2.bn.running_mean", "model.23.m.0.cv2.bn.running_var", "model.23.m.0.cv2.bn.num_batches_tracked", "model.24.anchors", "model.24.m.0.weight", "model.24.m.0.bias", "model.24.m.1.weight", "model.24.m.1.bias", "model.24.m.2.weight", "model.24.m.2.bias".
```

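The two key lists in the error can be compared mechanically. This is a simplified model of the strict check, not PyTorch's own implementation: *missing* means names the target model expects that the checkpoint lacks, and *unexpected* means the reverse. The sample keys are a handful copied from the error above, and `diff_keys` and `remap_prefix` are hypothetical helpers. The comparison suggests two separate differences. First, the hub model's names carry a `model.model.model.` prefix where the checkpoint's start with `model.`. Second, the checkpoint stores `bn.*` BatchNorm entries where the hub model instead expects a `conv.bias`. That second pattern is consistent with the hub model having had its Conv and BatchNorm layers fused when it was loaded, though that is an inference from the key names rather than something the error states.

```python
# Simplified model of the strict key check (not PyTorch's actual code):
# missing    = names the target model expects but the checkpoint lacks
# unexpected = names in the checkpoint that the target model has no slot for


def diff_keys(expected, provided):
    expected, provided = set(expected), set(provided)
    return sorted(expected - provided), sorted(provided - expected)


def remap_prefix(keys, old, new):
    """Hypothetical helper: swap a leading prefix on every key that has it."""
    return [new + k[len(old):] if k.startswith(old) else k for k in keys]


# A few keys copied from the error message
expected = [  # names in the torch.hub model (AutoShape)
    "model.model.model.0.conv.weight",
    "model.model.model.0.conv.bias",
    "model.model.model.24.anchors",
]
provided = [  # names in weight["model"].state_dict()
    "model.0.conv.weight",
    "model.0.bn.weight",
    "model.0.bn.bias",
    "model.0.bn.running_mean",
    "model.0.bn.running_var",
    "model.0.bn.num_batches_tracked",
    "model.24.anchors",
]

missing, unexpected = diff_keys(expected, provided)
print(len(missing), len(unexpected))  # 3 7: no name matches because of the prefix

# After aligning the prefix, conv.weight and anchors match, but conv.bias is
# still missing and all five bn.* entries are still unexpected.
missing, unexpected = diff_keys(expected, remap_prefix(provided, "model.", "model.model.model."))
print(missing)  # ['model.model.model.0.conv.bias']
print(unexpected)
```

So, under these assumptions, renaming the keys alone would still leave the BatchNorm entries without a counterpart in the hub model.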
Can someone tell me what I'm missing?