Dongyoon Han, Sangdoo Yun, Byeongho Heo, and YoungJoon Yoo | Paper | Pretrained Models

Clova AI Research, NAVER Corp.

This paper addresses the representational bottleneck in a network and proposes a set of design principles that significantly improve model performance. We argue that a representational bottleneck can occur in a network built with a conventional design and that it degrades model performance. To investigate the representational bottleneck, we study the matrix rank of the features generated by ten thousand random networks. We further study the channel configuration across all layers with the aim of designing more accurate network architectures. Based on this investigation, we propose simple yet effective design principles to mitigate the representational bottleneck. Slight changes to baseline networks that follow these principles lead to remarkable performance improvements on ImageNet classification. Additionally, COCO object detection results and transfer learning results on several datasets provide further support for the link between diminishing the representational bottleneck of a network and improving its performance.
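To make the notion of "matrix rank of the features" concrete, here is a toy sketch in NumPy. It is not the paper's experimental protocol: the sizes, the single random layer, and the helper name `feature_rank` are assumptions chosen only for illustration. It shows that a linear layer expanding from `d_in` to `d_out` channels yields features whose rank is capped by `d_in`, and it measures the rank again after a ReLU.

```python
import numpy as np


def feature_rank(features):
    """Numerical rank of a (num_samples, num_channels) feature matrix."""
    return int(np.linalg.matrix_rank(features))


rng = np.random.default_rng(0)
num_samples, d_in, d_out = 256, 8, 32  # illustrative sizes, not from the paper

x = rng.standard_normal((num_samples, d_in))  # random inputs
w = rng.standard_normal((d_in, d_out))        # random expansion layer

linear_features = x @ w                           # rank cannot exceed d_in
relu_features = np.maximum(linear_features, 0.0)  # rank after a nonlinearity

rank_linear = feature_rank(linear_features)
rank_relu = feature_rank(relu_features)
print(rank_linear, rank_relu)
```

Comparing the two printed ranks against `d_out` gives a rough sense of how much of the output channel space the features actually span.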

- Accuracy comparison with ResNet50 and EfficientNetB0, using our own ImageNet-pretrained models for transfer to fine-grained datasets:

- We provide pretrained weights for representative ReXNets trained on the ImageNet dataset. Note that all models are trained and evaluated at an image size of 224x224:

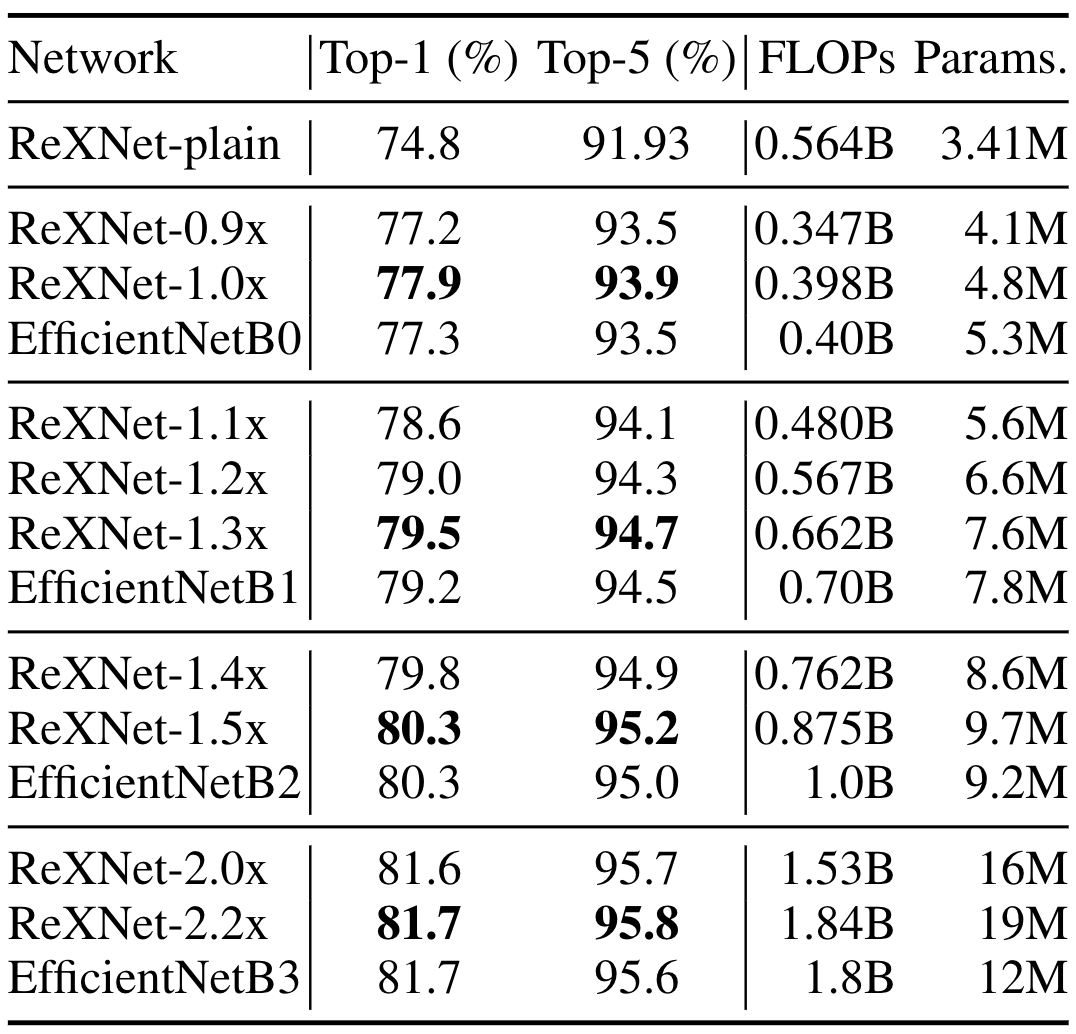

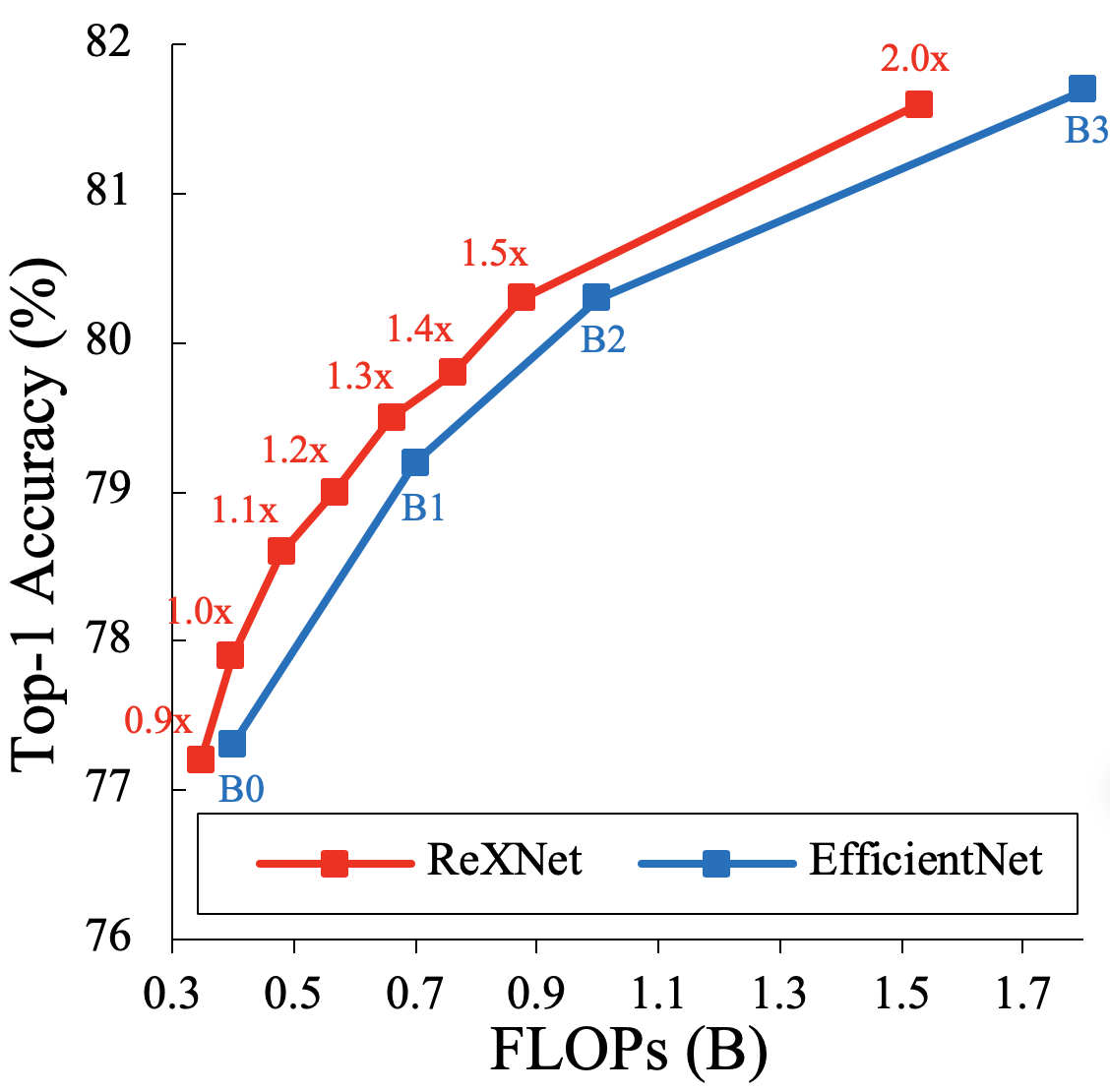

| Model | Input Res. | Top-1 acc. | Top-5 acc. | FLOPs/params |
|:--|:--:|:--:|:--:|:--:|
| ReXNet_V1-1.0x | 224x224 | 77.9 | 93.9 | 0.40B/4.8M |
| ReXNet_V1-1.3x | 224x224 | 79.5 | 94.7 | 0.66B/7.6M |
| ReXNet_V1-1.5x | 224x224 | 80.3 | 95.2 | 0.66B/7.6M |
| ReXNet_V1-2.0x | 224x224 | 81.6 | 95.7 | 1.53B/16.4M |

- Python3

- PyTorch (> 1.0)

- Torchvision (> 0.2)

- NumPy

- Usage is the same as for the other models officially released in PyTorch's torchvision.

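- Using models on GPUs:

```python
import torch
import rexnetv1

# Build ReXNet-1.0x on the GPU and load the pretrained weights
model = rexnetv1.ReXNetV1(width_mult=1.0).cuda()
model.load_state_dict(torch.load('./rexnetv1_1.0x.pth'))
model.eval()

# The input must live on the same device as the model
print(model(torch.randn(1, 3, 224, 224).cuda()))
```

- For CPUs:

```python
import torch
import rexnetv1

# Build ReXNet-1.0x on the CPU; map_location remaps GPU-saved tensors to CPU
model = rexnetv1.ReXNetV1(width_mult=1.0)
model.load_state_dict(torch.load('./rexnetv1_1.0x.pth', map_location=torch.device('cpu')))
model.eval()

print(model(torch.randn(1, 3, 224, 224)))
```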
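The GPU and CPU snippets differ only in device handling, so they can be folded into one device-agnostic routine. The sketch below is one possible way to do that; `load_for_inference` is a hypothetical helper name, not part of the ReXNet code, and it assumes the checkpoint file holds a plain state dict as in the snippets above.

```python
import torch


def load_for_inference(model, checkpoint_path, device=None):
    """Load a state dict into `model`, move it to `device`, and set eval mode.

    Hypothetical helper for illustration; not part of the ReXNet repository.
    """
    if device is None:
        # Fall back to CPU when no GPU is available
        device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    # map_location remaps GPU-saved tensors, so this also works on CPU-only machines
    state_dict = torch.load(checkpoint_path, map_location=device)
    model.load_state_dict(state_dict)
    model.to(device)
    model.eval()
    return model, device
```

With the model file from this repository, this would be called as `model, device = load_for_inference(rexnetv1.ReXNetV1(width_mult=1.0), './rexnetv1_1.0x.pth')`, followed by `model(torch.randn(1, 3, 224, 224, device=device))`. Wrapping the forward pass in `torch.no_grad()` avoids building the autograd graph during inference.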

ReXNet can be trained with any PyTorch training code, including the ImageNet training example in PyTorch, given the model file and proper arguments. Since the provided model file is not complicated, the model can simply be converted to train a ReXNet in other frameworks such as MXNet. For MXNet, we recommend MXNet-gluoncv as the training code.

Using PyTorch, we trained ReXNets with one of the popular ImageNet classification codebases, rwightman's pytorch-image-models, for more efficient training. After adding ReXNet's model file to the training code, one can train ReXNet-1.0x with the following command line:

```shell
./distributed_train.sh 4 /imagenet/ --model rexnetv1 --rex-width-mult 1.0 --opt sgd --amp \
    --lr 0.5 --weight-decay 1e-5 \
    --batch-size 128 --epochs 400 --sched cosine \
    --remode pixel --reprob 0.2 --drop 0.2 --aa rand-m9-mstd0.5
```
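As a rough guide to what `--lr 0.5 --epochs 400 --sched cosine` implies, the sketch below evaluates a plain cosine decay. It is an approximation under stated assumptions: it ignores warmup and any other scheduler options the training code may apply, and `cosine_lr` is a hypothetical function, not the scheduler used by pytorch-image-models. The global batch size assumes that `--batch-size` is applied per process, with 4 processes launched by `distributed_train.sh 4`.

```python
import math


def cosine_lr(epoch, base_lr=0.5, total_epochs=400, min_lr=0.0):
    """Plain cosine decay from base_lr at epoch 0 to min_lr at total_epochs."""
    progress = min(max(epoch / total_epochs, 0.0), 1.0)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))


# Assumption: --batch-size 128 is per process and 4 processes are launched
num_gpus, per_gpu_batch = 4, 128
global_batch = num_gpus * per_gpu_batch

for epoch in (0, 100, 200, 300, 400):
    print(epoch, round(cosine_lr(epoch), 4))
print('global batch size:', global_batch)
```

Under these assumptions the learning rate starts at 0.5, reaches half of that at the midpoint of training, and decays to zero at the final epoch.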

This project is distributed under the MIT license.

```
@article{han2020rexnet,
    title={{ReXNet}: Diminishing Representational Bottleneck on Convolutional Neural Network},
    author={Han, Dongyoon and Yun, Sangdoo and Heo, Byeongho and Yoo, YoungJoon},
    year={2020},
    journal={arXiv preprint arXiv:2007.00992},
}
```