

Visit the Polaris website to learn more.

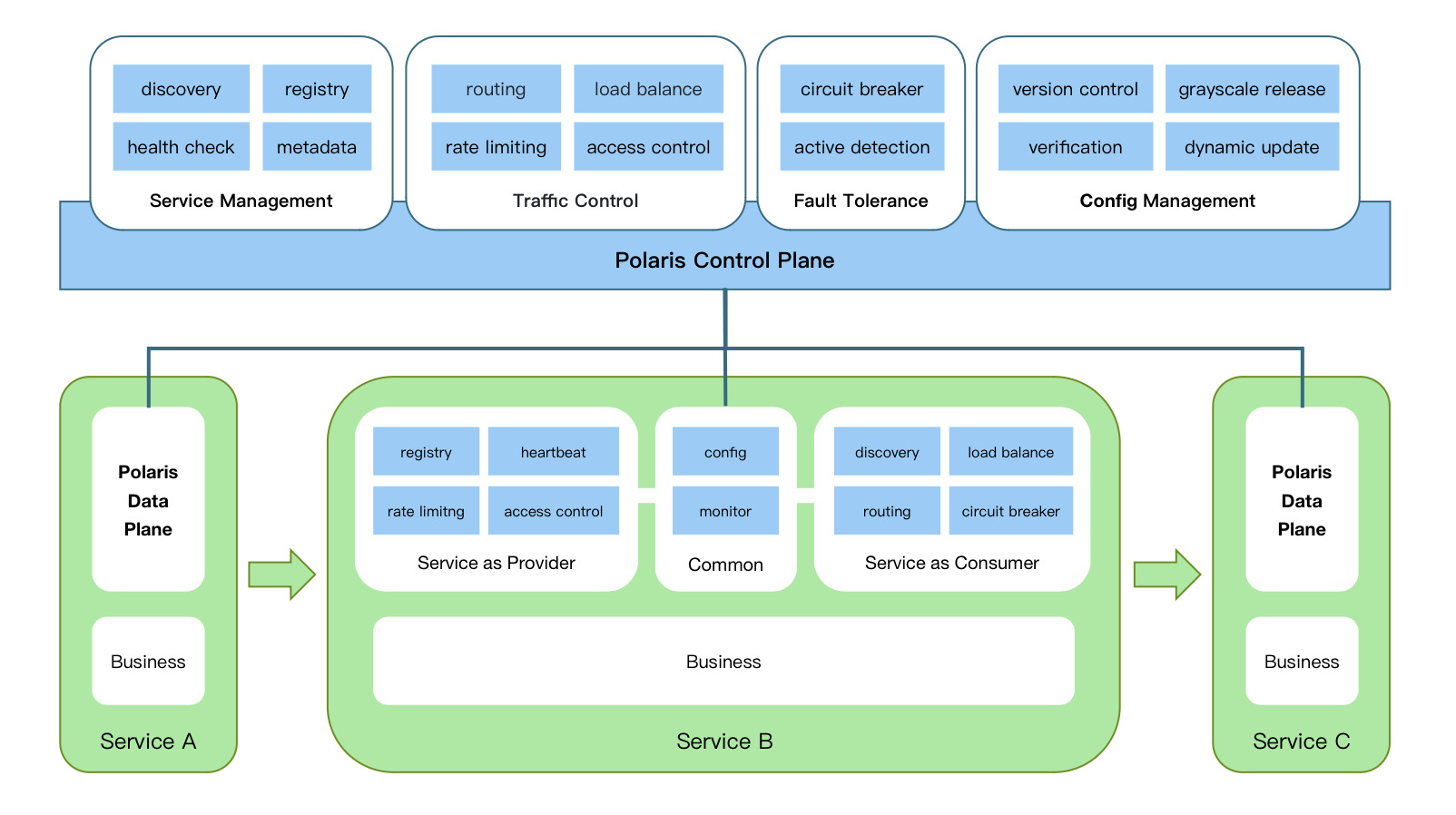

Polaris is an open source system for service discovery and governance. It addresses service management, traffic control, fault tolerance, and config management in distributed and microservice architectures.

Functions:

- Service management: service discovery, service registry, and health checks

- Traffic control: customizable routing, load balancing, rate limiting, and access control

- Fault tolerance: circuit breaking at the service, interface, and instance levels

- Config management: config version control, grayscale release, and dynamic updates

Features:

- It is a one-stop solution that replaces a separate registry center, service mesh, and config center.

- It provides a multi-mode data plane, including SDKs, development frameworks, a Java agent, and a sidecar.

- It is integrated with commonly used frameworks such as Spring Cloud, Dubbo, and gRPC.

- It supports registering K8s services and automatic sidecar injection for a proxy-based service mesh.

Visit the Installation Guide to learn more.

Polaris provides a multi-mode data plane, including SDKs, development frameworks, a Java agent, and a sidecar. You can choose one or more modes to develop services according to your business requirements.

Use the Polaris multi-language SDKs and call the Polaris client API directly:

Use HTTP or RPC frameworks that already integrate the Polaris Java SDK:

- Spring Cloud

- Spring Boot

- dubbo-java

- grpc-java

Use HTTP or RPC frameworks that already integrate the Polaris Go SDK:

Use K8s services and the sidecar:

You can integrate service gateways with Polaris service discovery and governance.

Please scan the QR code to join the chat group.