The manuscript is published as a preprint: https://osf.io/fsd7t

We welcome your feedback, e.g., by opening issues on this repository or with OSF annotations. We especially welcome help with creating strong illustrative examples; see issue #4.

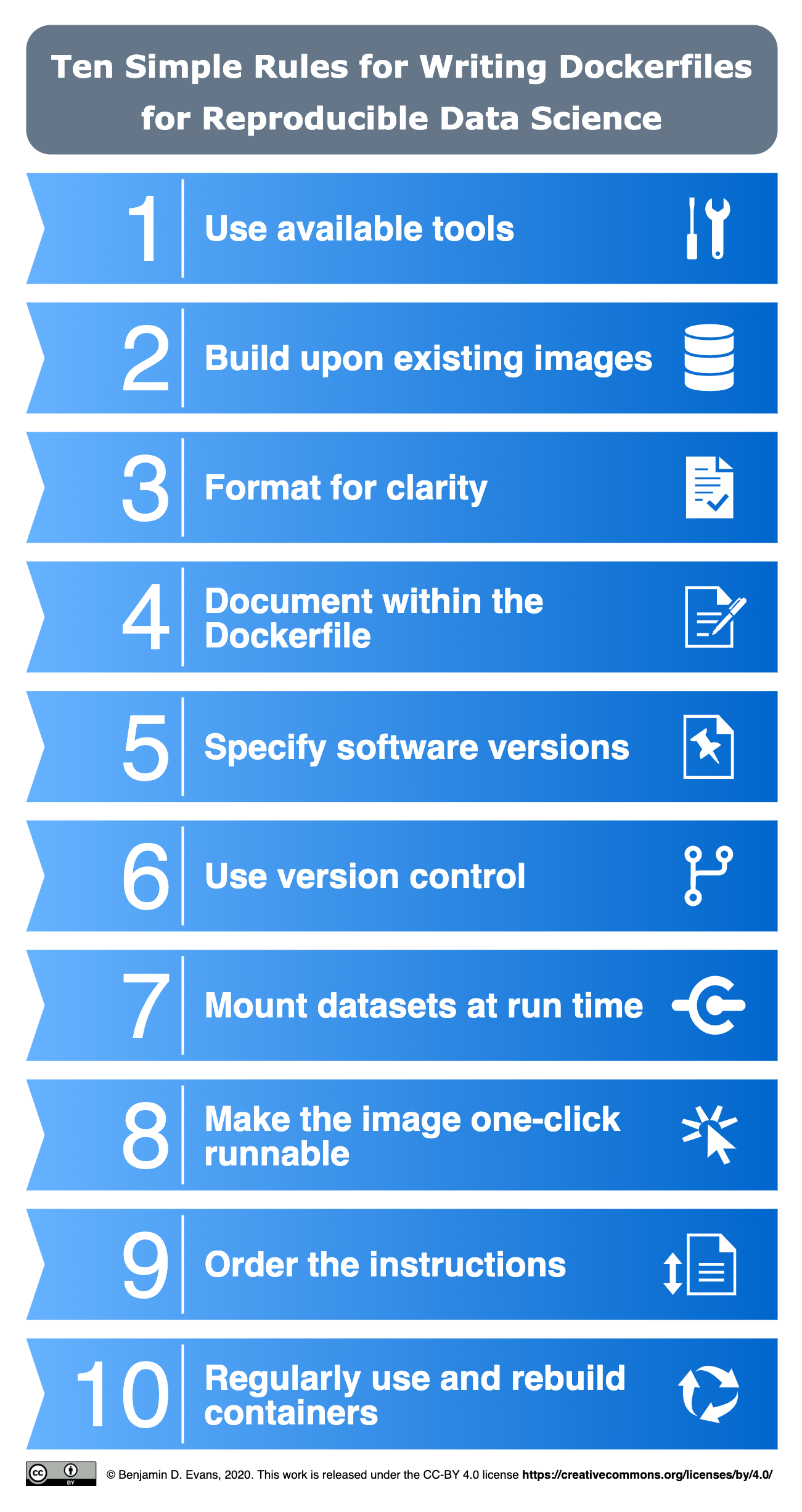

Ten Simple Rules Collection on PLOS

DN conceived the idea and contributed to conceptualisation, methodology, writing – original draft, writing – review & editing, and validation. VS contributed to conceptualisation, methodology, writing – original draft, and writing – review & editing. BM contributed to writing – review & editing. SJE contributed to conceptualisation, writing – review & editing, and validation. THe contributed to conceptualisation. THi contributed to writing – review & editing. BDE contributed to conceptualisation, writing – review & editing, visualisation, and validation. This article was written collaboratively on GitHub, where contributions in the form of text or discussion comments are documented: https://github.com/nuest/ten-simple-rules-dockerfiles/.

First, build the container. It will install the dependencies that you need for compiling the LaTeX.

    docker build -t ten-simple-rules-dockerfiles .

Then run it! You'll need to set a password to log in as the user "rstudio".

    PASSWORD=simple
    docker run --rm -it -p 8787:8787 -e PASSWORD=$PASSWORD -v $(pwd):/home/rstudio/ten-simple-rules-dockerfiles ten-simple-rules-dockerfiles

Open http://localhost:8787 to get to RStudio, log in, and navigate to the directory ~/ten-simple-rules-dockerfiles to open the Rmd file and start editing.

Use the "Knit" button to render the PDF.

The first rendering takes a bit longer because the required LaTeX packages must be installed.

See more options in the Rocker docs.
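The build and run steps above can be collected in a small helper script. The following is only a sketch: the function names `run` and `start_rstudio` and the `DRY_RUN` switch are hypothetical and not part of this repository. With `DRY_RUN=1` the commands are printed instead of executed, which is handy for checking them before Docker is started.

```shell
#!/bin/sh
# Image name as used in the build command above.
IMAGE=ten-simple-rules-dockerfiles

# Hypothetical helper: print the command in dry-run mode, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: build the image, then start RStudio with the given password.
start_rstudio() {
  password=${1:-}
  if [ -z "$password" ]; then
    echo "usage: start_rstudio PASSWORD" >&2
    return 1
  fi
  run docker build -t "$IMAGE" . || return 1
  run docker run --rm -it -p 8787:8787 \
    -e PASSWORD="$password" \
    -v "$(pwd)":/home/rstudio/"$IMAGE" \
    "$IMAGE"
}

# Dry run: only show what would be executed.
DRY_RUN=1
start_rstudio simple
```

Set `DRY_RUN=0` (or remove the assignment) to actually build the image and start the container.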

See the end of the Dockerfile for instructions.

- Get all authors' GitHub handles:

      cat *.Rmd | grep ' # https://github.com/' | sed 's| # https://github.com/|@|'

- Get all authors' emails:

      cat *.Rmd | grep 'email:' | sed 's| email: ||'

- [Work in progress!] Get a `.docx` file out of the Rmd so one can compare versions and generate marked-up copies of changes:

      # https://github.com/davidgohel/officedown
      library("officedown")
      rmarkdown::render("ten-simple-rules-dockerfiles.Rmd", output_format = officedown::rdocx_document(), output_file = "tsrd.docx")

      # https://noamross.github.io/redoc/articles/mixed-workflows-with-redoc.html
      library("redoc")
      rmarkdown::render("ten-simple-rules-dockerfiles.Rmd", output_format = redoc::redoc(), output_file = "tsrd.docx")

- Compare with `latexdiff`:

      # get a specific version of the text file
      wget -O submission.v2.tex https://raw.githubusercontent.com/nuest/ten-simple-rules-dockerfiles/submission.v2/ten-simple-rules-dockerfiles.tex
      # compare it with current version
      latexdiff --graphics-markup=2 submission.v2.tex ten-simple-rules-dockerfiles.tex > diff.tex
      # render diff.tex with RStudio
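To see what the two author-list pipelines above produce, they can be tried on a small sample file. This is a sketch under assumptions: the sample front matter is invented and the real Rmd may be laid out differently, and the function names `github_handles` and `author_emails` are hypothetical. The `sed` patterns are a slight variant of the ones above: they match any leading text, so the result does not depend on the exact indentation.

```shell
#!/bin/sh
# Hypothetical sample front matter; names, emails and handles are made up.
tmpdir=$(mktemp -d)
cat > "$tmpdir/sample.Rmd" <<'EOF'
---
author:
  - name: Jane Doe
    email: jane@example.org
    # https://github.com/janedoe
  - name: John Roe
    email: john@example.org
    # https://github.com/johnroe
---
EOF

# Turn each "# https://github.com/<handle>" comment line into "@<handle>".
github_handles() {
  cat "$@" | grep '# https://github.com/' | sed 's|.*# https://github.com/|@|'
}

# Keep only the address from each "email:" line.
author_emails() {
  cat "$@" | grep 'email:' | sed 's|.*email: *||'
}

handles=$(github_handles "$tmpdir"/*.Rmd)
emails=$(author_emails "$tmpdir"/*.Rmd)
printf '%s\n' "$handles" "$emails"

rm -r "$tmpdir"
```

The handles come out one per line with a leading `@`, so they can be pasted into an issue or pull request to notify all authors.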

This manuscript is published under a Creative Commons Attribution 4.0 International (CC BY 4.0) license, see file LICENSE.md.