Neural Network Support as GStreamer Plugins.

NNStreamer is a set of GStreamer plugins that lets GStreamer developers adopt neural network models, and lets neural network developers manage neural network pipelines and their filters, easily and efficiently.

Architectural Description (WIP)

Toward Among-Device AI from On-Device AI with Stream Pipelines, IEEE/ACM ICSE 2022 SEIP

NNStreamer: Efficient and Agile Development of On-Device AI Systems, IEEE/ACM ICSE 2021 SEIP [media]

NNStreamer: Stream Processing Paradigm for Neural Networks ... [pdf/tech report]

GStreamer Conference 2018, NNStreamer [media] [pdf/slides]

Naver Tech Talk (Korean), 2018 [media] [pdf/slides]

Samsung Developer Conference 2019, NNStreamer [media]

ResearchGate Page of NNStreamer

| | Tizen | Ubuntu | Android | Yocto | macOS |
|---|---|---|---|---|---|
| Version | 5.5M2 and later | | 9/P | Kirkstone | |
| arm | Available | Available | Ready | N/A | |
| arm64 | | Available | | | N/A |
| x64 | | | Ready | Ready | Available |
| x86 | | N/A | N/A | Ready | N/A |
| Publish | Tizen Repo | PPA | Daily build | Layer | Brew Tap |
| API | C/C# (Official) | C | Java | C | C |

- Ready: the CI system ensures build-ability and unit testing, so users can easily build and execute NNStreamer. However, there is no automated release and deployment system for this instance.

- Available: binary packages are released and deployed automatically and periodically along with CI tests.

- Daily Release

- SDK Support: Tizen Studio (5.5M2+) / Android Studio (JCenter, "nnstreamer")

- Enabled features of official releases

- Provide neural network framework connectivity (e.g., TensorFlow, Caffe) for GStreamer streams.

- Efficient Streaming for AI Projects: Apply efficient and flexible stream pipelines to neural networks.

- Intelligent Media Filters!: Use a neural network model as a media filter / converter.

- Composite Models!: Multiple neural network models in a single stream pipeline instance.

- Multi Modal Intelligence!: Multiple sources and stream paths for neural network models.

- Provide easy methods to construct media streams with neural network models using GStreamer, the de facto standard media stream framework.

- GStreamer users: use neural network models as if they were just another media filter.

- Neural network developers: manage media streams easily and efficiently.
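As a rough illustration of these objectives (a model used as a media filter, and several models in one pipeline), the Python sketch below composes `gst-launch-1.0` pipeline descriptions as plain strings. It assumes NNStreamer's `tensor_converter`, `tensor_filter` (with its `framework` and `model` properties), and `tensor_sink` elements; the helper functions, the `v4l2src` camera source, the 224x224 RGB input, and the model file names are hypothetical choices for illustration only.

```python
from typing import List, Tuple


def model_branch(framework: str, model: str, width: int = 224, height: int = 224) -> str:
    """Convert raw video into a tensor stream and run one model on it.

    The input size and RGB format are illustrative assumptions; real values
    depend on the model being loaded.
    """
    return (
        "videoconvert ! videoscale ! "
        f"video/x-raw,width={width},height={height},format=RGB ! "
        "tensor_converter ! "
        f"tensor_filter framework={framework} model={model} ! "
        "tensor_sink"
    )


def single_model_pipeline(framework: str, model: str) -> str:
    """A neural network used as one more filter in a camera stream."""
    return "v4l2src ! " + model_branch(framework, model)


def composite_pipeline(models: List[Tuple[str, str]]) -> str:
    """Several models sharing one source: tee splits the stream into branches."""
    parts = ["v4l2src ! tee name=t"]
    for framework, model in models:
        # A queue per branch gives each model its own streaming thread.
        parts.append("t. ! queue ! " + model_branch(framework, model))
    return " ".join(parts)


if __name__ == "__main__":
    # Hypothetical model files; the printed text is a gst-launch-1.0 description.
    print(single_model_pipeline("tensorflow-lite", "mobilenet_v1.tflite"))
    print(composite_pipeline([
        ("tensorflow-lite", "detector.tflite"),
        ("tensorflow-lite", "classifier.tflite"),
    ]))
```

Running a printed description requires GStreamer, NNStreamer, and the matching framework subplugin to be installed; some frameworks also need explicit input and output dimensions on `tensor_filter`, which this sketch leaves out.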

- Jijoong Moon

- Geunsik Lim

- Sangjung Woo

- Wook Song

- Jaeyun Jung

- Hyoungjoo Ahn

- Parichay Kapoor

- Dongju Chae

- Gichan Jang

- Yongjoo Ahn

- Jihoon Lee

Note that this project has just started and many of the components are in the design phase. The Component Description page describes NNStreamer components in three categories: data type definitions, GStreamer elements (plugins), and other miscellaneous components.

For more details, see the following manuals.

- For Linux-like systems such as Tizen, Debian, and Ubuntu, press here.

- For macOS systems, press here.

- To build an API library for Android, press here.

- Edge-AI Examples

- Products with NNStreamer

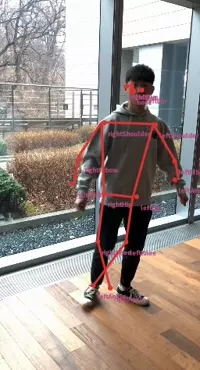

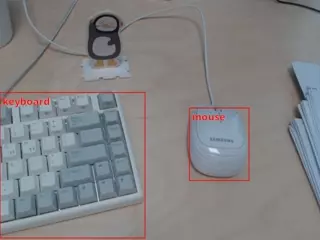

- NNStreamer example applications: GitHub / Screenshots

Although a framework may provide hardware acceleration transparently, as TensorFlow-GPU does, NNStreamer also provides various hardware acceleration subplugins:

- Movidius-X via ncsdk2 subplugin: Released

- Movidius-X via openVINO subplugin: Released

- Edge-TPU via edgetpu subplugin: Released

- ONE runtime via nnfw (an old name of ONE) subplugin: Released

- ARMNN via armnn subplugin: Released

- Verisilicon-Vivante via vivante subplugin: Released

- Qualcomm SNPE via snpe subplugin: Released

- NVIDIA via TensorRT subplugin: Released

- TRI-x NPUs: Released

- NXP i.MX series: via the vendor

- Others: TVM, TensorFlow, TensorFlow Lite, PyTorch, Caffe2, SNAP, ...
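Because the stream around the model does not change, switching accelerators is mostly a matter of choosing a different subplugin. The Python sketch below assumes that each subplugin in the list is selected through the `framework` property of `tensor_filter` under the name shown there (for example `nnfw` for ONE); the mapping, the helper names, and the model file are hypothetical, so check the names against the installed NNStreamer build.

```python
# Hypothetical mapping from accelerators in the list above to tensor_filter
# "framework" values; the strings are assumed to match the subplugin names.
ACCELERATOR_FRAMEWORKS = {
    "openvino": "openvino",
    "edgetpu": "edgetpu",
    "one": "nnfw",
    "armnn": "armnn",
    "vivante": "vivante",
    "snpe": "snpe",
    "tensorrt": "tensorrt",
}


def accelerated_filter(accelerator: str, model: str) -> str:
    """Build a tensor_filter element description for the chosen accelerator."""
    framework = ACCELERATOR_FRAMEWORKS.get(accelerator)
    if framework is None:
        raise ValueError(f"unknown accelerator: {accelerator!r}")
    return f"tensor_filter framework={framework} model={model}"


def accelerated_pipeline(accelerator: str, model: str) -> str:
    """The same camera stream for every accelerator; only the filter changes."""
    return (
        "v4l2src ! videoconvert ! videoscale ! "
        "video/x-raw,width=224,height=224,format=RGB ! tensor_converter ! "
        + accelerated_filter(accelerator, model)
        + " ! tensor_sink"
    )


if __name__ == "__main__":
    # Hypothetical model file; each line is a gst-launch-1.0 description.
    for name in ("edgetpu", "armnn"):
        print(accelerated_pipeline(name, "model.tflite"))
```

Keeping the accelerator choice in one lookup table leaves the rest of the pipeline untouched, which mirrors how a subplugin plugs into an otherwise unchanged stream.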

Contributions are welcome! Please see our Contributing Guide for more details.