neural-nuts / image-caption-generator

[DEPRECATED] A neural-network-based generative model for captioning images using TensorFlow

License: BSD 3-Clause "New" or "Revised" License


When executing `python main.py --mode test --image_path Path_to_image`,

I get this error: `IOError: [Errno 2] No such file or directory: 'Dataset/vocab.npy'`

When I run this code in either train or test mode, it reports that no file named `Dataset\features.npy` can be found. Could you tell me how to get the missing file if I only want to run a test?

I can only find two files, `results_20130124.token` and `inception_v4.pb`. Could you tell me how to get the other files I need to test this project,

such as `decoder`, `encoder`, `trained_graphs`, and the other files in `model`?


Thank you for your reply.
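A hedged note on the missing-file errors above. Logs elsewhere on this page suggest that `Dataset/features.npy` is produced by running `convfeatures.py`, and that the vocabulary appears to be generated during a `--mode train` run. If so, these files are not shipped with the repository. A small pre-flight check can report the gap before TensorFlow starts. This is a minimal sketch: `REQUIRED_FILES` and `missing_files` are hypothetical names, and the list contains only the two paths named in the errors above.

```python
import os

# Only the two paths named in the error messages above; the full set of
# files the project needs may be longer (assumption).
REQUIRED_FILES = ["Dataset/vocab.npy", "Dataset/features.npy"]


def missing_files(paths, root="."):
    """Return the entries of `paths` that are not existing files under `root`."""
    return [p for p in paths if not os.path.isfile(os.path.join(root, p))]


if __name__ == "__main__":
    absent = missing_files(REQUIRED_FILES)
    if absent:
        print("Missing required files:", ", ".join(absent))
```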

Hello, after first training and testing the project on the MS COCO dataset, I wanted to train it on another dataset. I adapted the captions to the Flickr format and made a .token file from them; there is only one sentence per image. After training, I wanted to test the system, but found that the output contains only "UNK" words. After some initial debugging I was unable to locate the source of the error. The captions do contain words the model does not recognize, but these are isolated words and do not make up most of the words. The screenshot shows the test mode. I added several debug outputs, which show that words are passed into the method IDs_to_Words and that the sentence is then truncated at the first occurrence of the end token </S>. Has anyone had the same problem, or does anyone know what the cause could be?

Great work! The model is fantastic! However, I want to train it on a very large dataset, and I have to stop training in order to test the model (part of an experiment). I find that I cannot resume training from a saved tf.checkpoint; is this something I have to implement myself, or...?

jishan@jishan-HP-ProBook-450-G3:~/Downloads/fy$ python convfeatures.py --data_path Dataset/flickr30k-images --inception_path ConvNets/inception_v4.pb

Extracting Features

#Images: 3

2018-04-14 01:05:43.501841: I tensorflow/core/platform/cpu_feature_guard.cc:137] Your CPU supports instructions that this TensorFlow binary was not compiled to use: SSE4.1 SSE4.2 AVX AVX2 FMA

Traceback (most recent call last):

File "convfeatures.py", line 122, in <module>

forward_pass(io, args.data_path)

File "convfeatures.py", line 80, in forward_pass

print "Progress:" + str(((n_batch) / float(n_batch) * 100)) + "%\n"

ZeroDivisionError: float division by zero

How can I solve this problem? I ran into it while trying to generate the features.npy file. Please help me. Thanks in advance.
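In the failing line, both the numerator and the denominator are `n_batch`. The run also found only 3 images (`#Images: 3`). If `n_batch` is computed by integer division of the image count by a batch size larger than 3, it comes out as 0, which would explain the division by zero. That is an inference from the traceback, not a confirmed diagnosis.

Below is a minimal sketch of a progress calculation that counts a final partial batch and guards the empty case. `num_batches` and `progress_percent` are hypothetical helpers, not functions from convfeatures.py.

```python
import math


def num_batches(n_images, batch_size):
    """Number of batches needed to cover every image, including a final partial batch."""
    return int(math.ceil(n_images / float(batch_size)))


def progress_percent(batches_done, total_batches):
    """Percentage of batches processed; reports 100.0 when there is nothing to process."""
    if total_batches == 0:
        return 100.0
    return batches_done / float(total_batches) * 100


total = num_batches(3, 10)  # 3 images with a batch size of 10 -> 1 partial batch
for i in range(total):
    print("Progress: %.1f%%" % progress_percent(i + 1, total))
```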

I noticed that there are two different BLEU metrics in your eval.py.

It's thoughtful of you to include both of them.

But which one is the metric used in currently published papers, such as "Show, Attend and Tell: Neural Image Caption Generation with Visual Attention" (K. Xu et al., 2015)?

Thanks for your answers.
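For context on the question above, BLEU can be aggregated in two common ways, and they generally give different numbers:

- The original definition (Papineni et al., 2002) is corpus-level. It pools clipped n-gram counts and lengths over the whole test set before combining them.
- The other variant computes BLEU per sentence and averages the scores.

This page does not say which variant each function in eval.py computes, or which one a given paper reports. The sketch below contrasts the two using uniform 4-gram weights, no smoothing, and a closest-reference-length brevity penalty. All function names are hypothetical, and this is not the project's eval.py.

```python
import math
from collections import Counter


def _ngrams(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def _stats(hyp, refs, max_n=4):
    """Clipped n-gram matches, hypothesis n-gram totals, and lengths for one sentence."""
    matches, totals = [], []
    for n in range(1, max_n + 1):
        max_ref = Counter()
        for ref in refs:
            for gram, count in _ngrams(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        hyp_counts = _ngrams(hyp, n)
        matches.append(sum(min(c, max_ref[g]) for g, c in hyp_counts.items()))
        totals.append(max(len(hyp) - n + 1, 0))
    # Effective reference length: the reference closest in length (shorter on ties).
    ref_len = min((abs(len(r) - len(hyp)), len(r)) for r in refs)[1]
    return matches, totals, len(hyp), ref_len


def _score(matches, totals, hyp_len, ref_len):
    if min(matches) == 0:  # no smoothing: any zero n-gram precision gives 0
        return 0.0
    log_p = sum(math.log(m / float(t)) for m, t in zip(matches, totals)) / len(matches)
    bp = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / float(hyp_len))
    return bp * math.exp(log_p)


def corpus_bleu(list_of_refs, hyps, max_n=4):
    """Pool statistics over all sentences, then compute BLEU once."""
    m, t, h, r = [0] * max_n, [0] * max_n, 0, 0
    for refs, hyp in zip(list_of_refs, hyps):
        sm, st, sh, sr = _stats(hyp, refs, max_n)
        m = [a + b for a, b in zip(m, sm)]
        t = [a + b for a, b in zip(t, st)]
        h, r = h + sh, r + sr
    return _score(m, t, h, r)


def mean_sentence_bleu(list_of_refs, hyps, max_n=4):
    """Compute BLEU for each sentence separately, then average the scores."""
    scores = [_score(*_stats(hyp, refs, max_n)) for refs, hyp in zip(list_of_refs, hyps)]
    return sum(scores) / len(scores)


refs = [[["a", "b", "c", "d"]], [["a", "b", "c", "d"]]]
hyps = [["a", "b", "c", "d"], ["a", "x", "y", "z"]]
print(mean_sentence_bleu(refs, hyps), corpus_bleu(refs, hyps))
```

The example pairs one exact match with one caption that shares a single word with its reference. The averaged sentence-level score is 0.5, while the corpus-level score is about 0.53. The corpus score is higher because it pools the partial n-gram matches before taking the geometric mean.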

I want to port your model to TensorFlow.js.

I downloaded the model provided in the Cam2Caption project.

I installed tensorflowjs for Python and tried to convert the frozen model to a web model with:

$ tensorflowjs_converter \

--input_format=tf_frozen_model \

--output_node_names='' \

--saved_model_tags=serve \

/merged_frozen_graph.pb \

/web_model

But I don't know what to use for the output_node_names parameter.

According to the docs:

Can you please tell me what the value of output_node_names should be for your model?

Hello all,

When I try to run this project in test mode, it always shows this error:

root@jishan-HP-ProBook-450-G3:/home/jishan/Downloads/project# python main.py --mode test

Traceback (most recent call last):

File "main.py", line 64, in <module>

if os.path.exists(args.image_path):

File "/usr/lib/python2.7/genericpath.py", line 26, in exists

os.stat(path)

TypeError: coercing to Unicode: need string or buffer, NoneType found

I have already run `python convfeatures.py` and `python main.py --mode train`,

but when I run main.py in test mode, it shows this error. How can I fix it? Please help me.

Thanks in advance.
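The traceback shows `os.path.exists(args.image_path)` receiving `None`. That is what happens when test mode is started without the `--image_path` flag, which is used earlier on this page as `python main.py --mode test --image_path Path_to_image`.

Below is a minimal sketch of validating the flag up front, so the script exits with a readable message instead of a `TypeError`. The parser is a hypothetical stand-in for the one in main.py and declares only the two flags discussed here.

```python
import argparse
import os


def parse_args(argv=None):
    """Hypothetical stand-in for main.py's argument parsing (only two flags shown)."""
    parser = argparse.ArgumentParser()
    parser.add_argument("--mode", default="train")
    parser.add_argument("--image_path", default=None)
    args = parser.parse_args(argv)
    # Test mode needs an image: exit with a clear message instead of the
    # TypeError that os.path.exists(None) raises under Python 2.
    if args.mode == "test":
        if args.image_path is None:
            parser.error("--image_path is required with --mode test")
        if not os.path.exists(args.image_path):
            parser.error("image not found: %s" % args.image_path)
    return args
```

`parser.error` prints the usage line and exits with status 2, so a missing flag is reported before any model loading starts.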

After training the image captioning model with main.py,

the following files were generated:

model.ckpt-42903.data-00000-of-00001

model.ckpt-42903.index

model.ckpt-42903.meta

How do I generate merged_frozen_graph_FILE.pb from them?

Any help would be appreciated.

I am getting this error while running Serve-DualProtoBuf.py

C:\E\Ai\story image\image-caption-generator>python utils\Serve-DualProtoBuf.py

C:\ProgramData\Anaconda3\lib\site-packages\h5py\__init__.py:36: FutureWarning: Conversion of the second argument of issubdtype from `float` to `np.floating` is deprecated. In future, it will be treated as `np.float64 == np.dtype(float).type`.

from ._conv import register_converters as _register_converters

WARNING:tensorflow:From utils\Serve-DualProtoBuf.py:26: calling import_graph_def (from tensorflow.python.framework.importer) with op_dict is deprecated and will be removed in a future version.

Instructions for updating:

Please file an issue at https://github.com/tensorflow/tensorflow/issues if you depend on this feature.

2018-09-29 22:55:00.913076: I T:\src\github\tensorflow\tensorflow\core\platform\cpu_feature_guard.cc:140] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2

Traceback (most recent call last):

File "utils\Serve-DualProtoBuf.py", line 55, in <module>

features = encoder_forward_pass(sess, "s2.jpg")

File "utils\Serve-DualProtoBuf.py", line 35, in encoder_forward_pass

in1 = graph.get_tensor_by_name("encoder/InputImage:0")

File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\framework\ops.py", line 3766, in get_tensor_by_name

return self.as_graph_element(name, allow_tensor=True, allow_operation=False)

File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\framework\ops.py", line 3590, in as_graph_element

return self._as_graph_element_locked(obj, allow_tensor, allow_operation)

File "C:\ProgramData\Anaconda3\lib\site-packages\tensorflow\python\framework\ops.py", line 3632, in _as_graph_element_locked

"graph." % (repr(name), repr(op_name)))

KeyError: "The name 'encoder/InputImage:0' refers to a Tensor which does not exist. The operation, 'encoder/InputImage', does not exist in the graph."

Hello, I am using the COCO dataset

with a two-layer LSTM model: one layer for top-down attention and one layer for the language model.

I extract words with jieba.

I used every word that occurs more than 3 times in the image descriptions as the vocabulary file, 14,226 words in total.

`words = [w for w in word_freq.keys() if word_freq[w] > 3]`
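The frequency rule quoted above can be written as a self-contained function with `collections.Counter`. This is a hypothetical sketch (`build_vocab` and `threshold` are illustrative names). It keeps words seen more than `threshold` times and orders them by frequency so the ids are stable. It reproduces only the vocabulary step and is not, by itself, a fix for the repeated words described below.

```python
from collections import Counter


def build_vocab(tokenized_captions, threshold=3):
    """Return words that occur more than `threshold` times, most frequent first.

    Ties are broken alphabetically so the word -> id mapping is reproducible.
    """
    word_freq = Counter(w for caption in tokenized_captions for w in caption)
    words = [w for w, c in word_freq.items() if c > threshold]
    words.sort(key=lambda w: (-word_freq[w], w))
    return words
```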

After training, when I use the model, several words of the same kind appear repeatedly in the output, for example:

Note notebook laptop computer on bed

A little girl little girl girl standing together

How can I solve this problem?

Finally, this could also break intended behaviour in some places so:

Traceback (most recent call last):

File "convfeatures.py", line 122, in <module>

forward_pass(io, args.data_path)

File "convfeatures.py", line 62, in forward_pass

batch = batch_iter.next()

File "convfeatures.py", line 49, in load_next_batch

batch = batch.reshape(batch_size, 299, 299, 3)

ValueError: cannot reshape array of size 1 into shape (10,299,299,3)
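The reshape target `(10, 299, 299, 3)` needs 10 × 299 × 299 × 3 = 2,682,030 values, but the array holds only one. That suggests the batch contained no decoded images at all, for example because of a wrong `--data_path`; this is an inference. A fixed batch size also breaks on the last batch whenever the image count is not a multiple of it.

Below is a minimal sketch of batching that yields the final partial batch, so the reshape can use the actual batch length. `iter_batches` and `load_images` are hypothetical names.

```python
def iter_batches(items, batch_size):
    """Yield consecutive slices of `items`; the last slice may be shorter than batch_size."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


# Hypothetical usage inside a feature extractor (load_images is not a real helper):
#   for batch_files in iter_batches(files, 10):
#       batch = load_images(batch_files)
#       batch = batch.reshape(len(batch_files), 299, 299, 3)
```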

Required Files not present. Regenerating Data.

Loading Caption Data Dataset/results_20130124.token

Preprocessing Captions

Traceback (most recent call last):

File "main.py", line 56, in <module>

config.word_threshold, config.max_len, caption_file, feature_file)

File "/media/piyush/2EF68783F6874A53/ImageCap/image-caption-generator-master/utils/data_util.py", line 117, in generate_captions

df = preprocess_captions(filenames, captions)

File "/media/piyush/2EF68783F6874A53/ImageCap/image-caption-generator-master/utils/data_util.py", line 20, in preprocess_captions

df['caption'] = df['caption'].apply(word_tokenize).apply(

File "/home/piyush/p3/lib/python3.5/site-packages/pandas/core/series.py", line 2551, in apply

mapped = lib.map_infer(values, f, convert=convert_dtype)

File "pandas/_libs/src/inference.pyx", line 1521, in pandas._libs.lib.map_infer

File "/home/piyush/p3/lib/python3.5/site-packages/nltk/tokenize/__init__.py", line 128, in word_tokenize

sentences = [text] if preserve_line else sent_tokenize(text, language)

File "/home/piyush/p3/lib/python3.5/site-packages/nltk/tokenize/__init__.py", line 95, in sent_tokenize

return tokenizer.tokenize(text)

File "/home/piyush/p3/lib/python3.5/site-packages/nltk/tokenize/punkt.py", line 1237, in tokenize

return list(self.sentences_from_text(text, realign_boundaries))

File "/home/piyush/p3/lib/python3.5/site-packages/nltk/tokenize/punkt.py", line 1285, in sentences_from_text

return [text[s:e] for s, e in self.span_tokenize(text, realign_boundaries)]

File "/home/piyush/p3/lib/python3.5/site-packages/nltk/tokenize/punkt.py", line 1276, in span_tokenize

return [(sl.start, sl.stop) for sl in slices]

File "/home/piyush/p3/lib/python3.5/site-packages/nltk/tokenize/punkt.py", line 1276, in <listcomp>

return [(sl.start, sl.stop) for sl in slices]

File "/home/piyush/p3/lib/python3.5/site-packages/nltk/tokenize/punkt.py", line 1316, in _realign_boundaries

for sl1, sl2 in _pair_iter(slices):

File "/home/piyush/p3/lib/python3.5/site-packages/nltk/tokenize/punkt.py", line 312, in _pair_iter

prev = next(it)

File "/home/piyush/p3/lib/python3.5/site-packages/nltk/tokenize/punkt.py", line 1289, in _slices_from_text

for match in self._lang_vars.period_context_re().finditer(text):

TypeError: expected string or bytes-like object
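In this traceback, NLTK's `word_tokenize` receives something that is not a string. A likely cause, which the log does not confirm, is a caption cell that pandas filled with `NaN` (a float). That can happen, for example, when a line in the .token file has no caption text.

Below is a minimal Python 3 sketch that drops such rows before tokenizing and reports how many were removed. `clean_captions` is a hypothetical helper that could be applied to the filename and caption lists before `preprocess_captions` runs.

```python
def clean_captions(filenames, captions):
    """Keep (filename, caption) pairs whose caption is a non-empty string.

    Non-string values such as float('nan'), which pandas typically uses for a
    missing cell, and whitespace-only captions are dropped. Assumes both lists
    have the same length.
    """
    kept = [(f, c) for f, c in zip(filenames, captions)
            if isinstance(c, str) and c.strip()]
    return kept, len(filenames) - len(kept)
```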

Hello all,

When I try to run this project, it always shows this error:

jishan@jishan-HP-ProBook-450-G3:~/Downloads/project$ python main.py --mode train

Required Files not present. Regenerating Data.

Loading Caption Data Dataset/results_20130124.token

Traceback (most recent call last):

File "main.py", line 55, in <module>

config.word_threshold, config.max_len, caption_file, feature_file)

File "/home/jishan/Downloads/project/utils/data_util.py", line 116, in generate_captions

captions = [caps.replace('\n', '').split('\t')[1] for caps in data]

IndexError: list index out of range

I have already run `python convfeatures.py`, but when I run main.py it shows this error. How can I fix it? Please help me.

Thanks in advance.
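`split('\t')[1]` raises `IndexError` whenever a line has no tab character. Two common cases are a blank trailing line and a caption file that separates fields with spaces. This sketch assumes the Flickr30k layout, where each line is `image.jpg#k`, then a tab, then the caption.

It is a tolerant parser that skips blank lines and reports malformed ones by line number. `parse_token_lines` is a hypothetical helper, not the project's data_util.py.

```python
def parse_token_lines(lines):
    """Parse 'image.jpg#k<TAB>caption' lines.

    Returns (pairs, bad_line_numbers), where pairs are (image_name, caption).
    """
    pairs, bad = [], []
    for lineno, line in enumerate(lines, 1):
        line = line.rstrip("\r\n")
        if not line.strip():
            continue  # blank lines (e.g. at end of file) are skipped, not errors
        parts = line.split("\t", 1)
        if len(parts) != 2:
            bad.append(lineno)  # no tab separator: this is what causes the IndexError
            continue
        image_id, caption = parts
        pairs.append((image_id.split("#")[0], caption))  # drop the '#k' caption index
    return pairs, bad
```

Printing the returned line numbers makes it easy to find lines that need fixing in a custom dataset's .token file.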

Looking for contributors to help with major code cleanup and refactoring based on PEP-8 style guidelines.

When the trained model is frozen and installed on Android, a random, arbitrary sequence of words is generated instead of correct captions.

Here is a link to a screenshot of the output: https://drive.google.com/file/d/1xKc9xgci9yPC1kKpvyprys6lS2GToUeY/view?usp=drivesdk

When the project is run on a laptop, it works fine.

The model is trained for 50 epochs.

However, when the frozen model provided by the author at https://github.com/neural-nuts/Cam2Caption is used, captions are generated correctly,

e.g. "A laptop sitting on the desk".

Thank you for your help; now I can run the train and test examples.

Thank you very much.

While running convfeatures.py, I am getting this error and I don't understand what the issue is. A little help here, please:

usage: ipykernel_launcher.py [-h]

D:\New folder\image-caption-generator-master\Dataset\COCO-images

D:\New folder\image-caption-generator-master\ConvNets\inception_v4.pb

ipykernel_launcher.py: error: the following arguments are required: D:\New folder\image-caption-generator-master\ConvNets\inception_v4.pb
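The usage line lists the two paths as if they were argument names. That suggests they were pasted into `add_argument(...)` instead of being passed as values. Also, inside Jupyter, `sys.argv` belongs to the kernel (`ipykernel_launcher.py`) rather than to your script.

Below is a minimal sketch that keeps the option names and passes the values explicitly to `parse_args`. `--data_path` and `--inception_path` are the flags shown earlier on this page; `required=True` is an assumption.

```python
import argparse

parser = argparse.ArgumentParser()
# Keep the option names; the paths belong in the values, not in add_argument().
parser.add_argument("--data_path", required=True)
parser.add_argument("--inception_path", required=True)

# In a notebook, sys.argv holds the kernel's arguments, so pass an explicit list.
args = parser.parse_args([
    "--data_path", r"D:\New folder\image-caption-generator-master\Dataset\COCO-images",
    "--inception_path", r"D:\New folder\image-caption-generator-master\ConvNets\inception_v4.pb",
])
print(args.data_path)
print(args.inception_path)
```

From a terminal, the equivalent is the command shown earlier: `python convfeatures.py --data_path ... --inception_path ...`.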

If anyone has successfully trained a model on the datasets, it would be awesome to get a copy of your pretrained weights and vocab files. I'd love to try out the project without spending hours or days training the model.

If no one has weights lying around, I might be able to take this on.

The checkpoints reach 32180 and stop there.

#Images:

Traceback (most recent call last):

File "convfeatures.py", line 136, in <module>

print "#Images:", len(files)

NameError: name 'files' is not defined

I don't know how to fix this error. `files` is already defined above, so why does it give me this error? I have already downloaded flickr30k_images and results_20130124.token into the Dataset folder.
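A `NameError` at module level usually means `files` was assigned only inside a function, or inside a branch that did not run. In that case the name does not exist where the `print` executes. Without the full script this is a guess.

Below is a minimal sketch that builds the file list in one function, returns it, and fails loudly when the folder is missing or contains no images. `list_images` and `IMAGE_EXTS` are hypothetical names.

```python
import os

IMAGE_EXTS = (".jpg", ".jpeg", ".png")  # assumed set of accepted extensions


def list_images(data_path):
    """Return sorted image paths inside data_path; raise if there are none."""
    if not os.path.isdir(data_path):
        raise IOError("data_path is not a directory: %r" % data_path)
    names = sorted(f for f in os.listdir(data_path) if f.lower().endswith(IMAGE_EXTS))
    if not names:
        raise IOError("no images found in %r" % data_path)
    return [os.path.join(data_path, f) for f in names]
```

At module level, you could then write `files = list_images(args.data_path)` followed by `print("#Images:", len(files))`, and `files` would always be defined. That `print(...)` form also runs under Python 3, where the `print "#Images:", ...` statement is a syntax error; Python 2 prints it as a tuple.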

How can I plot error versus epoch in this project? Has this feature already been added to the code? If not, how can I produce this graph?

Another question is: which GPU did you use for training?

Thanks in advance.
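This page does not say whether the project already logs losses. If it does not, one low-tech approach is to append the loss to a CSV file once per epoch and plot it afterwards. TensorFlow's `tf.summary.scalar` with TensorBoard is another common route.

Below is a minimal Python 3 sketch. `append_epoch_loss` and `read_epoch_losses` are hypothetical helpers, and where to call them in the training loop is up to you.

```python
import csv


def append_epoch_loss(csv_path, epoch, loss):
    """Append one (epoch, loss) row; intended to be called once per epoch."""
    with open(csv_path, "a", newline="") as f:
        csv.writer(f).writerow([epoch, loss])


def read_epoch_losses(csv_path):
    """Return the logged rows as (epoch, loss) tuples, e.g. for plotting."""
    with open(csv_path, newline="") as f:
        return [(int(epoch), float(loss)) for epoch, loss in csv.reader(f)]
```

To draw the curve, you could run `epochs, losses = zip(*read_epoch_losses("loss.csv"))` and then call matplotlib's `plt.plot(epochs, losses)`. The file name `loss.csv` is illustrative.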

(cnn) C:\Users\lenovo\Desktop\Image_Captioning\image-caption-generator>python convfeatures.py --data_path Dataset/flickr30k-images --inception_path ConvNets/inception_v4.pb

File "convfeatures.py", line 55

print "#Images:", len(files)

^

SyntaxError: invalid syntax

For the MS COCO dataset, which images should I download: the 2014 Train images [83K/13GB] or the 2014 Val images [41K/6GB]?

Where can we obtain this file? It is not included in the Flickr30K dataset.

http://shannon.cs.illinois.edu/DenotationGraph/data/index.html

01-11 19:30:21.051 29425-29432/? E/art: Failed sending reply to debugger: Broken pipe

01-11 19:30:21.225 29425-29425/? W/art: Throwing OutOfMemoryError "Failed to allocate a 198571917 byte allocation with 3779736 free bytes and 186MB until OOM"

01-11 19:30:21.284 29425-29425/? W/art: Throwing OutOfMemoryError "Failed to allocate a 198571917 byte allocation with 3781696 free bytes and 186MB until OOM"

01-11 19:30:21.342 29425-29425/? E/AndroidRuntime: FATAL EXCEPTION: main

Process: com.example.android.camera2basic, PID: 29425

java.lang.OutOfMemoryError: Failed to allocate a 198571917 byte allocation with 3781696 free bytes and 186MB until OOM

at org.tensorflow.contrib.android.TensorFlowInferenceInterface.load(TensorFlowInferenceInterface.java:401)

at org.tensorflow.contrib.android.TensorFlowInferenceInterface.initializeTensorFlow(TensorFlowInferenceInterface.java:95)

at com.example.android.camera2basic.Camera2BasicFragment.InitSession(Camera2BasicFragment.java:834)

at com.example.android.camera2basic.Camera2BasicFragment.onViewCreated(Camera2BasicFragment.java:808)

at android.app.FragmentManagerImpl.moveToState(FragmentManager.java:1010)

at android.app.FragmentManagerImpl.moveToState(FragmentManager.java:1171)

at android.app.BackStackRecord.run(BackStackRecord.java:815)

at android.app.FragmentManagerImpl.execPendingActions(FragmentManager.java:1580)

at android.app.FragmentController.execPendingActions(FragmentController.java:371)

at android.app.Activity.performStart(Activity.java:6688)

at android.app.ActivityThread.performLaunchActivity(ActivityThread.java:2622)

at android.app.ActivityThread.handleLaunchActivity(ActivityThread.java:2724)

at android.app.ActivityThread.-wrap12(ActivityThread.java)

at android.app.ActivityThread$H.handleMessage(ActivityThread.java:1473)

at android.os.Handler.dispatchMessage(Handler.java:102)

at android.os.Looper.loop(Looper.java:154)

at android.app.ActivityThread.main(ActivityThread.java:6123)

at java.lang.reflect.Method.invoke(Native Method)

at com.android.internal.os.ZygoteInit$MethodAndArgsCaller.run(ZygoteInit.java:867)

at com.android.internal.os.ZygoteInit.main(ZygoteInit.java:757)

python main.py --mode train

Required Files not present. Regenerating Data.

Loading Caption Data Dataset/results_20130124.token

Traceback (most recent call last):

File "main.py", line 55, in <module>

config.word_threshold, config.max_len, caption_file, feature_file)

File "/home/jishan/Desktop/project/utils/data_util.py", line 116, in generate_captions

captions = [caps.replace('\n', '').split('\t')[1] for caps in data]

IndexError: list index out of range

I just ran into this problem. I used a Bangla dataset and unfortunately got this error. Please help me. Thanks.

I want to convert my trained files (checkpoint, meta file, index file, and data file) into a frozen graph to port it to Android. When I execute save_graph.py, it asks for the correct output node names.

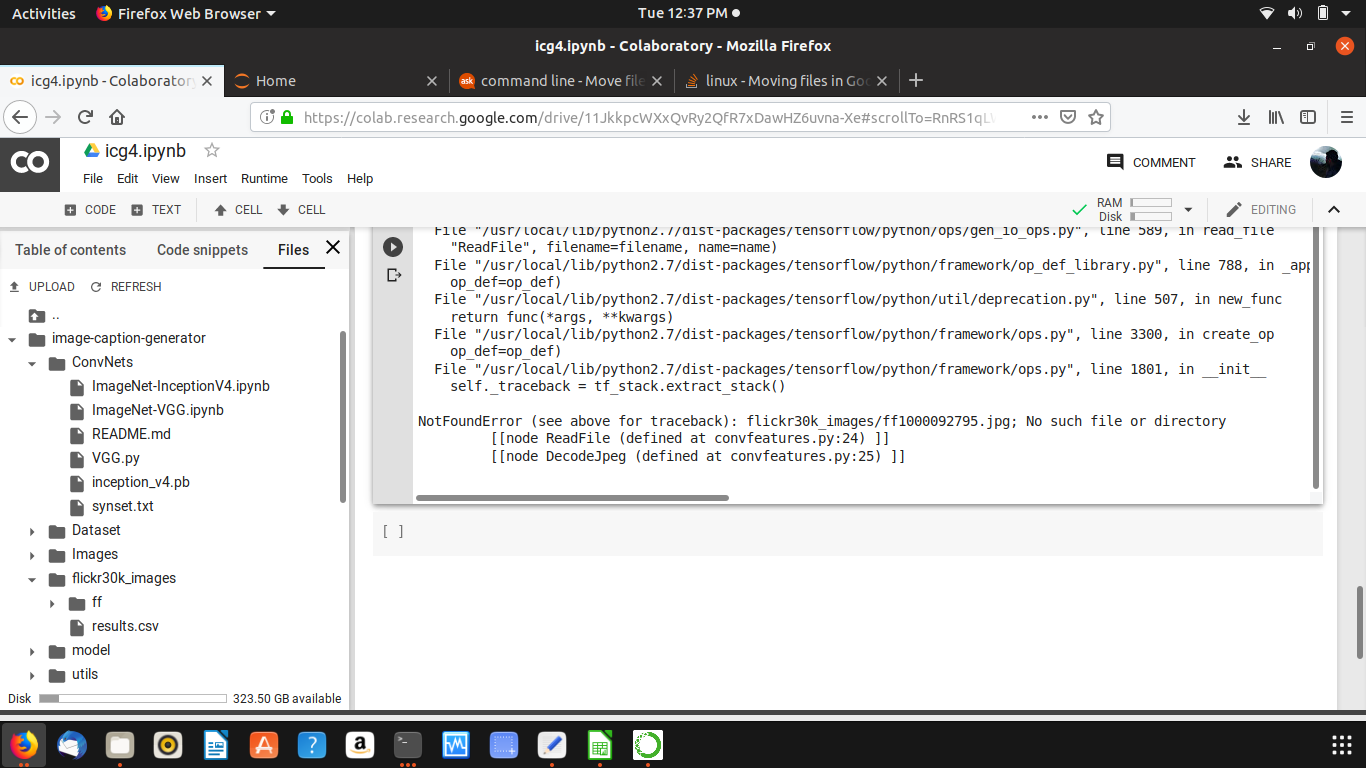

NotFoundError (see above for traceback): flickr30k_images/ff1000092795.jpg; No such file or directory

[[node ReadFile (defined at convfeatures.py:24) ]]

[[node DecodeJpeg (defined at convfeatures.py:25) ]]

Please help me with this issue, as I am building my college project with it. Also, a Python 3 migration would help a lot.

The value 10000 is very large. Why should we train on Flickr30k for so many epochs?

Also, I trained the graph for almost 200 epochs, but the results still do not look good:

a man in a black shirt and black pants is sitting on a bench .

a man is sitting on a bench with a red shirt and a black shirt .

a man is sitting on a bench with a man in a black shirt .

a man in a black shirt and black pants is sitting on a bench .

a man in a black shirt and a black shirt is sitting on a bench .

These outputs are nearly identical, even though they come from different images.

Any clue? I used one Titan X for training, following your instructions.

Thank you very much!

I am getting the following error when invoking main.py in train mode:

python main.py --mode train --resume 0

Required Files not present. Regenerating Data.

Loading Caption Data Dataset/results_20130124.token

Preprocessing Captions

Features Loaded Dataset/features.npy

Padding Caption to Max Length 20 + 2 forand

Generating Vocabulary

Generating Word to Index and Index to Word

Size of Vocabulary 12232

Preprocessing Complete

Converting Captions to IDs

Traceback (most recent call last):

File "main.py", line 59, in <module>

model = Caption_Generator(config, data=data)

File "/local/ampazis/image-caption-generator/caption_generator.py", line 70, in __init__

self.model()

File "/local/ampazis/image-caption-generator/caption_generator.py", line 152, in model

self.lstm_cell = tf.contrib.rnn.BasicLSTMCell(self.num_hidden)

File "/home/ampazis/anaconda2/lib/python2.7/site-packages/tensorflow/python/util/lazy_loader.py", line 53, in __getattr__

module = self._load()

File "/home/ampazis/anaconda2/lib/python2.7/site-packages/tensorflow/python/util/lazy_loader.py", line 42, in _load

module = importlib.import_module(self.name)

File "/home/ampazis/anaconda2/lib/python2.7/importlib/__init__.py", line 37, in import_module

__import__(name)

File "/home/ampazis/anaconda2/lib/python2.7/site-packages/tensorflow/contrib/__init__.py", line 31, in <module>

from tensorflow.contrib import factorization

File "/home/ampazis/anaconda2/lib/python2.7/site-packages/tensorflow/contrib/factorization/__init__.py", line 24, in <module>

from tensorflow.contrib.factorization.python.ops.gmm import *

File "/home/ampazis/anaconda2/lib/python2.7/site-packages/tensorflow/contrib/factorization/python/ops/gmm.py", line 27, in <module>

from tensorflow.contrib.learn.python.learn.estimators import estimator

File "/home/ampazis/anaconda2/lib/python2.7/site-packages/tensorflow/contrib/learn/__init__.py", line 88, in <module>

from tensorflow.contrib.learn.python.learn import *

File "/home/ampazis/anaconda2/lib/python2.7/site-packages/tensorflow/contrib/learn/python/__init__.py", line 23, in <module>

from tensorflow.contrib.learn.python.learn import *

File "/home/ampazis/anaconda2/lib/python2.7/site-packages/tensorflow/contrib/learn/python/learn/__init__.py", line 25, in <module>

from tensorflow.contrib.learn.python.learn import estimators

File "/home/ampazis/anaconda2/lib/python2.7/site-packages/tensorflow/contrib/learn/python/learn/estimators/__init__.py", line 297, in <module>

from tensorflow.contrib.learn.python.learn.estimators.dnn import DNNClassifier

File "/home/ampazis/anaconda2/lib/python2.7/site-packages/tensorflow/contrib/learn/python/learn/estimators/dnn.py", line 30, in <module>

from tensorflow.contrib.learn.python.learn.estimators import dnn_linear_combined

File "/home/ampazis/anaconda2/lib/python2.7/site-packages/tensorflow/contrib/learn/python/learn/estimators/dnn_linear_combined.py", line 31, in <module>

from tensorflow.contrib.learn.python.learn.estimators import estimator

File "/home/ampazis/anaconda2/lib/python2.7/site-packages/tensorflow/contrib/learn/python/learn/estimators/estimator.py", line 49, in <module>

from tensorflow.contrib.learn.python.learn.learn_io import data_feeder

File "/home/ampazis/anaconda2/lib/python2.7/site-packages/tensorflow/contrib/learn/python/learn/learn_io/__init__.py", line 21, in <module>

from tensorflow.contrib.learn.python.learn.learn_io.dask_io import extract_dask_data

File "/home/ampazis/anaconda2/lib/python2.7/site-packages/tensorflow/contrib/learn/python/learn/learn_io/dask_io.py", line 26, in <module>

import dask.dataframe as dd

File "/home/ampazis/anaconda2/lib/python2.7/site-packages/dask/dataframe/__init__.py", line 3, in <module>

from .core import (DataFrame, Series, Index, _Frame, map_partitions,

File "/home/ampazis/anaconda2/lib/python2.7/site-packages/dask/dataframe/core.py", line 38, in <module>

pd.computation.expressions.set_use_numexpr(False)

AttributeError: 'module' object has no attribute 'computation'

Is it related to the installed dask version?

Thanks

Hi, I want to replicate this cool project but am unsure how to install the dependencies. I assume this runs on Python 2.7? Do I have to install TensorFlow 1.0.0, or do later versions work as well? Is there a requirements.yml or .txt file I am missing? Any help would be greatly appreciated!
