Hi there! This guide is for you:

- You're new to Machine Learning.

- You know Python. (At least the basics! If you want to learn Python, try Dive Into Python.)

I learned Python by hacking first, and getting serious later. I wanted to do this with Machine Learning. If this is your style, join me in getting a bit ahead of yourself.

Note: There are several fields within "Data," and Machine Learning is just one. It's good to know the context: What is the difference between Data Analytics, Data Analysis, Data Mining, Data Science, Machine Learning, and Big Data?

I suggest you get your feet wet ASAP. You'll boost your confidence.

- Python. (I'm using 2.7.5) and pip, the Python package manager

- ipython and IPython Notebook.

pip install "ipython[notebook]" - Some scientific computing packages:

pip install scikit-learn pandas matplotlib numpy

If you're only using Python for scientific computing, you can grab these tools in one convenient package: Anaconda.

Learn how to use IPython Notebook (5-10 minutes). (You can learn by screencast instead.)

Now, follow along with this brief exercise (10 minutes): An introduction to machine learning with scikit-learn. Do it in ipython or IPython Notebook. It'll really boost your confidence.

I'll wait...

You just classified some hand-written digits using scikit-learn. Neat huh?

scikit-learn is the go-to library for machine learning in Python. Some recognizable logos use it, including Spotify and Evernote. Machine learning is complex. You'll be glad your tools are simple.

I encourage you to look at the scikit-learn homepage and spend about 5 minutes looking over the names of the strategies (Classification, Regression, etc.), and their applications. Don't click through yet! Just get a glimpse of the vocabulary.

Read A Few Useful Things to Know about Machine Learning by Pedro Domingos. It's densely packed with valuable information, but not opaque. The author understands that there's a lot of "black art" and folk wisdom, and they invite you in.

Take your time with this one. Take notes. Don't worry if you don't understand it all yet.

The whole paper is packed with value, but I want to call out two points:

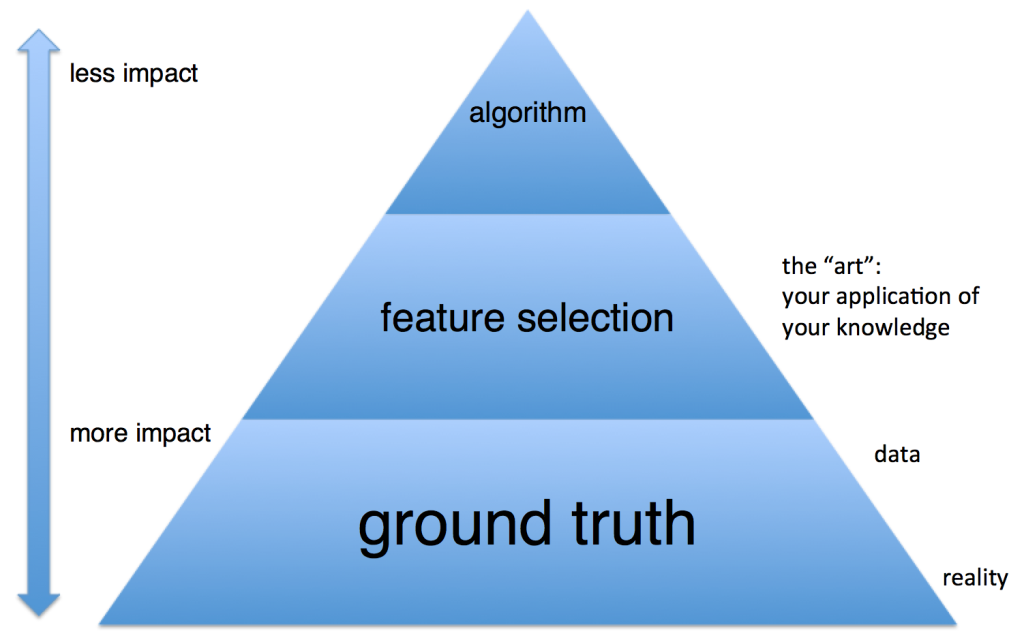

- Data alone is not enough. This is where science meets art in machine-learning. Quoting Domingos: "... the need for knowledge in learning should not be surprising. Machine learning is not magic; it can’t get something from nothing. What it does is get more from less. Programming, like all engineering, is a lot of work: we have to build everything from scratch. Learning is more like farming, which lets nature do most of the work. Farmers combine seeds with nutrients to grow crops. Learners combine knowledge with data to grow programs."

- More data beats a cleverer algorithm. Listen up, programmers. We like cool tools. Resist the temptation to reinvent the wheel, or to over-engineer solutions. Your starting point is to Do the Simplest Thing that Could Possibly Work. Quoting Domingos: "Suppose you’ve constructed the best set of features you can, but the classifiers you’re getting are still not accurate enough. What can you do now? There are two main choices: design a better learning algorithm, or gather more data. [...] As a rule of thumb, a dumb algorithm with lots and lots of data beats a clever one with modest amounts of it. (After all, machine learning is all about letting data do the heavy lifting.)"

So knowledge and data are critical. Focus your efforts on those, before fussing about algorithms. In practice, this means that unless you have to increase complexity, you should continue to Do Simple Things; don't rush to neural networks just because they're cool. To improve your model, get more data and use your knowledge of the problem to manipulate the data. You should spend most of your time on these steps. Only optimize your choice of algorithms after you've got enough data, and you've processed it well.

(The image above was inspired by a slide from Alex Pinto's talk, "Secure Because Math: A Deep-Dive on ML-Based Monitoring.)

Subscribe to Talking Machines, a podcast about machine learning. It's great. It's a low-effort, high-yield way to learn more.

I suggest this listening order:

- Start with the "Starting Simple" episode. It supports what we read from Domingos. Ryan Adams talks about starting simple, as discussed above. Adams also stresses the importance of feature engineering. Feature engineering is an exercise of the "knowledge" Domingos writes about.

- Then, start over from the first episode

Pick one of these IPython Notebooks and play along. (Or maybe two. But move onto your main course afterward!)

- Face Recognition on a subset of the Labeled Faces in the Wild

- Machine Learning from Disaster: Using Titanic data, "Demonstrates basic data munging, analysis, and visualization techniques. Shows examples of supervised machine learning techniques."

- Election Forecasting: A replication of the model Nate Silver used to make predictions about the 2012 US Presidential Election for the New York Times)

- ClickSecurity's "data hacking" series (thanks Aaron)

- Detect Algorithmically Generated Domains

- Detect SQL Injection

- Java Class File Analysis: is this Java code malicious or benign?

- If you want more of a DATA SCIENCE bent, pick a notebook from this excellent list of Data Science ipython notebooks. "Continually updated Data Science Python Notebooks: Spark, Hadoop MapReduce, HDFS, AWS, Kaggle, scikit-learn, matplotlib, pandas, NumPy, SciPy, and various command lines."

- Or more generic tutorials/overviews ...

There are more places to find great IPython Notebooks:

- A Gallery of Interesting IPython notebooks (wiki page on GitHub): Statistics, Machine Learning and Data Science

- Fabian Pedregosa's larger, automatic gallery

Prof. Andrew Ng (Stanford)'s online course Machine Learning is the free online course I see recommended the most.

It's helpful if you decide on a pet project to play around with, as you go, so you have a way to apply your knowledge. You could use one of these Awesome Public Datasets. And remember, IPython Notebook is your friend.

Also, the book Elements of Statistical Learning comes up frequently, but is usually referred to as a "reference" not an introduction. It's free, so download or bookmark it!

Here are some other free online courses I've seen recommended. (Machine Learning, Data Science, and related topics.)

- Machine Learning by Prof. Pedro Domingos of the University of Washington. Domingos wrote the paper "A Few Useful Things to Know About Machine Learning" quoted earlier in this guide. (Thanks to paperwork on HN.)

- Advanced Statistical Computing (Vanderbilt, BIOS8366) -- great option, highly interactive (lots of IPython Notebook material)

- Data Science (Harvard, CS109)

- Data Science (General Assembly)

- Data science courses as IPython Notebooks:

- Practical Data Science

- Learn Data Science (an entire self-directed course!)

- Supplementary material: donnemartin/data-science-ipython-notebooks. "Continually updated Data Science Python Notebooks: Spark, Hadoop MapReduce, HDFS, AWS, Kaggle, scikit-learn, matplotlib, pandas, NumPy, SciPy, and various command lines."

If you're focusing on Python, you should get more familiar with Pandas.

- Essential: 10 Minutes to Pandas

- Essential: Things in Pandas I Wish I'd Had Known Earlier (IPython Notebook)

- Useful Pandas Snippets

- Here are some docs I found especially helpful as I continued learning:

Bookmark these cheat sheets:

- scikit-learn algorithm cheat sheet

- Metacademy: a package manager for [machine learning] knowledge. A mind map of machine learning concepts, with great detail on each.

- An entire self-directed course in Data Science, as a IPython Notebook

- Extremely accessible data science book: Data Smart by John Foreman

- Data Science Workflow: Overview and Challenges (read the article & the comment by Joseph McCarthy)

- Fun little IPython Notebook: Web Scraping Indeed.com for Key Data Science Job Skills

Check out Gideon Wulfsohn's excellent introduction to Machine Learning for specialized knowledge on many topics... including Ensemble Methods, Apache Spark, Neural Networks, Reinforcement Learning, Natural Language Processing (RNN, LDA, Word2Vec), Structured Prediction, Deep Learning, Distributed Systems (Hadoop Ecosystem), Graphical Models (Hidden Markov Models), Hyper Parameter Optimization, GPU Acceleration (Theano), Computer Vision, Internet of Things, and Visualization.

Here's an IPython Notebook book about Probabilistic Programming and Bayesian Methods for Hackers: "An intro to Bayesian methods and probabilistic programming from a computation/understanding-first, mathematics-second point of view."

For now, the best StackExchange site is stats.stackexchange.com – machine-learning. (There's also datascience.stackexchange.com, but it's still in Beta.) And there's /r/machinelearning. There are also many relevant discussions on Quora, for example: What is the difference between Data Analytics, Data Analysis, Data Mining, Data Science, Machine Learning, and Big Data?

You should also join the Gitter channel for scikit-learn!

The rest of the stuff that might not be structured enough for a course, but seems important to know.

"Machine learning systems automatically learn programs from data." Pedro Domingos, in A Few Useful Things to Know about Machine Learning. The programs you generate will require maintenance. Like any way of creating programs faster, you can rack up technical debt.

Really essential:

- Surviving Data Science "at the Speed of Hype" by John Foreman, Data Scientist at MailChimp

- 11 Clever Methods of Overfitting and How to Avoid Them

A worthwhile paper: Machine Learning: The High-Interest Credit Card of Technical Debt. Here's the abstract:

Machine learning offers a fantastically powerful toolkit for building complex systems quickly. This paper argues that it is dangerous to think of these quick wins as coming for free. Using the framework of technical debt, we note that it is remarkably easy to incur massive ongoing maintenance costs at the system level when applying machine learning. The goal of this paper is highlight several machine learning specific risk factors and design patterns to be avoided or refactored where possible. These include boundary erosion, entanglement, hidden feedback loops, undeclared consumers, data dependencies, changes in the external world, and a variety of system-level anti-patterns.

And a few more articles:

- The Perilous World of Machine Learning for Fun and Profit: Pipeline Jungles and Hidden Feedback Loops

- The High Cost of Maintaining Machine Learning Systems

If you're using machine learning for unscientific reasons, like benefiting users ... as always you've got to keep your users in mind.

I have a friend who worked at <Redacted> Music Streaming Service. This company used machine learning in their recommendation and radio services. He complained about the way the company scored the radio feature's performance. There was disagreement about what should be scored. They used a metric, "no song skips." But why? Sure that indicates the recommendation wasn't awful, what if you want to measure engagement? Other metrics could measure positive engagement: "favorites," shares, listening time, or whether the listener returns to the radio station later. Measuring "no skips" might work for the passive listener, but the engaged listener is different. Perhaps the engaged listener will skip 5 songs, but find 20 songs they love and come back to the service later.

My takeaway: if you use machine learning to benefit your users, you must understand your users. You must understand which kind of user you're trying to benefit. Without the right measurement, you can't optimize your users' experiences.

There was a great BlackHat webcast on this topic, Secure Because Math: Understanding Machine Learning-Based Security Products. Slides are there, video recording is here. Equally relevant to InfoSec and AppSec.

Scaling data analysis is a familiar problem now, and there's no shortage of ways to address it. Beware needless hype and companies that want to sell you flashy, proprietary solutions. You can do it all with open-source tools. Even if you contract it, you consider looking for contractors who use known good stacks. No news here.

Here are some obvious tools to reach for:

- Apache Spark.

- NetflixOSS (see "Big Data Tools")

Also: 10 things statistics taught us about big data analysis

For Machine-Learning libraries that might not be on GitHub, there's MLOSS (Machine Learning Open Source Software). Seems to feature many academic libraries.

Kaggle has really exciting competitions and a data science job board.

Lastly, here are other guides to Machine Learning:

- Machine Learning for Developers is another good introduction. It introduces machine learning for a developer audience using Smile, a machine learning library that can be used both in Java and Scala.

- Materials for Learning Machine Learning by Jack Simpson

- How to Machine Learn by Gideon Wulfsohn

- Features links to find meetups about ML

- Features links to "deeper dive" specialized topics

- An example Machine Learning notebook: Provides an example of what a Python machine learning notebook would look like. Plenty of useful code snippets.

- [Your guide here]