NL2Bash

Overview

This repository contains the data and source code release of the paper: NL2Bash: A Corpus and Semantic Parser for Natural Language Interface to the Linux Operating System.

Specifically, it contains the following components:

- A set of ~10,000 Bash one-liners collected from websites such as StackOverflow, paired with English descriptions written by Bash programmers.

- TensorFlow implementations of the translation models evaluated in our experiments.

- A Bash command parser, developed on top of bashlex, which parses a Bash command into an abstract syntax tree.

- A set of domain-specific natural language processing tools, including a regex-based sentence tokenizer and a domain-specific named entity recognizer.

Data Statistics

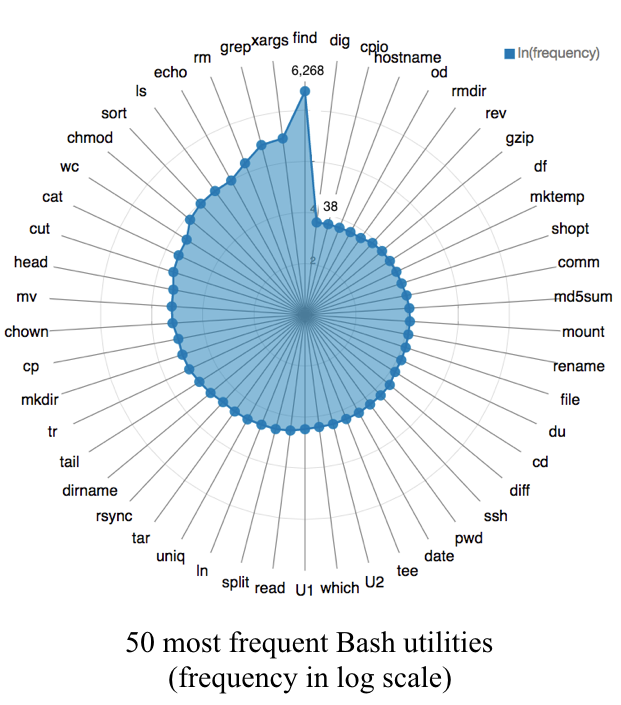

Our corpus contains a diverse set of Bash utilities and flags: 102 unique utilities, 206 unique flags and 15 reserved tokens. (Browse the raw data collection here.)

In our experiments, the set of ~10,000 NL-Bash command pairs is split into train, dev and test sets such that neither a natural language description nor a Bash command appears in more than one split.
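The constraint above (no description and no command shared between splits) can be met by grouping pairs that share either field and assigning whole groups to a split. The following is a minimal sketch of that idea, not the procedure used by `make data`; the function name `split_without_overlap`, the split ratios, and the seed are assumptions made for illustration.

```python
import random
from collections import defaultdict


def split_without_overlap(pairs, ratios=(0.8, 0.1, 0.1), seed=0):
    """Split (nl, cmd) pairs so that no NL string and no command string
    appears in more than one split (hypothetical helper, for illustration).

    Pairs that share a description or a command are merged into one group
    with union-find, and each group is assigned to a split as a whole.
    """
    parent = list(range(len(pairs)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    def union(i, j):
        ri, rj = find(i), find(j)
        if ri != rj:
            parent[rj] = ri

    # Link every pair to the first pair seen with the same NL or command.
    first_seen = {}
    for idx, (nl, cmd) in enumerate(pairs):
        for key in (("nl", nl), ("cmd", cmd)):
            if key in first_seen:
                union(first_seen[key], idx)
            else:
                first_seen[key] = idx

    groups = defaultdict(list)
    for idx, pair in enumerate(pairs):
        groups[find(idx)].append(pair)

    group_list = list(groups.values())
    random.Random(seed).shuffle(group_list)

    splits = ([], [], [])
    targets = [r * len(pairs) for r in ratios]
    for group in group_list:
        # Place the group in the split that is furthest below its target size.
        k = max(range(3), key=lambda s: targets[s] - len(splits[s]))
        splits[k].extend(group)
    return splits


if __name__ == "__main__":
    toy_pairs = [
        ("list files", "ls"),
        ("list files", "ls -l"),
        ("show files", "ls -l"),
        ("print working directory", "pwd"),
        ("show disk usage", "du -sh"),
    ]
    train, dev, test = split_without_overlap(toy_pairs)
    print(len(train), len(dev), len(test))
```

Because groups are kept intact, the resulting split sizes only approximate the requested ratios.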

The statistics of the data split are tabulated below. (A command template is defined as a Bash command with all of its arguments replaced by their semantic types.)

| Split | Train | Dev | Test |
|---|---|---|---|
| # pairs | 8,090 | 609 | 606 |
| # unique NL | 7,340 | 549 | XX |
| # unique command | 6,400 | 599 | XX |
| # unique command template | 4,002 | 509 | XX |
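To make the notion of a command template concrete, here is a rough sketch that keeps utility names, flags and operators and replaces every other token with a type placeholder. It is not the repository's Bash parser: the function names, the placeholder types (`Number`, `Pattern`, `Arg`) and the whitespace-separated-operator assumption are all hypothetical simplifications.

```python
import shlex

# Assumption: operators are separated from other tokens by whitespace.
OPERATORS = {"|", "||", "&&", ";"}


def guess_type(token):
    """Very coarse, hypothetical stand-in for a semantic type."""
    if token.lstrip("+-").isdigit():
        return "Number"
    if any(ch in token for ch in "*?["):
        return "Pattern"
    return "Arg"


def to_template(command):
    """Replace the arguments of a Bash one-liner with type placeholders."""
    template = []
    expect_utility = True  # the first token of each command is its utility
    for token in shlex.split(command):
        if token in OPERATORS:
            template.append(token)
            expect_utility = True
        elif expect_utility:
            template.append(token)
            expect_utility = False
        elif token.startswith("-") and not token[1:].isdigit():
            template.append(token)  # keep flags such as -name or -l
        else:
            template.append(guess_type(token))
    return " ".join(template)


if __name__ == "__main__":
    print(to_template("find . -name '*.txt' -mtime +7"))
    print(to_template("find /tmp -name '*.log' -mtime +30"))
```

Both example commands map to the same template, which is why the number of unique command templates in the table is smaller than the number of unique commands.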

The number of occurrences of each of the 50 most frequent Bash utilities in the corpus is illustrated in the diagram in this section.

Leaderboard

Manually Evaluated Translation Accuracy

The table below reports top-k full command accuracy (F-Acc) and top-k command template accuracy (T-Acc) as judged by human experts. Please refer to Section 4 of the paper for how the manual evaluation was conducted.

| Model | F-Acc-Top1 | F-Acc-Top3 | T-Acc-Top1 | T-Acc-Top3 |
|---|---|---|---|---|
| Sub-token CopyNet (this work) | 0.36 | 0.45 | 0.49 | 0.61 |
| Tellina (Lin et al. 2017) | 0.27 | 0.32 | 0.53 | 0.62 |
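As a reading aid for the Top1 and Top3 columns, the sketch below shows one common way to compute top-k accuracy from per-example correctness judgments: an example counts as correct if any of its first k ranked predictions was judged correct. The function name and the input layout (one list of booleans per test example) are assumptions; the evaluation protocol itself is described in the paper.

```python
def top_k_accuracy(judgments, k):
    """Fraction of examples with at least one correct prediction in the top k.

    `judgments` holds one list per test example; each inner list contains a
    boolean per ranked prediction (True if judged correct), best rank first.
    """
    if not judgments:
        raise ValueError("judgments must not be empty")
    hits = sum(1 for ranked in judgments if any(ranked[:k]))
    return hits / len(judgments)


if __name__ == "__main__":
    # Four toy examples with three ranked predictions each.
    toy = [
        [True, False, False],
        [False, False, True],
        [False, False, False],
        [False, True, False],
    ]
    print(top_k_accuracy(toy, 1))  # 0.25
    print(top_k_accuracy(toy, 3))  # 0.75
```

The same function applies to full-command and template judgments alike; only the judgments passed in differ.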

Automatic Evaluation Metrics

We also report BLEU and a self-defined template matching score (TM) as automatic evaluation metrics that approximate the true translation accuracy. Please refer to Appendix C of the paper for more details.

| Model | BLEU-Top1 | BLEU-Top3 | TM-Top1 | TM-Top3 |
|---|---|---|---|---|
| Sub-token CopyNet (this work) | 50.9 | 58.2 | 0.574 | 0.634 |
| Tellina (Lin et al. 2017) | 48.6 | 53.8 | 0.625 | 0.698 |

Run Experiments

Install TensorFlow

To reproduce our experiments, please install TensorFlow (>= 1.0). The experiments can be reproduced on machines with or without GPUs.

We suggest following the official instructions to install the library. The following pip installation command is copied from the official website and has been tested on Mac OS 10.10.5.

sudo pip3 install --upgrade https://storage.googleapis.com/tensorflow/mac/cpu/tensorflow-1.3.0-py3-none-any.whl

Environment Variables & Dependencies

Once TensorFlow is installed, run make in the root directory of the repo. This sets up the Python path and main experiment dependencies.

(sudo) make

Change Directory

Then enter the experiments directory.

cd experiments

Data filtering, split and pre-processing

Run the following command to clean and filter the raw NL2Bash corpus, create the train/dev/test splits, and preprocess the data into the formats expected by the TensorFlow models.

make data

To change the data-processing workflow, go to data and modify the utility scripts.

Train the models

make train

Generate evaluation table using pre-trained models

Decode the pre-trained models and print the evaluation summary table.

make decode

Skip the decoding step and print the evaluation summary table from the predictions saved on disk.

make gen_evaluation_table

By default, the decoding and evaluation steps print sanity-checking messages. To suppress them, set verbose to False in the following source files.

encoder_decoder/decode_tools.py

eval/eval_tools.py

Citation

If you use the data or source code in your work, please cite

@inproceedings{LinWZE2018:NL2Bash,
  author = {Xi Victoria Lin and Chenglong Wang and Luke Zettlemoyer and Michael D. Ernst},
  title = {NL2Bash: A Corpus and Semantic Parser for Natural Language Interface to the Linux Operating System},
  booktitle = {Proceedings of the Eleventh International Conference on Language Resources and Evaluation {LREC} 2018, Miyazaki (Japan), 7-12 May, 2018.},
  year = {2018}
}

Related paper: Lin et al. 2017. Program Synthesis from Natural Language Using Recurrent Neural Networks.