TAP: Text-Aware Pre-training for Text-VQA and Text-Caption

by Zhengyuan Yang, Yijuan Lu, Jianfeng Wang, Xi Yin, Dinei Florencio, Lijuan Wang, Cha Zhang, Lei Zhang, and Jiebo Luo

IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021, Oral

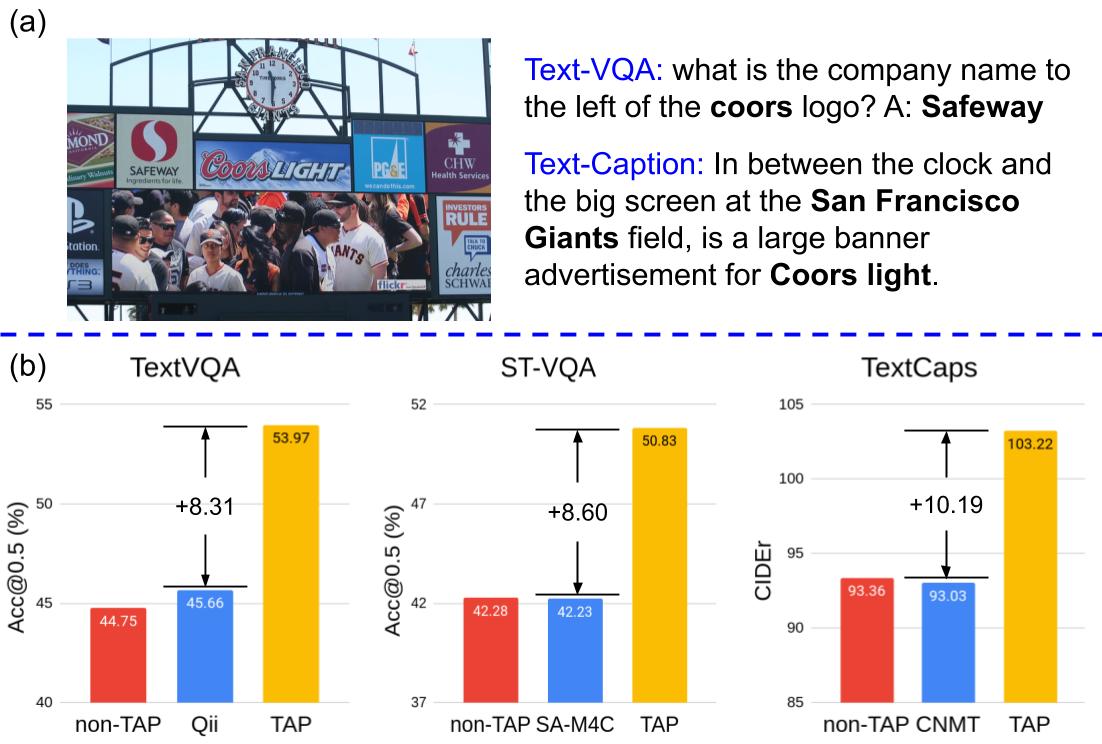

We propose Text-Aware Pre-training (TAP) for Text-VQA and Text-Caption tasks. For more details, please refer to our paper.

@inproceedings{yang2021tap,

title={TAP: Text-Aware Pre-training for Text-VQA and Text-Caption},

author={Yang, Zhengyuan and Lu, Yijuan and Wang, Jianfeng and Yin, Xi and Florencio, Dinei and Wang, Lijuan and Zhang, Cha and Zhang, Lei and Luo, Jiebo},

booktitle={CVPR},

year={2021}

}

-

Python 3.6

-

Pytorch 1.4.0

-

Please refer to

requirements.txt. Or usingpython setup.py develop

-

Clone the repository

git clone https://github.com/microsoft/TAP.git cd TAP python setup.py develop -

Data

- Please refer to the Readme in the

datafolder.

-

Train the model, run the code under main folder. Using flag

--pretrainto access the pre-training mode, otherwise the main QA/Captioning losses are used to optimize the model. Example yml files are inconfigsfolder. Detailed configs are in released models.Pre-training:

python -m torch.distributed.launch --nproc_per_node $num_gpu tools/run.py --pretrain --tasks vqa --datasets $dataset --model $model --seed $seed --config configs/vqa/$dataset/"$pretrain_yml".yml --save_dir save/$pretrain_savedir training_parameters.distributed True # for example python -m torch.distributed.launch --nproc_per_node 4 tools/run.py --pretrain --tasks vqa --datasets m4c_textvqa --model m4c_split --seed 13 --config configs/vqa/m4c_textvqa/tap_base_pretrain.yml --save_dir save/m4c_split_pretrain_test training_parameters.distributed TrueFine-tuning:

python -m torch.distributed.launch --nproc_per_node $num_gpu tools/run.py --tasks vqa --datasets $dataset --model $model --seed $seed --config configs/vqa/$dataset/"$refine_yml".yml --save_dir save/$refine_savedir --resume_file save/$pretrain_savedir/$savename/best.ckpt training_parameters.distributed True # for example python -m torch.distributed.launch --nproc_per_node 4 tools/run.py --tasks vqa --datasets m4c_textvqa --model m4c_split --seed 13 --config configs/vqa/m4c_textvqa/tap_refine.yml --save_dir save/m4c_split_refine_test --resume_file save/pretrained/textvqa_tap_base_pretrain.ckpt training_parameters.distributed True -

Evaluate the model, run the code under main folder. Set up val or test set by

--run_type.python -m torch.distributed.launch --nproc_per_node $num_gpu tools/run.py --tasks vqa --datasets $dataset --model $model --config configs/vqa/$dataset/"$refine_yml".yml --save_dir save/$refine_savedir --run_type val --resume_file save/$refine_savedir/$savename/best.ckpt training_parameters.distributed True # for example python -m torch.distributed.launch --nproc_per_node 4 tools/run.py --tasks vqa --datasets m4c_textvqa --model m4c_split --config configs/vqa/m4c_textvqa/tap_refine.yml --save_dir save/m4c_split_refine_test --run_type val --resume_file save/finetuned/textvqa_tap_base_best.ckpt training_parameters.distributed True -

Captioning evaluation.

python projects/M4C_Captioner/scripts/textcaps_eval.py --set val --pred_file YOUR_VAL_PREDICTION_FILE

Please check the detailed experiment settings in our paper.

path/to/azcopy copy https://tapvqacaption.blob.core.windows.net/data/save <local_path>/save --recursive

Please refer to the Readme in the data folder for the detailed instructions on azcopy downloading.

| Text-VQA | TAP | TAP** (with extra data) |

|---|---|---|

| TextVQA | 49.91 | 54.71 |

| STVQA | 45.29 | 50.83 |

| Text-Captioning | TAP | TAP** (with extra data) |

|---|---|---|

| TextCaps | 105.05 | 109.16 |

The project is built based on the following repository: