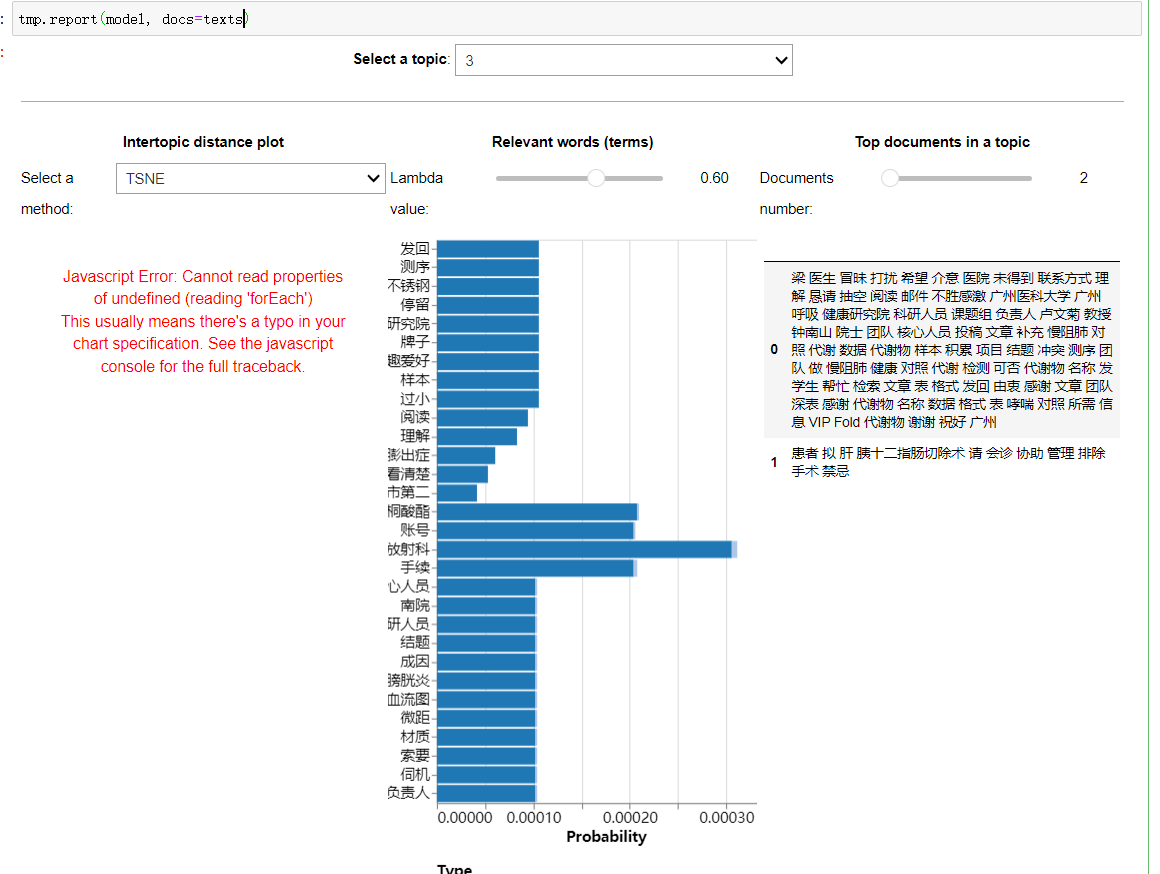

Running this produces the error below. The mistake may well be mine, but I can't see what causes it: the same steps worked previously on a different dataset.

I am using the latest release of tmplot.
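In case exact version numbers help, here is a minimal sketch for printing them from the same environment. The package list is only my guess at what is relevant, taken from the libraries that appear in the traceback, and `installed_versions` is a helper name I made up for this snippet.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Return {distribution name: version string, or None if not installed}."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            # The distribution is not installed in this environment.
            found[name] = None
    return found


# Assumed list of relevant distributions, based on the traceback frames.
for name, ver in installed_versions(
    ["tmplot", "scikit-learn", "threadpoolctl", "numpy"]
).items():
    print(f"{name}: {ver or 'not installed'}")
```

Running it in a notebook cell next to the failing call should report the versions that are actually being imported.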

Thank you for any help, and apologies in advance if I have made a mistake; this is my first issue on GitHub.

AttributeError Traceback (most recent call last)

Cell In[19], line 2

1 #Calculate coordinates

----> 2 topics_coords = tmp.prepare_coords(model)

File ~\Anaconda3\lib\site-packages\tmplot\_report.py:44, in prepare_coords(model, labels, dist_kws, scatter_kws)

42 theta = get_theta(model)

43 topics_dists = get_topics_dist(phi, **dist_kws)

---> 44 topics_coords = get_topics_scatter(topics_dists, theta, **scatter_kws)

45 topics_coords['label'] = labels or theta.index

46 return topics_coords

File ~\Anaconda3\lib\site-packages\tmplot\_distance.py:177, in get_topics_scatter(topic_dists, theta, method, method_kws)

174 elif method == 'isomap':

175 transformer = Isomap(**method_kws)

--> 177 coords = transformer.fit_transform(topic_dists)

179 topics_xy = DataFrame(coords, columns=['x', 'y'])

180 topics_xy['topic'] = topics_xy.index.astype(int)

File ~\Anaconda3\lib\site-packages\sklearn\manifold\_t_sne.py:1119, in TSNE.fit_transform(self, X, y)

1117 self._validate_params()

1118 self._check_params_vs_input(X)

-> 1119 embedding = self._fit(X)

1120 self.embedding_ = embedding

1121 return self.embedding_

File ~\Anaconda3\lib\site-packages\sklearn\manifold\_t_sne.py:963, in TSNE._fit(self, X, skip_num_points)

956 print(

957 "[t-SNE] Indexed {} samples in {:.3f}s...".format(

958 n_samples, duration

959 )

960 )

962 t0 = time()

--> 963 distances_nn = knn.kneighbors_graph(mode="distance")

964 duration = time() - t0

965 if self.verbose:

File ~\Anaconda3\lib\site-packages\sklearn\neighbors\_base.py:988, in KNeighborsMixin.kneighbors_graph(self, X, n_neighbors, mode)

985 A_data = np.ones(n_queries * n_neighbors)

987 elif mode == "distance":

--> 988 A_data, A_ind = self.kneighbors(X, n_neighbors, return_distance=True)

989 A_data = np.ravel(A_data)

991 else:

File ~\Anaconda3\lib\site-packages\sklearn\neighbors\_base.py:824, in KNeighborsMixin.kneighbors(self, X, n_neighbors, return_distance)

817 use_pairwise_distances_reductions = (

818 self._fit_method == "brute"

819 and ArgKmin.is_usable_for(

820 X if X is not None else self._fit_X, self._fit_X, self.effective_metric_

821 )

822 )

823 if use_pairwise_distances_reductions:

--> 824 results = ArgKmin.compute(

825 X=X,

826 Y=self._fit_X,

827 k=n_neighbors,

828 metric=self.effective_metric_,

829 metric_kwargs=self.effective_metric_params_,

830 strategy="auto",

831 return_distance=return_distance,

832 )

834 elif (

835 self._fit_method == "brute" and self.metric == "precomputed" and issparse(X)

836 ):

837 results = _kneighbors_from_graph(

838 X, n_neighbors=n_neighbors, return_distance=return_distance

839 )

File ~\Anaconda3\lib\site-packages\sklearn\metrics\_pairwise_distances_reduction\_dispatcher.py:277, in ArgKmin.compute(cls, X, Y, k, metric, chunk_size, metric_kwargs, strategy, return_distance)

196 """Compute the argkmin reduction.

197

198 Parameters

(...)

274 returns.

275 """

276 if X.dtype == Y.dtype == np.float64:

--> 277 return ArgKmin64.compute(

278 X=X,

279 Y=Y,

280 k=k,

281 metric=metric,

282 chunk_size=chunk_size,

283 metric_kwargs=metric_kwargs,

284 strategy=strategy,

285 return_distance=return_distance,

286 )

288 if X.dtype == Y.dtype == np.float32:

289 return ArgKmin32.compute(

290 X=X,

291 Y=Y,

(...)

297 return_distance=return_distance,

298 )

File sklearn\metrics\_pairwise_distances_reduction\_argkmin.pyx:95, in sklearn.metrics._pairwise_distances_reduction._argkmin.ArgKmin64.compute()

File ~\Anaconda3\lib\site-packages\sklearn\utils\fixes.py:139, in threadpool_limits(limits, user_api)

137 return controller.limit(limits=limits, user_api=user_api)

138 else:

--> 139 return threadpoolctl.threadpool_limits(limits=limits, user_api=user_api)

File ~\Anaconda3\lib\site-packages\threadpoolctl.py:171, in threadpool_limits.__init__(self, limits, user_api)

167 def __init__(self, limits=None, user_api=None):

168 self._limits, self._user_api, self._prefixes = \

169 self._check_params(limits, user_api)

--> 171 self._original_info = self._set_threadpool_limits()

File ~\Anaconda3\lib\site-packages\threadpoolctl.py:268, in threadpool_limits._set_threadpool_limits(self)

265 if self._limits is None:

266 return None

--> 268 modules = _ThreadpoolInfo(prefixes=self._prefixes,

269 user_api=self._user_api)

270 for module in modules:

271 # self._limits is a dict {key: num_threads} where key is either

272 # a prefix or a user_api. If a module matches both, the limit

273 # corresponding to the prefix is chosed.

274 if module.prefix in self._limits:

File ~\Anaconda3\lib\site-packages\threadpoolctl.py:340, in _ThreadpoolInfo.__init__(self, user_api, prefixes, modules)

337 self.user_api = [] if user_api is None else user_api

339 self.modules = []

--> 340 self._load_modules()

341 self._warn_if_incompatible_openmp()

342 else:

File ~\Anaconda3\lib\site-packages\threadpoolctl.py:373, in _ThreadpoolInfo._load_modules(self)

371 self._find_modules_with_dyld()

372 elif sys.platform == "win32":

--> 373 self._find_modules_with_enum_process_module_ex()

374 else:

375 self._find_modules_with_dl_iterate_phdr()

File ~\Anaconda3\lib\site-packages\threadpoolctl.py:485, in _ThreadpoolInfo._find_modules_with_enum_process_module_ex(self)

482 filepath = buf.value

484 # Store the module if it is supported and selected

--> 485 self._make_module_from_path(filepath)

486 finally:

487 kernel_32.CloseHandle(h_process)

File ~\Anaconda3\lib\site-packages\threadpoolctl.py:515, in _ThreadpoolInfo._make_module_from_path(self, filepath)

513 if prefix in self.prefixes or user_api in self.user_api:

514 module_class = globals()[module_class]

--> 515 module = module_class(filepath, prefix, user_api, internal_api)

516 self.modules.append(module)

File ~\Anaconda3\lib\site-packages\threadpoolctl.py:606, in _Module.__init__(self, filepath, prefix, user_api, internal_api)

604 self.internal_api = internal_api

605 self._dynlib = ctypes.CDLL(filepath, mode=_RTLD_NOLOAD)

--> 606 self.version = self.get_version()

607 self.num_threads = self.get_num_threads()

608 self._get_extra_info()

File ~\Anaconda3\lib\site-packages\threadpoolctl.py:646, in _OpenBLASModule.get_version(self)

643 get_config = getattr(self._dynlib, "openblas_get_config",

644 lambda: None)

645 get_config.restype = ctypes.c_char_p

--> 646 config = get_config().split()

647 if config[0] == b"OpenBLAS":

648 return config[1].decode("utf-8")

AttributeError: 'NoneType' object has no attribute 'split'
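Reading the last frame, the final error can be reproduced without tmplot or scikit-learn. This is only a sketch of one way that line can fail, not a diagnosis of my environment: `FakeLibrary` is a hypothetical stand-in for a loaded library that does not expose `openblas_get_config`, and the trailing `return None` is my simplification, since the traceback does not show what follows line 648.

```python
class FakeLibrary:
    """Hypothetical stand-in for a loaded DLL without openblas_get_config."""


def get_version(dynlib):
    # Same pattern as threadpoolctl.py lines 643-648 in the traceback:
    # if the attribute is missing, the fallback lambda returns None.
    get_config = getattr(dynlib, "openblas_get_config", lambda: None)
    config = get_config().split()  # None has no .split(), so this raises
    if config[0] == b"OpenBLAS":
        return config[1].decode("utf-8")
    return None  # simplification; not taken from the traceback


try:
    get_version(FakeLibrary())
except AttributeError as exc:
    message = str(exc)
    print(message)
```

If this is what happens, the failure would sit in how threadpoolctl inspects the loaded BLAS library rather than in the model or the dataset, which might explain why the same code worked before.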