Maggy is a framework for distribution-transparent machine learning experiments on Apache Spark. In this post, we introduce a new unified framework for writing core ML training logic as oblivious training functions. Maggy lets you reuse the same training code whether you are training small models on your laptop or scaling out hyperparameter tuning or distributed deep learning on a cluster. It aims to replace the current waterfall development process for distributed ML applications, in which code is rewritten at every stage to account for a different distribution context.

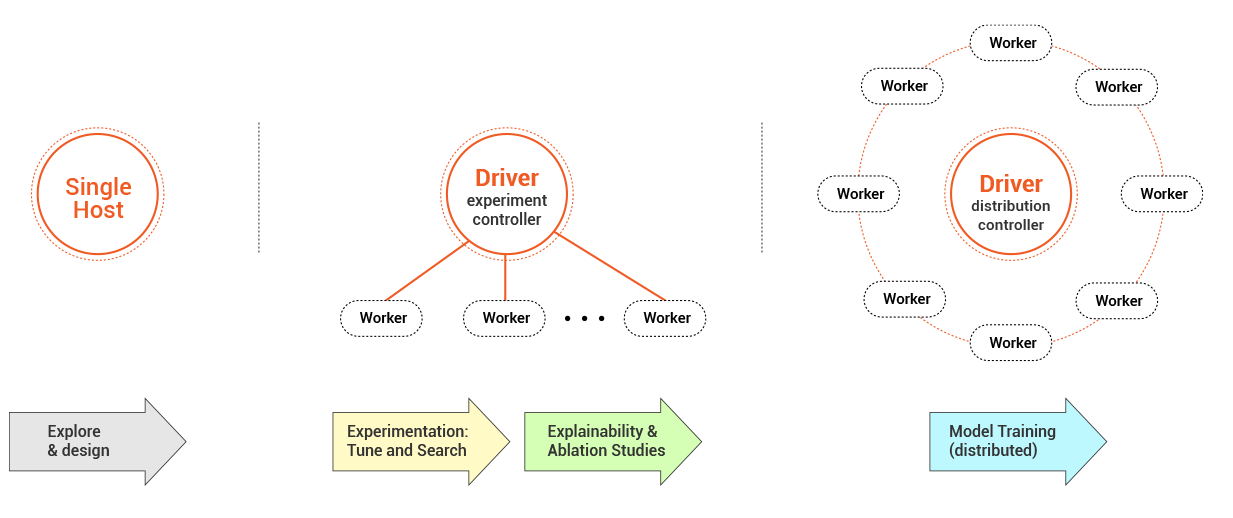

Maggy uses the same distribution-transparent training function in all steps of the machine learning development process.
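To make the idea of a distribution-transparent training function concrete, here is a minimal sketch in plain Python. It does not use Maggy or Spark: `run_single` and `run_sweep` are hypothetical drivers invented for this illustration, a thread pool stands in for a cluster, and the "model" is a toy quadratic. The point is only that `train_fn` itself is identical in both contexts.

```python
from concurrent.futures import ThreadPoolExecutor


def train_fn(lr=0.1, steps=50):
    """Toy 'training': minimise f(w) = (w - 3)^2 with gradient descent."""
    w = 0.0
    for _ in range(steps):
        grad = 2.0 * (w - 3.0)
        w -= lr * grad
    # Final loss is the metric we would want to optimise.
    return (w - 3.0) ** 2


def run_single(fn, **params):
    """Hypothetical driver: a single run in the current process."""
    return fn(**params)


def run_sweep(fn, learning_rates, workers=2):
    """Hypothetical driver: the same function, one trial per learning rate."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        losses = list(pool.map(lambda lr: fn(lr=lr), learning_rates))
    return dict(zip(learning_rates, losses))


# The training function is unchanged between the two contexts.
single_loss = run_single(train_fn, lr=0.1)
sweep = run_sweep(train_fn, [0.01, 0.1, 0.4])
best_lr = min(sweep, key=sweep.get)
```

Only the driver changes between the single run and the sweep. Maggy applies the same separation, with Spark rather than a local thread pool doing the distribution.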

Maggy uses PySpark as an engine to distribute the training processes. To get started, install Maggy in the Python environment used by your Spark cluster, or install it in your local Python environment with the 'spark' extra to run on Spark in local mode:

    pip install maggy

The programming model consists of wrapping the code containing the model training inside a function. Inside that wrapper function, provide all imports and parts that make up your experiment.

Single run experiment:

    def train_fn():
        # This is your training iteration loop
        for i in range(number_iterations):
            ...
            # add the maggy reporter to report the metric to be optimized
            reporter.broadcast(metric=accuracy)
            ...
        # Return metric to be optimized or any metric to be logged
        return accuracy

    from maggy import experiment

    result = experiment.lagom(train_fn=train_fn, name='MNIST')

lagom is a Swedish word meaning "just the right amount". This is how Maggy uses your resources.
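The snippet above is schematic, so the following self-contained sketch shows the same shape in runnable form without requiring Maggy or Spark. Everything in it is an assumption made for illustration: `Reporter` and `run_locally` are hypothetical stand-ins, not Maggy classes or functions, the reporter is passed in explicitly as an argument, and the "accuracy" is a toy formula rather than a real model metric.

```python
class Reporter:
    """Hypothetical stand-in that records every broadcast metric."""

    def __init__(self):
        self.history = []

    def broadcast(self, metric):
        self.history.append(metric)


def train_fn(reporter, number_iterations=5):
    # Imports the experiment needs live inside the function, so the
    # function is self-contained wherever it is executed.
    import math

    accuracy = 0.0
    for i in range(number_iterations):
        # Toy stand-in for a training step: accuracy saturates towards 1.
        accuracy = 1.0 - math.exp(-(i + 1))
        # Report the intermediate metric after every iteration.
        reporter.broadcast(metric=accuracy)
    # Return the metric to be optimized or logged.
    return accuracy


def run_locally(fn, name):
    """Hypothetical launcher: runs the function once in this process."""
    reporter = Reporter()
    final_metric = fn(reporter)
    return {"name": name, "metric": final_metric, "history": reporter.history}


result = run_locally(train_fn, name="toy")
```

Keeping the imports and the reporting calls inside the function means a launcher only needs the function object and a name. That is the property the wrapper-function programming model relies on.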

Full documentation is available at maggy.ai

There are various ways to contribute, and any contribution is welcome. Please follow the CONTRIBUTING guide to get started.

Issues can be reported on the official GitHub repo of Maggy.

Please see our publications on maggy.ai to find out how to cite our work.

The development of Maggy is supported by the EU H2020 Deep Cube Project (Grant agreement ID: 101004188).