kurin / blazer

A Go library for Backblaze's B2.

License: Other

Something that beats watching log output.

This won't always be possible, but we should do it where we can.

B2 doesn't store the complete file hash on large object uploads. You can add it yourself... if you know it when you BEGIN the transfer. Even though there's an HTTP request you need to make to FINISH the large transfer, there's no facility there to say "okay here's the SHA1 of the whole thing I sent you," presumably because they don't want to have to calculate it themselves to verify it.

Also, it'd only work when users are downloading an entire object. Which I suspect is the majority use case, but which is kind of unsatisfying.

Nobody's asked for this, but it's a thing, so why not.

I can't figure out how to (easily) find out whether a file already exists.

I tried:

    func BackblazeExists(b2Path string) (bool, error) {
        ctx := context.Background()
        bucket, err := getBucket(ctx)
        if err != nil {
            return false, err
        }
        obj := bucket.Object(b2Path)
        _, err = obj.Attrs(ctx)
        if err == nil {
            return true, nil
        }
        if b2.IsNotExist(err) {
            return false, nil
        }
        return false, err
    }

But the error from obj.Attrs() is b2_download_file_by_name: 404: bucket kjkpub does not have file: and doesn't match b2.IsNotExist().

I can think of a few (unsatisfactory) work-arounds, given the current API:

Possible solutions in the library:

Export Bucket.getObject as a public method so that I don't have to use Object.Attrs()

Have Object.Attrs() return an error with notFoundErr = true for the case of "object doesn't exist"

Also, an example for that in the readme would be good.

Sometimes we'll have a file that comes from disk or is already entirely in memory, and in those cases we shouldn't be copying anything into memory or writing to scratch space.

Something like a w.ReadFrom(r io.Reader) (int64, error) that's optimized if r is also an io.ReadSeeker and can reread sections.
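As a rough illustration of the seekable fast path, the sketch below hashes a seekable input in a first pass and then rewinds it, and only falls back to an in-memory copy when the input cannot seek. `hashForUpload` and `replay` are hypothetical names invented for this example; they are not part of blazer, and the real writer would have more to do (chunking, retries).

```go
package main

import (
	"bytes"
	"crypto/sha1"
	"encoding/hex"
	"fmt"
	"io"
	"strings"
)

// hashForUpload returns the hex SHA1 of everything left in r, together with a
// reader that replays those same bytes. Hypothetical helper, not blazer API.
func hashForUpload(r io.Reader) (string, io.Reader, error) {
	h := sha1.New()
	if rs, ok := r.(io.ReadSeeker); ok {
		// Seekable input: remember the position, hash, then rewind.
		// Nothing is copied into memory or scratch space.
		start, err := rs.Seek(0, io.SeekCurrent)
		if err != nil {
			return "", nil, err
		}
		if _, err := io.Copy(h, rs); err != nil {
			return "", nil, err
		}
		if _, err := rs.Seek(start, io.SeekStart); err != nil {
			return "", nil, err
		}
		return hex.EncodeToString(h.Sum(nil)), rs, nil
	}
	// Non-seekable input: keep a copy in memory while hashing.
	var buf bytes.Buffer
	if _, err := io.Copy(io.MultiWriter(h, &buf), r); err != nil {
		return "", nil, err
	}
	return hex.EncodeToString(h.Sum(nil)), &buf, nil
}

// replay runs data through either path and returns the hash and the bytes
// that the returned reader yields afterwards.
func replay(data string, seekable bool) (string, string, error) {
	var r io.Reader = strings.NewReader(data)
	if !seekable {
		r = io.MultiReader(r) // wrapper without a Seek method
	}
	sum, body, err := hashForUpload(r)
	if err != nil {
		return "", "", err
	}
	out, err := io.ReadAll(body)
	return sum, string(out), err
}

func main() {
	sum, body, err := replay("hello", true)
	fmt.Println(sum, body, err)
}
```

The trade-off is the one mentioned elsewhere on this page: the seekable path reads the source twice, which may matter on slow or remote filesystems.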

In #41 a user noticed that there was no data going, but didn't have good visibility into what was happening.

Should b2 expose debug info? If so, how?

As noted in restic/restic/issues/512, requiring the object size in order to read can drastically increase the number of list_files calls when there are many small reads.

We currently check object size in order to handle EOFs when multiplexing downloads. It may instead be possible just to assume that short reads indicate EOF.

If an upload is cancelled by cancelling the context, it's possible that it could internally be blocked trying to read from the w.ready channel. If w.Close() is then called for some reason, this will result in a send on a closed channel, and it will panic.

Trying to create an empty file:

    obj := bucket.Object("foo")
    wc := obj.NewWriter(context.Background())
    fmt.Println(wc.Write([]byte{}))
    fmt.Println(wc.Close())

produces the error: b2_upload_file: 400: Missing header: Content-Length

Hello, I am trying to generate an authorization token that I can reuse to grant temporary access to image files that I am storing in a B2 bucket.

Here is my code:

    type B2 struct {
        ID string // unique identifier for B2 cloud storage
        Key string // application key that controls access to B2 storage
        BucketName string // name of the container that holds files
        ObjectKey string // absolute path to specific file/directory inside given bucket
        AuthToken string // authentication token which is used in order to grant access to bucket private files
        Client *b2.Client // b2 client object
        Context context.Context // environment context
        Bucket *b2.Bucket // b2 bucket object
    }

    //
    // Creates and initializes new B2 struct.
    //
    // Arguments:
    // - id string: unique identifier for B2 cloud storage
    // - key string: application key that controls access to B2 storage
    // - bucket string: name of a bucket on b2 cloud storage
    // - objectKey string: path to specific file or directory that is part of B2 bucket
    //
    // Returns:
    // - Reference to new B2 instance
    //
    func NewB2Driver(id string, key string, bucketName string, objectKey string) (*B2, error) {
        var b2Driver B2
        b2Driver.Context = context.Background()
        client, err := newB2Client(&b2Driver.Context, id, key)
        if err != nil {
            glog.Errorf("Failed to create new B2 client object, reason - %v", err)
            return nil, err
        }
        b2Driver.Client = client
        bucket, err := newB2Bucket(&b2Driver.Context, client, bucketName, objectKey)
        if err != nil {
            glog.Errorf("Failed to create new B2 bucket object, reason - %v", err)
            return nil, err
        }
        b2Driver.Bucket = bucket
        b2Driver.ID = id
        b2Driver.BucketName = bucketName
        b2Driver.Key = key
        b2Driver.ObjectKey = objectKey
        return &b2Driver, nil
    }

    func (b2Obj *B2) GenerateTmpAuthURL(prefix string, expireAfter time.Duration) (string, error) {
        if b2Obj.AuthToken == "" {
            authToken, err := b2Obj.Bucket.AuthToken(b2Obj.Context, prefix, expireAfter)
            if err != nil {
                glog.Errorf("Failed to generate temporary authorization token for B2 cloud storage, reason - %v", err)
                return "", err
            }
            glog.V(2).Infof("[INFO]: Successfully generated tmp authorization token for B2 cloud storage. Token - %s", authToken)
            b2Obj.AuthToken = authToken
        }
        objUrl := b2Obj.Bucket.Object(b2Obj.ObjectKey).URL()
        objUrl = objUrl + "?Authorization=" + b2Obj.AuthToken
        return objUrl, nil
    }

And here is what the resulting URL looks like once the functions above are executed:

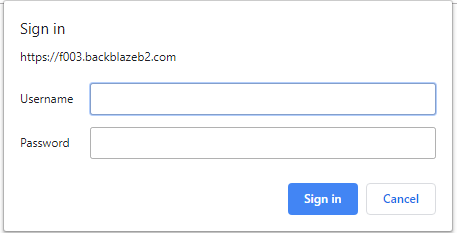

https://f003.backblazeb2.com/file/cart-test-thumbnail-lambda-thumbnail-bucket/thumbnail_20181231235907_0_181094_0.jpeg?Authorization=3_20190917124617_ed5bc772862c3ab6ada9a331_0ed4df528f58a6e91bdee9dbb96a7425501100b6_003_20190917125117_0084_dnld

When I copy and paste the URL into the browser I get the following:

Is this supposed to happen? Shouldn't I have access to the file without having to SIGN IN? Also, whenever I enter my information I get right back to the same pop-up window and nothing happens. It's like I cannot sign in.

A bunch of Backblaze pods had their SSL certs expire, and b2 kept retrying until the user got annoyed and quit.

Should this have been a permanent error?

seriously what is even

A user of a Blazer-powered program might be presented with a list of unfinished large file uploads in B2 and, after some time (think of a later request through the browser), might decide to pick one and cancel it permanently. The problem is, if I understood correctly, that blazer exposes an object but does not expose B2's identifier of the upload (File.id, which is held in a private variable of the object). Therefore it is not possible to reliably identify the file the user chose to cancel once the object has been freed from memory. We can't really keep it in memory, as we have no idea whether and when the user will choose to cancel it...

The b2 public interface layer could hide internal cloud identifiers, however:

b2 is a high-level interface, specifically designed to abstract away the Backblaze API details by providing a familiar Go interface

base is a "very low-level interface on top of the B2 v1 API", which is not intended to be used directly

Exposure of this File.id identifier from the large file upload list function would require that:

base layer of Blazer to be modified (here the b2 "officially" public interface would not change at all)

base package is for, to officially allow others to use base as a, uh, base for their software

Obviously, while the first part is trivial, the second one requires careful consideration.

This issue is affecting Blazer users today: if someone wants to write a Go program that needs internal details such as File.id, they end up copying the source code of Blazer's base into their project, changing the visibility of a field, and leaving the copy there for years without any of the updates the Blazer team makes. Therefore, in my opinion, exposing a few fields and removing the "not intended to be used directly" restriction would make the world a better place.

If my understanding is correct, such changes should not break the actual b2 package, but if they did, matching changes would need to be done accordingly in both packages in the same PR, as well as in any unit tests, documentation etc, to follow the quality standard you have set in the project. I would take care of all that to the satisfaction of the maintainer of the repository, if the change is pre-approved in the discussion below.

Would it be ok for base to be allowed to be used by other developers?

Would it be ok to merge a PR which would expose a currently private field (as discussed above) in the base package (while making sure that b2 is not negatively affected)?

By default, blazer will do a pass over the data to compute the hash so that it can be sent at the start of the upload request, as described in https://www.backblaze.com/b2/docs/uploading.html

Blazer can either do this in memory, which is the default, or can copy the input into a temp file, or it can just make two passes over the input if the input supports seeking. Each of these has side effects that might not be obvious to a user (e.g., if you're using blazer to copy files out of NFS, blazer will happily read every file twice).

Blazer should append the hash at the end by default. This is not recommended by blazer, but if there's a functional difference between the two options it's not obvious to me what it is.
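A minimal sketch of the hash-at-the-end idea, using only the standard library: the body is hashed as it streams, and the 40 hex digits are emitted once the source is exhausted, so no extra pass or buffer is needed. As far as I understand the uploading docs linked above, the request would also have to announce this mode in its SHA1 header and count the extra 40 bytes in Content-Length; that part is not shown. `withSHA1AtEnd`, `hexTail` and `bodyFor` are hypothetical names, not blazer API.

```go
package main

import (
	"crypto/sha1"
	"encoding/hex"
	"fmt"
	"hash"
	"io"
	"strings"
)

// hexTail yields the hex digest of h. The digest is computed lazily on the
// first Read, so it covers every byte hashed before that point.
type hexTail struct {
	h   hash.Hash
	sum *strings.Reader
}

func (t *hexTail) Read(p []byte) (int, error) {
	if t.sum == nil {
		t.sum = strings.NewReader(hex.EncodeToString(t.h.Sum(nil)))
	}
	return t.sum.Read(p)
}

// withSHA1AtEnd streams r unchanged and then appends the hex SHA1 of r.
func withSHA1AtEnd(r io.Reader) io.Reader {
	h := sha1.New()
	// MultiReader only moves on to hexTail after the tee reports EOF,
	// at which point the hash has seen the whole body.
	return io.MultiReader(io.TeeReader(r, h), &hexTail{h: h})
}

// bodyFor is a demo helper returning the full request body for data.
func bodyFor(data string) (string, error) {
	out, err := io.ReadAll(withSHA1AtEnd(strings.NewReader(data)))
	return string(out), err
}

func main() {
	body, err := bodyFor("hello")
	fmt.Println(body, err)
}
```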

Hi! I was wondering how I am supposed to delete a sequence of .JPEG files. Do I create a Key structure for each of them and then call Delete()? What is the most efficient way?

Hello everyone, I am trying to upload some files to a B2 bucket, but I keep getting an error as if my bucket does not exist. Here is my code:

    package main

    import (
        "github.com/kurin/blazer/b2"
        "fmt"
        "context"
        "net/url"
        "os"
        "path/filepath"
    )

    type B2 struct {
        ID string // unique identifier for B2 cloud storage
        Key string // application key that controls access to B2 storage
        BucketName string // name of the container that holds files
        ObjectKey string // absolute path to specific file/directory inside given bucket
        Client *b2.Client
        Bucket *b2.Bucket
    }

    //
    // Creates and initializes new B2 struct.
    //
    // Arguments:
    // - id string: unique identifier for B2 cloud storage
    // - key string: application key that controls access to B2 storage
    // - bucket string: name of a bucket on b2 cloud storage
    // - objectKey string: path to specific file or directory that is part of B2 bucket
    //
    // Returns:
    // - Reference to new B2 instance
    //
    func NewB2Driver(id string, key string, bucketName string, objectKey string) (*B2, error) {
        var b2Driver B2
        client, err := newB2Client(id, key)
        if err != nil {
            fmt.Printf("Failed to create new B2 client object, reason - %v", err)
            return nil, err
        }
        b2Driver.Client = client
        bucket, err := newB2Bucket(client, bucketName, objectKey)
        if err != nil {
            fmt.Printf("Failed to create new B2 bucket object, reason - %v", err)
            return nil, err
        }
        b2Driver.Bucket = bucket
        b2Driver.ID = id
        b2Driver.BucketName = bucketName
        b2Driver.Key = key
        b2Driver.ObjectKey = objectKey
        return &b2Driver, nil
    }

    //
    // Creates a new Client instance based on specified storage configuration.
    //
    func newB2Client(id string, applicationKey string) (*b2.Client, error) {
        cli, err := b2.NewClient(context.Background(), id, applicationKey)
        if err != nil {
            fmt.Printf("Failed to create new B2 Client instance. Message - %v", err)
            return nil, err
        }
        return cli, nil
    }

    //
    // Parses URL in order to generate URI which is used to identify
    // a container (bucket) that is part of B2 cloud storage.
    //
    // Arguments:
    // - c *b2.Client: Client instance which we are using in order to
    // establish connection to specific B2 cloud storage.
    // Returns:
    // - Bucket instance that is used in order to manipulate with specific container
    // on B2 cloud storage.
    // - error condition if any
    //
    func newB2Bucket(c *b2.Client, bucketName string, objectKey string) (*b2.Bucket, error) {
        uri, err := url.Parse(filepath.Join("b2://", bucketName, objectKey))
        if err != nil {
            fmt.Printf("Failed to generate URI to specific file/directory on B2 cloud storage. Message -%v", err)
            return nil, err
        }
        bucket, err := c.Bucket(context.Background(), uri.Host)
        if err != nil {
            fmt.Printf("Failed to create new B2 Bucket instance. Message -%v", err)
            return nil, err
        }
        return bucket, nil
    }

    func main() {
        b2Driver, err := NewB2Driver({APPLICATION-KEY}, {KEY-ID}, "cart-test-thumbnail-lambda-segment-bucket", {OBJECT-KEY})
        if err != nil {
            fmt.Printf("Failed to create New B2 Driver, reason - %v", err)
            os.Exit(1)
        }
        fmt.Printf("%v", b2Driver)
    }

And here is a screenshot of my buckets on B2 (which are set as public):

When I authorize and list buckets via b2 command line tool I do get my buckets listed as follows:

xxxxxxxxxxxxxxxxxxxxxx allPublic cart-test-thumbnail-lambda-segment-bucket

xxxxxxxxxxxxxxxxxxxxxx allPublic cart-test-thumbnail-lambda-thumbnail-bucket

Can anyone help me pinpoint the issue here? Am I doing something wrong?
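One thing that may be worth ruling out, offered as a guess from reading the code rather than a confirmed diagnosis: joining "b2://" with path-joining helpers cleans the result, collapsing the double slash, so the parsed URL ends up with an empty Host and the bucket lookup would be made with an empty name. The sketch below uses path.Join (filepath.Join cleans the same way on Unix-like systems) and needs no B2 account; `hostViaJoin` and `hostViaURL` are hypothetical helper names.

```go
package main

import (
	"fmt"
	"net/url"
	"path"
)

// hostViaJoin mirrors the approach in the post: join the pieces as a path,
// parse the result as a URL, and take its Host.
func hostViaJoin(bucket, key string) (string, error) {
	u, err := url.Parse(path.Join("b2://", bucket, key))
	if err != nil {
		return "", err
	}
	return u.Host, nil
}

// hostViaURL builds the URL from its components instead of joining strings.
func hostViaURL(bucket, key string) (string, error) {
	raw := (&url.URL{Scheme: "b2", Host: bucket, Path: "/" + key}).String()
	u, err := url.Parse(raw)
	if err != nil {
		return "", err
	}
	return u.Host, nil
}

func main() {
	joined, _ := hostViaJoin("my-bucket", "dir/file.jpg")
	built, _ := hostViaURL("my-bucket", "dir/file.jpg")
	fmt.Printf("via Join: %q, via url.URL: %q\n", joined, built)
}
```

Since the bucket name is already available as a plain string, passing bucketName straight to c.Bucket would sidestep the parsing altogether. It may also be worth double-checking the argument order in main, where the application key is passed in the position that NewB2Driver names id.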

I'm using the library in a simple file backup to backblaze program.

The backblaze path is sha1 of the content so I'm using metadata to store the original file path, i.e. something like:

    dst := obj.NewWriter(ctx)
    info := make(map[string]string)
    info["path"] = filePath
    attrs := b2.Attrs{
        Info: info,
    }
    dst = dst.WithAttrs(&attrs)
    ...

When path has spaces in it (as it happens sometimes), I get the error:

2017/06/06 22:19:13 error writing files/0f/82/0f822a8bc2600860b6066bca0e0df11349ecb5ff.pdf: b2_upload_file: 400: Bad character in percent-encoded string: 32 (0x20)

BackblazeUploadFileWithMetadta() failed with b2_upload_file: 400: Bad character in percent-encoded string: 32 (0x20)

I assume that value (and key?) of metadata must be url-escaped?
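Until the library handles this itself, a caller-side workaround could look like the sketch below: percent-encode every metadata value before handing the map over, and decode on the way back. This is an assumption about what the server will accept, based only on the error message above. `encodeInfo` and `decodeInfoValue` are hypothetical helpers; the encoding is url.QueryEscape with "+" rewritten to "%20" so that a space never travels as a raw space or a plus sign.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// encodeInfo returns a copy of info with every value percent-encoded.
// url.QueryEscape encodes a literal '+' as %2B, so any '+' left in its output
// stands for a space and can safely be rewritten to %20.
func encodeInfo(info map[string]string) map[string]string {
	out := make(map[string]string, len(info))
	for k, v := range info {
		out[k] = strings.ReplaceAll(url.QueryEscape(v), "+", "%20")
	}
	return out
}

// decodeInfoValue reverses encodeInfo for a single value.
func decodeInfoValue(v string) (string, error) {
	return url.PathUnescape(v)
}

func main() {
	enc := encodeInfo(map[string]string{"path": "/docs/my file.pdf"})
	dec, err := decodeInfoValue(enc["path"])
	fmt.Println(enc["path"], dec, err)
}
```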

Also, the debug log message shouldn't be there (I don't have B2_LOG_LEVEL set).

According to https://www.backblaze.com/b2/docs/uploading.html, we're no longer obligated to send the SHA1 hash in the header. This could save memory. Explore this.

It would be nice to have more than just a breakdown of API calls by count, but also:

It's nice but it's propagating flags into everything.

For issues like #34, but general and possibly exported.

Hello,

I'm inquiring about the state of this library. I'm looking forward to using it as it seems like a viable alternative to go-backblaze but I see that it hasn't been maintained in 8 months other than one pull request.

Is this planned on still being actively maintained or are you looking for someone to pick up the project?

Regards,

Ely Haughie

When downloading, the reader suffers from head-of-line blocking and has to buffer chunks that may have finished but which can't be flushed yet. If the b2.Reader satisfies io.WriterTo, then (for appropriate writers anyway) it will be able to flush that data as soon as it is available.

Download URLs take two query parameters, both optional: Authorization and b2ContentDisposition (see: https://www.backblaze.com/b2/docs/b2_download_file_by_name.html)

If an authorization token is used for download, the value of b2ContentDisposition must also have been specified byte-for-byte in the request to get_download_authorization when creating the token (see: https://www.backblaze.com/b2/docs/b2_get_download_authorization.html).

blazer does not have support for specifying b2ContentDisposition to get_download_authorization, and tokens generated by AuthToken can thus not be used with the b2ContentDisposition query parameter.
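For illustration, a download URL carrying both optional query parameters could be assembled with net/url as in the sketch below, which takes care of escaping the path and the query values. `downloadURL` and its parameters are hypothetical and not part of blazer; the /file/<bucket>/<name> layout is taken from the URLs shown elsewhere on this page. The token itself would still have to be issued for the same b2ContentDisposition value, which is the missing piece described above.

```go
package main

import (
	"fmt"
	"net/url"
)

// downloadURL builds <base>/file/<bucket>/<name> and attaches the optional
// Authorization and b2ContentDisposition query parameters when non-empty.
func downloadURL(baseURL, bucket, name, token, disposition string) (string, error) {
	u, err := url.Parse(baseURL)
	if err != nil {
		return "", err
	}
	u.Path = "/file/" + bucket + "/" + name
	q := url.Values{}
	if token != "" {
		q.Set("Authorization", token)
	}
	if disposition != "" {
		q.Set("b2ContentDisposition", disposition)
	}
	u.RawQuery = q.Encode()
	return u.String(), nil
}

func main() {
	s, err := downloadURL("https://f003.backblazeb2.com", "my-bucket",
		"dir/a b.jpg", "example-token", `attachment; filename="a b.jpg"`)
	fmt.Println(s, err)
}
```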

Hi,

I am using "subfolders" in B2 and want to list files efficiently inside a "subfolder".

Currently the only option is ListCurrentObjects and filtering the result by my prefix. But the underlying B2 API does support being passed a prefix. It would be faster to have this filtration done by the B2 server instead of client code.

Would you consider adding a ListCurrentObjectsWithPrefix function?

Regards

mappu

Hi,

would it be possible to have an example on how to upload a file together with some custom attributes (X-Bz-Info-* headers)?

I'm seeing a WithAttrs function on the Writer, but it's marked as deprecated and I'm not sure I understand how to use the alternative.

Thanks!

Hi,

thanks for writing this library! I'm currently integrating it into restic, a fast and secure backup program. During development I noticed that reading from a *b2.Reader after EOF has been seen yields a panic:

    panic: close of closed channel [recovered]
    panic: close of closed channel

    goroutine 40 [running]:
    testing.tRunner.func1(0xc42014a410)
        /usr/lib/go/src/testing/testing.go:622 +0x29d
    panic(0x75a940, 0xc42021c2a0)
        /usr/lib/go/src/runtime/panic.go:489 +0x2cf
    github.com/kurin/blazer/b2.(*Reader).Read(0xc420224620, 0xc420186000, 0x2000, 0x2000, 0x2000, 0x73b560, 0xc42015d701)
        /home/fd0/restic/vendor/src/github.com/kurin/blazer/b2/reader.go:221 +0x2cd
    restic/backend/b2.wrapReader.Read(0x93e860, 0xc420224620, 0xc4201f6660, 0xc420186000, 0x2000, 0x2000, 0x0, 0xc420094160, 0xc420094188)
        /home/fd0/restic/src/restic/backend/b2/b2.go:110 +0x70
    restic/backend/b2.(*wrapReader).Read(0xc42020be00, 0xc420186000, 0x2000, 0x2000, 0x5fa, 0x0, 0x93be60)
        <autogenerated>:10 +0x7d
    io/ioutil.devNull.ReadFrom(0x0, 0x7f7b1a209308, 0xc42020be00, 0x762860, 0x601, 0x7f7b1a2092b8)
        /usr/lib/go/src/io/ioutil/ioutil.go:144 +0x85
    io/ioutil.(*devNull).ReadFrom(0x98ae20, 0x7f7b1a209308, 0xc42020be00, 0x7f7b1a2092b8, 0x98ae20, 0x2b51e04b401)
        <autogenerated>:6 +0x61
    io.copyBuffer(0x93d360, 0x98ae20, 0x7f7b1a209308, 0xc42020be00, 0x0, 0x0, 0x0, 0x787a40, 0x0, 0x7f7b1a209308)
        /usr/lib/go/src/io/io.go:384 +0x2cb
    io.Copy(0x93d360, 0x98ae20, 0x7f7b1a209308, 0xc42020be00, 0xc42020be00, 0xc42001c7d0, 0xc42015d958)
        /usr/lib/go/src/io/io.go:360 +0x68
    restic/backend.LoadAll.func1(0x93fca0, 0xc42020be00, 0xc42015da18)
        /home/fd0/restic/src/restic/backend/utils.go:17 +0x75
    restic/backend.LoadAll(0x942da0, 0xc4202917a0, 0x7bc78c, 0x4, 0x7cecec, 0x40, 0xc42026a000, 0x6, 0x600, 0x0, ...)
        /home/fd0/restic/src/restic/backend/utils.go:28 +0x1ca
    restic/backend/test.(*Suite).TestBackend(0xc420015840, 0xc42014a410)
        /home/fd0/restic/src/restic/backend/test/tests.go:439 +0x790
    reflect.callMethod(0xc420131020, 0xc42015dfa8)
        /usr/lib/go/src/reflect/value.go:640 +0x18a
    reflect.methodValueCall(0xc42014a410, 0x339d5113, 0x96e8a0, 0x0, 0x45b2a1, 0xc42014a410, 0xc420131020, 0x0, 0x0, 0x0, ...)
        /usr/lib/go/src/reflect/asm_amd64.s:29 +0x40
    testing.tRunner(0xc42014a410, 0xc420131020)
        /usr/lib/go/src/testing/testing.go:657 +0x96
    created by testing.(*T).Run
        /usr/lib/go/src/testing/testing.go:697 +0x2ca
    exit status 2

Can you have a look please?
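A common way to make this kind of teardown safe is to remember the terminal error and guard the channel close with sync.Once, so that a second Read after EOF just returns EOF again. The sketch below shows the pattern on a made-up `safeReader` type; it is not the actual b2.Reader, whose internals are not shown in this report.

```go
package main

import (
	"fmt"
	"io"
	"strings"
	"sync"
)

// safeReader wraps a source and closes done exactly once when the stream ends.
// Hypothetical type for illustration only.
type safeReader struct {
	src      io.Reader
	done     chan struct{} // closed when the stream has ended
	doneOnce sync.Once     // guards close(done)
	err      error         // sticky terminal error (io.EOF or a failure)
}

func newSafeReader(src io.Reader) *safeReader {
	return &safeReader{src: src, done: make(chan struct{})}
}

func (r *safeReader) Read(p []byte) (int, error) {
	if r.err != nil {
		// The stream already ended: report the same error, touch nothing.
		return 0, r.err
	}
	n, err := r.src.Read(p)
	if err != nil {
		r.err = err
		r.doneOnce.Do(func() { close(r.done) })
	}
	return n, err
}

// readPastEOF drains the reader and then reads once more. It returns the byte
// count of the extra read and whether that read reported io.EOF.
func readPastEOF(data string) (int, bool) {
	r := newSafeReader(strings.NewReader(data))
	if _, err := io.Copy(io.Discard, r); err != nil {
		return 0, false
	}
	n, err := r.Read(make([]byte, 8))
	return n, err == io.EOF
}

func main() {
	n, eof := readPastEOF("abc")
	fmt.Println(n, eof)
}
```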

Found in restic/restic#1351

For restic, we'll switch to dep shortly, and it would be very helpful if you could tag a release. Thanks!

https://dave.cheney.net/2016/06/24/gophers-please-tag-your-releases

When starting a large file upload, you can specify the SHA1 for the entire large file if known beforehand: https://www.backblaze.com/b2/docs/b2_start_large_file.html

This does fly in the face of the b2_finish_large_file documentation stating a large file doesn't have a SHA1 checksum, but this might be incorrect/dated now?

This is on Mac, go 1.8.3, blazer 022f731

This happened after uploading a few thousand files, so it's rare. There's no multi-threading (i.e. all uploads happen from the same thread).

crash:

    panic: runtime error: invalid memory address or nil pointer dereference
    [signal SIGSEGV: segmentation violation code=0x1 addr=0x0 pc=0x124764f]

    goroutine 1 [running]:
    github.com/kurin/blazer/b2.(*Writer).Close.func1()
        /Users/kjk/src/go/src/github.com/kurin/blazer/b2/writer.go:366 +0x6f
    sync.(*Once).Do(0xc420a60098, 0xc42428b9f8)
        /usr/local/Cellar/go/1.8.3/libexec/src/sync/once.go:44 +0xbe
    github.com/kurin/blazer/b2.(*Writer).Close(0xc420a60000, 0xc420a60000, 0x1422480)
        /Users/kjk/src/go/src/github.com/kurin/blazer/b2/writer.go:385 +0x58
    main.BackblazeUploadFileWithMetadata(0xc421020dc0, 0x38, 0xc420ed1780, 0x75, 0xc42428bc68, 0x2, 0x2, 0x0, 0x0)
        /Users/kjk/src/kjkpriv/src/b2backup/backblaze.go:76 +0x3d0
    main.backupFile2(0xc420ed1780, 0x75, 0x0, 0x1, 0x1)
        /Users/kjk/src/kjkpriv/src/b2backup/main.go:166 +0x3f7
    main.backupDir(0x7fff5fbffa04, 0xf, 0xc420077180, 0x1, 0x1, 0xc420077190, 0x4)
        /Users/kjk/src/kjkpriv/src/b2backup/main.go:130 +0x2b4
    main.main()
        /Users/kjk/src/kjkpriv/src/b2backup/main.go:315 +0x2f1

For your convenience, here are the snippets of code involved in the crash. <-- crash marks the lines that appear in the stack trace.

    func BackblazeUploadFileWithMetadata(b2path, path string, metadata []string) error {
        if len(metadata)%2 != 0 {
            return errInvalidMetadata
        }
        nMetadata := len(metadata) / 2
        if nMetadata > 10 {
            return errInvalidMetadata
        }
        src, err := os.Open(path)
        if err != nil {
            return err
        }
        defer src.Close()
        ctx := context.Background()
        bucket, err := getBucket(ctx)
        if err != nil {
            return err
        }
        obj := bucket.Object(b2path)
        dst := obj.NewWriter(ctx)
        if nMetadata > 0 {
            info := make(map[string]string)
            for i := 0; i < nMetadata; i++ {
                key, val := metadata[i*2], metadata[i*2+1]
                info[key] = val
            }
            // TODO: set attrs.ContentType ?
            attrs := b2.Attrs{
                Info: info,
            }
            dst = dst.WithAttrs(&attrs)
        }
        _, err = io.Copy(dst, src)
        err2 := dst.Close() // <--- crash
        if err == nil {
            err = err2
        }
        return err
    }

b2/writer.go:

    func (w *Writer) Close() error {
        w.done.Do(func() {
            defer w.o.b.c.removeWriter(w)
            defer w.w.Close() // TODO: log error
            if w.cidx == 0 {
                w.setErr(w.simpleWriteFile())
                return
            }
            if w.w.Len() > 0 {
                if err := w.sendChunk(); err != nil {
                    w.setErr(err)
                    return
                }
            }
            close(w.ready)
            w.wg.Wait()
            f, err := w.file.finishLargeFile(w.ctx)
            if err != nil {
                w.setErr(err)
                return
            }
            w.o.f = f
        }) // <-- crash
        return w.getErr()
    }

sync/once.go:

    func (o *Once) Do(f func()) {
        if atomic.LoadUint32(&o.done) == 1 {
            return
        }
        // Slow-path.
        o.m.Lock()
        defer o.m.Unlock()
        if o.done == 0 {
            defer atomic.StoreUint32(&o.done, 1) // <-- crash
            f()
        }
    }

So it looks like o.done was nil.

Hi, I did some fuzz-testing on blazer and found that there were some cases where the input does not come through cleanly for unescape(escape(s)):

"&\x020000\x9c",

"&\x020\x9c0",

"&\x0230j",

"&\x02\x98000",

"&\x02\x983\xc8j00",

"00\x000",

"00\x0000",

"00\x0000000000000",

"\x11\x030",

I think this can be fixed, and at the same time greatly simplify the code, by following the pattern in Backblaze's sample code: use the platform's stdlib encoding function, and just replace "%2F" with "/" for their edge-case of not encoding the slash.
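A minimal sketch of that pattern in Go, assuming url.QueryEscape is the standard-library function meant here (the linked commits may do it differently): encode with the stdlib, put the slashes back, and decode with the matching stdlib function. `escape`, `unescape` and `roundTrips` are names chosen for this example only.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

// escape percent-encodes s with the standard library and then restores "/",
// which is left unencoded in file names.
func escape(s string) string {
	return strings.ReplaceAll(url.QueryEscape(s), "%2F", "/")
}

// unescape reverses escape.
func unescape(s string) (string, error) {
	return url.QueryUnescape(s)
}

// roundTrips reports whether unescape(escape(s)) gives s back.
func roundTrips(s string) bool {
	got, err := unescape(escape(s))
	return err == nil && got == s
}

func main() {
	// The inputs reported by the fuzzer above.
	inputs := []string{
		"&\x020000\x9c", "&\x020\x9c0", "&\x0230j", "&\x02\x98000",
		"&\x02\x983\xc8j00", "00\x000", "00\x0000", "00\x0000000000000",
		"\x11\x030",
	}
	for _, in := range inputs {
		fmt.Printf("%q -> %q round-trips: %v\n", in, escape(in), roundTrips(in))
	}
}
```

A literal "%2F" in the input cannot be confused with an encoded slash here, because the "%" itself is encoded first (to "%25") before the replacement runs.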

I also found that DownloadFileByName probably needs to escape the filename when used as part of the request URI.

My fixes and some tests for this are here:

armhold@4df8235

armhold@1b09f11

Would you mind reviewing? I can submit a PR if you think that's appropriate, but would appreciate your review of my potential changes.

Thanks!

I need a cheap way to list existing items including the basic info (size etc.).

I did the obvious thing:

Bucket.ListCurrentObjects

Object.Attrs

Currently in that case Object.Attrs involves another HTTP call, which is slow and costly.

It looks like the HTTP API used by Bucket.ListCurrentObjects returns the necessary data, so it seems possible to construct Object.Attrs out of that and cache it.

It looks like I could use the lower-level base API, but I'm told not to :)

like maybe write to tempfiles idk

It feels awfully dirty to fmt.Sprintf direct embedded-authorization URLs.

Something like:

    func (*Object) GetAuthorizedURL(ctx context.Context, validity time.Duration) (*url.URL, error) {}

    func (*Object) GetAuthorizedURLWithContentDisposition(ctx context.Context, validity time.Duration, contentDisposition string) (*url.URL, error) {}

... Would feel a lot cleaner than:

    token, err := bucket.AuthToken(ctx, name, validity)
    if err != nil {
        ...
    }
    u, err := url.Parse(fmt.Sprintf("%s/file/%s/%s?Authorization=%s", bucket.BaseURL(), bucket.Name(), name, url.QueryEscape(token)))
    if err != nil {
        ...
    }
    return u, nil

By default, b2 retries forever on error, which is the behavior specified in the Backblaze integration checklist.

However, this often breaks users who expect a command either to succeed or to fail. For example, in restic/restic#1906 a user ran an interactive command which ought to have finished after a few seconds, either indicating success or printing an error to the effect of "Backblaze is unavailable."

Currently users can set context deadlines, but this only tells the user that a deadline exceeded, and there's no indication about whether they did something wrong or why the command didn't succeed.

An option to turn off error handling would cease all such retries. It could either be a ClientOption, which would disable error handling for every request from that client, or a context wrapper, which would allow users to disable error handling on a per-request basis.
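To make the context-wrapper variant concrete, here is a rough sketch using only the standard library. `WithoutRetries`, `retriesDisabled`, `do` and `attempts` are all hypothetical names, not existing blazer API, and the fixed backoff stands in for whatever retry policy the library really uses. The deadline case also keeps the last underlying error in its message, which speaks to the complaint above that a bare "deadline exceeded" says nothing about why the command failed.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"time"
)

// noRetryKey is the private context key marking "do not retry".
type noRetryKey struct{}

// WithoutRetries returns a context under which do gives up after one attempt.
func WithoutRetries(ctx context.Context) context.Context {
	return context.WithValue(ctx, noRetryKey{}, true)
}

func retriesDisabled(ctx context.Context) bool {
	v, _ := ctx.Value(noRetryKey{}).(bool)
	return v
}

// do runs op until it succeeds, the context ends, or retries are disabled.
func do(ctx context.Context, backoff time.Duration, op func() error) error {
	for {
		err := op()
		if err == nil || retriesDisabled(ctx) {
			return err
		}
		select {
		case <-ctx.Done():
			// Report the context error together with the last real failure.
			return fmt.Errorf("giving up: %w (last error: %v)", ctx.Err(), err)
		case <-time.After(backoff):
		}
	}
}

// attempts is a demo helper: op fails `failures` times and then succeeds.
// It returns how often op was called and the final error.
func attempts(failures int, noRetry bool) (int, error) {
	ctx := context.Background()
	if noRetry {
		ctx = WithoutRetries(ctx)
	}
	calls := 0
	err := do(ctx, time.Millisecond, func() error {
		calls++
		if calls <= failures {
			return errors.New("Backblaze is unavailable")
		}
		return nil
	})
	return calls, err
}

func main() {
	fmt.Println(attempts(2, false))
	fmt.Println(attempts(2, true))
}
```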

restic/restic#1383 is showing a lot of class B transactions.

Restic calls Attrs to ensure that an object doesn't exist before uploading it. This calls object.ensure, which populates the object ID and name, and then (on objects that exist) getFileInfo, to populate the Info field. This probably isn't necessary, although I'm not sure why object.ensure went from getFileInfo to downloadFileByName in the first place in 8b44fc9.

Let me preface this by saying that I am not a Go programmer, nor an expert on B2; just a humble punter trying to get zfsbackup-go working on my Solaris machine. I don't know enough to know whether this issue is in zfsbackup-go's usage of Blazer, or in Blazer itself.

ListObjects() in Writer.getLargeFile() could return EOF, which was causing zfsbackup-go to hang starting a new upload. Treating EOF identically to len(objs) < 1 (i.e. restarting with w.Resume = false) seemed to fix it, but I'm not sure if this is the correct fix.

    diff --git a/b2/writer.go b/b2/writer.go
    index 209cfce..bedf626 100644
    --- a/b2/writer.go
    +++ b/b2/writer.go
    @@ -311,7 +311,12 @@ func (w *Writer) getLargeFile() (beLargeFileInterface, error) {
         cur := &Cursor{name: w.name}
         objs, _, err := w.o.b.ListObjects(w.ctx, 1, cur)
         if err != nil {
    -        return nil, err
    +        if err == io.EOF {
    +            w.Resume = false
    +            return w.getLargeFile()
    +        } else {
    +            return nil, err
    +        }
         }
         if len(objs) < 1 || objs[0].name != w.name {
             w.Resume = false

All of the examples in the Readme require a pointer to a b2.Bucket instance. Please update the Readme to provide documentation on how to create the b2.Bucket for a given bucket name.

While using Restic backup with Blazer I'm running into high memory usage. See this issue for context. After some playing around with the blazer/b2/writer.go file here are some results.

Using Ubuntu 16.04 with 1GB ram and a 2GB swap file enabled. Attempting to send 625GBs to B2 storage using Restic.

So it seems that increasing the ChunkSize is helping. Even so, memory usage is still pretty high. Any other suggestions or ideas?

I am trying to get all old versions.

.List() with b2.ListHidden() lists both current and old versions.

Now I thought I could just subtract a list of current files from a list of file versions, but the ID doesn't seem to be exposed. When I get them from iterator.Object(), the old versions look identical to the current versions, so I have no way of detecting which is the most current one (apart from sorting by LastModified, which is tricky because it has limited resolution).

I'm trying to implement file uploads using this library, but can't quite wrap my head around the correct flow from credentials/configuration to uploading the file itself. If someone could add an example usage in the docs a la https://github.com/mitchellh/goamz that would be super helpful.

From what I can tell from reading the docs and looking at the issues, to get all versions of a file I need to use the ListHidden option. However, if I want to get a particular file at a specific version (using the direct fileID, I believe), I don't see a way to do that. Can this be added, or can I be corrected if I missed something?

The Attrs() method wants to call b2_get_file_info, but can't if it only has the object name and not the object id, because the API call doesn't accept names for what I'm sure are excellent reasons.

A few B2 methods exist that will map names to IDs. We use b2_download_file_by_name and not b2_list_file_names because in #14 we found out that the latter didn't handle missing files well.

However, it's not possible to download a 0-byte file; what we end up doing is downloading a 1-byte file and never consuming the byte, which leaves stale connections hanging around.

Thus, we want to consume the byte.
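In Go's net/http, a keep-alive connection can only be reused once the response body has been read to EOF and closed, so "consuming the byte" amounts to draining the body before closing it. A minimal sketch, with `drainAndClose` and `drainDemo` as hypothetical helper names rather than blazer code:

```go
package main

import (
	"fmt"
	"io"
	"strings"
)

// drainAndClose reads whatever is left in body, then closes it. It returns
// the number of bytes discarded and the first error encountered.
func drainAndClose(body io.ReadCloser) (int64, error) {
	n, err := io.Copy(io.Discard, body)
	if cerr := body.Close(); err == nil {
		err = cerr
	}
	return n, err
}

// drainDemo stands in for an HTTP response body holding the given payload.
func drainDemo(payload string) (int64, error) {
	return drainAndClose(io.NopCloser(strings.NewReader(payload)))
}

func main() {
	n, err := drainDemo("x") // the single byte from the 1-byte range request
	fmt.Println(n, err)
}
```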

Hi,

for restic we have a custom http.RoundTripper for multiple backends so that we can configure settings like the number of idle HTTP/TLS connections per host. Can you maybe add an option to use a custom transport for blazer? The minio-go s3 library has a method for it: https://godoc.org/github.com/minio/minio-go#Client.SetCustomTransport, the swift library by ncw has a field in the Connection struct: https://godoc.org/github.com/ncw/swift#Connection

I don't have any particular preference for either, I'd just like to use our http.RoundTripper :)

If you're interested in a PR proposing an API, please let me know, I'd work on that.

Thanks!
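One possible shape for such an API, sketched here as a functional option on a stand-in client type. Everything in this block (`client`, `Option`, `WithTransport`, `newClient`, `requestsSeen`) is hypothetical and only illustrates the pattern; it is not a description of what blazer provides. The stub transport answers locally, so the example runs without network access.

```go
package main

import (
	"fmt"
	"net/http"
)

// client stands in for a library client that owns an *http.Client.
type client struct {
	hc *http.Client
}

// Option configures a client at construction time.
type Option func(*client)

// WithTransport makes the client send all requests through rt.
func WithTransport(rt http.RoundTripper) Option {
	return func(c *client) { c.hc.Transport = rt }
}

func newClient(opts ...Option) *client {
	c := &client{hc: &http.Client{}}
	for _, o := range opts {
		o(c)
	}
	return c
}

// countingTransport is a stub RoundTripper that answers 200 without touching
// the network and counts how many requests it has seen.
type countingTransport struct {
	calls int
}

func (t *countingTransport) RoundTrip(req *http.Request) (*http.Response, error) {
	t.calls++
	return &http.Response{
		StatusCode: http.StatusOK,
		Header:     make(http.Header),
		Body:       http.NoBody,
		Request:    req,
	}, nil
}

// requestsSeen issues one request through a client built with the stub
// transport and reports how many requests the stub observed.
func requestsSeen() (int, error) {
	ct := &countingTransport{}
	c := newClient(WithTransport(ct))
	resp, err := c.hc.Get("http://example.invalid/")
	if err != nil {
		return ct.calls, err
	}
	resp.Body.Close()
	return ct.calls, nil
}

func main() {
	fmt.Println(requestsSeen())
}
```

An option keeps the zero-configuration path unchanged for existing callers, whereas an exported struct field (the swift approach mentioned above) is simpler but can be changed after construction.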
