Hello!

Bug details

This bug report encompasses two related bugs. I'll group them together in this report, as they might both be solvable with the same fix.

- The polarity of a span takes the tokens before the span into consideration, which badly distorts the resulting sentiment.

- Commas and end-of-sentence markers are disregarded, so tokens from a previous sentence (or segment) can modify the polarity of tokens in the next one.

How to reproduce the behaviour for bug 1

Sample code

import asent

import spacy

from pprint import pprint

# load spacy pipeline

nlp = spacy.load("en_core_web_lg")

# add the rule-based sentiment model

nlp.add_pipe("asent_en_v1")

doc = nlp("I am not very happy.")

print(doc[3:])

print(doc[3:]._.polarity)

pprint(doc[3:]._.polarity.polarities)

Bugged results

very happy.

neg=0.616 neu=0.384 pos=0.0 compound=-0.4964 span=very happy.

[TokenPolarityOutput(polarity=0.0, token=very, span=very),

TokenPolarityOutput(polarity=-2.215, token=happy, span=not very happy),

TokenPolarityOutput(polarity=0.0, token=., span=.)]

Although the span is reported as very happy., the sentiment is strongly negative, because the tokens before the start of the span are considered as well. Note the TokenPolarityOutput(polarity=-2.215, token=happy, span=not very happy). I discovered this bug while working on #52, as the polarities of the not very happy, very happy and happy spans were all reported to be the same.
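To make the expected behaviour concrete, here is a minimal sketch in plain Python, operating on a list of token strings rather than on spaCy objects. The helper name lookback_window and the window size of 3 are assumptions made for illustration only; this is not asent's actual implementation. The idea is simply that the lookback window is clamped so it never reaches before the start of the span.

```python
def lookback_window(tokens, index, span_start=0, lookback=3):
    """Return the tokens before `index` that are allowed to modify it.

    Hypothetical helper: the window is clamped to `span_start`, so a
    negation outside the span cannot influence a token inside it.
    The default lookback of 3 is an assumption, not asent's setting.
    """
    start = max(span_start, index - lookback)
    return tokens[start:index]


tokens = ["I", "am", "not", "very", "happy", "."]

# Whole document: "not" is within reach of "happy".
print(lookback_window(tokens, 4))

# Span doc[3:] ("very happy."): the window stops at the span start,
# so "not" is no longer part of the context of "happy".
print(lookback_window(tokens, 4, span_start=3))
```

With a clamp like this, the context of happy in doc[3:] would be very happy rather than not very happy.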

You could argue that this is not that big of a deal, as most people are interested in per-sentence sentiment. However, this can also cause issues between sentences, as can be seen below:

Sample code

import asent

import spacy

from pprint import pprint

# load spacy pipeline

nlp = spacy.load("en_core_web_lg")

# add the rule-based sentiment model

nlp.add_pipe("asent_en_v1")

doc = nlp("Would you do that? I would not. Very stupid is what that is.")

for sent in doc.sents:

    print(f"{sent.text:<30} - {sent._.polarity}")

pprint(list(doc.sents)[-1]._.polarity.polarities)

Bugged results

Would you do that? - neg=0.0 neu=0.0 pos=0.0 compound=0.0 span=Would you do that?

I would not. - neg=0.0 neu=0.0 pos=0.0 compound=0.0 span=I would not.

Very stupid is what that is. - neg=0.0 neu=0.667 pos=0.333 compound=0.4575 span=Very stupid is what that is.

[TokenPolarityOutput(polarity=0.0, token=Very, span=Very),

TokenPolarityOutput(polarity=1.993, token=stupid, span=not. Very stupid),

TokenPolarityOutput(polarity=0.0, token=is, span=is),

TokenPolarityOutput(polarity=0.0, token=what, span=what),

TokenPolarityOutput(polarity=0.0, token=that, span=that),

TokenPolarityOutput(polarity=0.0, token=is, span=is),

TokenPolarityOutput(polarity=0.0, token=., span=.)]

Note the TokenPolarityOutput(polarity=1.993, token=stupid, span=not. Very stupid).

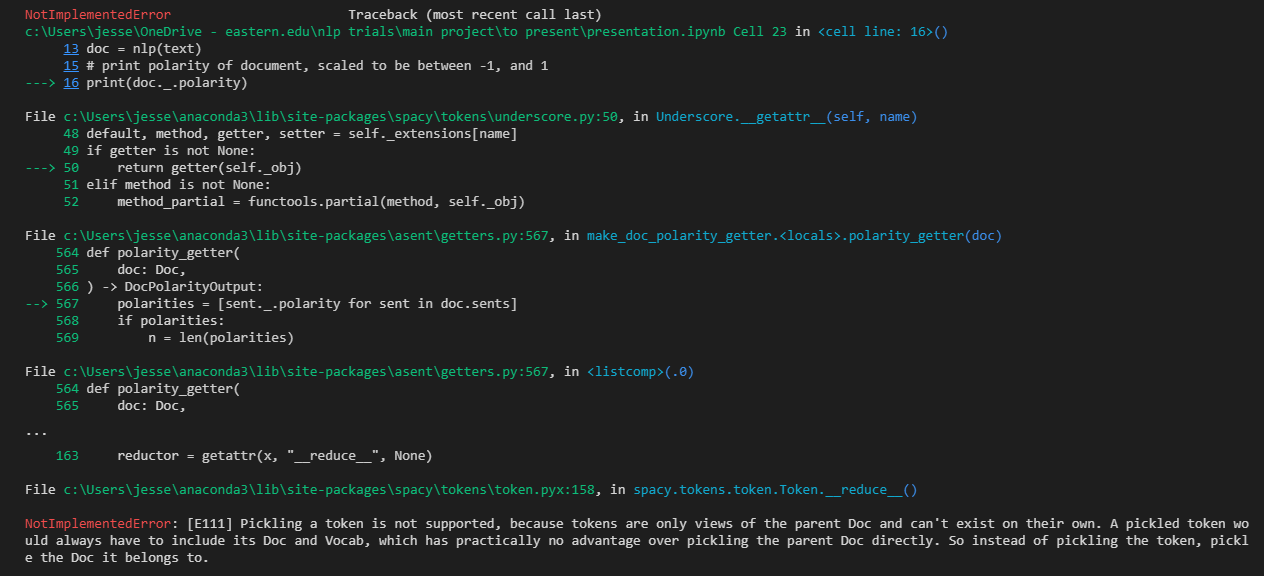

How to reproduce the behaviour for bug 2

See the second sample from this issue, as well as the commented-out example from #57. In these examples, tokens from a prior sentence (or segment) are used to modify the polarity of tokens in the new sentence (or segment). For example, in the second example from #57, the total polarity of the entire document is DocPolarityOutput(neg=0.198, neu=0.662, pos=0.14, compound=-0.2411). The compound score is higher than expected, because the unhappy part becomes positive. Rewriting the input to "I am not great, I would describe myself as unhappy." causes the polarity to become DocPolarityOutput(neg=0.379, neu=0.621, pos=0.0, compound=-0.7264), with the following visualization:

This is the behaviour I would expect, even before rewriting the input.
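As a rough illustration of that expectation, the sketch below walks backwards from a token and stops at the first comma or end-of-sentence marker. It is plain Python over token strings; the names BOUNDARIES and bounded_lookback, the set of boundary characters and the window size of 3 are all assumptions for the example, not part of asent.

```python
# Hypothetical set of tokens that should end the lookback window.
BOUNDARIES = {",", ".", "!", "?", ";"}


def bounded_lookback(tokens, index, lookback=3):
    """Collect up to `lookback` preceding tokens, stopping at a boundary.

    Illustrative only: walks backwards from `index` and breaks as soon
    as a comma or end-of-sentence marker is reached.
    """
    window = []
    for i in range(index - 1, max(index - lookback, 0) - 1, -1):
        if tokens[i] in BOUNDARIES:
            break
        window.append(tokens[i])
    window.reverse()
    return window


second_sample = ["I", "would", "not", ".", "Very", "stupid", "is"]

# "not" belongs to the previous sentence, so it is excluded.
print(bounded_lookback(second_sample, 5))

first_sample = ["I", "am", "not", "very", "happy", "."]

# Within a single sentence, the negation still reaches "happy".
print(bounded_lookback(first_sample, 4))
```

Under these assumptions, stupid in the second sample would only see Very as context, while happy in the first sample would still be negated by not.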

My Environment

- asent version: 0.4.3

- spaCy version: 3.4.1

- Platform: Windows-10-10.0.19043-SP0

- Python version: 3.10.1

- Pipelines: en_core_web_lg (3.4.0)