A k6 extension for interacting with Kubernetes clusters while testing.

To build a custom k6 binary with this extension, first ensure you have the prerequisites:

- Go toolchain
- Git

1. Download xk6:

   ```bash
   go install go.k6.io/xk6/cmd/xk6@latest
   ```

2. Build the k6 binary:

   ```bash
   xk6 build --with github.com/grafana/xk6-kubernetes
   ```

   The `xk6 build` command creates a k6 binary that includes the xk6-kubernetes extension in your local folder. This k6 binary can now run a k6 test using the xk6-kubernetes APIs.

To make development a little smoother, use the Makefile in the root folder. The default target will format your code, run tests, and create a k6 binary with your local code rather than from GitHub.

```bash
git clone git@github.com:grafana/xk6-kubernetes.git
cd xk6-kubernetes
make
```

Using the k6 binary with xk6-kubernetes, run the k6 test as usual:

```bash
./k6 run k8s-test-script.js
```

The API assumes a `kubeconfig` configuration is available at any of the following default locations:

- at the location pointed to by the `KUBECONFIG` environment variable
- at `$HOME/.kube`

This API offers methods for creating, updating, retrieving, listing, and deleting resources of any of the supported kinds.

| Method | Parameters | Description |
|---|---|---|
| apply | manifest string | creates a Kubernetes resource given a YAML manifest, or updates it if it already exists |
| create | spec object | creates a Kubernetes resource given its specification |
| delete | kind, name, namespace | removes the named resource |
| get | kind, name, namespace | returns the named resource |
| list | kind, namespace | returns a collection of resources of a given kind |
| update | spec object | updates an existing resource |

The kinds of resources currently supported are:

- ConfigMap

- Deployment

- Ingress

- Job

- Namespace

- Node

- PersistentVolume

- PersistentVolumeClaim

- Pod

- Secret

- Service

- StatefulSet
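The example tests below cover `create`, `apply`, and `list`. The following minimal sketch exercises the remaining methods from the table, `get`, `update`, and `delete`, on a Pod. It rests on a few assumptions: `addPodLabel` and `deletePod` are hypothetical helper names, the client is passed in as a parameter (in a k6 script that would be `new Kubernetes()` from `k6/x/kubernetes`), and `get` is assumed to return the resource as a plain object with a `metadata` field, as the `list` results in the examples do.

```javascript
// Hypothetical helpers exercising get/update/delete from the API table.
// `kubernetes` is any object exposing those methods; in a k6 script,
// pass `new Kubernetes()` imported from 'k6/x/kubernetes'.

// Adds (or overwrites) one label on an existing Pod.
function addPodLabel(kubernetes, name, namespace, key, value) {
  // get(kind, name, namespace) returns the named resource
  // (assumed to be a plain object with a `metadata` field)
  const pod = kubernetes.get("Pod", name, namespace);

  // merge the new label into any labels the Pod already has
  pod.metadata.labels = Object.assign({}, pod.metadata.labels, { [key]: value });

  // update(spec object) writes the modified resource back
  kubernetes.update(pod);
}

// Removes the named Pod, e.g. at the end of a test.
function deletePod(kubernetes, name, namespace) {
  // delete(kind, name, namespace) removes the named resource
  kubernetes.delete("Pod", name, namespace);
}
```

Labels are mutable metadata, so changing them on a running Pod is a safe way to try out `update`; a call such as `addPodLabel(new Kubernetes(), "busybox", "testns", "phase", "load")` would target the Pod created by the first example below.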

import { Kubernetes } from 'k6/x/kubernetes';

const podSpec = {

apiVersion: "v1",

kind: "Pod",

metadata: {

name: "busybox",

namespace: "testns"

},

spec: {

containers: [

{

name: "busybox",

image: "busybox",

command: ["sh", "-c", "sleep 30"]

}

]

}

}

export default function () {

const kubernetes = new Kubernetes();

kubernetes.create(pod)

const pods = kubernetes.list("Pod", "testns");

console.log(`${pods.length} Pods found:`);

pods.map(function(pod) {

console.log(` ${pod.metadata.name}`)

});

}import { Kubernetes } from 'k6/x/kubernetes';

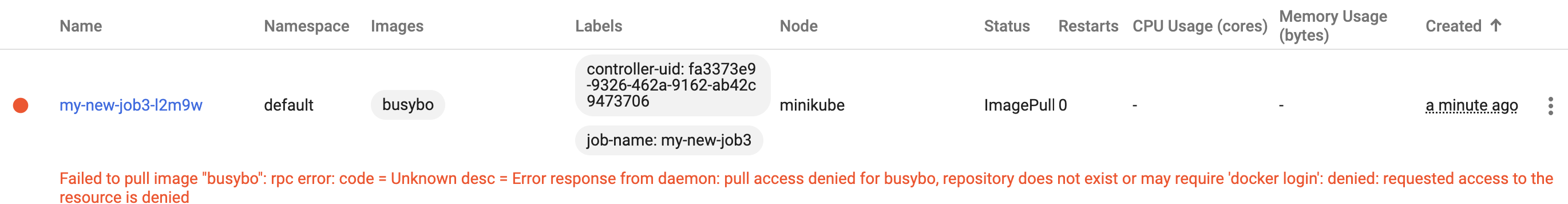

const manifest = `

apiVersion: batch/v1

kind: Job

metadata:

name: busybox

namespace: testns

spec:

template:

spec:

containers:

- name: busybox

image: busybox

command: ["sleep", "300"]

restartPolicy: Never

`

export default function () {

const kubernetes = new Kubernetes();

kubernetes.apply(manifest)

const jobs = kubernetes.list("Job", "testns");

console.log(`${jobs.length} Jobs found:`);

pods.map(function(job) {

console.log(` ${job.metadata.name}`)

});

}The xk6-kubernetes extension offers helpers to facilitate common tasks when setting up a tests. All helper functions work in a namespace to facilitate the development of tests segregated by namespace. The helpers are accessed using the following method:

| Method | Parameters | Description |
|---|---|---|
| helpers | namespace | returns helpers that operate in the given namespace. If none is specified, "default" is used |

The method above returns an object that implements the following helper functions:

| Method | Parameters | Description |
|---|---|---|
| getExternalIP | service name, timeout in seconds | returns the external IP of a service if any is assigned before the timeout expires |
| waitPodRunning | pod name, timeout in seconds | waits until the pod is in 'Running' state or the timeout expires. Returns a boolean indicating whether the pod was ready. Throws an error if the pod is Failed. |
| waitServiceReady | service name, timeout in seconds | waits until the given service has at least one endpoint ready or the timeout expires |
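According to the table, `waitPodRunning` has three outcomes: it returns `true` when the pod reaches `Running`, `false` when the timeout expires first, and throws if the pod is `Failed`. A minimal sketch that maps those outcomes to a status string is shown here; `podStatus` is a hypothetical name, and the sketch assumes the thrown error surfaces in the script as an ordinary JavaScript exception that `try`/`catch` can handle.

```javascript
// Hypothetical wrapper around helpers.waitPodRunning(podName, timeout).
// `helpers` is the object returned by kubernetes.helpers(namespace).
function podStatus(helpers, podName, timeoutSeconds) {
  try {
    // true: the pod is Running; false: the timeout expired first
    return helpers.waitPodRunning(podName, timeoutSeconds) ? "running" : "timeout";
  } catch (err) {
    // waitPodRunning throws if the pod is Failed
    // (assumed to arrive here as an ordinary exception)
    console.log(`pod ${podName} failed: ${err}`);
    return "failed";
  }
}
```

A test could then, for instance, call k6's `fail()` (from the `k6` module) when the status is `"failed"`, while treating `"timeout"` as a softer condition.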

The following test creates a Pod in the `default` namespace and waits until it is running:

```javascript
import { Kubernetes } from 'k6/x/kubernetes';

const podSpec = {
  apiVersion: "v1",
  kind: "Pod",
  metadata: {
    name: "busybox",
    namespace: "default"
  },
  spec: {
    containers: [
      {
        name: "busybox",
        image: "busybox",
        command: ["sh", "-c", "sleep 30"]
      }
    ]
  }
}

export default function () {
  const kubernetes = new Kubernetes();

  // create pod
  kubernetes.create(podSpec)

  // get helpers for the "default" namespace (no namespace argument given)
  const helpers = kubernetes.helpers()

  // wait for pod to be running
  const timeout = 10
  if (!helpers.waitPodRunning(podSpec.metadata.name, timeout)) {
    console.log(`pod ${podSpec.metadata.name} not ready after ${timeout} seconds`)
  }
}
```
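The other two helpers can be combined in a similar way to find the address of a Service before sending traffic to it. This sketch is illustrative only: `serviceEndpoint` and its `port` parameter are hypothetical, it assumes `waitServiceReady` returns a boolean (the table does not say what it returns), and it assumes `getExternalIP` returns an empty value when no external IP is assigned before the timeout.

```javascript
// Hypothetical helper: waits for a Service and builds an HTTP URL for it.
// `helpers` is the object returned by kubernetes.helpers(namespace).
function serviceEndpoint(helpers, serviceName, port, timeoutSeconds) {
  // assumed to return a boolean, like waitPodRunning
  if (!helpers.waitServiceReady(serviceName, timeoutSeconds)) {
    throw new Error(`service ${serviceName} has no ready endpoint after ${timeoutSeconds} seconds`);
  }

  // assumed to return an empty value if no IP is assigned in time
  const ip = helpers.getExternalIP(serviceName, timeoutSeconds);
  if (!ip) {
    throw new Error(`service ${serviceName} has no external IP after ${timeoutSeconds} seconds`);
  }

  return `http://${ip}:${port}`;
}
```

In a k6 test, the returned URL could be passed to `http.get` from `k6/http`; resolving it in `setup()` rather than in the default function would avoid waiting on the Service in every iteration.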