The Quick Draw Dataset is a collection of 50 million drawings across 345 categories, contributed by players of the game Quick, Draw!. The drawings were captured as timestamped vectors, tagged with metadata including what the player was asked to draw and in which country the player was located. You can browse the recognized drawings on quickdraw.withgoogle.com/data.

We're sharing them here for developers, researchers, and artists to explore, study, and learn from. If you create something with this dataset, please let us know by e-mail or at A.I. Experiments.

We have also released a tutorial and model for training your own drawing classifier on tensorflow.org.

Please keep in mind that while this collection of drawings was individually moderated, it may still contain inappropriate content.

- The raw moderated dataset

- Preprocessed dataset

- Get the data

- Projects using the dataset

- Changes

- License

The raw data is available as ndjson files separated by category, in the following format:

| Key | Type | Description |
|---|---|---|
| key_id | 64-bit unsigned integer | A unique identifier across all drawings. |
| word | string | Category the player was prompted to draw. |
| recognized | boolean | Whether the word was recognized by the game. |
| timestamp | datetime | When the drawing was created. |
| countrycode | string | A two letter country code (ISO 3166-1 alpha-2) of where the player was located. |
| drawing | string | A JSON array representing the vector drawing. |

Each line contains one drawing. Here's an example of a single drawing:

```
{
    "key_id":"5891796615823360",
    "word":"nose",
    "countrycode":"AE",
    "timestamp":"2017-03-01 20:41:36.70725 UTC",
    "recognized":true,
    "drawing":[[[129,128,129,129,130,130,131,132,132,133,133,133,133,...]]]
}
```

The format of the drawing array is as follows:

```
[
  [  // First stroke
    [x0, x1, x2, x3, ...],
    [y0, y1, y2, y3, ...],
    [t0, t1, t2, t3, ...]
  ],
  [  // Second stroke
    [x0, x1, x2, x3, ...],
    [y0, y1, y2, y3, ...],
    [t0, t1, t2, t3, ...]
  ],
  ... // Additional strokes
]
```

Here `x` and `y` are the pixel coordinates, and `t` is the time in milliseconds since the first point. `x` and `y` are real-valued, while `t` is an integer. The raw drawings can have vastly different bounding boxes and numbers of points due to the different devices used for display and input.
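The following minimal sketch (Python standard library only; `summarize_drawing` is a hypothetical helper, not part of the repository) parses one raw line and derives the number of strokes and points, the bounding box, and the total drawing time from the `drawing` array described above.

```python
import json


def summarize_drawing(line):
    """Parse one raw ndjson line and report basic per-drawing statistics."""
    record = json.loads(line)
    strokes = record["drawing"]  # list of [xs, ys, ts] triples, one per stroke
    xs = [x for stroke in strokes for x in stroke[0]]
    ys = [y for stroke in strokes for y in stroke[1]]
    # t is measured from the first point, so the largest t is the total time.
    duration_ms = max(t for stroke in strokes for t in stroke[2])
    return {
        "word": record["word"],
        "num_strokes": len(strokes),
        "num_points": len(xs),
        "bbox": (min(xs), min(ys), max(xs), max(ys)),  # (min_x, min_y, max_x, max_y)
        "duration_ms": duration_ms,
    }
```

Because the bounding box differs so much between devices, a summary like this is a quick way to check how much normalization a given subset of raw drawings needs.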

We've preprocessed and split the dataset into different files and formats to make it faster and easier to download and explore.

We've simplified the vectors, removed the timing information, and positioned and scaled the data into a 256x256 region. The data is exported in ndjson format with the same metadata as the raw format. The simplification process was:

- Align the drawing to the top-left corner, to have minimum values of 0.

- Uniformly scale the drawing, to have a maximum value of 255.

- Resample all strokes with a 1 pixel spacing.

- Simplify all strokes using the Ramer–Douglas–Peucker algorithm with an epsilon value of 2.0.
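The four steps can be approximated in plain Python as below. This is an illustrative sketch, not the pipeline that produced the published files: the helper names are hypothetical, resampling uses linear interpolation along each stroke, and rounding the final coordinates to integers is an assumption.

```python
import math


def resample(points, spacing=1.0):
    """Resample a polyline so consecutive points are `spacing` apart along it."""
    if len(points) < 2:
        return list(points)
    out = [points[0]]
    carry = 0.0  # arc length travelled since the last emitted point
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        seg = math.hypot(x1 - x0, y1 - y0)
        d = spacing - carry  # distance into this segment of the next sample
        while d <= seg:
            t = d / seg
            out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
            d += spacing
        carry = seg - (d - spacing)
    if out[-1] != points[-1]:
        out.append(points[-1])  # always keep the stroke's end point
    return out


def rdp(points, epsilon):
    """Ramer-Douglas-Peucker simplification of a polyline of (x, y) tuples."""
    if len(points) < 3:
        return list(points)
    (x1, y1), (x2, y2) = points[0], points[-1]
    dx, dy = x2 - x1, y2 - y1
    length = math.hypot(dx, dy)
    max_dist, index = -1.0, 0
    for i in range(1, len(points) - 1):
        px, py = points[i]
        if length == 0:
            dist = math.hypot(px - x1, py - y1)
        else:
            # Perpendicular distance from (px, py) to the line through the endpoints.
            dist = abs(dy * px - dx * py + x2 * y1 - y2 * x1) / length
        if dist > max_dist:
            max_dist, index = dist, i
    if max_dist > epsilon:
        left = rdp(points[: index + 1], epsilon)
        right = rdp(points[index:], epsilon)
        return left[:-1] + right  # drop the duplicated split point
    return [points[0], points[-1]]


def simplify_drawing(drawing, epsilon=2.0):
    """Apply the four listed steps to a raw drawing ([[xs, ys, ts], ...])."""
    xs = [x for stroke in drawing for x in stroke[0]]
    ys = [y for stroke in drawing for y in stroke[1]]
    min_x, min_y = min(xs), min(ys)
    # One scale factor for both axes, so the larger extent maps to 255.
    extent = max(max(xs) - min_x, max(ys) - min_y) or 1.0
    scale = 255.0 / extent
    result = []
    for stroke in drawing:
        # Timing (stroke[2]) is dropped; align to the top-left and scale.
        pts = [((x - min_x) * scale, (y - min_y) * scale)
               for x, y in zip(stroke[0], stroke[1])]
        pts = rdp(resample(pts, 1.0), epsilon)
        # Rounding to integers is an assumption of this sketch.
        result.append([[round(x) for x, _ in pts], [round(y) for _, y in pts]])
    return result
```

Resampling before RDP gives every stroke a uniform point density, so the `epsilon` of 2.0 behaves the same regardless of how densely the original device sampled the pen.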

There is an example in examples/nodejs/simplified-parser.js showing how to read ndjson files in NodeJS.

Additionally, the examples/nodejs/ndjson.md document details a set of command-line tools that can help explore subsets of these quite large files.
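For Python users, a comparable way to explore these large files without loading them whole is to stream them line by line. The generator below is a hypothetical sketch that parses each line with `json.loads` and optionally keeps only recognized drawings or a single `countrycode`.

```python
import json


def iter_drawings(lines, recognized_only=True, countrycode=None):
    """Yield parsed records from ndjson lines, optionally filtered."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines, e.g. a trailing newline
        record = json.loads(line)
        if recognized_only and not record["recognized"]:
            continue
        if countrycode is not None and record["countrycode"] != countrycode:
            continue
        yield record
```

Passing an open file object, e.g. `iter_drawings(open("cat.ndjson", encoding="utf-8"), countrycode="US")` for a hypothetical local file, reads one line at a time, so memory use stays flat regardless of file size.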

The simplified drawings and metadata are also available in a custom binary format for efficient compression and loading.

There is an example in examples/binary_file_parser.py showing how to load the binary files in Python.

There is also an example in examples/nodejs/binary-parser.js showing how to read the binary files in NodeJS.

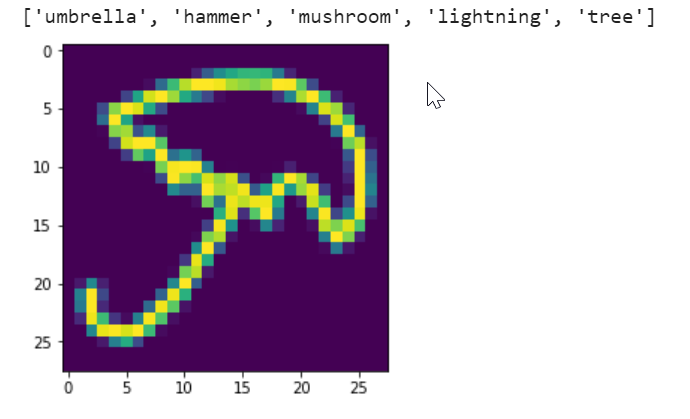

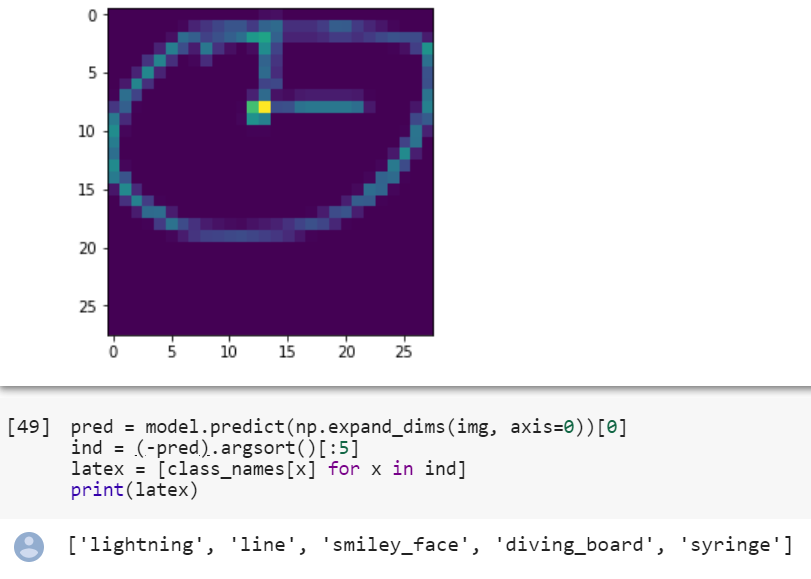

All the simplified drawings have been rendered into a 28x28 grayscale bitmap in numpy .npy format. The files can be loaded with `np.load()`. These images were generated from the simplified data, but are aligned to the center of the drawing's bounding box rather than the top-left corner. See here for the code snippet used for generation.
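A minimal loading sketch, assuming each .npy file holds one flattened row of 784 (28x28) values per drawing; the `reshape(-1, 28, 28)` call also works if the array is already stored as N x 28 x 28. The function name `load_bitmaps` is hypothetical.

```python
import numpy as np


def load_bitmaps(path):
    """Load a numpy bitmap file and view each row as a 28x28 grayscale image."""
    # mmap_mode="r" maps the file lazily instead of reading it all into memory.
    data = np.load(path, mmap_mode="r")
    return data.reshape(-1, 28, 28)
```

Memory-mapping keeps large category files cheap to open. Assuming uint8 pixel values, a slice can be converted with `images[:100].astype(np.float32) / 255.0` when a model expects floats in [0, 1].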

The dataset is available on Google Cloud Storage as ndjson files separated by category. See the list of files in Cloud Console, or read more about accessing public datasets using other methods. As an example, one way to download all simplified drawings is to run the command `gsutil -m cp 'gs://quickdraw_dataset/full/simplified/*.ndjson' .`

- Raw files (`.ndjson`)
- Simplified drawings files (`.ndjson`)
- Binary files (`.bin`)
- Numpy bitmap files (`.npy`)
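For environments without `gsutil`, public Cloud Storage objects can usually be fetched over HTTPS at `https://storage.googleapis.com/<bucket>/<object>`. The helper below is a hypothetical sketch that builds such a URL for one category's simplified file, assuming the object naming implied by the `gsutil` wildcard in the command above (`full/simplified/<category>.ndjson`). Category names containing spaces are percent-encoded.

```python
from urllib.parse import quote

BUCKET = "quickdraw_dataset"


def simplified_url(category):
    """Build the public HTTPS URL of one category's simplified ndjson file.

    Assumes the standard public-bucket mapping gs://BUCKET/OBJECT ->
    https://storage.googleapis.com/BUCKET/OBJECT and the object name
    full/simplified/<category>.ndjson taken from the gsutil command.
    """
    return "https://storage.googleapis.com/{}/full/simplified/{}.ndjson".format(
        BUCKET, quote(category))
```

The resulting URL can be passed to any HTTP client, for example `urllib.request.urlretrieve(simplified_url("cat"), "cat.ndjson")`.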

This data is also used for training the Sketch-RNN model. An open-source TensorFlow implementation of this model is available in the Magenta Project (link to GitHub repo). You can also read more about this model in this Google Research blog post. The data is stored in compressed .npz files, in a format suitable for input to a recurrent neural network.

In this dataset, 75K samples (70K training, 2.5K validation, 2.5K test) have been randomly selected from each category and processed with RDP line simplification with an epsilon parameter of 2.0. Each category is stored in its own .npz file, for example, cat.npz.

We have also provided the full data for each category, if you want to use more than 70K training examples. These are stored with the .full.npz extension.

Note: for Python 3, load the npz files using `np.load(data_filepath, encoding='latin1', allow_pickle=True)`.

Instructions for converting raw ndjson files to this npz format are available in this notebook.
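The sketch below pairs the loading call from the note above with a rough idea of the conversion: it turns a simplified drawing into the offset-based "stroke-3" representation used by Sketch-RNN, where each row is (dx, dy, pen_lift). It is a minimal sketch only: the function names are hypothetical, the split keys `train`, `valid` and `test` are an assumption about the .npz layout, keeping the first point's absolute position as its offset is an assumption, and the linked notebook remains the reference for the exact conversion.

```python
import numpy as np


def to_stroke3(drawing):
    """Convert a simplified drawing ([[xs, ys], ...]) into a stroke-3 array.

    Each row is (dx, dy, pen_lift): the offset from the previous point and a
    flag that is 1 on the last point of every stroke.
    """
    points = []
    for stroke in drawing:
        xs, ys = stroke[0], stroke[1]
        for i, (x, y) in enumerate(zip(xs, ys)):
            points.append((x, y, 1 if i == len(xs) - 1 else 0))
    arr = np.array(points, dtype=np.int16).reshape(-1, 3)
    # Replace absolute coordinates with offsets from the previous point;
    # the first row keeps its absolute position (assumption of this sketch).
    arr[1:, :2] = arr[1:, :2] - arr[:-1, :2]
    return arr


def load_sketch_rnn_npz(path):
    """Load the train/valid/test splits (key names assumed) from one .npz file."""
    # latin1 + allow_pickle=True, as in the Python 3 note above.
    with np.load(path, encoding="latin1", allow_pickle=True) as data:
        return data["train"], data["valid"], data["test"]
```

Offsets rather than absolute positions keep the per-step values small and translation-invariant, which is convenient for a recurrent model that predicts one pen movement at a time.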

Here are some projects and experiments that are using or featuring the dataset in interesting ways. Got something to add? Let us know!

Creative and artistic projects

- Letter collages by Deborah Schmidt

- Face tracking experiment by Neil Mendoza

- Faces of Humanity by Tortue

- Infinite QuickDraw by kynd.info

- Misfire.io by Matthew Collyer

- Draw This by Dan Macnish

- Scribbling Speech by Xinyue Yang

- illustrAItion by Ling Chen

- Dreaming of Electric Sheep by Dr. Ernesto Diaz-Aviles

Data analyses

- How do you draw a circle? by Quartz

- Forma Fluens by Mauro Martino, Hendrik Strobelt and Owen Cornec

- How Long Does it Take to (Quick) Draw a Dog? by Jim Vallandingham

- Finding bad flamingo drawings with recurrent neural networks by Colin Morris

- Facets Dive x Quick, Draw! by People + AI Research Initiative (PAIR), Google

- Exploring and Visualizing an Open Global Dataset by Google Research

- Machine Learning for Visualization - Talk / article by Ian Johnson

Papers

- A Neural Representation of Sketch Drawings by David Ha, Douglas Eck, ICLR 2018. code

- Sketchmate: Deep hashing for million-scale human sketch retrieval by Peng Xu et al., CVPR 2018.

- Multi-graph transformer for free-hand sketch recognition by Peng Xu, Chaitanya K Joshi, Xavier Bresson, ArXiv 2019. code

- Deep Self-Supervised Representation Learning for Free-Hand Sketch by Peng Xu et al., ArXiv 2020. code

- SketchTransfer: A Challenging New Task for Exploring Detail-Invariance and the Abstractions Learned by Deep Networks by Alex Lamb, Sherjil Ozair, Vikas Verma, David Ha, WACV 2020.

- Deep Learning for Free-Hand Sketch: A Survey by Peng Xu, ArXiv 2020.

- A Novel Sketch Recognition Model based on Convolutional Neural Networks by Abdullah Talha Kabakus, 2nd International Congress on Human-Computer Interaction, Optimization and Robotic Applications, pp. 101-106, 2020.

Guides & Tutorials

- TensorFlow tutorial for drawing classification

- Train a model in tf.keras with Colab, and run it in the browser with TensorFlow.js by Zaid Alyafeai

Code and tools

- Quick, Draw! Polymer Component & Data API by Nick Jonas

- Quick, Draw for Processing by Cody Ben Lewis

- Quick, Draw! prediction model by Keisuke Irie

- Random sample tool by Learning statistics is awesome

- SVG rendering in d3.js example by Ian Johnson (read more about the process here)

- Sketch-RNN Classification by Payal Bajaj

- quickdraw.js by Thomas Wagenaar

- ~ Doodler ~ by Krishna Sri Somepalli

- quickdraw Python API by Martin O'Hanlon

- RealTime QuickDraw by Akshay Bahadur

- DataFlow processing by Guillem Xercavins

- QuickDrawGH Rhino Plugin by James Dalessandro

- QuickDrawBattle by Andri Soone

May 25, 2017: Updated Sketch-RNN QuickDraw dataset, created .full.npz complementary sets.

This data is made available by Google, Inc. under the Creative Commons Attribution 4.0 International license.

The following table is necessary for this dataset to be indexed by search engines such as Google Dataset Search.

| property | value |
|---|---|
| name | The Quick, Draw! Dataset |
| alternateName | Quick Draw Dataset |
| alternateName | quickdraw-dataset |
| url | https://github.com/googlecreativelab/quickdraw-dataset |
| sameAs | https://github.com/googlecreativelab/quickdraw-dataset |
| description | The Quick Draw Dataset is a collection of 50 million drawings across 345 categories, contributed by players of the game "Quick, Draw!". The drawings were captured as timestamped vectors, tagged with metadata including what the player was asked to draw and in which country the player was located. |
| provider | Google, Inc. |
| license | Creative Commons Attribution 4.0 International |