Photo credit: Google AI Blog

Reinforcement Learning agents such as the one created in this project are used in many real-world applications. In particular, industrial control applications benefit greatly from the continuous control aspects like those implemented in this project. This Medium blog post describes several potential applications of this technology, including:

- Robotic Control Systems: Very similar to the Reacher environment in this project!

- Automotive Control Systems: DDPG-like algorithms can help instrument throttle commands, air-to-fuel ratios, and ignition control — improving fuel economy and reducing emissions.

- Automatic Calibration: DDPG networks are used to calibrate industrial equipment and optimize performance. They can also help detect anomalies and issue alerts to avoid disasters.

- HVAC Control Systems: Heating, ventilation, and air conditioning (HVAC) systems are everywhere, and improvements in these systems can lead to great energy savings. In fact, Google turned over control of its data center cooling systems to an AI system, reducing cooling costs by 40%. A July 2018 paper by Li et al. addresses a similar problem for the National Super Computing Center (NSCC). Their algorithm, which reduced NSCC electricity costs by 15%, uses an actor-critic model similar to the one I used in this project.

In this project, I build a reinforcement learning (RL) agent that controls a robotic arm within Unity's Reacher environment. The goal is to get 20 different robotic arms to maintain contact with the green spheres.

A reward of +0.1 is provided for each timestep that the agent's hand is in the goal location. Thus, the goal of the agent is to maintain its position at the target location for as many timesteps as possible.

In order to solve the environment, our agent must achieve a score of +30 averaged across all 20 agents for 100 consecutive episodes.

- Set-up: Double-jointed arm which can move to target locations.

- Goal: Each agent must move its hand to the goal location, and keep it there.

- Agents: The environment contains 20 agents linked to a single Brain.

- Agent Reward Function (independent):

  - +0.1 for each timestep agent's hand is in goal location.

- Brains: One Brain with the following observation/action space.

  - Vector Observation space: 33 variables corresponding to position, rotation, velocity, and angular velocities of the two arm Rigidbodies.

  - Vector Action space: (Continuous) Each action is a vector with four numbers, corresponding to torque applicable to two joints. Every entry in the action vector should be a number between -1 and 1.

  - Visual Observations: None.

- Reset Parameters: Two, corresponding to goal size, and goal movement speed.

- Benchmark Mean Reward: 30

Here are the high-level steps taken in building an agent that solves this environment.

- Evaluate the state and action space.

- Establish performance baseline using a random action policy.

- Select an appropriate algorithm and begin implementing it.

- Run experiments, make revisions, and retrain the agent until the performance threshold is reached.

The state space has 33 dimensions corresponding to the position, rotation, velocity, and angular velocities of the robotic arm. The arm has two sections. On a human body these correspond to the upper arm connecting the shoulder and elbow (i.e., the humerus) and the forearm connecting the elbow and the wrist.

Each action is a vector with four numbers, corresponding to the torque applied to the two joints (shoulder and elbow). Every element in the action vector must be a real number between -1 and 1. Because any value in that interval is allowed, the action space is continuous.

Before building an agent that learns, I started by testing an agent that selects actions at random at each time step. Each action value is drawn from a standard normal distribution and then clipped to the range [-1, 1].

```python
import numpy as np

# Assumes env, brain_name, num_agents, and action_size were set up earlier in the notebook.
env_info = env.reset(train_mode=False)[brain_name]      # reset the environment
states = env_info.vector_observations                   # get the current state (for each agent)
scores = np.zeros(num_agents)                           # initialize the score (for each agent)
while True:
    actions = np.random.randn(num_agents, action_size)  # select an action (for each agent)
    actions = np.clip(actions, -1, 1)                   # all actions between -1 and 1
    env_info = env.step(actions)[brain_name]            # send all actions to the environment
    next_states = env_info.vector_observations          # get next state (for each agent)
    rewards = env_info.rewards                          # get reward (for each agent)
    dones = env_info.local_done                         # see if episode finished
    scores += rewards                                   # update the score (for each agent)
    states = next_states                                # roll over states to next time step
    if np.any(dones):                                   # exit loop if episode finished
        break
print('Total score (averaged over agents) this episode: {}'.format(np.mean(scores)))
```

Running this agent a few times resulted in scores from 0.03 to 0.09. The agent needs an average score of 30 over 100 consecutive episodes, so choosing actions at random clearly won't work.

To get started, there are a few high-level architecture decisions we need to make. First, we need to determine which types of algorithms are most suitable for the Reacher environment. Second, we need to determine how many "brains" we want controlling the actions of our agents.

There are two key differences in the Reacher environment compared to the previous 'Navigation' project:

- Multiple agents — The version of the environment I'm tackling in this project has 20 different agents, whereas the Navigation project had only a single agent. To keep things simple, I decided to use a single brain to control all 20 agents, rather than training 20 individual brains. Training multiple brains seemed unnecessary since all of the agents are essentially performing the same task under the same conditions. Also, training 20 brains would take a really long time!

- Continuous action space — The action space is now continuous, which allows each agent to execute more complex and precise movements. Essentially, there's an unlimited range of possible action values to control the robotic arm, whereas the agent in the Navigation project was limited to four discrete actions: left, right, forward, backward.

Given the additional complexity of this environment, the value-based method we used for the last project (the Deep Q-Network, or DQN, algorithm) is not suitable. Most importantly, we need an algorithm that allows the robotic arm to utilize its full range of movement. For this, we'll need to explore a different class of algorithms called policy-based methods.

Here are some advantages of policy-based methods:

- Continuous action spaces — Policy-based methods are well-suited for continuous action spaces.

- Stochastic policies — Both value-based and policy-based methods can learn deterministic policies. However, policy-based methods can also learn true stochastic policies.

- Simplicity — Policy-based methods directly learn the optimal policy, without having to maintain a separate value function estimate. With value-based methods, the agent uses its experience with the environment to maintain an estimate of the optimal action-value function, from which an optimal policy is derived. This intermediate step requires the storage of lots of additional data since you need to account for all possible action values. Even if you discretize the action space, the number of possible actions can be quite high. For example, if we assumed only 10 degrees of freedom for both joints of our robotic arm, we'd have 1024 unique actions (2^10). Using DQN to determine the action that maximizes the action-value function within a continuous or high-dimensional space requires a complex optimization process at every timestep.
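The count grows exponentially with the number of action dimensions. Here is a quick check of that arithmetic. It assumes each action dimension is discretized independently into the same number of bins; the function name is hypothetical.

```python
def num_discrete_actions(bins_per_dim: int, action_dims: int) -> int:
    """Number of joint actions when each of `action_dims` dimensions is
    discretized into `bins_per_dim` values: bins_per_dim ** action_dims."""
    return bins_per_dim ** action_dims


# 10 binary choices, as in the example above: 2 ** 10
print(num_discrete_actions(2, 10))   # 1024
# Reacher's 4 torque values, each split into just 10 levels: 10 ** 4
print(num_discrete_actions(10, 4))   # 10000
```

Even a coarse 10-level grid over Reacher's four action values gives ten thousand discrete actions that a DQN-style agent would have to score at every step.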

The algorithm I chose to model my project on is outlined in this paper, Continuous Control with Deep Reinforcement Learning, by researchers at Google DeepMind. In this paper, the authors present "a model-free, off-policy actor-critic algorithm using deep function approximators that can learn policies in high-dimensional, continuous action spaces." They highlight that DDPG can be viewed as an extension of Deep Q-learning to continuous tasks.

I used this vanilla, single-agent DDPG as a template. I further experimented with the DDPG algorithm based on other concepts covered in Udacity's classroom and lessons. My understanding and implementation of this algorithm (including various customizations) are discussed below.

Actor-critic methods leverage the strengths of both policy-based and value-based methods.

Using a policy-based approach, the agent (actor) learns how to act by directly estimating the optimal policy and maximizing reward through gradient ascent. Meanwhile, employing a value-based approach, the agent (critic) learns how to estimate the value (i.e., the future cumulative reward) of different state-action pairs. Actor-critic methods combine these two approaches in order to accelerate the learning process. Actor-critic agents are also more stable than value-based agents, while requiring fewer training samples than policy-based agents.
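For reference, the core DDPG updates from the DeepMind paper can be summarized as follows. The notation follows the paper: μ is the actor, Q is the critic, primes denote target networks, γ is the discount factor, and N is the minibatch size. This is a summary of the paper's equations, not necessarily line-for-line what ddpg_agent.py does. For example, implementations often also multiply the bootstrapped term by (1 - done) at the end of an episode.

```latex
\begin{aligned}
y_i &= r_i + \gamma\, Q'\!\left(s_{i+1},\, \mu'(s_{i+1} \mid \theta^{\mu'}) \,\middle|\, \theta^{Q'}\right)
  && \text{(critic target)} \\
L &= \frac{1}{N} \sum_i \left(y_i - Q(s_i, a_i \mid \theta^{Q})\right)^2
  && \text{(critic loss)} \\
\nabla_{\theta^{\mu}} J &\approx \frac{1}{N} \sum_i
  \nabla_a Q(s, a \mid \theta^{Q})\big|_{s=s_i,\; a=\mu(s_i)}\;
  \nabla_{\theta^{\mu}} \mu(s \mid \theta^{\mu})\big|_{s=s_i}
  && \text{(actor update)}
\end{aligned}
```

The critic is fit like a Q-network. The actor is then nudged in the direction that increases the critic's Q-value for the action it proposes.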

You can find the actor-critic logic implemented as part of the Agent() class here in ddpg_agent.py of the source code. The actor-critic models can be found via their respective Actor() and Critic() classes here in model.py.

Note: As we did with Double Q-Learning in the last project, we're again leveraging local and target networks to improve stability. This is where one set of parameters w is used to select the best action, and another set of parameters w' is used to evaluate that action. In this project, local and target networks are implemented separately for both the actor and the critic.
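In DDPG, the target networks are not simply copied from the local networks. The paper updates them slowly with a "soft" update, θ' ← τθ + (1 - τ)θ', where τ ≪ 1 (the paper uses τ = 0.001). Below is a framework-agnostic NumPy sketch of that update, with hypothetical function and variable names. In PyTorch, the same idea is commonly written as `target_param.data.copy_(tau * local_param.data + (1.0 - tau) * target_param.data)` inside a loop over `zip(local.parameters(), target.parameters())`. Whether ddpg_agent.py uses exactly this form and τ value is an assumption.

```python
import numpy as np


def soft_update(local_params, target_params, tau):
    """Blend local weights into target weights in place:
    theta_target <- tau * theta_local + (1 - tau) * theta_target."""
    for local, target in zip(local_params, target_params):
        target *= (1.0 - tau)
        target += tau * local


# Hypothetical parameters: one weight matrix and one bias vector per network.
local = [np.ones((2, 2)), np.ones(2)]
target = [np.zeros((2, 2)), np.zeros(2)]
soft_update(local, target, tau=0.1)
print(target[1])  # [0.1 0.1]
```

With a small τ, the targets used to compute the critic's TD targets change slowly, which is what gives the stabilizing effect described above.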

```python
# Excerpt from the Agent class in ddpg_agent.py (assumes torch.optim is imported as optim)

# Actor Network (w/ Target Network)
self.actor_local = Actor(state_size, action_size, random_seed).to(device)
self.actor_target = Actor(state_size, action_size, random_seed).to(device)
self.actor_optimizer = optim.Adam(self.actor_local.parameters(), lr=LR_ACTOR)

# Critic Network (w/ Target Network)
self.critic_local = Critic(state_size, action_size, random_seed).to(device)
self.critic_target = Critic(state_size, action_size, random_seed).to(device)
self.critic_optimizer = optim.Adam(self.critic_local.parameters(), lr=LR_CRITIC, weight_decay=WEIGHT_DECAY)
```

One challenge is choosing which action to take while the agent is still learning the optimal policy. Should the agent choose an action based on the rewards observed thus far? Or, should the agent try a new action in hopes of earning a higher reward? This is known as the exploration vs. exploitation dilemma.

In the Navigation project, I addressed this by implementing an ε-greedy algorithm. This algorithm allows the agent to systematically manage the exploration vs. exploitation trade-off. The agent "explores" by picking a random action with some probability epsilon (ε). Meanwhile, the agent continues to "exploit" its knowledge of the environment by choosing the action with the highest estimated value with probability (1 - ε).

However, this approach won't work for controlling a robotic arm. The actions are no longer a discrete set of simple directions (e.g., forward, backward, left, right). Instead, the actions driving the movement of the arm are forces with different magnitudes and directions. Suppose we based exploration on independent random samples drawn at each timestep. That noise would have a mean of zero, so successive perturbations would tend to cancel each other out. This can cause the arm to oscillate without making much progress.

Instead, we'll use the Ornstein-Uhlenbeck process, as suggested in the previously mentioned paper by Google DeepMind (see bottom of page 4). The Ornstein-Uhlenbeck process adds a certain amount of noise to the action values at each timestep. This noise is correlated to previous noise, and therefore tends to stay in the same direction for longer durations without canceling itself out. This allows the arm to maintain velocity and explore the action space with more continuity.
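Here is a minimal NumPy sketch of the process. It assumes the common discretized update with a time step of 1 and Gaussian increments: x ← x + θ(μ - x) + σ·N(0, 1). The class name and seed are illustrative, and this is not necessarily how the OUNoise class in ddpg_agent.py is written. The defaults θ = 0.15 and σ = 0.2 match the final values reported in this post; μ = 0 is an assumption.

```python
import numpy as np


class OrnsteinUhlenbeckNoise:
    """Temporally correlated noise from a discretized Ornstein-Uhlenbeck process:
    x <- x + theta * (mu - x) + sigma * N(0, 1)."""

    def __init__(self, size, mu=0.0, theta=0.15, sigma=0.2, seed=0):
        self.mu = mu * np.ones(size)
        self.theta = theta
        self.sigma = sigma
        self.rng = np.random.default_rng(seed)
        self.reset()

    def reset(self):
        """Restart the process at its long-run mean (e.g., at the start of an episode)."""
        self.state = self.mu.copy()

    def sample(self):
        """Advance the process by one step and return the new noise vector."""
        dx = self.theta * (self.mu - self.state) + self.sigma * self.rng.standard_normal(self.state.shape)
        self.state = self.state + dx
        return self.state


noise = OrnsteinUhlenbeckNoise(size=4)          # one value per action dimension
samples = np.array([noise.sample() for _ in range(1000)])
# Lag-1 autocorrelation: consecutive samples are strongly correlated,
# unlike independent draws (which would give roughly 0).
lag1 = np.corrcoef(samples[:-1, 0], samples[1:, 0])[0, 1]
print(round(lag1, 2))
```

The mean-reversion term θ(μ - x) keeps the noise from drifting away indefinitely. The carried-over state is what makes consecutive perturbations point in similar directions.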

You can find the Ornstein-Uhlenbeck process implemented here in the OUNoise class in ddpg_agent.py of the source code.

In total, there are five hyperparameters related to this noise process.

The Ornstein-Uhlenbeck process itself has three hyperparameters that determine the noise characteristics and magnitude:

- mu: the long-run mean

- theta: the speed of mean reversion

- sigma: the volatility parameter

Of these, I only tuned sigma. After running a few experiments, I reduced sigma from 0.3 to 0.2. The reduced noise volatility seemed to help the model converge faster.

Notice also there's an epsilon parameter used to decay the noise level over time. This decay mechanism ensures that more noise is introduced earlier in the training process (i.e., higher exploration), and the noise decreases over time as the agent gains more experience (i.e., higher exploitation). The starting value for epsilon and its decay rate are two hyperparameters that were tuned during experimentation.

You can find the epsilon process implemented here in the Agent.act() method in ddpg_agent.py of the source code. The epsilon decay is performed here, as part of the learning step.
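Here is a sketch of how an epsilon-scaled noise term could be combined with the actor's output. It assumes that the noise is multiplied by epsilon, that the result is clipped to the valid action range, and that epsilon decays linearly by EPSILON_DECAY per learning step. The helper names and the `minimum` floor are hypothetical, not taken from ddpg_agent.py.

```python
import numpy as np


def noisy_action(policy_action, noise_sample, epsilon):
    """Add exploration noise scaled by epsilon, then clip to the valid range [-1, 1]."""
    return np.clip(policy_action + epsilon * noise_sample, -1.0, 1.0)


def decay_epsilon(epsilon, decay=1e-6, minimum=0.0):
    """Linearly decay epsilon by a fixed amount per call, never going below `minimum`."""
    return max(epsilon - decay, minimum)


epsilon = 1.0
policy_action = np.array([0.5, -0.2, 0.9, 0.0])   # hypothetical actor output
noise_sample = np.array([0.3, 0.1, 0.4, -0.1])    # hypothetical noise draw
print(noisy_action(policy_action, noise_sample, epsilon))
epsilon = decay_epsilon(epsilon)                   # called once per learning step
```

With a decay of 1e-6 per call, epsilon shrinks very slowly. Early training is therefore dominated by exploration, and the noise fades only after many learning steps.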

The final noise parameters were set as follows:

```python
OU_SIGMA = 0.2          # Ornstein-Uhlenbeck noise parameter
OU_THETA = 0.15         # Ornstein-Uhlenbeck noise parameter
EPSILON = 1.0           # explore->exploit noise process added to act step
EPSILON_DECAY = 1e-6    # decay rate for noise process
```

In the first few versions of my implementation, the agent performed the learning step at every timestep. This made training very slow, and there was no apparent benefit to the agent's performance. So, I implemented an interval in which the learning step is only performed every 20 timesteps. As part of each learning step, the algorithm samples experiences from the buffer and runs the Agent.learn() method 10 times.

```python
LEARN_EVERY = 20        # learning timestep interval
LEARN_NUM = 10          # number of learning passes
```

You can find the learning interval implemented here in the Agent.step() method in ddpg_agent.py of the source code.
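Here is a minimal sketch of the gating logic described above. It assumes that learning also waits until the buffer holds at least one full batch. `maybe_learn`, `learn_fn`, and the batch size of 128 are hypothetical stand-ins for the sampling-and-Agent.learn() call in ddpg_agent.py.

```python
def maybe_learn(timestep, buffer_size, learn_fn, batch_size=128,
                learn_every=20, learn_num=10):
    """Run `learn_num` learning passes every `learn_every` timesteps,
    but only once the replay buffer holds at least one full batch.
    Returns the number of passes performed."""
    if buffer_size < batch_size or timestep % learn_every != 0:
        return 0
    for _ in range(learn_num):
        learn_fn()          # stands in for: sample a batch, then Agent.learn()
    return learn_num


calls = []
for t in range(1, 101):     # 100 timesteps with a full buffer
    maybe_learn(t, buffer_size=10_000, learn_fn=lambda: calls.append(1))
print(len(calls))  # 50
```

Over 100 timesteps this performs 5 bursts of 10 updates, so the total number of gradient steps is half of what one update per timestep would give. The updates also arrive in bursts rather than at every step.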

In early versions of my implementation, I had trouble getting my agent to learn. Or, rather, it would start to learn but then become very unstable and either plateau or collapse.

I suspect that one of the causes was outsized gradients. Unfortunately, I couldn't find an easy way to investigate this, although I'm sure there's some way of doing this in PyTorch. Absent this investigation, I hypothesize that many of the weights from my critic model were becoming quite large after just 5-10 episodes of training. (Note that at this point, I was running the learning process at every timestep, which made the problem worse.)

The issue of exploding gradients is described in layman's terms in this post by Jason Brownlee. Essentially, during backpropagation each layer of the network can amplify the gradient it passes on. When the lower layers of the network accumulate very large gradients, their weight updates become too large for the model to learn effectively.

To combat this, I implemented gradient clipping using the torch.nn.utils.clip_grad_norm_ function. I set the function to "clip" the norm of the gradients at 1, therefore placing an upper limit on the size of the parameter updates, and preventing them from growing exponentially. Once this change was implemented, along with batch normalization (discussed in the next section), my model became much more stable and my agent started learning at a much faster rate.
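PyTorch's `torch.nn.utils.clip_grad_norm_` treats all of a model's gradients as a single vector and computes that vector's L2 norm. It rescales every gradient if the norm exceeds `max_norm` and returns the total norm measured before clipping. Logging that return value at each update is one way to investigate the gradient sizes mentioned above. Below is a NumPy sketch that mirrors this behavior on a hypothetical list of gradient arrays. It is an illustration, not PyTorch's source.

```python
import numpy as np


def clip_by_global_norm(grads, max_norm=1.0, eps=1e-6):
    """Rescale a list of gradient arrays in place so that their combined L2 norm
    is at most `max_norm`. Returns the norm measured before clipping."""
    total_norm = float(np.sqrt(sum(np.sum(g ** 2) for g in grads)))
    clip_coef = max_norm / (total_norm + eps)
    if clip_coef < 1.0:                 # only shrink, never enlarge
        for g in grads:
            g *= clip_coef
    return total_norm


grads = [np.array([3.0, 0.0]), np.array([[0.0], [4.0]])]  # global norm = 5
before = clip_by_global_norm(grads, max_norm=1.0)
after = np.sqrt(sum(np.sum(g ** 2) for g in grads))
print(before, round(after, 4))  # 5.0 1.0
```

Because every gradient is scaled by the same factor, clipping preserves the direction of the overall update and only limits its size.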

You can find gradient clipping implemented here in the "update critic" section of the Agent.learn() method, within ddpg_agent.py of the source code.

Note that this function is applied after the backward pass, but before the optimization step.

```python
# Excerpt from Agent.learn() in ddpg_agent.py; Q_targets is computed earlier from the target networks.
# Compute critic loss
Q_expected = self.critic_local(states, actions)
critic_loss = F.mse_loss(Q_expected, Q_targets)
# Minimize the loss
self.critic_optimizer.zero_grad()
critic_loss.backward()
torch.nn.utils.clip_grad_norm_(self.critic_local.parameters(), 1)  # cap the global gradient norm at 1
self.critic_optimizer.step()
```

I've used batch normalization many times in the past when building convolutional neural networks (CNNs), to normalize the activations between layers. But I didn't anticipate how important it would be for this project. This was another aspect of the Google DeepMind paper that proved tremendously useful in my implementation of this project.

Similar to the exploding gradient issue mentioned above, running computations on large input values and model parameters can inhibit learning. Batch normalization addresses this problem by normalizing each feature across the samples in a minibatch to zero mean and unit variance, then applying a learned scale and shift. This keeps the inputs to each layer within a similar range throughout the model, and across environments whose observations use different units.
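Here is a NumPy sketch of the training-mode batch normalization computation on a minibatch of shape (N, D). The function name and the synthetic activations are hypothetical. PyTorch's `nn.BatchNorm1d` additionally tracks running estimates of the mean and variance and uses them in evaluation mode. That makes it important to call `model.eval()` before acting with a single state and `model.train()` before learning.

```python
import numpy as np


def batch_norm_forward(x, gamma, beta, eps=1e-5):
    """Training-mode batch normalization over a minibatch x of shape (N, D):
    normalize each feature to zero mean and unit variance, then scale and shift."""
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    x_hat = (x - mean) / np.sqrt(var + eps)
    return gamma * x_hat + beta


rng = np.random.default_rng(0)
activations = rng.normal(loc=50.0, scale=10.0, size=(128, 3))  # large, shifted values
out = batch_norm_forward(activations, gamma=np.ones(3), beta=np.zeros(3))
print(out.mean(axis=0).round(6), out.std(axis=0).round(3))
```

With `gamma` set to ones and `beta` to zeros, each output feature has roughly zero mean and unit standard deviation, regardless of how large the raw activations were.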

Initially, I added batch normalization between every layer in both the actor and critic models. However, this may have been overkill, and seemed to prolong training time. I eventually reduced the use of batch normalization to just the outputs of the first fully-connected layers of both the actor and critic models.

You can find batch normalization implemented here for the actor, and here for the critic, within model.py of the source code.

```python
# Actor forward pass (Actor class in model.py)
def forward(self, state):
    """Build an actor (policy) network that maps states -> actions."""
    x = F.relu(self.bn1(self.fc1(state)))
    x = F.relu(self.fc2(x))
    return torch.tanh(self.fc3(x))  # torch.tanh replaces the deprecated F.tanh; output in [-1, 1]
```

```python
# Critic forward pass (Critic class in model.py)
def forward(self, state, action):
    """Build a critic (value) network that maps (state, action) pairs -> Q-values."""
    xs = F.relu(self.bn1(self.fcs1(state)))
    x = torch.cat((xs, action), dim=1)  # inject the action after the first layer
    x = F.relu(self.fc2(x))
    return self.fc3(x)
```

Experience replay allows the RL agent to learn from past experience.

As with DQN in the previous project, DDPG also utilizes a replay buffer to gather experiences from each agent. Each experience is stored in a replay buffer as the agent interacts with the environment. In this project, there is one central replay buffer utilized by all 20 agents, therefore allowing agents to learn from each other's experiences.

The replay buffer contains a collection of experience tuples with the state, action, reward, and next state (s, a, r, s'). Each agent samples from this buffer as part of the learning step. Experiences are sampled randomly, so that the data is uncorrelated. This prevents action values from oscillating or diverging catastrophically, since a naive algorithm could otherwise become biased by correlations between sequential experience tuples.

Also, experience replay improves learning through repetition. By doing multiple passes over the data, our agents have multiple opportunities to learn from a single experience tuple. This is particularly useful for state-action pairs that occur infrequently within the environment.

The implementation of the replay buffer can be found here in the ddpg_agent.py file of the source code.
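Here is a minimal sketch of a shared, fixed-size replay buffer with uniform random sampling. It assumes a `done` flag is stored alongside (s, a, r, s'), as is common in DDPG implementations, so that bootstrapping can stop at episode ends. The class and field names and the buffer and batch sizes are illustrative, not taken from ddpg_agent.py.

```python
import random
from collections import deque, namedtuple

import numpy as np

Experience = namedtuple("Experience", ["state", "action", "reward", "next_state", "done"])


class ReplayBuffer:
    """Fixed-size buffer shared by all agents; the oldest experiences are evicted first."""

    def __init__(self, buffer_size, batch_size, seed=0):
        self.memory = deque(maxlen=buffer_size)
        self.batch_size = batch_size
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self.memory.append(Experience(state, action, reward, next_state, done))

    def sample(self):
        """Draw a uniform random minibatch (without replacement) and stack each field."""
        batch = self.rng.sample(self.memory, k=self.batch_size)
        states = np.vstack([e.state for e in batch])
        actions = np.vstack([e.action for e in batch])
        rewards = np.vstack([e.reward for e in batch])
        next_states = np.vstack([e.next_state for e in batch])
        dones = np.vstack([e.done for e in batch]).astype(np.float32)
        return states, actions, rewards, next_states, dones

    def __len__(self):
        return len(self.memory)


buffer = ReplayBuffer(buffer_size=100_000, batch_size=64)
rng = np.random.default_rng(0)
for _ in range(5):            # 5 timesteps
    for _ in range(20):       # all 20 agents write into the same buffer
        buffer.add(rng.normal(size=33), rng.uniform(-1, 1, size=4), 0.1,
                   rng.normal(size=33), False)
states, actions, rewards, next_states, dones = buffer.sample()
print(len(buffer), states.shape, actions.shape, rewards.shape)  # 100 (64, 33) (64, 4) (64, 1)
```

Because the minibatch is drawn at random from every agent's recent history, consecutive timesteps from the same arm rarely end up next to each other in a batch.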

Once all of the various components of the algorithm were in place, my agent was able to solve the 20-agent Reacher environment. Again, the performance goal is an average reward of at least +30 over 100 consecutive episodes, and over all 20 agents.

The graph below shows the final results. The best performing agent was able to solve the environment starting with the 12th episode, with a top mean score of 39.3 in the 79th episode. The complete set of results and steps can be found in this notebook.

- Experiment with other algorithms — Tuning the DDPG algorithm required a lot of trial and error. Perhaps another algorithm such as Trust Region Policy Optimization (TRPO), Proximal Policy Optimization (PPO), or Distributed Distributional Deterministic Policy Gradients (D4PG) would be more robust.

- Add prioritized experience replay — Rather than selecting experience tuples uniformly at random, prioritized replay selects experiences based on a priority value that is correlated with the magnitude of their temporal-difference (TD) error. This can improve learning by increasing the probability that rare and important experience tuples are sampled.
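Here is a sketch of the proportional variant of prioritized replay. Priorities are p_i = (|δ_i| + ε)^α, sampling probabilities are P(i) = p_i / Σ_k p_k, and importance-sampling weights w_i = (N·P(i))^(-β) correct the bias that non-uniform sampling introduces. The function names, example TD errors, and the α = 0.6 and β = 0.4 defaults are assumptions chosen for illustration. A full implementation would also need to update priorities after each learning step, typically using a sum-tree for efficiency.

```python
import numpy as np


def priority_probabilities(td_errors, alpha=0.6, eps=1e-5):
    """Proportional prioritization: p_i = (|delta_i| + eps) ** alpha,
    P(i) = p_i / sum_k p_k. alpha = 0 recovers uniform sampling."""
    priorities = (np.abs(td_errors) + eps) ** alpha
    return priorities / priorities.sum()


def importance_weights(probs, beta=0.4):
    """Importance-sampling weights w_i = (N * P(i)) ** -beta, normalized by the max."""
    weights = (len(probs) * probs) ** (-beta)
    return weights / weights.max()


td_errors = np.array([0.01, 0.5, -2.0, 0.1])    # hypothetical TD errors
probs = priority_probabilities(td_errors)
weights = importance_weights(probs)
rng = np.random.default_rng(0)
batch_idx = rng.choice(len(td_errors), size=2, replace=False, p=probs)
print(probs.round(3), weights.round(3), batch_idx)
```

The experience with the largest TD error is sampled most often but receives the smallest weight, which keeps it from dominating the gradient.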

If you want to run this project yourself, the project starter code can be found below or here within Udacity's source repo for this project.

For this project, you will work with the Reacher environment.

For this project, there are two separate versions of the Unity environment:

- The first version contains a single agent.

- The second version contains 20 identical agents, each with its own copy of the environment.

The second version is useful for algorithms like PPO, A3C, and D4PG that use multiple (non-interacting, parallel) copies of the same agent to distribute the task of gathering experience.

Note that your project submission need only solve one of the two versions of the environment.

The task is episodic, and in order to solve the environment, your agent must get an average score of +30 over 100 consecutive episodes.

The barrier for solving the second version of the environment is slightly different, to take into account the presence of many agents. In particular, your agents must get an average score of +30 (over 100 consecutive episodes, and over all agents). Specifically,

- After each episode, we add up the rewards that each agent received (without discounting), to get a score for each agent. This yields 20 (potentially different) scores. We then take the average of these 20 scores.

- This yields an average score for each episode (where the average is over all 20 agents).

The environment is considered solved when the average (over 100 episodes) of those average scores is at least +30.
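The two-step averaging above can be checked with a few lines of NumPy. This sketch assumes the per-agent, undiscounted episode scores are collected into an array of shape (num_episodes, num_agents) and that the check is made on the most recent 100 episodes. The function name is hypothetical.

```python
import numpy as np


def is_solved(episode_scores, target=30.0, window=100):
    """episode_scores: array of shape (num_episodes, num_agents) with each agent's
    undiscounted return per episode. Solved when the mean, over the last `window`
    episodes, of the per-episode average across agents is at least `target`."""
    per_episode_avg = np.asarray(episode_scores).mean(axis=1)   # average over agents
    if len(per_episode_avg) < window:
        return False
    return bool(per_episode_avg[-window:].mean() >= target)


scores = np.full((100, 20), 30.0)   # 100 episodes, 20 agents, every score exactly 30
print(is_solved(scores))            # True
```

Averaging over agents first and then over episodes gives the same number as a single mean over all 100 × 20 scores. Keeping the per-episode averages is still useful for plotting learning curves.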

- Download the environment from one of the links below. You need only select the environment that matches your operating system:

  - Version 1: One (1) Agent

    - Linux: click here
    - Mac OSX: click here
    - Windows (32-bit): click here
    - Windows (64-bit): click here

  - Version 2: Twenty (20) Agents

    - Linux: click here
    - Mac OSX: click here
    - Windows (32-bit): click here
    - Windows (64-bit): click here

  (For Windows users) Check out this link if you need help with determining if your computer is running a 32-bit version or 64-bit version of the Windows operating system.

  (For AWS) If you'd like to train the agent on AWS (and have not enabled a virtual screen), then please use this link (version 1) or this link (version 2) to obtain the "headless" version of the environment. You will not be able to watch the agent without enabling a virtual screen, but you will be able to train the agent. (To watch the agent, you should follow the instructions to enable a virtual screen, and then download the environment for the Linux operating system above.)

- Place the file in the DRLND GitHub repository, in the p2_continuous-control/ folder, and unzip (or decompress) the file.

Follow the instructions in Continuous_Control.ipynb to get started with training your own agent!

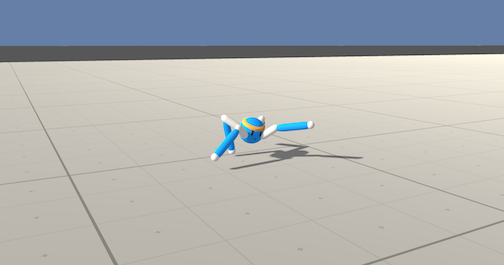

After you have successfully completed the project, you might like to solve the more difficult Crawler environment.

In this continuous control environment, the goal is to teach a creature with four legs to walk forward without falling.

You can read more about this environment in the ML-Agents GitHub here. To solve this harder task, you'll need to download a new Unity environment. (Note: Udacity students should not submit a project with this new environment.)

You need only select the environment that matches your operating system:

- Linux: click here

- Mac OSX: click here

- Windows (32-bit): click here

- Windows (64-bit): click here

Then, place the file in the p2_continuous-control/ folder in the DRLND GitHub repository, and unzip (or decompress) the file. Next, open Crawler.ipynb and follow the instructions to learn how to use the Python API to control the agent.

(For AWS) If you'd like to train the agent on AWS (and have not enabled a virtual screen), then please use this link to obtain the "headless" version of the environment. You will not be able to watch the agent without enabling a virtual screen, but you will be able to train the agent. (To watch the agent, you should follow the instructions to enable a virtual screen, and then download the environment for the Linux operating system above.)