fluxcd / flux2-kustomize-helm-example

A GitOps workflow example for multi-env deployments with Flux, Kustomize and Helm.

Home Page: https://fluxcd.io

License: Apache License 2.0

Things under the /infrastructure folder will be deployed identically to every cluster, right?

What if each cluster is supposed to have some cluster-specific parameters? The proposed folder structure doesn't allow per-cluster Kustomization for things under ./infrastructure, right?

What would be your suggestion? I think it needs to be split into two folders:

/infrustructure-common -- with the same folder structure you proposed

/infrustructure-cluster-specific -- with the following structure:

infrustructure-cluster-specific
├── base
└── overlays
    ├── production
    └── staging

Am I understanding it right?
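A minimal bash sketch of the split proposed above, assuming one overlay per environment that simply pulls in the shared base. The helper name `scaffold_overlays` and the placeholder `kustomization.yaml` contents are hypothetical, not something this repository ships:

```shell
# Hypothetical helper: create <root>/base and <root>/overlays/<env> for each
# environment, each with a minimal kustomization.yaml (existing files are kept).
scaffold_overlays() {
  local root="$1" env
  shift
  mkdir -p "${root}/base"
  # An empty base; the real cluster-specific resources would be listed here.
  [ -e "${root}/base/kustomization.yaml" ] ||
    printf 'apiVersion: kustomize.config.k8s.io/v1beta1\nkind: Kustomization\nresources: []\n' \
      > "${root}/base/kustomization.yaml"
  for env in "$@"; do
    mkdir -p "${root}/overlays/${env}"
    # Each overlay starts from the base; per-cluster patches would go next to it.
    [ -e "${root}/overlays/${env}/kustomization.yaml" ] ||
      printf 'apiVersion: kustomize.config.k8s.io/v1beta1\nkind: Kustomization\nresources:\n  - ../../base\n' \
        > "${root}/overlays/${env}/kustomization.yaml"
  done
}
```

For example, `scaffold_overlays infrustructure-cluster-specific production staging` would produce the tree from the question; each cluster's Flux Kustomization would then point its `path` at its own `overlays/<env>` directory.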

P.S. Your /apps folder currently has this structure:

apps

├── base

├── production

└── staging

While there's nothing wrong with that, the layout proposed here seems easier to keep track of, i.e., rearranging it to:

apps
├── base
└── overlays
    ├── production
    └── staging

The explicit overlays folder is a self-documenting way to say that this is a Kustomization thing. That might sound obvious when we are right in the middle of discussing Kustomization alone, but after a while, with so many technologies mixed in (app's Kubernetes manifests, Flux bootstrap folders, Flux HelmRepository, Flux HelmRelease vs. Helm Charts, Flux Kustomization vs. original Kustomization -- as debated in Issue 321), it becomes quite confusing which folder is for which technology.

I bootstrapped an existing k8s cluster with the staging config. After it committed the flux-system files, the test workflow started failing. Here is the pertinent part of the log:

INFO - Validating clusters

PASS - ./clusters/production/infrastructure.yaml contains a valid Kustomization (flux-system.infrastructure)

PASS - ./clusters/production/apps.yaml contains a valid Kustomization (flux-system.apps)

ERR - ./clusters/staging/flux-system/gotk-components.yaml: Failed initializing schema https://kubernetesjsonschema.dev/master-standalone-strict/customresourcedefinition-apiextensions-v1.json: Could not read schema from HTTP, response status is 404 Not Found

Failed initializing schema file:///tmp/flux-crd-schemas/master-standalone-strict/customresourcedefinition-apiextensions-v1.json: open /tmp/flux-crd-schemas/master-standalone-strict/customresourcedefinition-apiextensions-v1.json: no such file or directory

Error: Process completed with exit code 1.

It seems to be failing at line 45:

kubeval ${file} --strict --additional-schema-locations=file:///tmp/flux-crd-schemas

Any suggestions?
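The file that fails is the bootstrap-generated `clusters/staging/flux-system/gotk-components.yaml`, whose CustomResourceDefinition schema kubeval tries to download from kubernetesjsonschema.dev, which answers 404. One possible workaround, sketched below under the assumption that the hand-written cluster files sit directly in `clusters/<name>/` as in this repository, is to stop the file search before it reaches the generated `flux-system` directory. The helper name `list_cluster_manifests` is hypothetical, and the trade-off is that the generated Flux manifests are no longer validated:

```shell
# Hypothetical helper: list YAML files directly inside <clusters_dir>/<cluster>/,
# skipping deeper directories such as <clusters_dir>/<cluster>/flux-system/.
list_cluster_manifests() {
  local clusters_dir="${1:-./clusters}"
  # Depth 1 is <cluster>, depth 2 is <cluster>/<file>.yaml; flux-system files are depth 3.
  find "$clusters_dir" -maxdepth 2 -type f -name '*.yaml' | sort
}

# Usage sketch: validate only the hand-written cluster files.
# list_cluster_manifests ./clusters | while IFS= read -r file; do
#   kubeval "$file" --strict --additional-schema-locations=file:///tmp/flux-crd-schemas
# done
```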

Just an FYI that's probably worth mentioning in the README.

The bitnami images used in this example are not built for arm64 processors, so they won't work on the newest generation of AWS EC2 t4g instance types.

I spent about two hours trying to figure out why nginx and redis wouldn't start before I thought to check whether the images were built for arm at all.

Hi,

I was just thinking about how I can better "sell" flux2 to my team of developers, who mainly use Helm to install their workloads in the cluster. The README says:

Note that with version: ">=1.0.0-alpha" we configure Flux to automatically upgrade the HelmRelease to the latest chart version including alpha, beta and pre-releases.

I am curious how the above actually works. Is it an automated pull/merge request that is created when a container image is updated, or does Flux automatically pull the latest tags/versions based on the configured interval?

Thanks!

Hello,

First of all, this repo has been very helpful!!!

The reason for opening this issue is that, when using sops to store encrypted secrets in a repo with a structure similar to this one, validate.sh exits with a non-zero exit code and these errors:

INFO - Validating kustomization ./sops/staging/

WARN - Set to ignore missing schemas

WARN - stdin contains an invalid Secret (kube-system.foo-secret) - sops: Additional property sops is not allowed

WARN - stdin contains an invalid Secret (kube-system.bar-secret) - sops: Additional property sops is not allowed

$ echo $?

1

I believe it is due to the nature of a sops-encrypted secret: it has a sops: section while being of kind: Secret, so it does not match the standard k8s Secret spec:

apiVersion: v1
data:
  address: ENC[AES256_GCM,data:************,type:str]
kind: Secret
metadata:
  creationTimestamp: null
  name: foo-secret
  namespace: kube-system
sops:
  kms: []
  gcp_kms: []
  azure_kv:
    - vault_url: https://kvname.vault.azure.net
      name: keyname
      version: ************
      created_at: "2021-12-09T14:00:56Z"
      enc: ************
  hc_vault: []
  age: []
  lastmodified: "2021-12-09T14:00:59Z"
  mac: ENC[AES256_GCM,data:************,type:str]
  pgp: []
  encrypted_regex: ^(data|stringData)$
  version: 3.7.1

To work around this, we updated the script as follows so that kubeval skips validation of Secrets:

# mirror kustomize-controller build options
kustomize_flags="--load-restrictor=LoadRestrictionsNone --reorder=legacy"
kustomize_config="kustomization.yaml"
kubeval_flags="--skip-kinds=Secret"

echo "INFO - Validating kustomize overlays"
find . -type f -name "$kustomize_config" -print0 | while IFS= read -r -d $'\0' file; do
  echo "INFO - Validating kustomization ${file/%$kustomize_config}"
  # $kustomize_flags and $kubeval_flags are left unquoted on purpose so they split into separate flags
  kustomize build "${file/%$kustomize_config}" $kustomize_flags | \
    kubeval --ignore-missing-schemas --strict --additional-schema-locations=file:///tmp/flux-crd-schemas $kubeval_flags
  # fail if the kustomize build step itself failed
  if [[ ${PIPESTATUS[0]} != 0 ]]; then
    exit 1
  fi
done

I would like to hear other ways folks think this could be addressed.

Thanks!
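One alternative to skipping every Secret, sketched here with a hypothetical `drop_sops_docs` helper: remove only the documents that carry a top-level `sops:` key from the `kustomize build` output, so that plain (unencrypted) Secrets are still validated. It assumes documents are separated by `---` lines and that the sops metadata starts with an unindented `sops:` line, as in the Secret above; it is plain awk, not a sops or kubeval feature:

```shell
# Hypothetical helper: read a multi-document YAML stream on stdin and drop every
# document that contains an unindented "sops:" line; all others pass through.
drop_sops_docs() {
  awk '
    # Print the buffered document unless it was marked as sops-encrypted.
    function emit_doc() {
      if (doc != "" && !is_sops) {
        if (printed) printf "---\n"
        printf "%s", doc
        printed = 1
      }
      doc = ""
      is_sops = 0
    }
    /^---[ \t]*$/ { emit_doc(); next }
    /^sops:/ { is_sops = 1 }
    { doc = doc $0 "\n" }
    END { emit_doc() }
  '
}
```

In the validation loop it would sit between `kustomize build` and `kubeval` (dropping `--skip-kinds=Secret`); `${PIPESTATUS[0]}` would still refer to the `kustomize build` step.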

I am on Ubuntu 20.04.

flux bootstrap github --context=staging --owner=${GITHUB_USER} --repository=${GITHUB_REPO} --branch=main --personal --path=clusters/staging

✗ context "staging" does not exist

I checked:

flux check

► checking prerequisites

✗ flux 0.30.2 <0.31.1 (new version is available, please upgrade)

✔ Kubernetes 1.23.3 >=1.20.6-0

► checking controllers

✔ helm-controller: deployment ready

► ghcr.io/fluxcd/helm-controller:v0.21.0

✔ kustomize-controller: deployment ready

► ghcr.io/fluxcd/kustomize-controller:v0.25.0

✔ notification-controller: deployment ready

► ghcr.io/fluxcd/notification-controller:v0.23.5

✔ source-controller: deployment ready

► ghcr.io/fluxcd/source-controller:v0.24.4

✔ all checks passed

It seems OK.

flux get sources all

NAME REVISION SUSPENDED READY MESSAGE

gitrepository/flux-system main/3fabbc2 False True stored artifact for revision 'main/3fabbc21c473f479389790de8d1daa20d207ebd6'

gitrepository/podinfo master/132f4e7 False True stored artifact for revision 'master/132f4e719209eb10b9485302f8593fc0e680f4fc'

Then I have problems here:

flux get kustomizations

NAME REVISION SUSPENDED READY MESSAGE

flux-system main/4b57c5b46656c4e71233a34d1dea0db75a40dba7 False False failed to decode Kubernetes YAML from /tmp/kustomization-3169253572/clusters/demo-cluster/podinfo-kustomization.yaml: missing Resource metadata

Why? What is wrong with the Kustomization? I cloned the repo locally and just changed the remote origin.
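Two quick local checks, sketched in bash with hypothetical helper names. The first confirms that a kubeconfig context literally named `staging` exists (the bootstrap command above passes `--context=staging`); the second lists YAML files with no top-level `metadata:` line, which is one possible reason for the `missing Resource metadata` decode error. The failing path, `clusters/demo-cluster/podinfo-kustomization.yaml`, does not appear in this example's layout, so it may also be worth checking where that file comes from:

```shell
# Hypothetical helper: succeed if a context name read on stdin (one per line, as
# printed by `kubectl config get-contexts -o name`) matches the argument exactly.
has_context() {
  grep -qx -- "$1"
}

# Hypothetical helper: list .yaml files under a directory that contain no line
# starting with "metadata:". kustomization.yaml files are skipped because a plain
# Kustomize config usually has no metadata. Multi-document files only need one
# such line to pass, so this is a coarse check.
yaml_without_metadata() {
  find "$1" -type f -name '*.yaml' ! -name 'kustomization.yaml' \
    -exec grep -L '^metadata:' {} + | sort
}
```

For example: `kubectl config get-contexts -o name | has_context staging || echo "no context named staging"`, then `yaml_without_metadata ./clusters`.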

The staging context sets up fine and everything launches, but when I try to spin up the production context I get an error and nothing proceeds:

iancote@iancote-XPS-13-7390:~/git/awx-k3s-flux$ flux bootstrap github \
  --context=staging \
  --owner=iancote \
  --repository=awx-k3s-flux \
  --branch=main \
  --personal \
  --path=clusters/staging

► connecting to github.com

► cloning branch "main" from Git repository "https://github.com/iancote/awx-k3s-flux.git"

✔ cloned repository

► generating component manifests

✔ generated component manifests

✔ committed sync manifests to "main" ("2f83add8aa71d4a6d36f3e136c0121e89644c287")

► pushing component manifests to "https://github.com/iancote/awx-k3s-flux.git"

► installing components in "flux-system" namespace

✔ installed components

✔ reconciled components

► determining if source secret "flux-system/flux-system" exists

► generating source secret

✔ public key: ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQD5CWGl4r459xB1USxuJFGwOYun2I/AWM6tGARfzcAUUP0sYhdfPrMVyfe2nWSkWVCP7fUd8YfzxxdW/wtLCnkwlUxtVFArV5BDuC3e7yCc+J63vMZw3AY7RpP9rdso4GJ0jCMJ6R0Aj3F7maVHi9fBgxIqZgq+hOKHuC8l6IaMadClKz9Dl7vCD97m6irOegQiRTmUbVMpdJWNkinWiyvf+1PoPhESXlkkk45uVI7bgI8oxsjz5S6ysQSkSrLfCygMzdjaKcU6IUQAT7YUAX/siqePbsI7SnKp7Y5vgF6tz9KnT9T6S2v8SnY2HgZHGN+Cl4/03etI430M+wKd0+F/

✔ configured deploy key "flux-system-main-flux-system-./clusters/staging" for "https://github.com/iancote/awx-k3s-flux"

► applying source secret "flux-system/flux-system"

✔ reconciled source secret

► generating sync manifests

✔ generated sync manifests

✔ committed sync manifests to "main" ("52e7cafe8eac4a06e88d32895189d293b538d375")

► pushing sync manifests to "https://github.com/iancote/awx-k3s-flux.git"

► applying sync manifests

✔ reconciled sync configuration

◎ waiting for Kustomization "flux-system/flux-system" to be reconciled

✔ Kustomization reconciled successfully

► confirming components are healthy

✔ source-controller: deployment ready

✔ kustomize-controller: deployment ready

✔ helm-controller: deployment ready

✔ notification-controller: deployment ready

✔ all components are healthy

iancote@iancote-XPS-13-7390:~/git/awx-k3s-flux$ kubectl config use-context production

Switched to context "production".

iancote@iancote-XPS-13-7390:~/git/awx-k3s-flux$ flux bootstrap github --context=production --owner=iancote --repository=awx-k3s-flux --branch=main --personal --path=clusters/production

► connecting to github.com

► cloning branch "main" from Git repository "https://github.com/iancote/awx-k3s-flux.git"

✔ cloned repository

► generating component manifests

✔ generated component manifests

✔ committed sync manifests to "main" ("710f4d5f4f3ef5da9c31631fb73f07dea7dc696a")

► pushing component manifests to "https://github.com/iancote/awx-k3s-flux.git"

✔ reconciled components

► determining if source secret "flux-system/flux-system" exists

✔ source secret up to date

✗ sync path configuration ("./clusters/production") would overwrite path ("./clusters/staging") of existing Kustomization

iancote@iancote-XPS-13-7390:~/git/awx-k3s-flux$ git pull

remote: Enumerating objects: 21, done.

remote: Counting objects: 100% (21/21), done.

remote: Compressing objects: 100% (16/16), done.

remote: Total 17 (delta 0), reused 16 (delta 0), pack-reused 0

Unpacking objects: 100% (17/17), 18.33 KiB | 3.67 MiB/s, done.

From github.com:iancote/awx-k3s-flux

ffb1ba9..710f4d5 main -> origin/main

Updating ffb1ba9..710f4d5

Fast-forward

clusters/production/flux-system/gotk-components.yaml | 2831 ++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

clusters/staging/flux-system/gotk-components.yaml | 2831 ++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

clusters/staging/flux-system/gotk-sync.yaml | 27 +

clusters/staging/flux-system/kustomization.yaml | 5 +

4 files changed, 5694 insertions(+)

create mode 100644 clusters/production/flux-system/gotk-components.yaml

create mode 100644 clusters/staging/flux-system/gotk-components.yaml

create mode 100644 clusters/staging/flux-system/gotk-sync.yaml

create mode 100644 clusters/staging/flux-system/kustomization.yaml

iancote@iancote-XPS-13-7390:~/git/awx-k3s-flux$ tree

.
├── apps
│   ├── base
│   │   └── podinfo
│   │       ├── kustomization.yaml
│   │       ├── namespace.yaml
│   │       └── release.yaml
│   ├── production
│   │   ├── kustomization.yaml
│   │   └── podinfo-values.yaml
│   └── staging
│       ├── kustomization.yaml
│       └── podinfo-values.yaml
├── clusters
│   ├── production
│   │   ├── apps.yaml
│   │   ├── flux-system
│   │   │   └── gotk-components.yaml
│   │   └── infrastructure.yaml
│   └── staging
│       ├── apps.yaml
│       ├── flux-system
│       │   ├── gotk-components.yaml
│       │   ├── gotk-sync.yaml
│       │   └── kustomization.yaml
│       └── infrastructure.yaml
└── infrastructure
    ├── kustomization.yaml
    ├── nginx
    │   ├── kustomization.yaml
    │   ├── namespace.yaml
    │   └── release.yaml
    ├── redis
    │   ├── kustomization.yaml
    │   ├── kustomizeconfig.yaml
    │   ├── namespace.yaml
    │   ├── release.yaml
    │   └── values.yaml
    └── sources
        ├── bitnami.yaml
        ├── kustomization.yaml
        └── podinfo.yaml

Any ideas what would cause that?

Thanks,

-ian
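The error says the cluster reached through the `production` context already has a `flux-system` Kustomization with path `./clusters/staging`. One likely explanation (an assumption, not confirmed in the report) is that the `staging` and `production` contexts point at the same API server. A bash sketch to compare them; `context_server` and `same_cluster` are hypothetical helpers built on `kubectl config view` with JSONPath filters:

```shell
# Print the API server URL of a kubeconfig context (context -> cluster -> server).
context_server() {
  local ctx="$1" cluster
  cluster=$(kubectl config view -o jsonpath="{.contexts[?(@.name==\"${ctx}\")].context.cluster}")
  kubectl config view -o jsonpath="{.clusters[?(@.name==\"${cluster}\")].cluster.server}"
}

# Succeed if two contexts resolve to the same, non-empty API server URL.
same_cluster() {
  local a b
  a=$(context_server "$1")
  b=$(context_server "$2")
  [ -n "$a" ] && [ "$a" = "$b" ]
}
```

For example, `same_cluster staging production && echo "both contexts target the same cluster"` before running the second bootstrap.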

Hello! How can I select which pre-release I want to install?

For example, I have a chart that has one release version and many pre-releases named 1.x-dev and 1.x-uat. Because Flux uses semver to select the version, it always picks the versions ending in -uat (pre-release identifiers are compared in ASCII sort order, so "uat" sorts after "dev"), and as a result all the non-production environments use the same chart version.

Is there any way to filter by suffix, or some other way to select the chart depending on the environment? We are using the same directory structure as in the example.

Thanks!

Disclaimer: I'm new to flux.

Steps to reproduce:

► checking prerequisites

✔ kubectl 1.21.2 >=1.18.0-0

✔ Kubernetes 1.20.7-eks-8be107 >=1.16.0-0

► checking controllers

✔ helm-controller: deployment ready

► ghcr.io/fluxcd/helm-controller:v0.11.1

✔ kustomize-controller: deployment ready

► ghcr.io/fluxcd/kustomize-controller:v0.13.2

✔ notification-controller: deployment ready

► ghcr.io/fluxcd/notification-controller:v0.15.0

✔ source-controller: deployment ready

► ghcr.io/fluxcd/source-controller:v0.15.3

✔ all checks passed

context=$(kubectl config current-context)

export GITHUB_TOKEN=ksjdfkjasdkfjaksjdf

export GITHUB_USER=ME

export GITHUB_REPO=flux-tutorial-2

flux bootstrap github \
  --context=${context} \
  --owner=${GITHUB_USER} \
  --repository=${GITHUB_REPO} \
  --branch=main \
  --personal \
  --path=clusters/staging

► checking prerequisites

✔ kubectl 1.21.2 >=1.18.0-0

✔ Kubernetes 1.20.7-eks-8be107 >=1.16.0-0

► checking controllers

✔ helm-controller: deployment ready

► ghcr.io/fluxcd/helm-controller:v0.11.1

✔ kustomize-controller: deployment ready

► ghcr.io/fluxcd/kustomize-controller:v0.13.2

✔ notification-controller: deployment ready

► ghcr.io/fluxcd/notification-controller:v0.15.0

✔ source-controller: deployment ready

► ghcr.io/fluxcd/source-controller:v0.15.3

✔ all checks passed

flux get helmreleases ‐‐all‐namespaces # no HelmRelease objects found in flux-system namespace

There seems to be a missing step in the instructions. If I'm missing something, I'm not sure what, as I've re-read and retried three times.
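In the command as quoted above, `‐‐all‐namespaces` appears to be written with Unicode hyphen characters rather than ASCII `-` (this may also be an artifact of copying from the issue page). If that is what was typed, the CLI would not parse it as a flag, which would fit the message mentioning only the flux-system namespace. A small bash sketch for catching this before running a pasted command; `has_non_ascii` is a hypothetical helper:

```shell
# Hypothetical helper: succeed if the argument contains any byte outside
# printable ASCII (space through tilde), e.g. Unicode hyphens or dashes.
has_non_ascii() {
  # In the C locale, [^ -~] matches any byte that is not printable ASCII.
  printf '%s\n' "$1" | LC_ALL=C grep -q '[^ -~]'
}
```

For example: `has_non_ascii "$cmd" && echo "warning: command contains non-ASCII characters"`.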

Hey, when the e2e workflow is running, I'm getting this error:

flux-system gitrepository/flux-system False invalid 'ssh' auth option: 'identity' is required

What might be the reason for this failure?

Hi,

First of all, thank you for your amazing work on this! I have based our flux2+kustomize repo on this and am wondering how I would integrate ImageUpdateAutomation into it. I have been unsuccessful getting it to work, which I'm starting to think may be related to fluxcd/flux2#1207. It's also likely an implementation error, so let me explain my setup:

ImageUpdateAutomation in infrastructure

infrastructure/base/gitops-toolkit

├── image-update-automation.yaml

├── kustomization.yaml

├── notifications.yaml

└── secrets.yaml

The spec is very standard, with the path omitted (it's patched in infrastructure/<env>/image-update-automation-patch.yaml). The resource within the cluster includes the path:

apiVersion: image.toolkit.fluxcd.io/v1alpha2
kind: ImageUpdateAutomation
metadata:
  name: flux-system
  namespace: flux-system
spec:
  interval: 1m0s
  sourceRef:
    kind: GitRepository
    name: flux-system
  git:
    checkout:
      ref:
        branch: main
    commit:
      author:
        email: [email protected]
        name: fluxcdbot
      messageTemplate: '{{range .Updated.Images}}{{println .}}{{end}}'
    push:
      branch: main
  update:
    strategy: Setters
    # path: ./clusters/development # Patched in environment

The anticipated target is a HelmRelease in services/company/portal/release.yaml. I have tried adding the image policy marker in the deployment of the Helm chart itself, in the HelmRelease, and in the Kustomization images: [] field, but nothing works. I can see that the image policies in the cluster are up to date with the latest matching tags, so it seems the image-automation-controller is simply not doing its job. This makes me think the policy markers are not being seen or are not making it into the cluster. There are no logs from the image-automation-controller indicating that it is erroring or doing any work at all.

services

├── company

│ └── portal

│ ├── image-policy.yaml

│ ├── image-repo.yaml

│ ├── kustomization.yaml

│ ├── namespace.yaml

│ ├── notifications.yaml

│ ├── release.yaml

│ └── secrets.yaml

├── development

│ ├── image-policy-patches.yaml

│ ├── image-repo-patches.yaml

│ ├── kustomization.yaml

│ ├── release-patches.yaml

│ └── secret-patches.yaml
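ImageUpdateAutomation only looks for setter markers in files under `spec.update.path`. In the setup above that path is patched to an environment directory (for example `./clusters/development`), while the target HelmRelease lives in `services/company/portal/release.yaml`, outside that directory; if so, the markers would never be scanned. A quick local check, as a bash sketch with a hypothetical `markers_under` helper that searches for the `$imagepolicy` marker comment:

```shell
# Hypothetical helper: list YAML files under a directory that contain a Flux
# image policy marker, e.g. # {"$imagepolicy": "flux-system:portal"}
markers_under() {
  # -r: recurse, -l: print file names only; "\$" matches a literal dollar sign.
  grep -rl --include='*.yaml' '"\$imagepolicy"' "$1" | sort
}
```

Comparing `markers_under ./clusters/development` (what the automation would scan) with `markers_under ./services` (where the markers actually are) would show whether the update path covers the HelmRelease.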

There is a discrepancy in the example that confuses me:

infrastructure/sources/podinfo.yaml does not specify a namespace, so the HelmRepository is placed in the default namespace.

apps/base/podinfo/release.yaml specifies as its sourceRef a HelmRepository named podinfo but in the flux-system namespace.

Should these two not refer to the same namespace?

~ flux get helmreleases --all-namespaces

NAMESPACE NAME REVISION SUSPENDED READY MESSAGE

cert-manager cert-manager v1.14.4 False True Helm install succeeded for release cert-manager/cert-manager.v1 with chart [email protected]

ingress-nginx ingress-nginx 4.10.0 False True Helm install succeeded for release ingress-nginx/ingress-nginx.v1 with chart [email protected]

podinfo podinfo 6.6.2 False True Helm install succeeded for release podinfo/podinfo.v1 with chart [email protected]

~ curl -H "Host: podinfo.production" http://localhost:8080

{

"hostname": "podinfo-75b9f9f57f-5fkmr",

"version": "6.6.2",

"revision": "8d010c498e79f499d1b37480507ca1ffb81a3bf7",

"color": "#34577c",

"logo": "https://raw.githubusercontent.com/stefanprodan/podinfo/gh-pages/cuddle_clap.gif",

"message": "greetings from podinfo v6.6.2",

"goos": "linux",

"goarch": "amd64",

"runtime": "go1.22.2",

"num_goroutine": "9",

"num_cpu": "12"

}

~ kc config use-context staging

Switched to context "staging".

~ flux bootstrap github \
  --context=staging \
  --owner=${GITHUB_USER} \
  --repository=${GITHUB_REPO} \
  --branch=main \
  --personal \
  --path=clusters/staging

► connecting to github.com

► cloning branch "main" from Git repository "https://github.com/bunengxiu/gitops.git"

✔ cloned repository

► generating component manifests

✔ generated component manifests

✔ component manifests are up to date

✔ reconciled components

► determining if source secret "flux-system/flux-system" exists

✔ source secret up to date

✗ sync path configuration ("./clusters/staging") would overwrite path ("./clusters/production") of existing Kustomizatio

I have cloned this repo and bootstrapped Flux using the getting started guide:

2024-01-16T08:08:35.453Z info Kustomization/apps.flux-system - Dependencies do not meet ready condition, retrying in 30s

2024-01-16T08:08:35.459Z info Kustomization/infra-configs.flux-system - Dependencies do not meet ready condition, retrying in 30s

The issue is caused by the existence of clusters/production/infrastructure.yaml. If I remove it, everything seems to work fine.

The Dockerfile in .github/actions/tools in the fork of this repository I'm working with is rightfully flagged by aquasecurity/trivy-action as a high-severity issue: running containers as the root user goes against container security best practices. I propose we update the Dockerfile to run as user 1001.
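A small bash sketch for checking the change locally: it reports whether a Dockerfile's final stage still runs as root, i.e. has no `USER` instruction or sets `root`/`0`. The helper name is hypothetical, the parsing is deliberately simple (it ignores ARG-based users and line continuations), and it is no replacement for the Trivy scan:

```shell
# Hypothetical helper: succeed if the final stage of a Dockerfile runs as root.
# The last USER seen after the last FROM wins; no USER at all means root.
dockerfile_runs_as_root() {
  local user
  user=$(awk '
    toupper($1) == "FROM" { u = "" }
    toupper($1) == "USER" { u = $2 }
    END { print u }
  ' "$1")
  case "$user" in
    "" | root | 0 | root:* | 0:*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example: `dockerfile_runs_as_root path/to/Dockerfile && echo "final stage runs as root; consider USER 1001"`. Any tools the image installs would typically need to be set up before the `USER 1001` line.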

I'm trying to deploy a helm chart and then patch one of the Deployments to add a label.

Try as I might, I cannot get this to work: https://github.com/agardnerIT/otel-demo-cdr/blob/main/clusters/otel-demo-cluster/apps/otel-demo-app/kustomization.yaml

I'm new to Kustomize and Flux, which probably doesn't help, so I'm more than happy to rework things or read up to understand what I'm doing wrong. Please point me in the right direction :)

There is no namespace in the production file, while it exists in the staging one?

vs.

https://github.com/fluxcd/flux2-kustomize-helm-example/blob/main/apps/staging/kustomization.yaml#L3

I'm scratching my head trying to improve upon our current setup and limit duplication to a minimum.

We are using FluxCD on multiple clusters and multiple instances of the same app on different namespaces. This is the apps tree:

./apps/
├── base
│   ├── instance-01
│   │   ├── front-proxy
│   │   │   ├── kustomization.yaml
│   │   │   └── release.yaml
│   │   └── ... - other apps directories
│   └── instance-02
│       ├── front-proxy
│       │   ├── kustomization.yaml
│       │   └── release.yaml
│       └── ... - other apps directories
├── development
│   ├── instance-01
│   │   ├── front-proxy
│   │   │   ├── kustomization.yaml
│   │   │   └── front-proxy-values.yaml
│   │   ├── ... - other apps directories
│   │   ├── namespace.yaml
│   │   ├── kustomization.yaml
│   │   ├── kustomizeconfig.yaml
│   │   └── shared.yaml
│   └── instance-02
│       ├── front-proxy
│       │   ├── kustomization.yaml
│       │   └── front-proxy-values.yaml
│       ├── ... - other apps directories
│       ├── namespace.yaml
│       ├── kustomization.yaml
│       ├── kustomizeconfig.yaml
│       └── shared.yaml
└── test
    ├── instance-01
    │   ├── front-proxy
    │   │   ├── kustomization.yaml
    │   │   └── front-proxy-values.yaml
    │   ├── ... - other apps directories
    │   ├── namespace.yaml
    │   ├── kustomization.yaml
    │   ├── kustomizeconfig.yaml
    │   └── shared.yaml
    └── instance-02
        ├── front-proxy
        │   ├── kustomization.yaml
        │   └── front-proxy-values.yaml
        ├── ... - other apps directories
        ├── namespace.yaml
        ├── kustomization.yaml
        ├── kustomizeconfig.yaml
        └── shared.yaml

Where:

The only difference between /apps/base/instance-01/front-proxy/release.yaml and /apps/base/instance-02/front-proxy/release.yaml is the metadata:

/apps/base/instance-01/front-proxy/release.yaml:

metadata:
  name: front-proxy
  namespace: flux-instance-01

/apps/base/instance-02/front-proxy/release.yaml:

metadata:
  name: front-proxy
  namespace: flux-instance-02

And then the apps are deployed with patchesStrategicMerge:

apiVersion: kustomize.config.k8s.io/v1beta1

kind: Kustomization

namespace: flux-instance-01

resources:

- ../../../base/instance-01/front-proxy

patchesStrategicMerge:

- front-proxy-values.yaml

Everything works correctly this way, but I dislike the amount of nearly identical code in /apps/base/instance-01 and /apps/base/instance-02.

The reason for creating two bases, one per instance, was the HelmRelease name uniqueness requirement, but I'm wondering if there is a way to use a shared base instead of one per targetNamespace.

Is there a way to load patches from a file, similar to this Kustomize config:

apiVersion: kustomize.config.k8s.io/v1beta1

kind: Kustomization

patchesStrategicMerge:

- patch-exclude.yaml

I find it troublesome to use inline patch strings in the Flux Kustomization.

Thanks for the tips.

I am getting this error when applying a HelmRelease; it comes from the helm-controller logs. Can you please help me with this?

{"level":"error","ts":"2021-12-18T13:47:42.914Z","logger":"controller.helmrelease","msg":"Reconciler error","reconciler group":"helm.toolkit.fluxcd.io","reconciler kind":"HelmRelease","name":"nginx","namespace":"nginx","error":"Helm install failed: unable to build kubernetes objects from release manifest: [unable to recognize \"\": no matches for kind \"ClusterRole\" in version \"rbac.authorization.k8s.io/v1beta1\", unable to recognize \"\": no matches for kind \"ClusterRoleBinding\" in version \"rbac.authorization.k8s.io/v1beta1\", unable to recognize \"\": no matches for kind \"Role\" in version \"rbac.authorization.k8s.io/v1beta1\", unable to recognize \"\": no matches for kind \"RoleBinding\" in version \"rbac.authorization.k8s.io/v1beta1\"]"}

flux get all (shows that the chart and repository are fetched):

NAME READY MESSAGE REVISION SUSPENDED

gitrepository/flux-system True Fetched revision: master/065af7d92b738636805ba541f305bf86487e43a5 master/065af7d92b738636805ba541f305bf86487e43a5 False

NAME READY MESSAGE REVISION SUSPENDED

helmrepository/bitnami True Fetched revision: 3badadec8260194b80d3719f69bf7009962060043c63e4335b17263c678ccb2b 3badadec8260194b80d3719f69bf7009962060043c63e4335b17263c678ccb2b False

NAME READY MESSAGE REVISION SUSPENDED

helmchart/nginx-nginx True Pulled 'nginx-ingress-controller' chart with version '5.6.14'. 5.6.14 False

NAME READY MESSAGE REVISION SUSPENDED

kustomization/flux-system True Applied revision: master/065af7d92b738636805ba541f305bf86487e43a5 master/065af7d92b738636805ba541f305bf86487e43a5 False
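The `rbac.authorization.k8s.io/v1beta1` API was removed in Kubernetes 1.22, so a chart version that still renders RBAC objects with that apiVersion cannot be installed on newer clusters. The chart pulled here is `nginx-ingress-controller` 5.6.14, so moving to a newer chart version is probably the first thing to try. A bash/awk sketch for spotting such objects in rendered manifests; `removed_rbac_kinds` is a hypothetical helper and assumes one top-level `apiVersion:` and `kind:` line per document:

```shell
# Hypothetical helper: read rendered manifests on stdin and print the kind of
# every document whose apiVersion is rbac.authorization.k8s.io/v1beta1.
removed_rbac_kinds() {
  awk '
    function check_doc() {
      if (api == "rbac.authorization.k8s.io/v1beta1" && kind != "") print kind
      api = ""
      kind = ""
    }
    /^---/ { check_doc(); next }
    /^apiVersion:/ { api = $2 }
    /^kind:/ { kind = $2 }
    END { check_doc() }
  '
}
```

For example, after `helm repo add` for the bitnami repository: `helm template nginx bitnami/nginx-ingress-controller --version 5.6.14 | removed_rbac_kinds`.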

Hi,

I'm seeing this error locally: "podinfo" not found. Can you please help? All the manifest files look fine to me.

NAMESPACE NAME READY MESSAGE REVISION SUSPENDED

flux-system helmchart/podinfo-podinfo False failed to retrieve source: HelmRepository.source.toolkit.fluxcd.io "podinfo" not found False

NAMESPACE NAME READY MESSAGE REVISION SUSPENDED

podinfo helmrelease/podinfo False HelmChart 'flux-system/podinfo-podinfo' is not ready False

Hey

I was trying to bootstrap the cluster but the source-controller has some issues with helmchart permission:

E0203 20:44:03.400987 1 reflector.go:138] k8s.io/[email protected]/tools/cache/reflector.go:167: Failed to watch *v1beta1.HelmChart: failed to list *v1beta1.HelmChart: helmcharts.source.toolkit.fluxcd.io is forbidden: User "system:serviceaccount:flux-system:source-controller" cannot list resource "helmcharts" in API group "source.toolkit.fluxcd.io" at the cluster scope

Hey there! Thanks for providing an example of layout. I have a common question.

What's the advantage of using kustomize.toolkit.fluxcd.io/v1beta1 (kind: Kustomization), which points to a folder in the root of the repo, instead of including references to the base / infrastructure folders with a plain kustomization, as in Flux v1? Having two production folders is confusing, because we have to remember to jump between folders to make separate changes, e.g. for apps and infrastructure (so there are two sources of truth, apps and infra, for one cluster).

I am just learning Flux v2 and want to understand the advantage of the layout used in this example.

Thanks in advance.

Hey,

I get the concept of this DRY example for Helm releases. It is fairly simple with a single app, but if we were to add another app, say one deployed only in staging that requires a PostgreSQL database, what would it look like?

I guess I'm trying to understand how to do DRY in my context without creating unnecessary overhead for new team members in the future. The simplest approach would be to declare the app in each cluster folder: easy to read and not that painful to maintain, but quite a lot of copy/paste.

So, given your example and the need above, would it look like this (I removed the things that don't change, for clarity):

flux-example
├── apps
│   ├── base
│   │   ├── myapp
│   │   │   ├── kustomization.yaml
│   │   │   ├── namespace.yaml
│   │   │   └── release.yaml
│   │   └── podinfo
│   │       ├── kustomization.yaml
│   │       ├── namespace.yaml
│   │       └── release.yaml
│   └── staging
│       ├── myapp
│       │   ├── kustomization.yaml
│       │   └── myapp-values.yaml
│       └── podinfo
│           ├── kustomization.yaml
│           └── podinfo-values.yaml
└── infrastructure
    └── postgre
        ├── kustomization.yaml
        ├── namespace.yaml
        └── release.yaml

9 directories, 13 files

I'm not trying to fit any best practice, and I do understand it's up to us to define our repo structure to fit our needs, but I'm trying to check whether I understood it correctly, because a good start is important if we are to deploy tens of charts with Flux.

The HelmRepository apiVersion update from source.toolkit.fluxcd.io/v1beta1 to source.toolkit.fluxcd.io/v1beta2 gave me the following error when applied to the cluster:

"no matches for kind \"HelmRepository\" in version \"source.toolkit.fluxcd.io/v1beta2\"

Reverting both infrastructure/sources/bitnami.yaml and infrastructure/sources/podinfo.yaml fixed the issue for me.

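The error means the API server has no HelmRepository kind at `source.toolkit.fluxcd.io/v1beta2`, which would be expected if the Flux CRDs installed in the cluster predate that API version. A sketch of checking which versions the installed CRD defines, assuming `kubectl` access; `crd_has_version` is a hypothetical helper that reads the space-separated list printed by the JSONPath in the usage comment. If `v1beta2` is missing, upgrading Flux in the cluster, or keeping the manifests on a version it defines (as done here by reverting), would be the two options:

```shell
# Hypothetical helper: succeed if the space-separated version list on stdin
# contains the given API version name (for example "v1beta2").
crd_has_version() {
  local wanted="$1" version
  for version in $(cat); do
    [ "$version" = "$wanted" ] && return 0
  done
  return 1
}

# Usage sketch (needs cluster access):
# kubectl get crd helmrepositories.source.toolkit.fluxcd.io \
#   -o jsonpath='{.spec.versions[*].name}' | crd_has_version v1beta2 \
#   || echo "installed HelmRepository CRD does not define v1beta2"
```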
Hi,

Since the bootstrap command fails in our environment:

flux bootstrap git \

--url=ssh://[email protected]/v3/<org>/<product>/<repo> \

--branch=master \

--path=clusters/staging \

--private-key-file=./identity

gives:

failed to clone repository: empty git-upload-pack given

What would be the equivalent sequence of commands, without bootstrap, to achieve the same result?

Thanks for your help
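A rough sketch of doing the in-cluster part by hand with existing Flux CLI commands (`flux install`, `flux create secret git`, `flux create source git`, `flux create kustomization`). Unlike bootstrap, it does not commit the Flux manifests to the repository, and if the `empty git-upload-pack given` error comes from how the Git server answers clones, source-controller may run into the same problem. The wrapper below only prints the commands so they can be reviewed first; `print_manual_bootstrap` and its argument order are hypothetical, and the intervals are placeholder values:

```shell
# Hypothetical helper: print (not run) a manual approximation of
# `flux bootstrap git` for an existing repository. Review before running.
# Arguments: repository URL, branch, path inside the repo, private key file.
print_manual_bootstrap() {
  local url="$1" branch="$2" path="$3" key_file="$4"
  cat <<EOF
flux install
flux create secret git flux-system --url=${url} --private-key-file=${key_file}
flux create source git flux-system --url=${url} --branch=${branch} --secret-ref=flux-system --interval=1m
flux create kustomization flux-system --source=GitRepository/flux-system --path=${path} --prune=true --interval=10m
EOF
}
```

For example, `print_manual_bootstrap "ssh://git@example.com/org/repo" master clusters/staging ./identity` prints the four commands with the placeholder URL filled in.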

We have the following setup:

deploy-charts.yaml

apiVersion: kustomize.toolkit.fluxcd.io/v1beta2
kind: Kustomization
metadata:
  name: 3-deploy-helmcharts
  namespace: flux-system
spec:
  path: ./infrastructure/types/dev-hw
  postBuild:
    substitute:
      sde_suspend_release: "false"

types:

apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
metadata:
  name: deploy-charts-dev-hw
resources:
  - ../../applications/sde

apiVersion: helm.toolkit.fluxcd.io/v2beta1
kind: HelmRelease
metadata:
  name: sde
  namespace: sde
spec:
  suspend: ${sde_suspend_release}
  ...

However, the attached validate.sh script has no way to validate this. If we specify false without quotes, the Flux Kustomization (kustomize.toolkit.fluxcd.io) fails validation because the value is not a string:

stdin - Kustomization 3-deploy-helmcharts is invalid: For field spec.postBuild.substitute.sde_suspend_release: Invalid type. Expected: string, given: boolean

If we specify it as a string, validation of the HelmRelease says it needs to be a boolean:

INFO - Validating kustomization ./infrastructure/applications/sde/

stdin - HelmRelease sde is invalid: For field spec.suspend: Invalid type. Expected: boolean, given: string

Is there any way to make this work?
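Flux applies `postBuild.substitute` inside kustomize-controller after the build, so a validator that runs on raw `kustomize build` output sees the literal `${sde_suspend_release}` string. One workaround, sketched in bash: keep the value quoted (`"false"`) in the Flux Kustomization, which satisfies its schema, and substitute the variable in the rendered HelmRelease before validating it. `substitute_var` is hypothetical and uses sed, so values containing `/`, `&` or newlines are not handled:

```shell
# Hypothetical helper: replace every literal ${NAME} on stdin with VALUE.
# Assumes VALUE contains no '/', '&' or newline characters (special to sed).
substitute_var() {
  local name="$1" value="$2"
  # Builds the sed expression s/\${NAME}/VALUE/g; "\$" matches a literal dollar.
  sed "s/\\\${${name}}/${value}/g"
}
```

In validate.sh, `kustomize build ./infrastructure/applications/sde | substitute_var sde_suspend_release false` would then be piped into the same validator call as before. Recent Flux CLI releases also ship a `flux envsubst` command that may be a closer match to the controller's behavior; check `flux envsubst --help` for the version in use.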

Hi,

I am trying to configure multiple K8s clusters via a single Flux instance and a single repo with the following process. Please note cluster provisioning is handled outside of Flux and without using CAPI.

I use Terraform to create a GitLab repo, install Flux on the management cluster, and sync it with the repo.

Next, I clone the repo and add sync files so that it deploys workloads on the remote cluster using a KUBECONFIG secret for that cluster.

It is a bit hard to find examples and detailed documentation for that particular use case. Could you create one?

Thank you very much!

It would be very helpful if the flux get ... command also showed the commit message and the time of the commit.

Right now, looking at the output, I don't know whether my last change has been reconciled yet, and I also don't know what the SHA refers to unless I copy it and go back to GitHub to check.

I am using a customized version of the e2e.yaml workflow, but one thing I have had trouble with is how to use it when the cluster I am testing relies on more complex infrastructure, e.g. cert-manager with dns01 challenges.

In those cases I am missing some way to template the cluster configuration, so that self-signed certs and local domain names are used when running in CI. Is there some way to either edit the YAML files before Flux reconciles them, or for the Kustomization to read in external variables before reconciliation?

I have tried to accomplish the first, using yq to successfully change the YAML files to a working configuration, but I am not able to apply it, because Flux cannot sync from local files and always reads the files from the Git source.

Am I missing something, or has anyone else managed to create stable workflows for more complex environments?

I forked this repo, added a new cluster in clusters/development and bootstrapped Flux on a local kind cluster.

flux bootstrap github \

--owner=itapai \

--repository=flux2-kustomize-helm-example \

--branch=master \

--path=clusters/development \

--personal

flux check
► checking prerequisites
✔ Kubernetes 1.25.2 >=1.20.6-0
► checking controllers
✔ helm-controller: deployment ready
► ghcr.io/fluxcd/helm-controller:v0.25.0
✔ kustomize-controller: deployment ready
► ghcr.io/fluxcd/kustomize-controller:v0.29.0
✔ notification-controller: deployment ready
► ghcr.io/fluxcd/notification-controller:v0.27.0
✔ source-controller: deployment ready
► ghcr.io/fluxcd/source-controller:v0.30.0
► checking crds
✔ alerts.notification.toolkit.fluxcd.io/v1beta1
✔ buckets.source.toolkit.fluxcd.io/v1beta2
✔ gitrepositories.source.toolkit.fluxcd.io/v1beta2
✔ helmcharts.source.toolkit.fluxcd.io/v1beta2
✔ helmreleases.helm.toolkit.fluxcd.io/v2beta1
✔ helmrepositories.source.toolkit.fluxcd.io/v1beta2
✔ kustomizations.kustomize.toolkit.fluxcd.io/v1beta2
✔ ocirepositories.source.toolkit.fluxcd.io/v1beta2
✔ providers.notification.toolkit.fluxcd.io/v1beta1
✔ receivers.notification.toolkit.fluxcd.io/v1beta1
✔ all checks passed

k get no -o wide
NAME                  STATUS   ROLES           AGE   VERSION   INTERNAL-IP   EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION    CONTAINER-RUNTIME
local-control-plane   Ready    control-plane   37m   v1.25.2   172.24.0.2    <none>        Ubuntu 22.04.1 LTS   5.19.12-arch1-1   containerd://1.6.8

k get svc -n nginx
NAME                                       TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)                      AGE
nginx-ingress-controller                   NodePort    10.96.186.228   <none>        80:32642/TCP,443:30051/TCP   32m
nginx-ingress-controller-default-backend   ClusterIP   10.96.218.142   <none>        80/TCP                       32m

When I try to curl it with the url, I get this error:

curl 172.24.0.2:32642 -H "Host: podinfo.development"

<html>
<head><title>503 Service Temporarily Unavailable</title></head>
<body>
<center><h1>503 Service Temporarily Unavailable</h1></center>
<hr><center>nginx</center>
</body>
</html>

And this error appears in the nginx pod logs:

k logs -n nginx nginx-ingress-controller-598f55d78c-6pcbq
...
E1003 18:57:16.549786 1 reflector.go:140] k8s.io/[email protected]/tools/cache/reflector.go:169: Failed to watch *v1.EndpointSlice: failed to list *v1.EndpointSlice: endpointslices.discovery.k8s.io is forbidden: User "system:serviceaccount:nginx:nginx-ingress-controller" cannot list resource "endpointslices" in API group "discovery.k8s.io" at the cluster scope
W1003 18:57:47.749632 1 reflector.go:424] k8s.io/[email protected]/tools/cache/reflector.go:169: failed to list *v1.EndpointSlice: endpointslices.discovery.k8s.io is forbidden: User "system:serviceaccount:nginx:nginx-ingress-controller" cannot list resource "endpointslices" in API group "discovery.k8s.io" at the cluster scope
E1003 18:57:47.749654 1 reflector.go:140] k8s.io/[email protected]/tools/cache/reflector.go:169: Failed to watch *v1.EndpointSlice: failed to list *v1.EndpointSlice: endpointslices.discovery.k8s.io is forbidden: User "system:serviceaccount:nginx:nginx-ingress-controller" cannot list resource "endpointslices" in API group "discovery.k8s.io" at the cluster scope

However, if I port-forward and curl, it works:

k port-forward -n podinfo svc/podinfo 8088:9898
curl -H "Host: podinfo.development" localhost:8088
{
  "hostname": "podinfo-6cd678c96-tqqkg",
  "version": "6.2.1",
  "revision": "44157ecd84c0d78b17e4d7b43f2a7bb316372d6c",
  "color": "#34577c",
  "logo": "https://raw.githubusercontent.com/stefanprodan/podinfo/gh-pages/cuddle_clap.gif",
  "message": "greetings from podinfo v6.2.1",
  "goos": "linux",
  "goarch": "amd64",
  "runtime": "go1.19.1",
  "num_goroutine": "9",
  "num_cpu": "8"
}

I tried the same thing on a managed Kubernetes service (DigitalOcean) with a LoadBalancer service and got the same error in the nginx-ingress pod, while curl returned "Empty reply from server".

How can I access the service via url?
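The log lines say the controller's ServiceAccount (`nginx-ingress-controller` in namespace `nginx`) is not allowed to list `endpointslices` in the `discovery.k8s.io` API group. Without that permission the controller would likely have no backend endpoints to route to, which is consistent with the 503. One plausible cause is a chart whose RBAC rules are older than the controller version it deploys, in which case upgrading the chart is the cleaner fix. As a stopgap, a ClusterRole and binding like the following should grant the missing permission (the role and binding names are hypothetical; the ServiceAccount comes from the log):

```yaml
# Sketch: give the ingress controller's ServiceAccount read access to
# EndpointSlices cluster-wide. Role/binding names are hypothetical.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: nginx-ingress-endpointslices
rules:
  - apiGroups: ["discovery.k8s.io"]
    resources: ["endpointslices"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: nginx-ingress-endpointslices
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: nginx-ingress-endpointslices
subjects:
  - kind: ServiceAccount
    name: nginx-ingress-controller   # from the "forbidden" log lines
    namespace: nginx
```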

Sorry if this is a very basic question, but I'm having a hard time understanding the purpose of the kustomization.yaml files in the configs and controllers folders inside infrastructure.

The only thing inside each file is a list of the files in that folder, as resources.

Are these files necessary? There doesn't seem to be any customization applied to the resources.
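A `kustomization.yaml` that only lists `resources` still does one thing: it pins exactly which files in the folder are built, and it gives a place to add patches or a `namespace` later. When a Flux Kustomization's `path` contains no `kustomization.yaml`, kustomize-controller generates one that includes all manifests found under that path, so the file is optional in that case but makes the selection explicit. A sketch with hypothetical file names:

```yaml
# infrastructure/controllers/kustomization.yaml (file names hypothetical)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
# Only the files listed here are built and applied; any other YAML file
# in this folder is ignored by the build.
resources:
  - ingress-nginx.yaml
  - cert-manager.yaml
```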

Let's say we release a new podinfo chart version. Since the HelmRelease checks for new chart versions every 5m, the new podinfo version will be deployed to both the production and staging environments.

Is there a way to deploy to the staging environment first?
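One common approach, sketched here as an assumption rather than as this repo's setup: let staging track a semver range (e.g. `">=6.0.0"`) while production pins an exact chart version, and bump the production pin only after staging looks good. Assuming the HelmRelease is named `podinfo` and the production overlay builds from `../base/podinfo` (hypothetical paths), the production `kustomization.yaml` could patch the version:

```yaml
# apps/production/kustomization.yaml (paths and version are hypothetical)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: podinfo
resources:
  - ../base/podinfo
patches:
  # JSON 6902 patch applied to the production HelmRelease only.
  - target:
      kind: HelmRelease
      name: podinfo
    patch: |
      # `add` also overwrites the value if the base already sets one.
      - op: add
        path: /spec/chart/spec/version
        value: "6.2.1"
```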

The following error occurs during bootstrap. Any hints on this?

$ flux bootstrap github --owner=${GITHUB_USER} --repository=${GITHUB_REPO} --branch=master --path=clusters/staging --personal
► connecting to github.com
✔ repository "https://github.com/xxxxxxx/flux2-kustomize-helm-example" created
► cloning branch "master" from Git repository "https://github.com/xxxxxxx/flux2-kustomize-helm-example.git"
✔ cloned repository
► generating component manifests
✔ generated component manifests
✔ committed sync manifests to "master" ("60adc00a8e500b024dc6059cbcb605297e10aa5c")
► pushing component manifests to "https://github.com/xxxxxx/flux2-kustomize-helm-example.git"
✔ reconciled components
► determining if source secret "flux-system/flux-system" exists
✔ source secret up to date
✗ sync path configuration ("./clusters/staging") would overwrite path ("./clusters/my-cluster") of existing Kustomization

qq: https://github.com/fluxcd/flux2-kustomize-helm-example/blob/main/apps/staging/kustomization.yaml#L3

apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: podinfo

Is `namespace: podinfo` here necessary? I ask because our kustomization.yaml will cover many namespaces.

Thanks

-cl
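The top-level `namespace` field in a Kustomize `kustomization.yaml` sets the namespace on every namespaced resource it builds, overriding whatever `metadata.namespace` the individual files declare. If one file has to cover several namespaces, a sketch under that assumption is to drop the top-level field and point at per-app folders, each with its own `kustomization.yaml` that sets its own namespace (folder names hypothetical):

```yaml
# Parent kustomization.yaml spanning several namespaces (folders hypothetical).
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
# No top-level `namespace:` here; it would force every resource below
# into a single namespace.
resources:
  - podinfo      # its kustomization.yaml sets `namespace: podinfo`
  - monitoring   # its kustomization.yaml sets `namespace: monitoring`
```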

Hey,

First of all, thank you very much for this great example. It works really well and, except for some of the podinfo values not being documented very well here, it's easy to follow.

I think it could be extended a little, because in practice I would rarely (actually never) expose my service with a custom proxy command.

How would I point the installed ingress to my service and make it handle traffic from the internet (or within my cluster's network)?
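The usual way to route traffic through the installed controller is an `Ingress` resource that points at the service. The sketch below reuses the `podinfo` service, port `9898`, and host `podinfo.development` that appear in the port-forward example earlier on this page; `ingressClassName: nginx` is an assumption and must match the IngressClass your controller registers. For real traffic, DNS for the host has to resolve to the controller's LoadBalancer (or NodePort) address.

```yaml
# Sketch: expose podinfo through the nginx ingress controller.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: podinfo
  namespace: podinfo
spec:
  ingressClassName: nginx          # assumption: must match your IngressClass
  rules:
    - host: podinfo.development    # replace with a real DNS name
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: podinfo
                port:
                  number: 9898
```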

Hi, I didn't know if this was the correct place to ask, but I'll try here :)

I'm currently trying to get the helm-controller on our cluster to deploy a release with a values.yaml file. I discovered this repo and tried following the "redis" example:

https://github.com/fluxcd/flux2-kustomize-helm-example/tree/main/infrastructure/redis

I have a folder with 6 files (I can upload them in a zip file if that's needed):

kustomization.yaml:

apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
namespace: logging
resources:
  - humio-token.yaml
  - namespace.yaml
  - release.yaml
configMapGenerator:
  - name: fluentbit-values
    files:
      - values.yaml=values.yaml
configurations:
  - kustomizeconfig.yaml

kustomizeconfig.yaml:

# Kustomize config for enabling HelmRelease values from
# ConfigMaps and Secrets generated by Kustomize
nameReference:
  - kind: ConfigMap
    version: v1
    fieldSpecs:
      - path: spec/valuesFrom/name
        kind: HelmRelease

namespace.yaml:

apiVersion: v1
kind: Namespace
metadata:
  name: logging
  labels:
    kubernetes.io/metadata.name: logging

release.yaml:

apiVersion: source.toolkit.fluxcd.io/v1beta1
kind: HelmRepository
metadata:
  name: humio-repository
  namespace: logging
spec:
  interval: 30m
  url: https://humio.github.io/humio-helm-charts
---
apiVersion: helm.toolkit.fluxcd.io/v2beta1
kind: HelmRelease
metadata:
  name: humio-fluentbit
  namespace: logging
spec:
  releaseName: humio-fluentbit
  chart:
    spec:
      chart: humio-helm-charts
      version: 0.8.29
      sourceRef:
        kind: HelmRepository
        name: humio-repository
        namespace: logging
  interval: 10m0s
  install:
    remediation:
      retries: 3
  valuesFrom:
    - kind: ConfigMap
      name: fluentbit-values

values.yaml:

humio-fluentbit:
  enabled: true
  humioHostname: cloud.humio.com
  es:
    tls: true
  tokenSecretName: humio-token
  inputConfig: |-
    [INPUT]
        Name tail
        Path /var/log/containers/*.log
        Parser cri
        Tag kube.*
        Refresh_Interval 5
        Mem_Buf_Limit 5MB
        Skip_Long_Lines On
  parserConfig: |-
    [PARSER]
        Name cri
        Format regex
        Regex ^(?<time>[^ ]+) (?<stream>stdout|stderr) (?<logtag>[^ ]*) [^{]*(?<message>.*)$
        Time_Key time
        Time_Format %Y-%m-%dT%H:%M:%S.%L%z

And a humio-token.yaml containing a Secret with the access token.

But the helm-controller keeps throwing an error:

"missing key 'values.yaml' in ConfigMap 'logging/fluentbit-values-7fffgftbhb'"

This puzzles me, because when I describe the generated ConfigMap as YAML I can see a Binary Data entry containing values.yaml.

Is Binary Data not supported by the Flux v2 helm-controller? Or have I missed something somewhere?

/Henrik
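The error message suggests that helm-controller looks the key up in the ConfigMap's `data` field, in which case an entry stored under `binaryData` would not be found. Kustomize's `configMapGenerator` stores a file under `binaryData` when its content is not valid UTF-8, so checking how `values.yaml` is encoded (for example, whether it was saved as UTF-16) may resolve it. As an alternative sketch that avoids the generated ConfigMap altogether, the values can be inlined in the HelmRelease. This is abbreviated: the remaining keys from `values.yaml` (`tokenSecretName`, `inputConfig`, `parserConfig`) would be copied under `values` the same way.

```yaml
# Sketch: same HelmRelease as above, with inline values instead of valuesFrom.
apiVersion: helm.toolkit.fluxcd.io/v2beta1
kind: HelmRelease
metadata:
  name: humio-fluentbit
  namespace: logging
spec:
  releaseName: humio-fluentbit
  interval: 10m0s
  chart:
    spec:
      chart: humio-helm-charts
      version: 0.8.29
      sourceRef:
        kind: HelmRepository
        name: humio-repository
        namespace: logging
  install:
    remediation:
      retries: 3
  # Inline values; structure mirrors values.yaml (abbreviated).
  values:
    humio-fluentbit:
      enabled: true
      humioHostname: cloud.humio.com
      es:
        tls: true
```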

If you had more than a single app to deploy into a cluster, where would you put the manifests?

I've been scratching my head for a while with this and I don't see a clear approach using the current repo structure.
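With the layout this repo uses, one option (sketched under that assumption, with hypothetical app names) is to give each app its own folder under `apps/base/` and list all of them in each environment's overlay, so the single `apps` Flux Kustomization picks them all up:

```yaml
# apps/staging/kustomization.yaml (apps other than podinfo are hypothetical)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
# No top-level namespace, so each app keeps the namespace its own
# manifests declare.
resources:
  - ../base/podinfo
  - ../base/my-second-app   # hypothetical: its own namespace, HelmRelease, etc.
  - ../base/my-third-app    # hypothetical
```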

My understanding of the file is that it monitors for changes in the current repository (flux-system, defined during bootstrapping) and then applies the kustomizations in the root /infrastructure folder. Is that correct?

Secondly, isn't this task already handled by the gotk-sync.yaml generated during bootstrapping?

Thanks for such a well structured example. It's been very helpful in learning flux.
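A sketch of how the pieces usually chain together, assuming this repo's layout with `clusters/staging` as the bootstrap path and that the file in question is a Flux Kustomization such as `clusters/staging/infrastructure.yaml`: `flux bootstrap` writes `gotk-sync.yaml`, whose `flux-system` Kustomization applies everything under `./clusters/staging`. That includes a second Flux Kustomization pointing at `./infrastructure`, so gotk-sync only reconciles the cluster folder, and the nested object reconciles the infrastructure folder. Field values are illustrative.

```yaml
# clusters/staging/flux-system/gotk-sync.yaml (generated by bootstrap;
# abbreviated, the generated file also defines the GitRepository)
apiVersion: kustomize.toolkit.fluxcd.io/v1  # v1beta2 on Flux releases before 2.0
kind: Kustomization
metadata:
  name: flux-system
  namespace: flux-system
spec:
  interval: 10m0s
  path: ./clusters/staging      # the --path given to flux bootstrap
  prune: true
  sourceRef:
    kind: GitRepository
    name: flux-system
---
# clusters/staging/infrastructure.yaml (applied by flux-system above)
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: infrastructure
  namespace: flux-system
spec:
  interval: 10m0s
  path: ./infrastructure        # illustrative
  prune: true
  sourceRef:
    kind: GitRepository
    name: flux-system           # same Git source, different path
```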

Hello

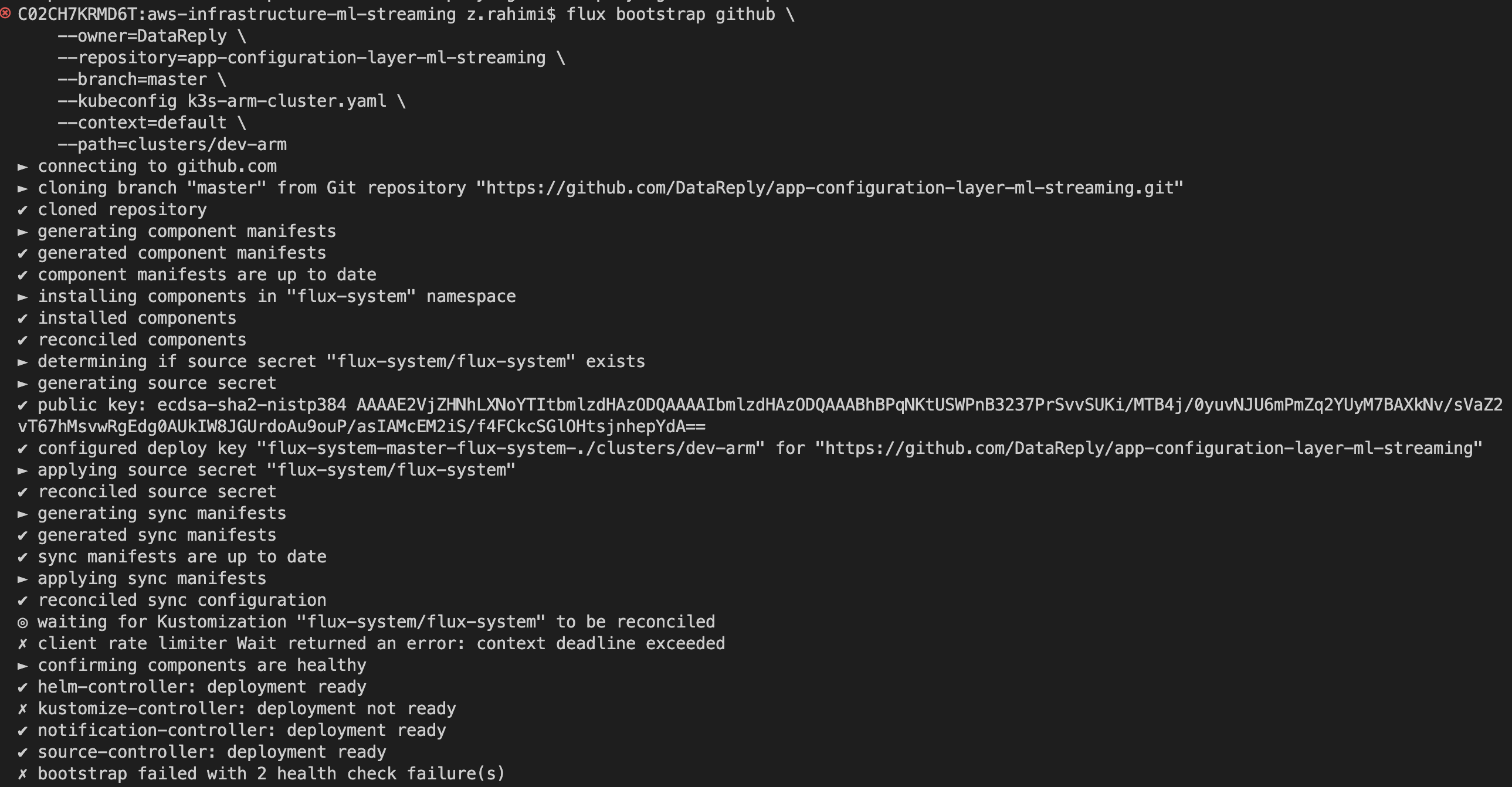

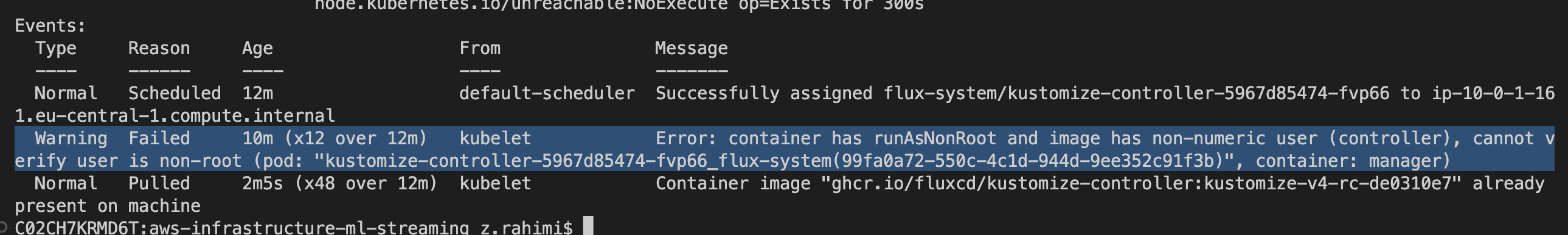

We are trying to bootstrap Flux CD with our Git repository, and for some reason the bootstrap fails on 2 health checks. Looking at the pods deployed in the flux-system namespace, we see that the `kustomize-controller` goes into a crash loop (CrashLoopBackOff). Also, when Flux tries to deploy the Strimzi operator, it shows the following error:

{"level":"error","ts":"2023-03-28T17:19:50.126Z","msg":"Reconciliation failed after 612.402757ms, next try in 10m0s","controller":"kustomization","controllerGroup":"kustomize.toolkit.fluxcd.io","controllerKind":"Kustomization","Kustomization":{"name":"apps","namespace":"flux-system"},"namespace":"flux-system","name":"apps","reconcileID":"208d153b-ee19-469e-bdca-281ace1e641c","revision":"master@sha1:99aea66a26cf45820aa3414f081085675d6ed8d3","error":"Kafka/strimzi/kafka dry-run failed, error: no matches for kind \"Kafka\" in version \"kafka.strimzi.io/v1beta2\"\nNamespace/seldon-system created\nNamespace/strimzi created\n"}

Flux version: 0.41.2

Cluster details: k3s cluster with ARM64 architecture
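The dry-run error means the `Kafka` kind was not yet registered when the `apps` Kustomization was applied; the `Namespace/strimzi created` line in the same output hints that the Strimzi operator, which installs that CRD, is applied in the same pass. A common Flux pattern, sketched here with hypothetical names and paths, is to install the operator in a separate Kustomization and make `apps` depend on it, with `wait: true` so the dependency only counts as ready once its resources are healthy. On Flux 0.41, as reported here, use `kustomize.toolkit.fluxcd.io/v1beta2`; the fields shown are the same.

```yaml
# Sketch: install the Strimzi operator (and its CRDs) before Kafka resources.
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: strimzi-operator           # hypothetical
  namespace: flux-system
spec:
  interval: 10m
  path: ./infrastructure/strimzi   # hypothetical path to the operator manifests
  prune: true
  wait: true                       # ready only once applied resources are healthy
  sourceRef:
    kind: GitRepository
    name: flux-system
---
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: apps
  namespace: flux-system
spec:
  dependsOn:
    - name: strimzi-operator       # apply Kafka objects only after the CRD exists
  interval: 10m
  path: ./apps/production          # hypothetical
  prune: true
  sourceRef:
    kind: GitRepository
    name: flux-system
```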

