Please use the outreach email for media inquiries, sponsorship, or other miscellaneous contact.

Do not email us troubleshooting requests, feature requests, or bug reports; please direct those to GitHub Issues or Discord.

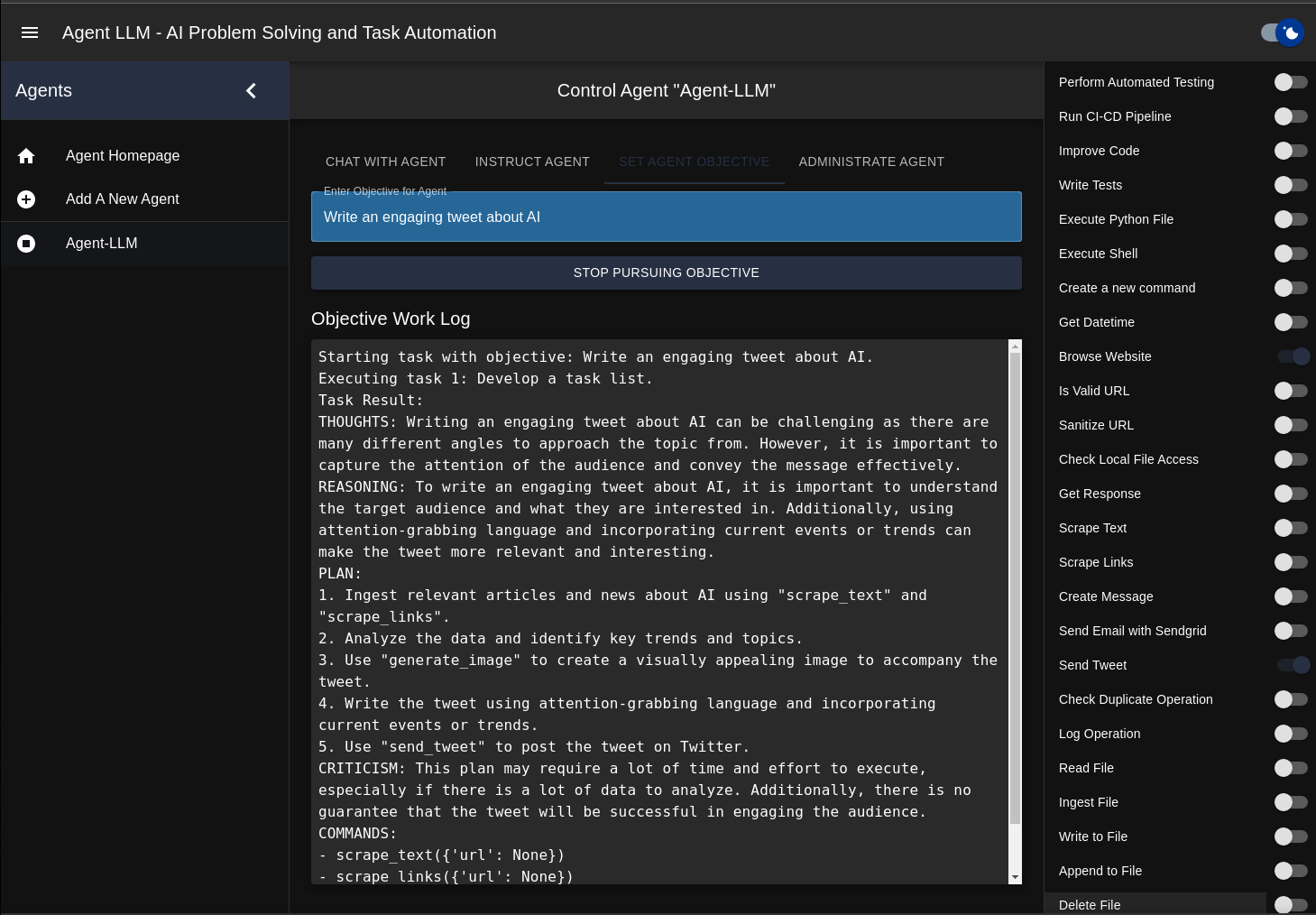

Agent-LLM is an Artificial Intelligence Automation Platform designed to power efficient AI instruction management across multiple providers. Our agents are equipped with adaptive memory, and this versatile solution offers a powerful plugin system that supports a wide range of commands, including web browsing. With growing support for numerous AI providers and models, Agent-LLM is constantly evolving to empower diverse applications.

You're welcome to disregard this message, but if you do and the AI decides that the best course of action for its task is to build a command to format your entire computer, that is on you. Understand that the agent is given full, unrestricted terminal access by design and that we have no intention of building any safeguards. This project intends to stay lightweight and versatile for the best possible research outcomes.

Please note that using some AI providers (such as OpenAI's GPT-4 API) can be expensive! Monitor your usage carefully to avoid incurring unexpected costs. We're NOT responsible for your usage under any circumstance.

This project is under active development and may still have issues. We appreciate your understanding and patience. If you encounter any problems, please first check the open issues. If your issue is not listed, kindly create a new issue detailing the error or problem you experienced. Thank you for your support!

- Agent-LLM (Large Language Model)

- ⚠️ Run this in Docker or a Virtual Machine!

- ⚠️ Monitor Your Usage!

- ⚠️ Under Development!

- Table of Contents 📖

- Media Coverage ⏯️

- Key Features 🗝️

- Web Application Features

- Quick Start

- Configuration

- API Endpoints

- Extending Functionality

- Contributing

- Donations and Sponsorships

- Our Team 🧑‍💻

- Acknowledgments

- History

- From World of AI on YouTube: Agent LLM: AI Automation Bot for Managing and Implementing AI Through Applications

- Adaptive long-term and short-term memory management

- Versatile plugin system with extensible commands for various AI models

- Wide compatibility with multiple AI providers, including:

  - OpenAI GPT-3.5, GPT-4

  - Oobabooga Text Generation Web UI

  - Kobold

  - llama.cpp

  - FastChat

  - Google Bard

  - And More!

- Web browsing and command execution capabilities

- Code evaluation support

- Seamless Docker deployment

- Integration with Hugging Face for audio-to-text conversion

- Interoperability with platforms like Twitter, GitHub, Google, DALL-E, and more

- Text-to-speech options featuring Brian TTS, Mac OS TTS, and ElevenLabs

- Continuously expanding support for new AI providers and services

The frontend web application of Agent-LLM provides an intuitive and interactive user interface for users to:

- Manage agents: View the list of available agents, add new agents, delete agents, and switch between agents.

- Set objectives: Input objectives for the selected agent to accomplish.

- Start tasks: Initiate the task manager to execute tasks based on the set objective.

- Instruct agents: Interact with agents by sending instructions and receiving responses in a chat-like interface.

- Available commands: View the list of available commands and click on a command to insert it into the objective or instruction input boxes.

- Dark mode: Toggle between light and dark themes for the frontend.

The frontend is built using NextJS and Material-UI and communicates with the backend through API endpoints.

- Obtain an OpenAI API key from OpenAI and add it to your .env file.

- Set the OPENAI_API_KEY in your .env file using the provided .env.example as a template.

wget https://raw.githubusercontent.com/Josh-XT/Agent-LLM/main/docker-compose.yml

wget https://raw.githubusercontent.com/Josh-XT/Agent-LLM/main/.env.example

mv .env.example .env

- Run the following Docker command in the folder with your .env file:

docker compose up -d

- Access the web interface at http://localhost

If the command above does not work, run Docker Compose with the docker-compose-mac.yml file to build instead:

docker compose -f docker-compose-mac.yml up -d

We are constantly expanding our AI provider support. Take a look at our Jupyter Notebooks for quick starts with these providers:

Reminder: For more detailed setup and configuration instructions, refer to the sections below.

Agent-LLM utilizes a .env configuration file to store AI language model settings, API keys, and other options. Use the supplied .env.example as a template to create your personalized .env file. Configuration settings include:

- INSTANCE CONFIG: Set the agent name, objective, and initial task.

- AI_PROVIDER: Choose between OpenAI, llama.cpp, or Oobabooga for your AI provider.

- AI_PROVIDER_URI: Set the URI for custom AI providers such as Oobabooga Text Generation Web UI (default is http://127.0.0.1:7860).

- MODEL_PATH: Set the path to the AI model if using llama.cpp or other custom providers.

- COMMANDS_ENABLED: Enable or disable command extensions.

- MEMORY SETTINGS: Configure short-term and long-term memory settings.

- AI_MODEL: Specify the AI model to be used (e.g., gpt-3.5-turbo, gpt-4, text-davinci-003, Vicuna, etc.).

- AI_TEMPERATURE: Set the AI temperature (leave default if unsure).

- MAX_TOKENS: Set the maximum number of tokens for AI responses (default is 2000).

- WORKING_DIRECTORY: Set the agent's working directory.

- EXTENSIONS_SETTINGS: Configure settings for OpenAI, Hugging Face, Selenium, Twitter, and GitHub.

- VOICE_OPTIONS: Choose between Brian TTS, Mac OS TTS, or ElevenLabs for text-to-speech.

For a detailed explanation of each setting, refer to the .env.example file provided in the repository.
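To make the settings above concrete, here is a minimal sketch of reading a .env-style file in Python. It is not Agent-LLM's actual loader: the `parse_env` helper and the example values (including the `openai` provider name and the `WORKSPACE` directory) are assumptions made for illustration. Only the two defaults are taken from the list above.

```python
# Minimal .env reader: an illustrative sketch, not Agent-LLM's own loader.
DEFAULTS = {
    "AI_PROVIDER_URI": "http://127.0.0.1:7860",  # default stated in the list above
    "MAX_TOKENS": "2000",  # default stated in the list above
}


def parse_env(text):
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    settings = dict(DEFAULTS)
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes around the value.
        settings[key.strip()] = value.strip().strip('"').strip("'")
    return settings


# Hypothetical file contents; the values are placeholders, not recommendations.
EXAMPLE_ENV = """
# Provider selection
AI_PROVIDER=openai
AI_MODEL=gpt-3.5-turbo
OPENAI_API_KEY=your-key-here
WORKING_DIRECTORY=WORKSPACE
"""

settings = parse_env(EXAMPLE_ENV)
max_tokens = int(settings["MAX_TOKENS"])  # falls back to the default of 2000
print(settings["AI_PROVIDER"], settings["AI_MODEL"], max_tokens)
```

Values stay as strings until they are needed, so numeric settings such as MAX_TOKENS are converted at the point of use. The authoritative list of keys and accepted values is the .env.example file.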

Agent-LLM provides several API endpoints for managing agents, prompts and chains.

To learn more about the API endpoints and their usage, visit the API documentation at

This documentation is hosted locally and the frontend must be running for these links to work.

To introduce new commands, generate a new Python file in the commands folder and define a class inheriting from the Commands class. Implement the desired functionality as methods within the class and incorporate them into the commands dictionary.
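The pattern described above might look roughly like the following sketch. The `Commands` base class here is a stand-in written for this example, since the real one lives in the repository and its exact shape is assumed. `TextTools` and its two commands are hypothetical.

```python
class Commands:
    """Stand-in for the project's Commands base class (assumed shape)."""

    def __init__(self):
        # Maps a human-readable command name to the method that runs it.
        self.commands = {}


class TextTools(Commands):
    """Hypothetical extension that adds two simple text commands."""

    def __init__(self):
        super().__init__()
        # Register each method under the name the agent would see.
        self.commands = {
            "Count Words": self.count_words,
            "Reverse Text": self.reverse_text,
        }

    def count_words(self, text: str) -> str:
        # Return a string so the result can be fed back to the model as text.
        return str(len(text.split()))

    def reverse_text(self, text: str) -> str:
        return text[::-1]


tools = TextTools()
result = tools.commands["Count Words"]("list files in the workspace")
print(result)
```

In the real project the file would live in the commands folder and import the existing `Commands` class rather than redefining it. Check an existing command file for the exact import path and any constructor arguments.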

To switch AI providers, adjust the AI_PROVIDER setting in the .env file. The application is compatible with OpenAI, Oobabooga Text Generation Web UI, and llama.cpp. To support additional providers, create a new Python file in the provider folder and implement the required functionality.
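As a rough illustration of what a provider file could contain, the sketch below defines a toy provider and selects it from an AI_PROVIDER value. Everything in it is hypothetical: the `EchoProvider` class, the `instruct` method name, the `PROVIDERS` registry, and `get_provider` are assumptions, because the interface a real provider must implement is defined by the repository, not by this example.

```python
class EchoProvider:
    """Hypothetical provider that returns the prompt unchanged (useful for testing)."""

    def __init__(self, max_tokens=2000):
        self.max_tokens = max_tokens

    def instruct(self, prompt: str) -> str:
        # A real provider would call its model or HTTP API here.
        return prompt


# Hypothetical registry keyed by the AI_PROVIDER value.
PROVIDERS = {"echo": EchoProvider}


def get_provider(settings):
    """Build the provider named by settings['AI_PROVIDER']."""
    name = settings.get("AI_PROVIDER", "").lower()
    if name not in PROVIDERS:
        raise ValueError(f"Unknown AI_PROVIDER: {name!r}")
    return PROVIDERS[name](max_tokens=int(settings.get("MAX_TOKENS", 2000)))


provider = get_provider({"AI_PROVIDER": "echo", "MAX_TOKENS": "512"})
reply = provider.instruct("Hello")
print(reply)
```

Keeping each provider behind one small class means the rest of the application only needs the provider's name from the configuration. Look at an existing file in the provider folder to see which methods are actually required.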

Coming Soon: Any providers defined in the .env file will be usable on different agents in the application and will not need to be manually switched.

We welcome contributions to Agent-LLM! If you're interested in contributing, please check out our contributions guide, the open issues, and the pull requests; submit a pull request; or suggest new features. To stay updated on the project's progress,

We appreciate any support for Agent-LLM's development, including donations, sponsorships, and any other kind of assistance. If you would like to support us, please contact us through our

We're always looking for ways to improve Agent-LLM and make it more useful for our users. Your support will help us continue to develop and enhance the application. Thank you for considering supporting us!

| Josh (@Josh-XT) | James (@JamesonRGrieve) |
|---|---|

This project was inspired by and is built using code from the following open-source repositories:

Please consider exploring and contributing to these projects if you like what we are doing.