rsparse is an R package for statistical learning primarily on sparse matrices - matrix factorizations, factorization machines, out-of-core regression. Many of the implemented algorithms are particularly useful for recommender systems and NLP.

We've paid attention to the implementation details: we try to avoid data copies, use multiple threads via OpenMP, and use SIMD where appropriate. The package can work on datasets with millions of rows and millions of columns.

- Follow the proximally-regularized leader (FTRL), which makes it possible to solve very large linear/logistic regression problems with an elastic-net penalty. The solver uses stochastic gradient descent with adaptive learning rates, so it can be used for online learning (it is not necessary to load all the data into RAM). See Ad Click Prediction: a View from the Trenches for more examples.

  - Only logistic regression is implemented at the moment.

  - The native format for matrices is CSR (`Matrix::RsparseMatrix`). However, the common R `Matrix::CsparseMatrix` (`dgCMatrix`) will be converted automatically.

- Factorization Machines: a supervised learning algorithm that learns second-order polynomial interactions in a factorized way. We provide a highly optimized, SIMD-accelerated implementation.
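As a rough illustration of the two supervised learners above, the sketch below fits `FTRL` in chunks (to mimic online learning) and `FactorizationMachine` on a toy XOR problem. The synthetic data, the seed, and all hyperparameter values are assumptions made up for this demo, not recommended settings; check `?FTRL` and `?FactorizationMachine` for the arguments supported by your installed version.

```r
library(Matrix)
library(rsparse)

set.seed(42)

# --- FTRL: logistic regression with an elastic-net penalty ---
# Synthetic sparse design matrix (dgCMatrix): 1000 rows, 200 columns, ~5% non-zeros.
n = 1000
p = 200
x = rsparsematrix(n, p, density = 0.05)
# Demo assumption: labels depend only on the first 10 columns.
w_true = c(rnorm(10), rep(0, p - 10))
y = as.numeric(as.vector(x %*% w_true) + rnorm(n, sd = 0.1) > 0)

ftrl = FTRL$new(learning_rate = 0.1, learning_rate_decay = 0.5,
                lambda = 0.01, l1_ratio = 1, dropout = 0)

# Online learning: feed the data chunk by chunk, as if it did not fit in RAM.
# Each chunk is converted to CSR, the native format of the solver.
chunks = split(seq_len(n), rep(1:4, each = n / 4))
for (idx in chunks) {
  x_chunk = as(x[idx, , drop = FALSE], "RsparseMatrix")
  ftrl$partial_fit(x_chunk, y[idx])
}
p_ftrl = ftrl$predict(as(x, "RsparseMatrix"))  # predicted probabilities

# --- Factorization Machines: XOR needs a second-order interaction ---
x_xor = as(rbind(c(0, 0), c(0, 1), c(1, 0), c(1, 1)), "RsparseMatrix")
y_xor = c(0, 1, 1, 0)
fm = FactorizationMachine$new(learning_rate_w = 10, rank = 2, lambda_w = 0,
                              lambda_v = 0, family = "binomial", intercept = TRUE)
fm$fit(x_xor, y_xor, n_iter = 100)
p_fm = fm$predict(x_xor)  # predicted probabilities for the 4 XOR inputs
```

A linear model cannot separate XOR, so comparing `p_fm` with `y_xor` is a quick sanity check that the factorized interaction term is doing the work.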

- Vanilla Maximum Margin Matrix Factorization (MMMF): the classic approach for "rating" prediction. See the `WRMF` class and the constructor option `feedback = "explicit"`. The original paper that introduced MMMF can be found here.

- Weighted Regularized Matrix Factorization (WRMF) from Collaborative Filtering for Implicit Feedback Datasets. See the `WRMF` class and the constructor option `feedback = "implicit"`. We provide 2 solvers:

  - Exact, based on Cholesky factorization

  - Approximate, based on a fixed number of Conjugate Gradient steps. See details in Applications of the Conjugate Gradient Method for Implicit Feedback Collaborative Filtering and Faster Implicit Matrix Factorization.

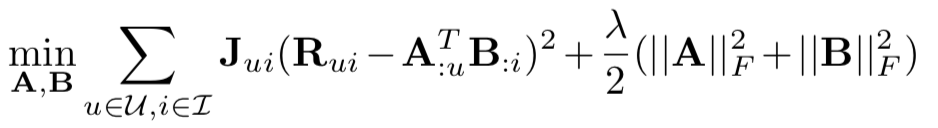

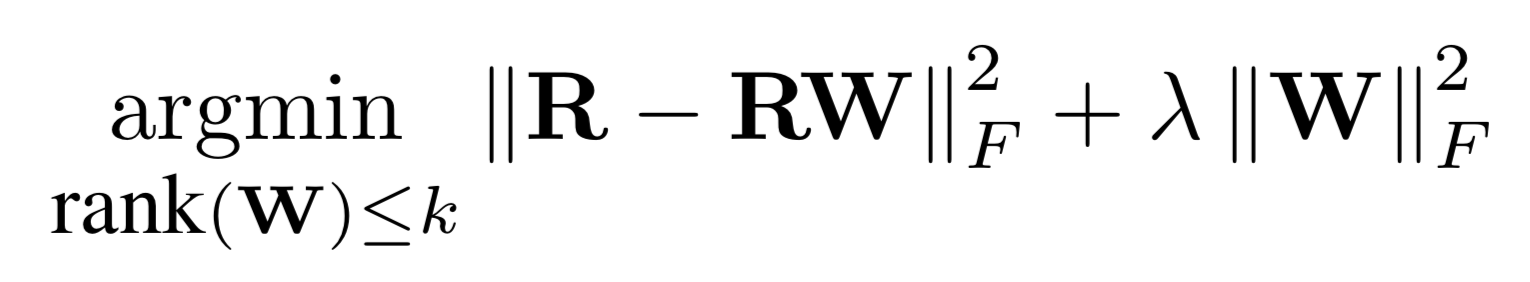
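A minimal sketch of the `WRMF` workflow described above, using the `movielens100k` dataset bundled with the package. The train/validation split, rank, regularization, and iteration count are arbitrary assumptions for illustration; the ratings are simply treated as implicit-feedback confidence values here.

```r
library(rsparse)

data("movielens100k")  # sparse user-item matrix shipped with rsparse
train = movielens100k[1:900, ]
cv = movielens100k[901:nrow(movielens100k), ]

# Implicit feedback with the approximate Conjugate Gradient solver.
# Passing solver = "cholesky" selects the exact solver instead.
model = WRMF$new(rank = 8, lambda = 0.1, feedback = "implicit",
                 solver = "conjugate_gradient", cg_steps = 3)

# User embeddings are returned; item embeddings are stored in `components`.
user_emb = model$fit_transform(train, n_iter = 5, convergence_tol = -1)
item_emb = model$components

# Top-10 items for held-out users, excluding items they already interacted with.
preds = model$predict(cv, k = 10, not_recommend = cv)
```

Switching to `feedback = "explicit"` in the constructor gives the rating-prediction variant with the same fit/predict calls.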

- Linear-Flow from Practical Linear Models for Large-Scale One-Class Collaborative Filtering. The algorithm looks for a factorized low-rank item-item similarity matrix (in some sense it is similar to SLIM).

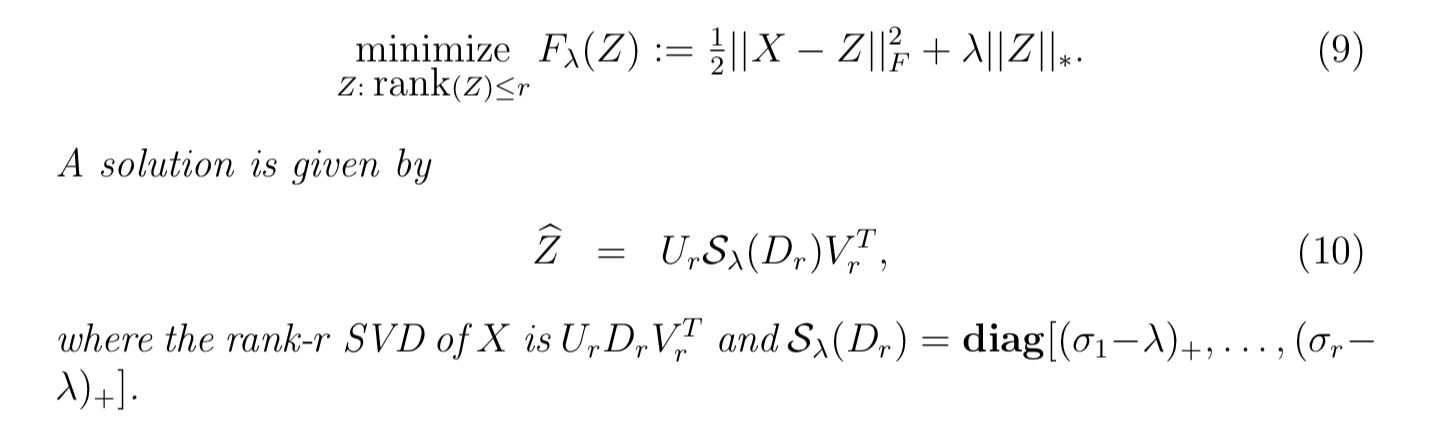

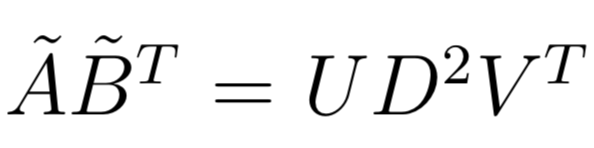

- Fast Truncated SVD and Truncated Soft-SVD via Alternating Least Squares, as described in Matrix Completion and Low-Rank SVD via Fast Alternating Least Squares. It works for both sparse and dense matrices, and on float matrices as well. For certain problems it may be even faster than the irlba package.

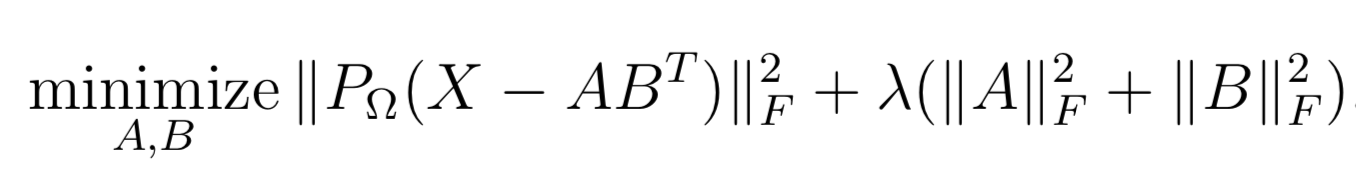
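To get a feel for the ALS-based truncated SVD, the sketch below compares the rank-`k` reconstruction from `soft_svd()` with the one obtained from base R's full `svd()`. The matrix slice, the rank, and the tolerance are assumptions picked for a quick demo.

```r
library(rsparse)

set.seed(42)
data("movielens100k")
k = 10
m = movielens100k[1:100, 1:200]

# Reference: dense full SVD from base R, truncated to the first k components.
svd_ref = svd(as.matrix(m))
m_ref = svd_ref$u[, 1:k] %*% diag(svd_ref$d[1:k]) %*% t(svd_ref$v[, 1:k])

# Truncated SVD via alternating least squares on the sparse matrix.
svd_als = soft_svd(m, rank = k, n_iter = 100, convergence_tol = 1e-6)
m_als = svd_als$u %*% diag(svd_als$d) %*% t(svd_als$v)

# Relative Frobenius-norm difference between the two rank-k reconstructions.
rel_diff = norm(m_ref - m_als, "F") / norm(m_ref, "F")
```

The result has the same `u`, `d`, `v` layout as base `svd()`, so it can be dropped into code that expects that structure. `soft_impute()` takes the same arguments, with `lambda` controlling the soft-thresholding of singular values.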

- Soft-Impute via fast Alternating Least Squares as described in Matrix Completion and Low-Rank SVD via Fast Alternating Least Squares.

- GloVe as described in GloVe: Global Vectors for Word Representation.

  - It is usually used to train word embeddings, but it is also very useful for recommender systems.

- Matrix scaling as described in EigenRec: Generalizing PureSVD for Effective and Efficient Top-N Recommendations

Note: the optimized matrix operations which rsparse used to offer have been moved to a separate package.

Most of the algorithms benefit from OpenMP, and many of them can use high-performance implementations of BLAS. If you want to get the most out of this package, please read the section below carefully.

It is recommended to:

- Use high-performance BLAS (such as OpenBLAS, MKL, Apple Accelerate).

- Add proper compiler optimizations in your `~/.R/Makevars`. For example, on recent processors (with AVX support) and a compiler with OpenMP support, the following lines could be a good option:

CXX11FLAGS += -O3 -march=native -fopenmp

CXXFLAGS += -O3 -march=native -fopenmp

If you are on a Mac, follow the instructions at https://mac.r-project.org/openmp/. After configuring clang, additionally put a `PKG_CXXFLAGS += -DARMA_USE_OPENMP` line in your `~/.R/Makevars`. After that, install rsparse in the usual way.

We also recommend using vecLib, Apple's implementation of BLAS:

ln -sf /System/Library/Frameworks/Accelerate.framework/Frameworks/vecLib.framework/Versions/Current/libBLAS.dylib /Library/Frameworks/R.framework/Resources/lib/libRblas.dylib

On Linux, it's enough to just create this file (`~/.R/Makevars`) if it doesn't exist.

If using OpenBLAS, it is highly recommended to use the openmp variant rather than the pthreads variant. On Linux, it is usually available as a separate package in typical distribution package managers (e.g. for Debian, it can be obtained by installing libopenblas-openmp-dev, which is not the default version), and if there are multiple BLASes installed, can be set as the default through the Debian alternatives system - which can also be used for MKL.

By default, R for Windows comes with unoptimized BLAS and LAPACK libraries, and rsparse will prefer using Armadillo's replacements instead. In order to use BLAS, install rsparse from source (not from CRAN), removing the option -DARMA_DONT_USE_BLAS from src/Makevars.win and ideally adding -march=native (under PKG_CXXFLAGS). See this tutorial for instructions on getting R for Windows to use OpenBLAS. Alternatively, Microsoft's MRAN distribution for Windows comes with MKL.
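After any of the setups above, it can be worth confirming which BLAS and LAPACK libraries the running R session is actually linked against. A minimal check using only base R functions (the variable names are just for illustration):

```r
# Path of the BLAS shared library used by this R session
# (may be an empty string on platforms where it cannot be determined).
blas_path = extSoftVersion()[["BLAS"]]

# Path of the LAPACK shared library used by this R session.
lapack_path = La_library()

print(blas_path)
print(lapack_path)
```

If the printed paths still point at R's bundled reference libraries, the optimized BLAS has not been picked up. `sessionInfo()` reports the same information in its header.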

Note that the syntax in these posts/slides is not up to date, since the package was under active development.

- Slides from DataFest Tbilisi (2017-11-16)

- Introduction to matrix factorization with Weighted-ALS algorithm - collaborative filtering for implicit feedback datasets.

- Music recommendations using LastFM-360K dataset

- evaluation metrics for ranking

- setting up proper cross-validation

- possible issues with nested parallelism and thread contention

- making recommendations for new users

- complementary item-to-item recommendations

- Benchmark against other good implementations

Here is an example of `rsparse::WRMF` on the lastfm360k dataset in comparison with other good implementations:

We follow mlapi conventions.

Don't forget to add `-DARMA_NO_DEBUG` to `PKG_CXXFLAGS` to skip bounds checks (this has a significant impact on the NNLS solver):

PKG_CXXFLAGS = ... -DARMA_NO_DEBUG

Generate configure:

autoconf configure.ac > configure && chmod +x configure