Neural Network JavaScript library for Coding Train tutorials

Here are some demos running directly in the browser:

- XOR problem, Coding Challenge on YouTube

- Handwritten digit recognition

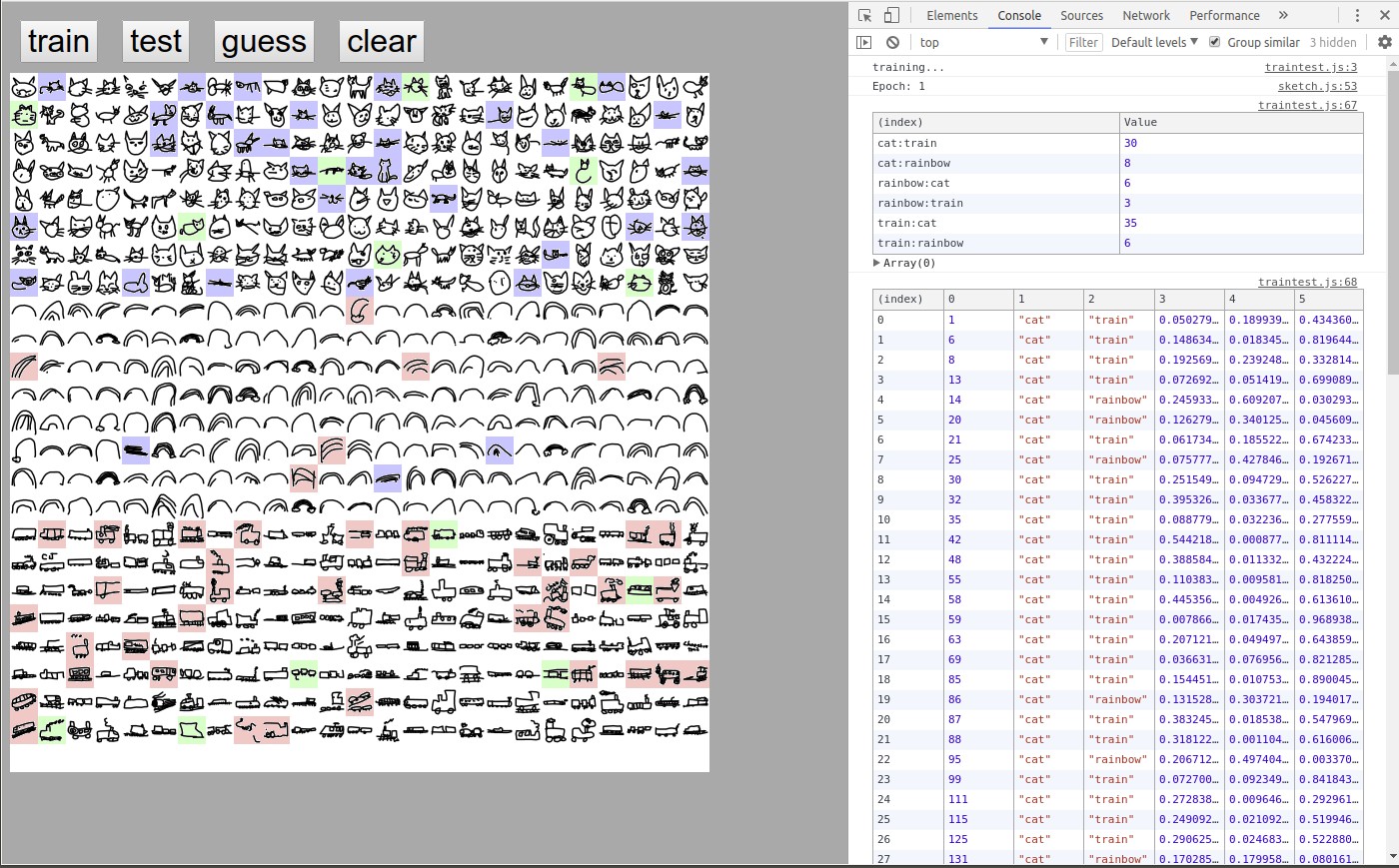

- Doodle classifier, Coding Challenge on YouTube

To-do list:

- Redo gradient descent video about:
  - Delta weight formulas, connect to "mathematics of gradient" video
  - Implement gradient descent in library / with code
- XOR coding challenge live example
- MNIST coding challenge live example
  - Redo this challenge
  - Cover softmax activation, cross-entropy
  - Graph cost function?
  - Only use testing data
- Support for saving / restoring network (see #50)
- Support for different activation functions (see #45, #62)
- Support for multiple hidden layers (see #61)
- Support for neuro-evolution
  - Play Flappy Bird (many players at once)
  - Play Pong (many game simulations at once)
  - Steering sensors (à la Jabril's forrest project!)
- Combine with ml5 / deeplearnjs
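The "delta weight formulas" item refers to the gradient-descent updates for a network with one hidden layer. As a reference sketch, assuming sigmoid activations \(\sigma\), a squared-error loss, learning rate \(\eta\), input \(\mathbf{x}\) and target \(T\) (the symbols are chosen here, not taken from the library), the standard updates, which are added to the current weights and biases, are:

$$
\begin{aligned}
H &= \sigma(W_{ih}\,\mathbf{x} + \mathbf{b}_h), \qquad O = \sigma(W_{ho}\,H + \mathbf{b}_o) \\
\delta_o &= (T - O) \odot O \odot (1 - O) \\
\delta_h &= \left(W_{ho}^{\top}\,\delta_o\right) \odot H \odot (1 - H) \\
\Delta W_{ho} &= \eta\,\delta_o\,H^{\top}, \qquad \Delta \mathbf{b}_o = \eta\,\delta_o \\
\Delta W_{ih} &= \eta\,\delta_h\,\mathbf{x}^{\top}, \qquad \Delta \mathbf{b}_h = \eta\,\delta_h
\end{aligned}
$$

Here \(\odot\) is element-wise multiplication, and \(O \odot (1 - O)\) is the sigmoid derivative written in terms of the sigmoid's own output.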

If you're looking for the original source code to match the videos, visit this repo.

You need to have the following installed:

- Node.js

- npm

Then install the Node.js dependencies with the following command:

`npm install`

This project doesn't require any additional installation steps.

- `NeuralNetwork` - The neural network class
  - `predict(input_array)` - Returns the output of a neural network
  - `train(input_array, target_array)` - Trains a neural network
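To make the two methods concrete, here is a minimal sketch of what a class with this interface could look like, followed by a training loop for the XOR demo listed above. It is a hypothetical implementation, not the library's source: the constructor arguments (input, hidden and output node counts, plus a `learningRate` defaulting to 0.1), the `feedforward` method and the `randomMatrix`/`matVec` helpers are all assumptions, and it uses plain arrays instead of whatever matrix code the library itself uses.

```javascript
// Hypothetical sketch of the NeuralNetwork API (one hidden layer, sigmoid
// activations, gradient descent on squared error). Not the library's source.

const sigmoid = (x) => 1 / (1 + Math.exp(-x));
// Sigmoid derivative written in terms of y = sigmoid(x).
const dsigmoid = (y) => y * (1 - y);

// Hypothetical helpers: random rows x cols matrix (array of rows) in [-1, 1),
// and a matrix-vector product.
const randomMatrix = (rows, cols) =>
  Array.from({ length: rows }, () => Array.from({ length: cols }, () => Math.random() * 2 - 1));
const matVec = (m, v) => m.map((row) => row.reduce((sum, w, j) => sum + w * v[j], 0));

class NeuralNetwork {
  // Assumed constructor: number of input, hidden and output nodes.
  constructor(inputNodes, hiddenNodes, outputNodes, learningRate = 0.1) {
    this.weightsIH = randomMatrix(hiddenNodes, inputNodes);
    this.weightsHO = randomMatrix(outputNodes, hiddenNodes);
    this.biasH = randomMatrix(hiddenNodes, 1).map((r) => r[0]);
    this.biasO = randomMatrix(outputNodes, 1).map((r) => r[0]);
    this.learningRate = learningRate;
  }

  // Forward pass through both layers, returning each layer's activations.
  feedforward(inputs) {
    const hidden = matVec(this.weightsIH, inputs).map((z, j) => sigmoid(z + this.biasH[j]));
    const outputs = matVec(this.weightsHO, hidden).map((z, k) => sigmoid(z + this.biasO[k]));
    return { hidden, outputs };
  }

  // Returns the output of the network for one input array.
  predict(input_array) {
    return this.feedforward(input_array).outputs;
  }

  // One gradient-descent step on a single (input, target) pair.
  train(input_array, target_array) {
    const { hidden, outputs } = this.feedforward(input_array);
    const lr = this.learningRate;

    // Output deltas: (target - output) * sigmoid'(output).
    const outputDeltas = outputs.map((o, k) => (target_array[k] - o) * dsigmoid(o));

    // Hidden deltas: (transpose(weightsHO) * outputDeltas) * sigmoid'(hidden),
    // computed before weightsHO is modified.
    const hiddenDeltas = hidden.map((h, j) =>
      this.weightsHO.reduce((sum, row, k) => sum + row[j] * outputDeltas[k], 0) * dsigmoid(h));

    // Delta weights = lr * delta (column) x transposed layer input (row);
    // biases move by lr * delta.
    for (let k = 0; k < this.weightsHO.length; k++) {
      for (let j = 0; j < hidden.length; j++) this.weightsHO[k][j] += lr * outputDeltas[k] * hidden[j];
      this.biasO[k] += lr * outputDeltas[k];
    }
    for (let j = 0; j < this.weightsIH.length; j++) {
      for (let i = 0; i < input_array.length; i++) this.weightsIH[j][i] += lr * hiddenDeltas[j] * input_array[i];
      this.biasH[j] += lr * hiddenDeltas[j];
    }
  }
}

// Usage: learn XOR from its four examples, picking one at random each step.
const trainingData = [
  { inputs: [0, 0], targets: [0] },
  { inputs: [0, 1], targets: [1] },
  { inputs: [1, 0], targets: [1] },
  { inputs: [1, 1], targets: [0] },
];
const nn = new NeuralNetwork(2, 4, 1);
for (let step = 0; step < 50000; step++) {
  const sample = trainingData[Math.floor(Math.random() * trainingData.length)];
  nn.train(sample.inputs, sample.targets);
}
for (const { inputs } of trainingData) console.log(inputs, nn.predict(inputs));
```

The sketch updates the weights after every single example (stochastic gradient descent), which keeps `train` matching the one-input, one-target signature listed above. After training, the printed outputs should approach the XOR targets, although an unlucky random initialization can occasionally stall in a local minimum.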

You can check the tests either through the automatically running CircleCI tests or by running `npm test` on your own machine once you have completed the prerequisites above.

Please send pull requests. Each one must first pass the automated tests; it will then be reviewed and either accepted or rejected.

Here are some libraries with the same or similar functionality to this one built by the community:

- Java Neural Network Library by kim-marcel

- Library-less Java Neural Network by Fir3will

- Python Neural Network Library by Gabriel-Teston

- Python Neural Network Library by David Snyder

- JavaScript Multi-Layer Neural Network Library by Shekhar Tyagi

- F# Neural Network Library by jackroi

- TinyNeuralNetwork4Java by Anirudh Giri

- miniANN Neural Network Library JavaScript by Siddharth Maurya

- Convolutional Neural Network Library JavaScript by Yubraj Sharma

Feel free to add your own libraries.

We use SemVer for versioning. For the versions available, see the tags on this repository.

- shiffman - Initial work - shiffman

See also the list of contributors who participated in this project.

This project is licensed under the terms of the MIT license, see LICENSE.