InfluxDB Cluster - An Open-Source Distributed Time Series Database, Open Source Alternative to InfluxDB Enterprise

InfluxDB Cluster is an open source time series database with no external dependencies. It's useful for recording metrics, events, and performing analytics.

InfluxDB Cluster is inspired by InfluxDB Enterprise, InfluxDB v1.8.10 and InfluxDB v0.11.1, aiming to replace InfluxDB Enterprise.

InfluxDB Cluster is easy to maintain, and can be updated in real time with upstream InfluxDB 1.x.

- Built-in HTTP API so you don't have to write any server side code to get up and running.

- Data can be tagged, allowing very flexible querying.

- SQL-like query language.

- Clustering is supported out of the box, so that you can scale horizontally to handle your data. Clustering is currently in production state.

- Simple to install and manage, and fast to get data in and out.

- It aims to answer queries in real-time. That means every data point is indexed as it comes in and is immediately available in queries that should return in < 100ms.

Note: The clustering of InfluxDB Cluster is exactly the same as that of InfluxDB Enterprise.

Please see: Clustering in InfluxDB Enterprise

Architectural overview:

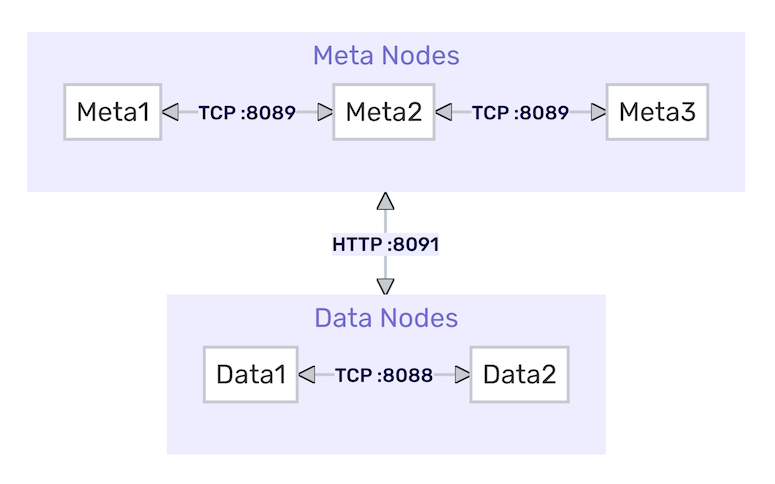

Network overview:

We recommend installing InfluxDB Cluster using one of the pre-built releases.

Complete the following steps to install an InfluxDB Cluster in your own environment:

Note: The installation of InfluxDB Cluster is exactly the same as that of InfluxDB Enterprise.

Download docker-compose.yml, then start 3 meta nodes and 2 data nodes by docker-compose:

docker-compose up -d

docker exec -it influxdb-meta-01 bash

influxd-ctl add-meta influxdb-meta-01:8091

influxd-ctl add-meta influxdb-meta-02:8091

influxd-ctl add-meta influxdb-meta-03:8091

influxd-ctl add-data influxdb-data-01:8088

influxd-ctl add-data influxdb-data-02:8088

influxd-ctl show

Stop and remove them when they are no longer in use:

docker-compose down -v

curl -XPOST "http://influxdb-data-01:8086/query" --data-urlencode "q=CREATE DATABASE mydb WITH REPLICATION 2"

curl -XPOST "http://influxdb-data-01:8086/write?db=mydb" \

-d 'cpu,host=server01,region=uswest load=42 1434055562000000000'

curl -XPOST "http://influxdb-data-02:8086/write?db=mydb&consistency=all" \

-d 'cpu,host=server02,region=uswest load=78 1434055562000000000'

curl -XPOST "http://influxdb-data-02:8086/write?db=mydb&consistency=quorum" \

-d 'cpu,host=server03,region=useast load=15.4 1434055562000000000'

Note:

consistency=[any,one,quorum,all]sets the write consistency for the point.consistencyisoneif you do not specify consistency. See the Insert some data / Write consistency for detailed descriptions of each consistency option.

curl -G "http://influxdb-data-02:8086/query?pretty=true" --data-urlencode "db=mydb" \

--data-urlencode "q=SELECT * FROM cpu WHERE host='server01' AND time < now() - 1d"

curl -G "http://influxdb-data-02:8086/query?pretty=true" --data-urlencode "db=mydb" \

--data-urlencode "q=SELECT mean(load) FROM cpu WHERE region='uswest'"

- View the wiki: English Document / 中文文档.

- Read more about the design goals and motivations of the project.

- Follow the getting started guide to learn the basics in just a few minutes.

- Learn more about clustering and glossary.

If you're feeling adventurous and want to contribute to InfluxDB Cluster, see our CONTRIBUTING.md for info on how to make feature requests, build from source, and run tests.

See LICENSE and DEPENDENCIES.md.