An easy-to-use library for skin tone classification.

It detects the face or skin area in the specified images and classifies the detected skin tones into the specified color categories. Finally, it generates a report of the detected faces (if any), dominant skin tones, and color categories.

Check out the Changelog for the latest updates.

If you find this project helpful, please consider giving it a star ⭐. It would be a great encouragement for me!

Table of Contents

- Video tutorials

- Installation

- HOW TO USE

- Quick Start

- Detailed Usage

- Use Cases

- 1. Process multiple images

- 2. Specify color categories

- 3. Specify category labels

- 4. Specify output folder

- 5. Store report images for debugging

- 6. Specify the types of the input image(s)

- 7. Convert the color images to black/white images

- 8. Tune parameters of face detection

- 9. Multiprocessing settings

- 10. Used as a library by importing into other projects

- Citation

- Contributing

- Disclaimer

Please visit the following video tutorials if you have no programming background or are unfamiliar with how to use Python and this library 💖

Please refer to this notebook for more information.

More videos are coming soon...

Tip

Since v1.2.3, we have made the GUI mode optional.

pip install skin-tone-classifier --upgrade

This is useful for users who want to use this library in non-GUI environments, e.g., servers.

pip install skin-tone-classifier[all] --upgrade

This is useful for users who are not familiar with the command line interface and want to use the GUI mode.

git clone git@github.com:ChenglongMa/SkinToneClassifier.git

cd SkinToneClassifier

pip install -e . --verbose

Tip

If you encounter the following problem:

ImportError: DLL load failed while importing _core: The specified module could not be found

Please download and install the Visual C++ Redistributable; the error should then go away.

Tip

You can combine the following documents, the video tutorials above, and the running examples to understand the usage of this library more intuitively.

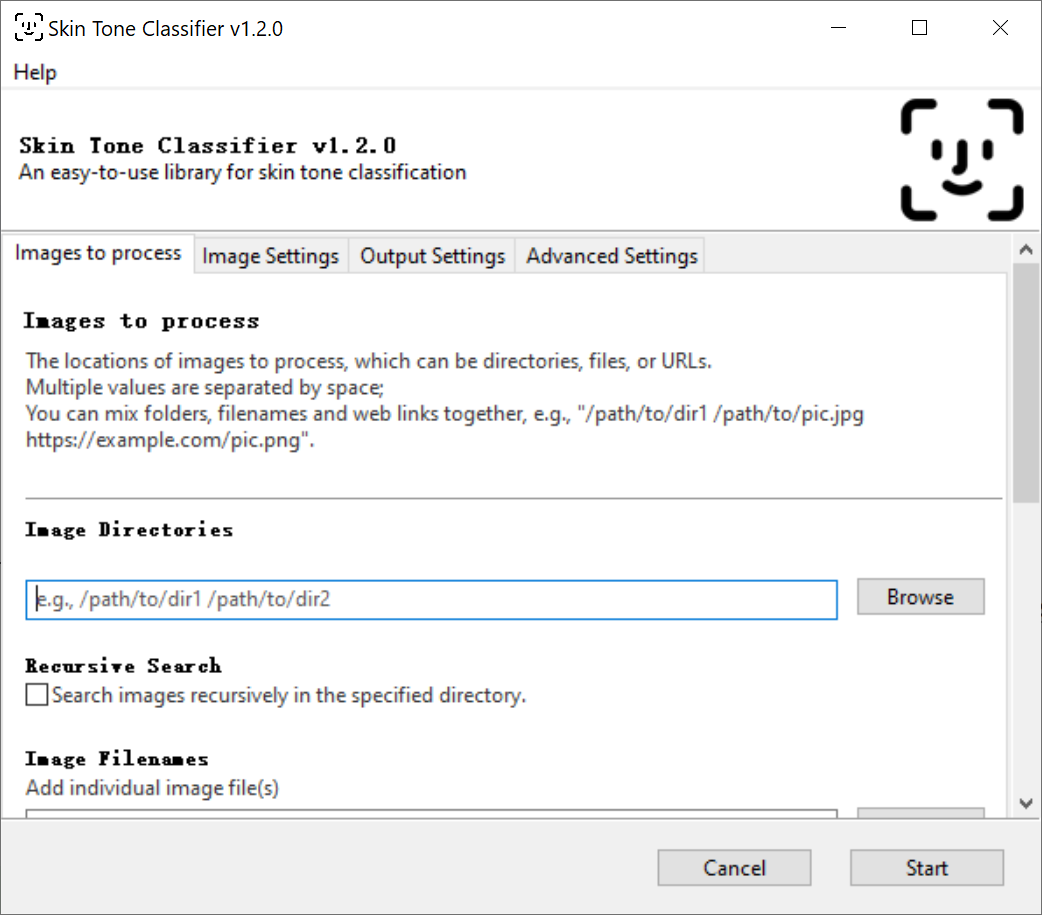

✨ Since v1.2.0, we have provided a GUI version of stone for users who are not familiar with the command line interface.

Instead of typing commands in the terminal, you can use the config GUI of stone to process the images.

Steps:

- Open a terminal that can run stone (e.g., PowerShell in Windows or Terminal in macOS).

- Type stone (without any parameters) or stone --gui and press Enter to open the GUI.

- Specify the parameters in each tab.

- Click the Start button to start processing the images.

Hopefully, this can make it easier for you to use stone 🍻!

Tip

- It is recommended to install v1.2.3+, which supports Python 3.9+.

  If you have installed v1.2.0, please upgrade to v1.2.3+ by running

  pip install skin-tone-classifier[all] --upgrade

- If you encounter the following problem:

  This program needs access to the screen. Please run with a Framework build of python, and only when you are logged in on the main display of your Mac.

  Please launch the GUI by running

  pythonw -m stone

  in the terminal.

To detect the skin tone in a portrait, e.g.,

Just run:

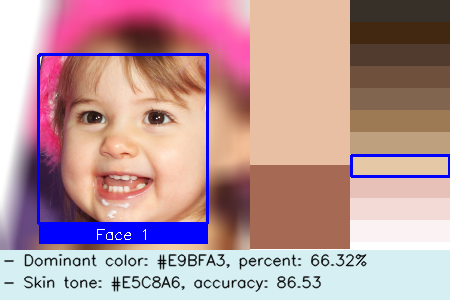

stone -i /path/to/demo.png --debug

Then, you can find the processed image in the ./debug/color/faces_1 folder, e.g.,

In this image, from left to right you can find the following information:

- The detected face with a label (Face 1), enclosed by a rectangle.

- The dominant colors.

  - The number of colors depends on the settings (default is 2), and their sizes depend on their proportions.

- The specified color palette, with the target label enclosed by a rectangle.

- A summary text at the bottom.

Furthermore, there will be a report file named result.csv which contains more detailed information, e.g.,

| file | image type | face id | dominant 1 | percent 1 | dominant 2 | percent 2 | skin tone | tone label | accuracy(0-100) |
|---|---|---|---|---|---|---|---|---|---|
| demo.png | color | 1 | #C99676 | 0.67 | #805341 | 0.33 | #9D7A54 | CF | 86.27 |

- file: the filename of the processed image.

  - NB: The filename pattern of the report image is <file>-<face id>.<extension>

- image type: the type of the processed image, i.e., color or bw (black/white).

- face id: the id of the detected face, which matches the reported image. NA means no face has been detected.

- dominant n: the n-th dominant color of the detected face.

- percent n: the percentage of the n-th dominant color, (0~1.0).

- skin tone: the skin tone category of the detected face.

- tone label: the label of the skin tone category of the detected face.

- accuracy: the accuracy of the skin tone category of the detected face, (0~100). The larger, the better.
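The report can also be read programmatically. The following is a minimal sketch, assuming result.csv is comma-separated and its header row uses exactly the column names shown in the table above; the helper name `summarize` is hypothetical and not part of the library.

```python
import csv
import io

# Sample content copied from the report table above; a real run would read
# the result.csv file written by stone instead.
SAMPLE_CSV = """\
file,image type,face id,dominant 1,percent 1,dominant 2,percent 2,skin tone,tone label,accuracy(0-100)
demo.png,color,1,#C99676,0.67,#805341,0.33,#9D7A54,CF,86.27
"""


def summarize(csv_file):
    """Yield (file, face id, tone label, accuracy) for each detected face."""
    for row in csv.DictReader(csv_file):
        # "NA" in the face id column means no face was detected.
        if row["face id"] == "NA":
            continue
        yield (row["file"], row["face id"], row["tone label"],
               float(row["accuracy(0-100)"]))


rows = list(summarize(io.StringIO(SAMPLE_CSV)))
print(rows)
```

To use it on a real report, pass an open file instead of the in-memory sample, e.g. `with open("result.csv", newline="") as f: rows = list(summarize(f))`.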

To see the usage and parameters, run:

stone -h (or --help)

Output in console:

usage: stone [-h] [-i IMAGE FILENAME [IMAGE FILENAME ...]] [-r] [-t IMAGE TYPE] [-p PALETTE [PALETTE ...]]

[-l LABELS [LABELS ...]] [-d] [-bw] [-o DIRECTORY] [--n_workers WORKERS] [--n_colors COLORS]

[--new_width WIDTH] [--scale SCALE] [--min_nbrs NEIGHBORS] [--min_size WIDTH [HEIGHT ...]]

[--threshold THRESHOLD] [-v]

Skin Tone Classifier

options:

-h, --help show this help message and exit

-i IMAGE FILENAME [IMAGE FILENAME ...], --images IMAGE FILENAME [IMAGE FILENAME ...]

Image filename(s) or URLs to process;

Supports multiple values separated by space, e.g., "a.jpg b.png";

Supports directory or file name(s), e.g., "./path/to/images/ a.jpg";

Supports URL(s), e.g., "https://example.com/images/pic.jpg" since v1.1.0+.

The app will search all images in current directory in default.

-r, --recursive Whether to search images recursively in the specified directory.

-t IMAGE TYPE, --image_type IMAGE TYPE

Specify whether the input image(s) is/are colored or black/white.

Valid choices are: "auto", "color" or "bw",

Defaults to "auto", which will be detected automatically.

-p PALETTE [PALETTE ...], --palette PALETTE [PALETTE ...]

Skin tone palette;

Supports RGB hex value leading by "#" or RGB values separated by comma(,),

E.g., "-p #373028 #422811" or "-p 255,255,255 100,100,100"

-l LABELS [LABELS ...], --labels LABELS [LABELS ...]

Skin tone labels; default values are the uppercase alphabet list leading by the image type ('C' for 'color'; 'B' for 'Black&White'), e.g., ['CA', 'CB', ..., 'CZ'] or ['BA', 'BB', ..., 'BZ'].

-d, --debug Whether to generate report images, used for debugging and verification.The report images will be saved in the './debug' directory.

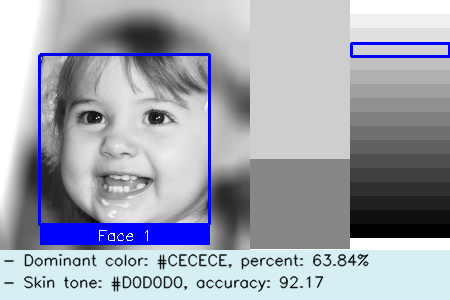

-bw, --black_white Whether to convert the input to black/white image(s).

If true, the app will use the black/white palette to classify the image.

-o DIRECTORY, --output DIRECTORY

The path of output file, defaults to current directory.

--n_workers WORKERS The number of workers to process the images, defaults to the number of CPUs in the system.

--n_colors COLORS CONFIG: the number of dominant colors to be extracted, defaults to 2.

--new_width WIDTH CONFIG: resize the images with the specified width. Negative value will be ignored, defaults to 250.

--scale SCALE CONFIG: how much the image size is reduced at each image scale, defaults to 1.1

--min_nbrs NEIGHBORS CONFIG: how many neighbors each candidate rectangle should have to retain it.

Higher value results in less detections but with higher quality, defaults to 5.

--min_size WIDTH [HEIGHT ...]

CONFIG: minimum possible face size. Faces smaller than that are ignored, defaults to "90 90".

--threshold THRESHOLD

CONFIG: what percentage of the skin area is required to identify the face, defaults to 0.15.

-v, --version Show the version number and exit.

1.1 Multiple filenames

stone -i (or --images) a.jpg b.png https://example.com/images/pic.jpg

1.2 Images in some folder(s)

stone -i ./path/to/images/

NB: Supported image formats: .jpg, .gif, .png, .jpeg, .webp, .tif.

By default (i.e., stone without the -i option), the app will search for images in the current folder.
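If you drive the command line tool from a script, it can help to build the argument list in one place. The sketch below only assembles options documented in the help output above (-i, -p, -l, -o, -d); the helper names `build_stone_command` and `run_stone` are hypothetical, and running the command assumes stone is installed and on your PATH.

```python
import shlex
import subprocess


def build_stone_command(images, palette=None, labels=None, output=None, debug=False):
    """Build an argument list for the stone CLI from the documented options."""
    cmd = ["stone", "-i", *images]
    if palette:
        cmd += ["-p", *palette]
    if labels:
        cmd += ["-l", *labels]
    if output:
        cmd += ["-o", output]
    if debug:
        cmd.append("-d")
    return cmd


def run_stone(cmd):
    """Run the command; raises CalledProcessError if stone exits with an error."""
    return subprocess.run(cmd, check=True)


cmd = build_stone_command(
    ["a.jpg", "./path/to/images/"],
    palette=["#373028", "#422811"],
    labels=["A", "B"],
    debug=True,
)
print(shlex.join(cmd))  # shell-quoted form, handy for logging
```

Passing a list to `subprocess.run` bypasses the shell, so values such as `#373028` need no quoting; `shlex.join` is used only to print a copy-pasteable version.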

2.1 Use HEX values

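stone -p (or --palette) #373028 #422811 #513B2E

NB: Values start with '#' and are separated by spaces. In shells that treat # as the start of a comment (e.g., bash), quote each value, e.g., '#373028'.

2.2 Use RGB tuple values

stone -p 55,48,40 66,40,17 251,242,243

NB: The components of each value are separated by commas ','; multiple values are still separated by spaces.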
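The two notations describe the same colors. As an illustration, here is a minimal sketch that converts either form into an (R, G, B) tuple; `parse_color` is a hypothetical helper written for this example and is not the library's own parser.

```python
def parse_color(value):
    """Convert '#RRGGBB' or 'R,G,B' into an (R, G, B) tuple of ints."""
    value = value.strip()
    if value.startswith("#"):
        hex_digits = value[1:]
        if len(hex_digits) != 6:
            raise ValueError(f"expected 6 hex digits, got {value!r}")
        rgb = tuple(int(hex_digits[i:i + 2], 16) for i in (0, 2, 4))
    else:
        rgb = tuple(int(part) for part in value.split(","))
        if len(rgb) != 3:
            raise ValueError(f"expected 3 components, got {value!r}")
    if not all(0 <= c <= 255 for c in rgb):
        raise ValueError(f"component out of range in {value!r}")
    return rgb


print(parse_color("#373028"))
print(parse_color("55,48,40"))
```

Both calls describe the first palette entry used in the examples above, once in HEX and once as an RGB tuple.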

You can assign the labels for the skin tone categories, for example:

"CA": "#373028",

"CB": "#422811",

"CC": "#513B2E",

...

To achieve this, you can use the -l (or --labels) option:

3.1 Specify the labels directly using spaces as delimiters, e.g.,

stone -l A B C D E F G H

3.2 Specify the range of labels based on this pattern: <start><sep><end><sep><step>.

Specifically,

- <start>: the start label, can be a letter (e.g., A) or a number (e.g., 1);

- <end>: the end label, can be a letter (e.g., H) or a number (e.g., 8);

- <step>: the step to generate the label sequence, can be a number (e.g., 2 or -1), defaults to 1.

- <sep>: the separator between <start> and <end>, can be one of these symbols: -, ,, ~, :, ;, _.

Examples:

stone -l A-H-1

which is equivalent to stone -l A-H and stone -l A B C D E F G H.

stone -l A-H-2

which is equivalent to stone -l A C E G.

stone -l 1-8

which is equivalent to stone -l 1 2 3 4 5 6 7 8.

stone -l 1-8-3

which is equivalent to stone -l 1 4 7.

Important

Please make sure the number of labels is equal to the number of colors in the palette.
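To make the range pattern concrete, the sketch below expands a `<start><sep><end><sep><step>` string into a list of labels. It is an illustration under assumptions: `expand_labels` is a hypothetical helper, the end label is treated as inclusive (as in the examples above), and the handling of negative steps and non-range input is a guess rather than the library's documented behavior.

```python
import re

# <start><sep><end>[<sep><step>], with the separators listed above.
_RANGE = re.compile(
    r"^([A-Za-z]|\d+)[-,~:;_]([A-Za-z]|\d+)(?:[-,~:;_](-?\d+))?$"
)


def expand_labels(spec):
    """Expand a range such as 'A-H-2' or '1-8' into a list of labels."""
    match = _RANGE.match(spec)
    if match is None:
        return [spec]  # not a range: treat it as a single literal label
    start, end, step = match.group(1), match.group(2), int(match.group(3) or 1)
    if step == 0:
        raise ValueError("step must not be zero")
    if start.isdigit() != end.isdigit():
        raise ValueError("start and end must both be letters or both be numbers")
    if start.isdigit():
        lo, hi, to_label = int(start), int(end), str
    else:
        lo, hi, to_label = ord(start), ord(end), chr
    stop = hi + 1 if step > 0 else hi - 1  # make the end label inclusive
    return [to_label(v) for v in range(lo, stop, step)]


for spec in ("A-H-1", "A-H-2", "1-8", "1-8-3"):
    print(spec, "->", " ".join(expand_labels(spec)))
```

A quick check such as `len(expand_labels(spec)) == len(palette)` before calling stone can catch a mismatch between the number of labels and the number of palette colors.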

By default, the app puts the final report (result.csv) in the current folder.

To change the output folder:

stone -o (or --output) ./path/to/output/

The output folder will be created if it does not exist.

In result.csv, each row shows the color information of one detected face.

If more than one face is detected, there will be multiple rows for that image.

stone -d (or --debug)

This option will store the report image (like the demo portrait above) in the

./path/to/output/debug/<image type>/faces_<n> folder,

where <image type> indicates whether the image is color or bw (black/white), and <n> is the number of faces detected in the image.

By default, to save storage space, the app does not store report images.

As in the result.csv file, there will be more than one report image if two or more faces are detected.
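The folder layout above, combined with the report filename pattern <file>-<face id>.<extension>, can be sketched as a small path helper. This is only an illustration: `report_image_path` is a hypothetical function, and it assumes <file> is the name without its extension and that the extension includes the leading dot.

```python
from pathlib import PurePosixPath


def report_image_path(output_dir, image_type, n_faces, file_stem, face_id, extension):
    """Compose the expected location of one report image."""
    folder = PurePosixPath(output_dir) / "debug" / image_type / f"faces_{n_faces}"
    return folder / f"{file_stem}-{face_id}{extension}"


path = report_image_path("./path/to/output", "color", 1, "demo", 1, ".png")
print(path)
```

`PurePosixPath` is used so the example prints the same separators on every platform; use `pathlib.Path` when working with real files.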

6.1 The input images are color

stone -t (or --image_type) color

6.2 The input images are black/white

stone -t (or --image_type) bw

6.3 By default, the app will detect the image type automatically, i.e.,

stone -t (or --image_type) auto

For color images, we use the color palette to detect faces:

#373028 #422811 #513b2e #6f503c #81654f #9d7a54 #bea07e #e5c8a6 #e7c1b8 #f3dad6 #fbf2f3

(Please refer to our paper for more details.)

For bw images, we use the bw palette to detect faces:

#FFFFFF #F0F0F0 #E0E0E0 #D0D0D0 #C0C0C0 #B0B0B0 #A0A0A0 #909090 #808080 #707070 #606060 #505050 #404040 #303030 #202020 #101010 #000000

(Please refer to Leigh, A., & Susilo, T. (2009). Is voting skin-deep? Estimating the effect of candidate ballot photographs on election outcomes. Journal of Economic Psychology, 30(1), 61-70. for more details.)
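To give a feel for how dominant colors can be mapped onto a palette, here is a deliberately simplified sketch. It averages the dominant colors by their proportions and picks the palette entry with the smallest Euclidean distance in RGB. Both choices are assumptions made for illustration; the library may well use a different color space or distance measure, and `classify` is a hypothetical helper.

```python
COLOR_PALETTE = [
    "#373028", "#422811", "#513b2e", "#6f503c", "#81654f", "#9d7a54",
    "#bea07e", "#e5c8a6", "#e7c1b8", "#f3dad6", "#fbf2f3",
]
# Default-style labels for color images: CA, CB, CC, ...
LABELS = [f"C{chr(ord('A') + i)}" for i in range(len(COLOR_PALETTE))]


def hex_to_rgb(value):
    """Convert '#RRGGBB' into an (R, G, B) tuple of ints."""
    value = value.lstrip("#")
    return tuple(int(value[i:i + 2], 16) for i in (0, 2, 4))


def classify(dominant_colors):
    """dominant_colors: list of (hex color, proportion) pairs."""
    total = sum(p for _, p in dominant_colors)
    # Proportion-weighted mean of the dominant colors, channel by channel.
    mean = [
        sum(hex_to_rgb(c)[ch] * p for c, p in dominant_colors) / total
        for ch in range(3)
    ]

    def distance(palette_hex):
        # Squared Euclidean distance in RGB (illustrative assumption).
        return sum((m - v) ** 2 for m, v in zip(mean, hex_to_rgb(palette_hex)))

    best = min(range(len(COLOR_PALETTE)), key=lambda i: distance(COLOR_PALETTE[i]))
    return LABELS[best], COLOR_PALETTE[best]


label, tone = classify([("#C99676", 0.67), ("#805341", 0.33)])
print(label, tone)
```

For the dominant colors of the demo row in result.csv, this simplified rule selects #9d7a54 (CF), the same entry the report shows, but that agreement on one example does not mean the library works this way.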

To convert color images to black/white images and then classify them using the bw palette, run:

stone -bw (or --black_white)

For example:

NB: we do not do the opposite, i.e., convert black/white images to color images, because current AI models cannot accurately "guess" the skin color from a black/white image, which could further bias the analysis results.

The remaining CONFIG parameters are used for face detection.

Please refer to https://stackoverflow.com/a/20805153/8860079 for detailed information.

stone --n_workers <Any Positive Integer>

Use --n_workers to specify the number of workers to process images in parallel; it defaults to the number of CPUs in your system.

You can refer to the following code snippet:

import stone

from json import dumps

# process the image

result = stone.process(image_path, image_type, palette, *other_args, return_report_image=True)

# show the report image

report_images = result.pop("report_images") # obtain and remove the report image from the `result`

face_id = 1

stone.show(report_images[face_id])

# convert the result to json

result_json = dumps(result)

stone.process is the main function to process the image.

It has the same parameters as the command line version.

It returns a dict that contains the processing result and, if requested (i.e., return_report_image=True), the report image(s).

You can then use stone.show to show the report image(s) and convert the result to JSON format.
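Once you have the result, you will often want just a few fields per face. The sketch below assumes the dict has the same shape as the JSON example in this section (it parses a copy of that example rather than calling the library), and `tone_summary` is a hypothetical helper.

```python
import json

# Copied from the JSON example in this section; in practice `result` would be
# the dict returned by stone.process.
RESULT_JSON = """
{
  "basename": "demo",
  "extension": ".png",
  "image_type": "color",
  "faces": [
    {
      "face_id": 1,
      "dominant_colors": [
        {"color": "#C99676", "percent": "0.67"},
        {"color": "#805341", "percent": "0.33"}
      ],
      "skin_tone": "#9D7A54",
      "tone_label": "CF",
      "accuracy": 86.27
    }
  ]
}
"""


def tone_summary(result):
    """Return one small record per detected face."""
    filename = result["basename"] + result["extension"]
    records = []
    for face in result["faces"]:
        # "percent" is a string in the example, so convert before comparing.
        top = max(face["dominant_colors"], key=lambda c: float(c["percent"]))
        records.append({
            "file": filename,
            "face_id": face["face_id"],
            "tone_label": face["tone_label"],
            "top_color": top["color"],
        })
    return records


summary = tone_summary(json.loads(RESULT_JSON))
print(summary)
```

Because there is one record per face, an image with several detected faces yields several records, mirroring the rows in result.csv.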

The result_json will be like:

{

"basename": "demo",

"extension": ".png",

"image_type": "color",

"faces": [

{

"face_id": 1,

"dominant_colors": [

{

"color": "#C99676",

"percent": "0.67"

},

{

"color": "#805341",

"percent": "0.33"

}

],

"skin_tone": "#9D7A54",

"tone_label": "CF",

"accuracy": 86.27

}

]

}

If you are interested in our work, please cite:

@article{https://doi.org/10.1111/ssqu.13242,

author = {Rej\'{o}n Pi\~{n}a, Ren\'{e} Alejandro and Ma, Chenglong},

title = {Classification Algorithm for Skin Color (CASCo): A new tool to measure skin color in social science research},

journal = {Social Science Quarterly},

volume = {n/a},

number = {n/a},

pages = {},

keywords = {colorism, measurement, photo elicitation, racism, skin color, spectrometers},

doi = {https://doi.org/10.1111/ssqu.13242},

url = {https://onlinelibrary.wiley.com/doi/abs/10.1111/ssqu.13242},

eprint = {https://onlinelibrary.wiley.com/doi/pdf/10.1111/ssqu.13242},

abstract = {Abstract Objective A growing body of literature reveals that skin color has significant effects on people's income, health, education, and employment. However, the ways in which skin color has been measured in empirical research have been criticized for being inaccurate, if not subjective and biased. Objective Introduce an objective, automatic, accessible and customizable Classification Algorithm for Skin Color (CASCo). Methods We review the methods traditionally used to measure skin color (verbal scales, visual aids or color palettes, photo elicitation, spectrometers and image-based algorithms), noting their shortcomings. We highlight the need for a different tool to measure skin color. Results We present CASCo, a (social researcher-friendly) Python library that uses face detection, skin segmentation and k-means clustering algorithms to determine the skin tone category of portraits. Conclusion After assessing the merits and shortcomings of all the methods available, we argue CASCo is well equipped to overcome most challenges and objections posed against its alternatives. While acknowledging its limitations, we contend that CASCo should complement researchers' toolkit in this area.}

}

👋 Welcome to SkinToneClassifier! We're excited to have your contributions. Here's how you can get involved:

- 💡 Discuss New Ideas: Have a creative idea or suggestion? Start a discussion in the Discussions tab to share your thoughts and gather feedback from the community.

- ❓ Ask Questions: Got questions or need clarification on something in the repository? Feel free to open an Issue labeled as a "question" or participate in Discussions.

- 🐛 Issue a Bug: If you've identified a bug or an issue with the code, please open a new Issue with a clear description of the problem, steps to reproduce it, and your environment details.

- ✨ Introduce New Features: Want to add a new feature or enhancement to the project? Fork the repository, create a new branch, and submit a Pull Request with your changes. Make sure to follow our contribution guidelines.

- 💖 Funding: If you'd like to financially support the project, you can do so by sponsoring the repository on GitHub. Your contributions help us maintain and improve the project.

The images used in this project are from Flickr-Faces-HQ Dataset (FFHQ), which is licensed under the Creative Commons BY-NC-SA 4.0 license.

Thank you for considering contributing to SkinToneClassifier. We value your input and look forward to collaborating with you!