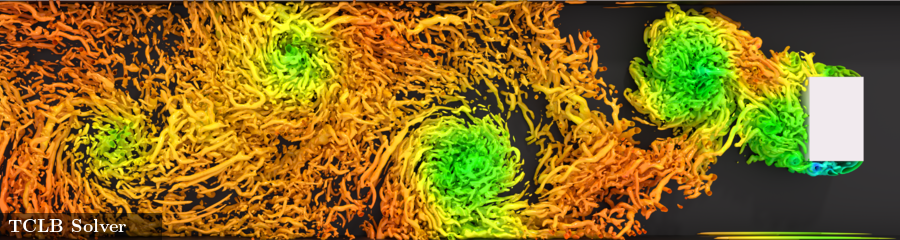

TCLB is an MPI+CUDA, MPI+CPU or MPI+HIP high-performance Computational Fluid Dynamics simulation code, based on the Lattice Boltzmann Method. It provides a clear interface for computing complex physics and for implementing new models.

Stable release: master branch

Current release: develop branch

Install:

git clone https://github.com/CFD-GO/TCLB.git

cd TCLB

Configure:

make configure

./configure

Compile:

make d2q9

Run:

CLB/d2q9/main example/flow/2d/karman.xml

The documentation (including tutorials) is published at docs.tclb.io.

For the develop version, the most recent documentation can be found at develop.docs.tclb.io.

You can contribute to the documentation at CFD-GO/TCLB_docs.

This code is designed to run on Linux with CUDA. We strongly recommend using Linux for compilation, computation and postprocessing.

Nevertheless, TCLB can be compiled on Windows using the Windows Subsystem for Linux, with CUDA supported on some system configurations (see nVidia's website for more info). It can also be compiled on macOS (CPU only). Both Debian-based and Red Hat-based Linux distributions are supported by the install.sh script described below, as is macOS (with the brew package manager).

For the code to compile and work you'll need a few things:

- R and some R packages (optparse, rtemplate, gvector, polyAlgebra)

- MPI. We recommend OpenMPI

- To use your GPU, you'll need nVidia CUDA or AMD HIP/ROCm

Optionally, you may need:

- To integrate TCLB with R, you'll need R package rinside

- To integrate TCLB with Python, you'll need Python and NumPy, including their libraries and headers

- To develop a model using Python, you'll need python, sympy and R package reticulate
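Before configuring, it can help to check which of the tools listed above are already on your PATH. The following is a minimal sketch, not part of TCLB: the helper names `have_tool` and `report_tools` are hypothetical, and the executable names (`Rscript`, `mpirun`, `nvcc`, `hipcc`, `python3`) are assumptions about how R, MPI, CUDA, HIP and Python are typically exposed on a system.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the named command is available on PATH.
have_tool() {
    command -v "$1" >/dev/null 2>&1
}

# Hypothetical helper: prints one status line per tool and
# returns non-zero if at least one of them is missing.
report_tools() {
    missing=0
    for tool in "$@"; do
        if have_tool "$tool"; then
            echo "found:   $tool"
        else
            echo "missing: $tool"
            missing=1
        fi
    done
    return "$missing"
}

# Required by TCLB: R and MPI (executable names are assumptions).
report_tools Rscript mpirun || echo "Install the missing required tools before running ./configure"

# Optional: GPU toolchains and Python (missing entries are not an error).
report_tools nvcc hipcc python3 || true
```

The script only reports what it finds; it does not check the R packages, which still need to be installed separately.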

You can install many of these with the provided tools/install.sh script (note that this requires sudo):

sudo tools/install.sh essentials # Installs essential system packages needed by TCLB

sudo tools/install.sh r # Installs R

sudo tools/install.sh openmpi # Installs OpenMPI

tools/install.sh rdep # Installs needed R packages

sudo tools/install.sh cuda # Installs CUDA (we recommend doing this on your own)

sudo tools/install.sh python-dev # Installs Python libraries with headers

You can run the tools/install.sh script with the --dry option, which will print the commands to run, so you can run them on your own.

We do not recommend running anything with sudo without first checking what it does.

If you want a more recent version, you could try the development branch with git checkout develop.

To compile the code for CPU, you can use the --disable-cuda option for ./configure:

./configure --disable-cuda

To compile the code for AMD GPUs (ROCm), you can use the --enable-hip option for ./configure:

./configure --enable-hip
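The choice between these configure options can be scripted. The sketch below is an illustration only: the function name `pick_configure_flags` is hypothetical, detecting a toolchain by looking for `nvcc` or `hipcc` on PATH is an assumption, and it assumes that a plain `./configure` targets CUDA, as the text above implies. It prints a suggested command rather than running it.

```shell
#!/bin/sh
# Hypothetical helper: choose ./configure flags from the compilers on PATH.
# Only the two flags mentioned in this README are used.
pick_configure_flags() {
    if command -v nvcc >/dev/null 2>&1; then
        echo ""                  # CUDA compiler found: assume no extra flag is needed
    elif command -v hipcc >/dev/null 2>&1; then
        echo "--enable-hip"      # AMD GPUs (ROCm)
    else
        echo "--disable-cuda"    # no GPU compiler found: CPU build
    fi
}

flags=$(pick_configure_flags)
echo "Suggested command: ./configure $flags"
```

If both toolchains are installed, this sketch prefers CUDA; pass the flag by hand if you want a different target.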

To run TCLB in parallel (on multiple CPUs or multiple GPUs), you can use the standard MPI launch syntax:

mpirun -np 8 CLB/d2q9/main example/flow/2d/karman.xml

To assist with using TCLB on HPC clusters (SLURM/PBS), there are scripts provided in the TCLB_cluster repository.
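The parallel run command above can be parameterised by process count, model and case file. This is a minimal sketch: the function name `tclb_mpi_cmd` is hypothetical, and the `CLB/<model>/main` layout is inferred from the d2q9 example rather than taken from the documentation. The function prints the command so it can be inspected before it is run.

```shell
#!/bin/sh
# Hypothetical helper: build the mpirun command line for a given
# process count, model name and case file.
# Assumes binaries live at CLB/<model>/main, as in the d2q9 example.
tclb_mpi_cmd() {
    nranks=$1
    model=$2
    case_file=$3
    echo "mpirun -np $nranks CLB/$model/main $case_file"
}

# Print the command for 8 processes on the Karman vortex street example.
tclb_mpi_cmd 8 d2q9 example/flow/2d/karman.xml
```

To execute the printed command from the TCLB directory, it could be passed to the shell, for example with `sh -c "$(tclb_mpi_cmd 8 d2q9 example/flow/2d/karman.xml)"`.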

TCLB code can be coupled with Discrete Element Method (DEM) codes, to enable computation of flow with particles.

The DEM codes that TCLB can be integrated with are:

Refer to the documentation for instructions on compilation and coupling.

TCLB development began in 2012 with the aim of providing a framework for efficient CFD computations with LBM, mainly for research.

Author: Łukasz Łaniewski-Wołłk

Major contributors:

Contributors:

- Nathan Di Vaira

- Grzegorz Gruszczyński

- Bryce Hill

- Jon McCullough

- Paweł Obrępalski

- Wojciech Regulski

- Mariusz Rutkowski

- Dmytro Sashko

Developed at:

- Zakład Aerodynamiki at Politechnika Warszawska (Warsaw University of Technology)

- School of Mechanical & Mining Engineering at University of Queensland

- Interdisciplinary Centre for Mathematical and Computational Modelling at University of Warsaw

Please use appropriate citations if you use this software in any research publication. The publication should cite the original paper about TCLB and the papers describing the LBM models used. You can find the list of TCLB publications at docs.tclb.io/general-info/publications/. Information about published articles is also given in the source code of the models. The code can additionally be cited by its Zenodo DOI.

This software is distributed under the GPL v3 License.

If you need this software under a different license, please contact the main author.

Contact: lukasz.laniewski(monkey)pw.edu.pl