bupt-gamma / cpf

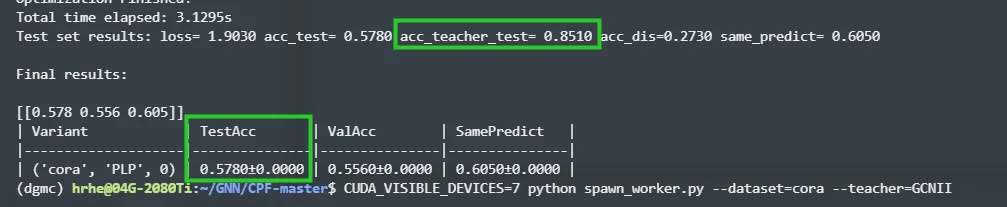

The official code of the WWW 2021 paper: Extract the Knowledge of Graph Neural Networks and Go Beyond it: An Effective Knowledge Distillation Framework

Home Page: https://arxiv.org/pdf/2103.02885.pdf