Eval 1000: 39%|###9 | 259/660 [2:45:08<3:45:04, 33.68s/it]

Eval 1000: 39%|###9 | 260/660 [2:45:45<3:51:40, 34.75s/it]

Eval 1000: 40%|###9 | 261/660 [2:46:32<4:16:02, 38.50s/it]

Eval 1000: 40%|###9 | 262/660 [2:46:48<3:31:01, 31.81s/it]

Eval 1000: 40%|###9 | 263/660 [2:47:36<4:01:20, 36.48s/it]

Eval 1000: 40%|#### | 264/660 [2:48:17<4:10:44, 37.99s/it]

Eval 1000: 40%|#### | 265/660 [2:48:55<4:09:58, 37.97s/it]

Eval 1000: 40%|#### | 266/660 [2:49:36<4:15:21, 38.89s/it]

Eval 1000: 40%|#### | 267/660 [2:50:14<4:12:22, 38.53s/it]

Eval 1000: 41%|#### | 268/660 [2:50:54<4:14:42, 38.98s/it]

Eval 1000: 41%|#### | 269/660 [2:51:53<4:52:46, 44.93s/it]

Eval 1000: 41%|#### | 270/660 [2:52:38<4:52:35, 45.02s/it]

Eval 1000: 41%|####1 | 271/660 [2:53:20<4:45:23, 44.02s/it]

Eval 1000: 41%|####1 | 272/660 [2:53:57<4:32:12, 42.09s/it]

Eval 1000: 41%|####1 | 273/660 [2:54:38<4:30:02, 41.87s/it]

Eval 1000: 42%|####1 | 274/660 [2:55:20<4:28:42, 41.77s/it]

Eval 1000: 42%|####1 | 275/660 [2:56:09<4:42:37, 44.05s/it]

Eval 1000: 42%|####1 | 276/660 [2:57:02<4:57:38, 46.51s/it]

Eval 1000: 42%|####1 | 277/660 [2:57:19<4:01:31, 37.84s/it]

Eval 1000: 42%|####2 | 278/660 [2:57:40<3:28:02, 32.68s/it]

Eval 1000: 42%|####2 | 279/660 [2:57:59<3:00:44, 28.46s/it]

Eval 1000: 42%|####2 | 280/660 [2:58:39<3:22:59, 32.05s/it]

Eval 1000: 43%|####2 | 281/660 [2:59:21<3:40:47, 34.95s/it]

Eval 1000: 43%|####2 | 282/660 [3:00:04<3:55:35, 37.39s/it]

Eval 1000: 43%|####2 | 283/660 [3:00:55<4:20:37, 41.48s/it]

Eval 1000: 43%|####3 | 284/660 [3:01:39<4:24:33, 42.22s/it]

Eval 1000: 43%|####3 | 285/660 [3:02:22<4:26:11, 42.59s/it]

Eval 1000: 43%|####3 | 286/660 [3:03:13<4:41:31, 45.16s/it]

Eval 1000: 43%|####3 | 287/660 [3:03:58<4:39:56, 45.03s/it]

Eval 1000: 44%|####3 | 288/660 [3:04:38<4:29:30, 43.47s/it]

Eval 1000: 44%|####3 | 289/660 [3:04:54<3:37:57, 35.25s/it]

Eval 1000: 44%|####3 | 290/660 [3:05:10<3:02:42, 29.63s/it]

Eval 1000: 44%|####4 | 291/660 [3:05:58<3:35:32, 35.05s/it]

Eval 1000: 44%|####4 | 292/660 [3:06:41<3:49:31, 37.42s/it]

Eval 1000: 44%|####4 | 293/660 [3:06:59<3:13:29, 31.63s/it]

Eval 1000: 45%|####4 | 294/660 [3:07:15<2:43:25, 26.79s/it]

Eval 1000: 45%|####4 | 295/660 [3:07:32<2:25:47, 23.97s/it]

Eval 1000: 45%|####4 | 296/660 [3:08:28<3:24:13, 33.66s/it]

Eval 1000: 45%|####5 | 297/660 [3:08:49<2:59:40, 29.70s/it]

Eval 1000: 45%|####5 | 298/660 [3:09:32<3:24:05, 33.83s/it]

Eval 1000: 45%|####5 | 299/660 [3:09:52<2:58:49, 29.72s/it]

Eval 1000: 45%|####5 | 300/660 [3:10:36<3:22:46, 33.80s/it]

Eval 1000: 46%|####5 | 301/660 [3:11:21<3:42:01, 37.11s/it]

Eval 1000: 46%|####5 | 302/660 [3:12:10<4:03:41, 40.84s/it]

Eval 1000: 46%|####5 | 303/660 [3:12:51<4:03:45, 40.97s/it]

Eval 1000: 46%|####6 | 304/660 [3:13:39<4:14:45, 42.94s/it]

Eval 1000: 46%|####6 | 305/660 [3:13:59<3:33:06, 36.02s/it]

Eval 1000: 46%|####6 | 306/660 [3:14:55<4:07:27, 41.94s/it]

Eval 1000: 47%|####6 | 307/660 [3:15:39<4:11:57, 42.83s/it]

Eval 1000: 47%|####6 | 308/660 [3:15:56<3:24:41, 34.89s/it]

Eval 1000: 47%|####6 | 309/660 [3:16:42<3:43:38, 38.23s/it]

Eval 1000: 47%|####6 | 310/660 [3:17:23<3:48:52, 39.24s/it]

Eval 1000: 47%|####7 | 311/660 [3:17:24<2:41:26, 27.76s/it]

Run ID is 1541052899

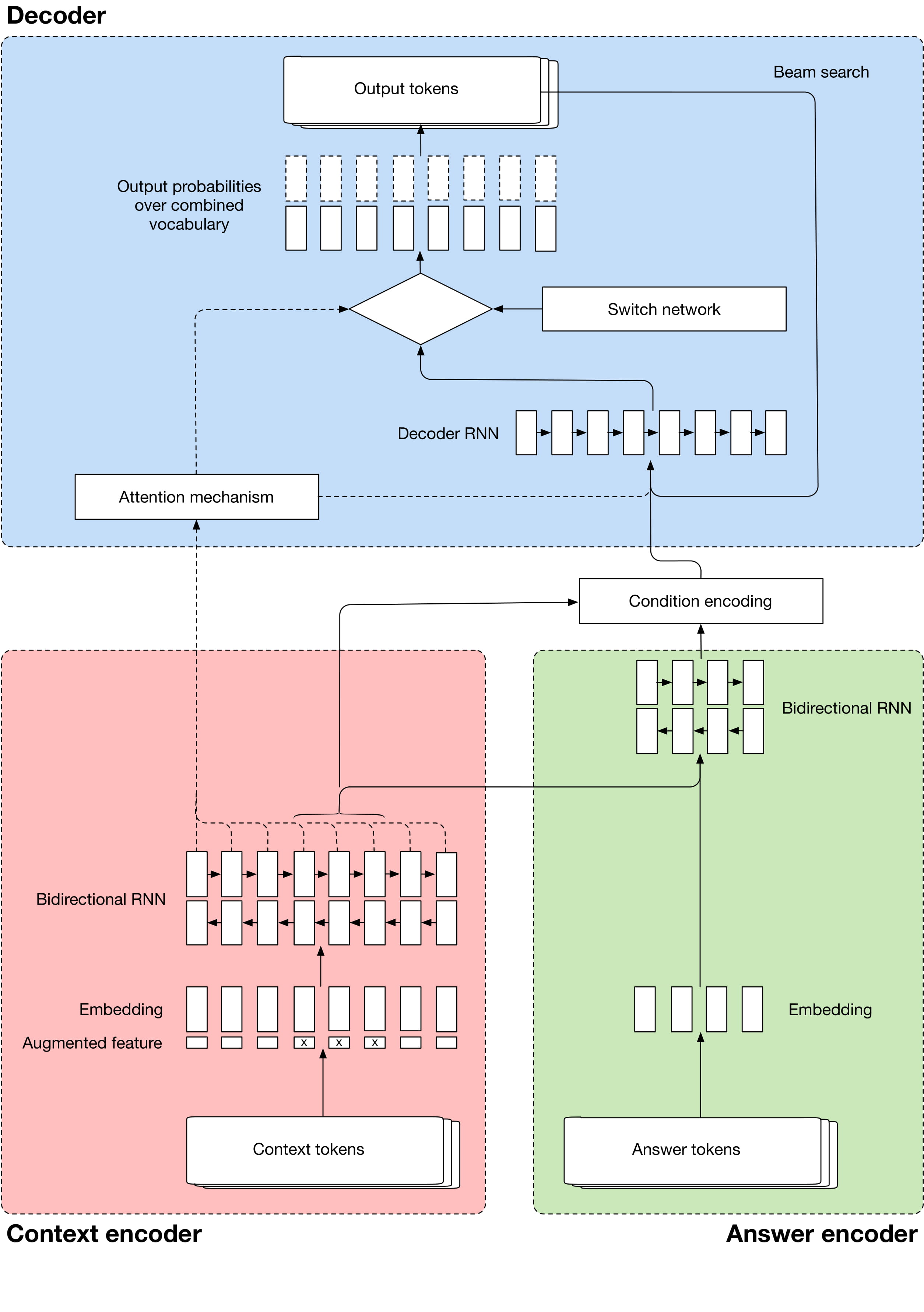

Model type is MALUUBA

Loaded SQuAD with 87599 triples

Modifying Seq2Seq model to incorporate RL rewards

Total number of trainable parameters: 39165281

Traceback (most recent call last):
  File "C:\Users\Akashtyagi\AppData\Local\Programs\Python\Python36\lib\site-packages\tensorflow\python\client\session.py", line 1327, in _do_call
    return fn(*args)
  File "C:\Users\Akashtyagi\AppData\Local\Programs\Python\Python36\lib\site-packages\tensorflow\python\client\session.py", line 1312, in _run_fn
    options, feed_dict, fetch_list, target_list, run_metadata)
  File "C:\Users\Akashtyagi\AppData\Local\Programs\Python\Python36\lib\site-packages\tensorflow\python\client\session.py", line 1420, in _call_tf_sessionrun
    status, run_metadata)
  File "C:\Users\Akashtyagi\AppData\Local\Programs\Python\Python36\lib\site-packages\tensorflow\python\framework\errors_impl.py", line 516, in __exit__
    c_api.TF_GetCode(self.status.status))
tensorflow.python.framework.errors_impl.OutOfRangeError: End of sequence
    [[Node: IteratorGetNext = IteratorGetNext[output_shapes=[[?,?], [?,?],[?,?], [?], [?], [?,?], [?,?], [?,?,?], [?], [?,?], [?,?], [?], [?,?], [?]], output_types=[DT_STRING, DT_INT32, DT_INT32, DT_INT32, DT_INT32, DT_STRING, DT_INT32, DT_FLOAT, DT_INT32, DT_STRING, DT_INT32, DT_INT32, DT_INT32, DT_INT32], _device="/job:localhost/replica:0/task:0/device:CPU:0"](Iterator)]]

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "./src/train.py", line 415, in <module>
    tf.app.run()
  File "C:\Users\Akashtyagi\AppData\Local\Programs\Python\Python36\lib\site-packages\tensorflow\python\platform\app.py", line 126, in run
    _sys.exit(main(argv))
  File "./src/train.py", line 373, in main
    dev_batch, curr_batch_size = dev_data_source.get_batch()
  File "C:\QG_blooms\src\datasources\squad_streamer.py", line 43, in get_batch
    return self.sess.run([self.batch_as_nested_tuple, self.batch_len])
  File "C:\Users\Akashtyagi\AppData\Local\Programs\Python\Python36\lib\site-packages\tensorflow\python\client\session.py", line 905, in run
    run_metadata_ptr)
  File "C:\Users\Akashtyagi\AppData\Local\Programs\Python\Python36\lib\site-packages\tensorflow\python\client\session.py", line 1140, in _run
    feed_dict_tensor, options, run_metadata)
  File "C:\Users\Akashtyagi\AppData\Local\Programs\Python\Python36\lib\site-packages\tensorflow\python\client\session.py", line 1321, in _do_run
    run_metadata)
  File "C:\Users\Akashtyagi\AppData\Local\Programs\Python\Python36\lib\site-packages\tensorflow\python\client\session.py", line 1340, in _do_call
    raise type(e)(node_def, op, message)
tensorflow.python.framework.errors_impl.OutOfRangeError: End of sequence
    [[Node: IteratorGetNext = IteratorGetNext[output_shapes=[[?,?], [?,?],[?,?], [?], [?], [?,?], [?,?], [?,?,?], [?], [?,?], [?,?], [?], [?,?], [?]], output_types=[DT_STRING, DT_INT32, DT_INT32, DT_INT32, DT_INT32, DT_STRING, DT_INT32, DT_FLOAT, DT_INT32, DT_STRING, DT_INT32, DT_INT32, DT_INT32, DT_INT32], _device="/job:localhost/replica:0/task:0/device:CPU:0"](Iterator)]]

Caused by op 'IteratorGetNext', defined at:
  File "./src/train.py", line 415, in <module>
    tf.app.run()
  File "C:\Users\Akashtyagi\AppData\Local\Programs\Python\Python36\lib\site-packages\tensorflow\python\platform\app.py", line 126, in run
    _sys.exit(main(argv))
  File "./src/train.py", line 145, in main
    with SquadStreamer(vocab, FLAGS.batch_size, FLAGS.num_epochs, shuffle=True)as train_data_source, SquadStreamer(vocab, FLAGS.eval_batch_size, 1, shuffle=True) as dev_data_source:
  File "C:\QG_blooms\src\datasources\squad_streamer.py", line 24, in __enter__
    self.build_data_pipeline(self.batch_size)
  File "C:\QG_blooms\src\datasources\squad_streamer.py", line 107, in build_data_pipeline
    self.batch_as_nested_tuple = self.iterator.get_next()
  File "C:\Users\Akashtyagi\AppData\Local\Programs\Python\Python36\lib\site-packages\tensorflow\python\data\ops\iterator_ops.py", line 366, in get_next
    name=name)), self._output_types,
  File "C:\Users\Akashtyagi\AppData\Local\Programs\Python\Python36\lib\site-packages\tensorflow\python\ops\gen_dataset_ops.py", line 1484, in iterator_get_next
    output_shapes=output_shapes, name=name)
  File "C:\Users\Akashtyagi\AppData\Local\Programs\Python\Python36\lib\site-packages\tensorflow\python\framework\op_def_library.py", line 787, in _apply_op_helper
    op_def=op_def)
  File "C:\Users\Akashtyagi\AppData\Local\Programs\Python\Python36\lib\site-packages\tensorflow\python\framework\ops.py", line 3290, in create_op
    op_def=op_def)
  File "C:\Users\Akashtyagi\AppData\Local\Programs\Python\Python36\lib\site-packages\tensorflow\python\framework\ops.py", line 1654, in __init__
    self._traceback = self._graph._extract_stack() # pylint: disable=protected-access

OutOfRangeError (see above for traceback): End of sequence
    [[Node: IteratorGetNext = IteratorGetNext[output_shapes=[[?,?], [?,?],[?,?], [?], [?], [?,?], [?,?], [?,?,?], [?], [?,?], [?,?], [?], [?,?], [?]], output_types=[DT_STRING, DT_INT32, DT_INT32, DT_INT32, DT_INT32, DT_STRING, DT_INT32, DT_FLOAT, DT_INT32, DT_STRING, DT_INT32, DT_INT32, DT_INT32, DT_INT32], _device="/job:localhost/replica:0/task:0/device:CPU:0"](Iterator)]]

Exception ignored in: <bound method tqdm.__del__ of Eval 1000: 47%|####7 | 311/660 [3:17:24<2:41:26, 27.76s/it]>
Traceback (most recent call last):
  File "C:\Users\Akashtyagi\AppData\Local\Programs\Python\Python36\lib\site-packages\tqdm\_tqdm.py", line 931, in __del__
    self.close()
  File "C:\Users\Akashtyagi\AppData\Local\Programs\Python\Python36\lib\site-packages\tqdm\_tqdm.py", line 1133, in close
    self._decr_instances(self)
  File "C:\Users\Akashtyagi\AppData\Local\Programs\Python\Python36\lib\site-packages\tqdm\_tqdm.py", line 496, in _decr_instances
    cls.monitor.exit()
  File "C:\Users\Akashtyagi\AppData\Local\Programs\Python\Python36\lib\site-packages\tqdm\_monitor.py", line 52, in exit
    self.join()
  File "C:\Users\Akashtyagi\AppData\Local\Programs\Python\Python36\lib\threading.py", line 1053, in join
    raise RuntimeError("cannot join current thread")
RuntimeError: cannot join current thread
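The `OutOfRangeError: End of sequence` raised from `IteratorGetNext` means the `tf.data` iterator behind `dev_data_source.get_batch()` had no elements left. The dev `SquadStreamer` is built with `num_epochs` set to 1, and the eval loop had completed only 311 of the 660 batches it expected. Below is a minimal, framework-free sketch of one way to guard such a loop, assuming the dev source can be rewound before each evaluation. `FiniteStreamer`, `EndOfSequence`, `reset` and `evaluate` are hypothetical names used only for illustration. In TF 1.x the analogous steps would be catching `tf.errors.OutOfRangeError` and re-running an initializable iterator's `initializer` op, which assumes `squad_streamer.py` uses such an iterator. This log does not show how it is built.

```python
class EndOfSequence(Exception):
    """Hypothetical stand-in for tf.errors.OutOfRangeError."""


class FiniteStreamer:
    """Hypothetical stand-in for a dev-set streamer with a fixed epoch count."""

    def __init__(self, examples, batch_size, num_epochs=1):
        self.examples = list(examples)
        self.batch_size = batch_size
        self.num_epochs = num_epochs
        self.reset()

    def _make_batches(self):
        # Yield consecutive slices of the data for num_epochs passes.
        for _ in range(self.num_epochs):
            for i in range(0, len(self.examples), self.batch_size):
                yield self.examples[i:i + self.batch_size]

    def reset(self):
        # Rewind to the first batch (analogous to re-running iterator.initializer).
        self._batches = self._make_batches()

    def get_batch(self):
        # Return (batch, batch_length); signal exhaustion like IteratorGetNext does.
        try:
            batch = next(self._batches)
        except StopIteration:
            raise EndOfSequence("End of sequence") from None
        return batch, len(batch)


def evaluate(source, max_batches):
    """Consume up to max_batches batches and return the number of examples seen."""
    source.reset()  # start every eval pass from the beginning of the dev set
    seen = 0
    for _ in range(max_batches):
        try:
            _batch, size = source.get_batch()
        except EndOfSequence:
            break  # dev set exhausted: end the pass instead of crashing
        seen += size
    return seen
```

Rewinding at the start of `evaluate` keeps a later evaluation from starting on a partly consumed single-epoch iterator, and breaking on the end-of-sequence signal lets a dev set shorter than the expected batch count end the pass cleanly.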