Comments (19)

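Latest progress update

- Using torch.utils.checkpoint + LoRA, with fp16 and batch_size=1, GPU memory usage drops to about 15 GB. I am cleaning up the code and will release it later.

- This should let many more GPUs run the fine-tuning, and it may even leave room for a larger batch size.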
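
As a rough illustration of the checkpointing half of this, the sketch below wraps each block of a toy model in `torch.utils.checkpoint.checkpoint`, so that activations inside a block are recomputed during the backward pass instead of being kept in memory. The `CheckpointedStack` class, its layer sizes, and the input shape are hypothetical and are not taken from the zero_nlp code; the LoRA part is not shown.

```python
import torch
from torch import nn
from torch.utils.checkpoint import checkpoint


class CheckpointedStack(nn.Module):
    """Toy stack of feed-forward blocks; a stand-in for transformer layers."""

    def __init__(self, hidden: int = 64, num_layers: int = 4):
        super().__init__()
        self.layers = nn.ModuleList(
            [
                nn.Sequential(nn.Linear(hidden, hidden), nn.GELU())
                for _ in range(num_layers)
            ]
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        for layer in self.layers:
            if self.training:
                # Do not store this block's intermediate activations;
                # they are recomputed when backward reaches the block.
                x = checkpoint(layer, x, use_reentrant=False)
            else:
                x = layer(x)
        return x


model = CheckpointedStack().train()
# Shape is (batch_size, context_length, hidden); values are illustrative.
inputs = torch.randn(1, 32, 64, requires_grad=True)
loss = model(inputs).sum()
loss.backward()
```

The trade-off is extra compute: each checkpointed block runs its forward pass a second time during backward, in exchange for a smaller activation footprint.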

from zero_nlp.

Thanks for reporting the problem. Try setting batch_size to 1 everywhere and context_length=32. I will keep testing the other cases.

from zero_nlp.

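> Thanks for reporting the problem. Try setting batch_size to 1 everywhere and context_length=32. I will keep testing the other cases.

Still out of memory after the change. The GPU is an RTX 4090 24GB. My configuration is below (if it helps, I can open SSH access for you to investigate):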

    context_length = 32

    args = TrainingArguments(
        output_dir="test003",
        per_device_train_batch_size=1,
        per_device_eval_batch_size=1,
        evaluation_strategy="steps",
        eval_steps=100,
        logging_steps=100,
        gradient_accumulation_steps=8,
        num_train_epochs=1,
        weight_decay=0.1,
        warmup_steps=1_000,
        lr_scheduler_type="cosine",
        learning_rate=5e-4,
        save_steps=100,
        fp16=True,
        push_to_hub=False,
    )

    Variable._execution_engine.run_backward(  # Calls into the C++ engine to run the backward pass
    torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 294.00 MiB (GPU 0; 23.65 GiB total capacity; 21.84 GiB already allocated; 152.56 MiB free; 22.11 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF
    0%| | 0/595 [00:01<?, ?it/s]
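
The numbers in the error message already say something about the hint at its end: fragmentation is only a plausible cause when reserved memory is much larger than allocated memory. Below is a minimal sketch that pulls the figures out of a message in this format and computes the gap. The `parse_oom` helper and its regular expressions are illustrative and are not part of PyTorch.

```python
import re

# Error text copied from the traceback above (only the part with numbers).
MESSAGE = (
    "CUDA out of memory. Tried to allocate 294.00 MiB "
    "(GPU 0; 23.65 GiB total capacity; 21.84 GiB already allocated; "
    "152.56 MiB free; 22.11 GiB reserved in total by PyTorch)"
)

UNIT_TO_GIB = {"MiB": 1 / 1024, "GiB": 1.0}


def parse_oom(message: str) -> dict:
    """Return the memory figures of an OOM message, all converted to GiB."""
    patterns = {
        "requested": r"Tried to allocate ([\d.]+) (MiB|GiB)",
        "total": r"([\d.]+) (MiB|GiB) total capacity",
        "allocated": r"([\d.]+) (MiB|GiB) already allocated",
        "free": r"([\d.]+) (MiB|GiB) free",
        "reserved": r"([\d.]+) (MiB|GiB) reserved",
    }
    figures = {}
    for name, pattern in patterns.items():
        match = re.search(pattern, message)
        if match is None:
            raise ValueError(f"could not find '{name}' in message")
        value, unit = match.groups()
        figures[name] = float(value) * UNIT_TO_GIB[unit]
    return figures


figures = parse_oom(MESSAGE)
# Memory held by the caching allocator but not used by live tensors.
slack = figures["reserved"] - figures["allocated"]
print(f"reserved - allocated = {slack:.2f} GiB")
print(f"allocated / total    = {figures['allocated'] / figures['total']:.0%}")
```

Here the gap is about 0.27 GiB while live tensors already occupy roughly 92% of the card, so the allocator is not sitting on much spare memory. Tuning `max_split_size_mb` is therefore unlikely to be enough on its own; the workload itself probably has to shrink.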

from zero_nlp.

I checked and my torch version was 2.0, so I tried switching to 1.13 (still out of memory):

    Variable._execution_engine.run_backward(  # Calls into the C++ engine to run the backward pass
    torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 294.00 MiB (GPU 0; 23.65 GiB total capacity; 21.83 GiB already allocated; 52.56 MiB free; 22.10 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF
    0%| | 0/595 [00:01<?, ?it/s]

    (venv) user@calculator:~/ext/zero_nlp/simple_thu_chatglm6b$ pip show torch
    Name: torch
    Version: 1.13.0
    Summary: Tensors and Dynamic neural networks in Python with strong GPU acceleration
    Home-page: https://pytorch.org/
    Author: PyTorch Team
    Author-email: [email protected]
    License: BSD-3
    Location: /home/user/ext/zero_nlp/venv/lib/python3.10/site-packages
    Requires: nvidia-cublas-cu11, nvidia-cuda-nvrtc-cu11, nvidia-cuda-runtime-cu11, nvidia-cudnn-cu11, typing-extensions
    Required-by: accelerate, peft, pytorch-lightning, torchmetrics, torchvision, triton
    (venv) user@calculator:~/ext/zero_nlp/simple_thu_chatglm6b$

from zero_nlp.

The INT4-quantized model uses far less GPU memory. Is it possible to fine-tune the INT4 model directly?
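
For a sense of scale, the memory taken by the weights alone can be estimated from the parameter count and the bytes per parameter. The sketch below assumes roughly 6.2 billion parameters for ChatGLM-6B (an assumption, not stated in this thread) and ignores activations, gradients, optimizer state, and quantization overhead such as scale factors, so real usage will be higher.

```python
# Assumed parameter count for ChatGLM-6B; not taken from this thread.
NUM_PARAMS = 6.2e9

# Storage cost per parameter for each weight format.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(num_params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold the weights, in GiB."""
    return num_params * bytes_per_param / 1024**3


estimates = {
    fmt: weight_memory_gib(NUM_PARAMS, size)
    for fmt, size in BYTES_PER_PARAM.items()
}
for fmt, gib in estimates.items():
    print(f"{fmt}: {gib:5.1f} GiB")
```

Under these assumptions the fp16 weights come to about 11.5 GiB and the INT4 weights to about 2.9 GiB. This covers storage only; whether gradients can flow through an INT4 model depends on how the quantized layers are implemented, which this estimate says nothing about.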

from zero_nlp.

See the configuration in #5 (comment).

from zero_nlp.

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 148.00 MiB (GPU 0; 22.38 GiB total capacity; 21.49 GiB already allocated; 87.94 MiB free; 21.52 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

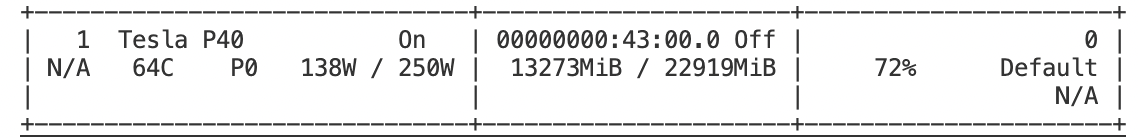

I am running on a P40 with 22 GB of GPU memory and hit the same problem, with context_length set to 32.

from zero_nlp.

> See the configuration in #5 (comment).

Tried it; it made no difference.

from zero_nlp.

22 GB of GPU memory is not enough.

> On March 23, 2023, at 17:26, Adherer wrote:
>
> torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 148.00 MiB (GPU 0; 22.38 GiB total capacity; 21.49 GiB already allocated; 87.94 MiB free; 21.52 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF
>
> I am running on a P40 with 22 GB of GPU memory and hit the same problem, with context_length set to 32.

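I looked at some other repos: with 8-bit quantization, fine-tuning fits in 16 GB of GPU memory, but there is currently no version that supports multi-GPU fine-tuning. So, are the following two optimizations on the roadmap?

- 8-bit/4-bit fine-tuning;

- single-node multi-GPU or multi-node multi-GPU training.

If you have plans along these lines, I would be glad to collaborate.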

from zero_nlp.

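I am currently working in two directions:

- using torch.utils.checkpoint to reduce GPU memory pressure;

- single-node multi-GPU training.

I have spent a day on it without much progress so far 😂. Still working on it.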

from zero_nlp.

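> I am currently working in two directions:
>
> - using torch.utils.checkpoint to reduce GPU memory pressure;
>
> - single-node multi-GPU training.
>
> I have spent a day on it without much progress so far 😂. Still working on it.

You can refer to this code: https://github.com/mymusise/ChatGLM-Tuning

I got it running and it is training now. When I have time tomorrow I will adapt it for training on Chinese data.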

from zero_nlp.

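>> I am currently working in two directions:
>>
>> - using torch.utils.checkpoint to reduce GPU memory pressure;
>>
>> - single-node multi-GPU training.
>>
>> I have spent a day on it without much progress so far 😂. Still working on it.
>
> You can refer to this code: https://github.com/mymusise/ChatGLM-Tuning I got it running and it is training now. When I have time tomorrow I will adapt it for training on Chinese data.

I got this one running too, but I am not sure whether something is wrong with my approach: the training results are not good, and the model seems to be talking nonsense.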

from zero_nlp.

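Quite a few people have already gotten it running. I am not sure what is going wrong on your side; the only requirement is enough GPU memory. #5 (comment)

As the screenshot shows, GPU memory usage while running is 24330 MB.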

from zero_nlp.

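> Quite a few people have already gotten it running. I am not sure what is going wrong on your side; the only requirement is enough GPU memory. #5 (comment)
>
> As the screenshot shows, GPU memory usage while running is 24330 MB.

I am no fan of Windows. I just booted into Windows on my dual-boot machine, a dialog asked whether to create a GPT partition table, I clicked OK, and the LVM partition holding my training set was wiped, so I have to set everything up again. At least it is a good chance to retry.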

from zero_nlp.

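> Quite a few people have already gotten it running. I am not sure what is going wrong on your side; the only requirement is enough GPU memory. #5 (comment)
>
> As the screenshot shows, GPU memory usage while running is 24330 MB.

The problem is solved: pruning the model is enough, and fine-tuning now runs with 13 GB+ of GPU memory: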

from zero_nlp.

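>> Quite a few people have already gotten it running. I am not sure what is going wrong on your side; the only requirement is enough GPU memory. #5 (comment)
>>
>> As the screenshot shows, GPU memory usage while running is 24330 MB.
>
> I am no fan of Windows. I just booted into Windows on my dual-boot machine, a dialog asked whether to create a GPT partition table, I clicked OK, and the LVM partition holding my training set was wiped, so I have to set everything up again. At least it is a good chance to retry.

The problem is solved: pruning the model is enough, and fine-tuning now runs with 13 GB+ of GPU memory: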

from zero_nlp.

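>>> Quite a few people have already gotten it running. I am not sure what is going wrong on your side; the only requirement is enough GPU memory. #5 (comment)
>>>
>>> As the screenshot shows, GPU memory usage while running is 24330 MB.
>>
>> I am no fan of Windows. I just booted into Windows on my dual-boot machine, a dialog asked whether to create a GPT partition table, I clicked OK, and the LVM partition holding my training set was wiped, so I have to set everything up again. At least it is a good chance to retry.
>
> The problem is solved: pruning the model is enough, and fine-tuning now runs with 13 GB+ of GPU memory:

How do I prune it…?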

from zero_nlp.
