Comments (33)

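Run `make clean`, then run `make` again.

The error log shows that the required dependencies were not fetched. Check whether downloading those packages hit a network timeout.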

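The cause was that the workspace in WORKSPACE was not defined. I copied the contents of WORKSPACE_CN into WORKSPACE and then built. However, the VM tends to freeze during the build. My VM has 8 cores and 8 GB of RAM; do I need to upgrade the hardware?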

Limit the number of jobs during the build: edit the Makefile and append `--jobs=xxx` to the corresponding build command.
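One way to pick a value for `xxx` is to cap parallel compile actions by available memory as well as by core count. The sketch below assumes roughly 2 GB of RAM per concurrent job; that figure is an illustrative rule of thumb, not a PrimiHub or Bazel requirement, and the function names are hypothetical. Bazel's `--jobs` flag itself is a real option.

```python
import os
from typing import Tuple


def suggest_bazel_jobs(cpus: int, mem_gb: float, gb_per_job: float = 2.0) -> int:
    """Suggest a value for Bazel's --jobs flag.

    Assumption: each concurrent compile action needs about `gb_per_job` GB
    of RAM. This is an illustrative rule of thumb, not a documented limit.
    """
    by_memory = int(mem_gb // gb_per_job)
    # Never exceed the core count, and always allow at least one job.
    return max(1, min(cpus, by_memory))


def host_resources() -> Tuple[int, float]:
    """Return (logical CPUs, total RAM in GB); os.sysconf is POSIX-only."""
    cpus = os.cpu_count() or 1
    mem_bytes = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    return cpus, mem_bytes / 1024 ** 3


if __name__ == "__main__":
    cpus, mem_gb = host_resources()
    print(f"--jobs={suggest_bazel_jobs(cpus, mem_gb)}")
```

On an 8-core, 8 GB VM this suggests about 3 or 4 jobs (the kernel usually reports a little less than the nominal 8 GB). Lower `gb_per_job` if the build stays stable, or raise it if the VM still freezes.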

The build succeeded. But when I then started a multi-party computation (MPC) task from the local build, I got the following error:

```
root@ubuntu:/home/mash/Desktop/primihub# ./bazel-bin/cli --server="192.168.14.129:50050" --task_lang=proto --task_type=0 --task_code="logistic_regression" --params="BatchSize:INT32:0:128,NumIters:INT32:0:100,Data_File:STRING:0:train_party_0;train_party_1;train_party_2,modelName:STRING:0:/data/result/lr_mode.csv" --input_datasets="Data_File"
I20230419 07:24:51.783396 142444 :468] use tls: 0
I20230419 07:24:51.783684 142444 cli.cc:478] SDK SubmitTask to: 192.168.14.129:50050
I20230419 07:24:51.785651 142444 cli.cc:382] SubmitTask...
E20230419 07:24:51.787084 142444 grpc_link_context.cc:227] submitTask to Node [defalut:192.168.14.129:50050:0:default] rpc failed. 14: failed to connect to all addresses
I20230419 07:24:51.787552 142444 cli.cc:491] SubmitTask time cost(ms): 2
```

The RPC cannot connect to the address.

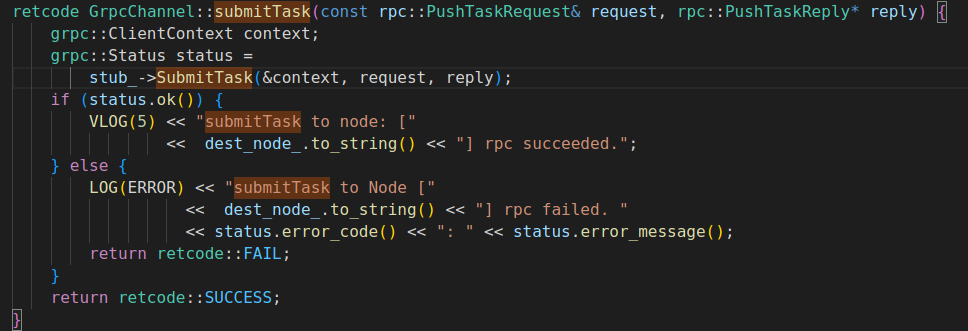

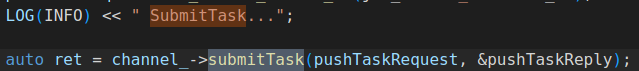

I found the corresponding source code under src/primihub; cli.cc and grpc_link_context.cc are as follows:

Check whether a proxy is configured locally, and try removing the proxy for intranet addresses.
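A quick way to see whether a proxy is configured in the shell that runs the node or the CLI is to list the proxy environment variables. To my knowledge, gRPC's C core consults `grpc_proxy`, `https_proxy` and `http_proxy` (with `no_grpc_proxy`/`no_proxy` for exclusions), while `telnet` ignores all of them; `all_proxy` is included because curl and similar tools read it. The helper name is hypothetical.

```python
import os
from typing import Dict, Mapping, Optional

# Variables gRPC's C core reads, to my knowledge (grpc_proxy, https_proxy,
# http_proxy, no_grpc_proxy, no_proxy), plus all_proxy, which curl uses.
PROXY_VARS = ("grpc_proxy", "https_proxy", "http_proxy", "all_proxy",
              "no_grpc_proxy", "no_proxy")


def active_proxy_settings(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return every proxy-related variable set to a non-empty value."""
    env = os.environ if env is None else env
    found: Dict[str, str] = {}
    for name in PROXY_VARS:
        # Tools disagree on case, so check both spellings.
        for key in (name, name.upper()):
            value = env.get(key, "")
            if value:
                found[key] = value
    return found


if __name__ == "__main__":
    settings = active_proxy_settings()
    if not settings:
        print("no proxy variables set")
    for key, value in sorted(settings.items()):
        print(f"{key}={value}")
```

If anything is printed, `unset` those variables, or add the node addresses (for example 192.168.14.129) to `no_proxy`, in the same shell before starting the node and submitting the task.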

After turning off all proxies, for both intranet and external traffic, I still get the same error.

Change the 127.0.0.1 in the location setting of config/node*.yaml to the machine's IP address and try again.
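To find every place that still points at the loopback address, a plain text search is enough. The thread does not show the layout of `config/node*.yaml`, so this sketch does not assume a YAML schema; it just reports each line containing the literal `127.0.0.1`. The function name is hypothetical; run it from the repository root.

```python
from pathlib import Path
from typing import List, Tuple


def find_loopback_entries(config_dir: str, pattern: str = "node*.yaml",
                          needle: str = "127.0.0.1") -> List[Tuple[str, int, str]]:
    """Return (file name, line number, stripped line) for each line containing needle."""
    base = Path(config_dir)
    if not base.is_dir():
        return []
    hits: List[Tuple[str, int, str]] = []
    for path in sorted(base.glob(pattern)):
        for lineno, line in enumerate(path.read_text(encoding="utf-8").splitlines(), start=1):
            if needle in line:
                hits.append((path.name, lineno, line.strip()))
    return hits


if __name__ == "__main__":
    for name, lineno, text in find_loopback_entries("config"):
        print(f"config/{name}:{lineno}: {text}")
```

Each printed hit is a candidate to replace with the machine's IP; whether every hit should change depends on your deployment.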

Same error. I also get the same error when the three nodes jointly run an MPC logistic regression task, whether or not the address is changed.

```
root@ubuntu:/home/mash/Desktop/primihub# docker run --network=host -it primihub/primihub-node:latest ./primihub-cli --server="192.168.14.129:8050"
I20230420 21:01:27.604171 1 cli.cc:373] use tls: 0
I20230420 21:01:27.604892 1 cli.cc:383] SDK SubmitTask to: 192.168.14.129:8050
I20230420 21:01:27.606652 1 cli.cc:246] SubmitTask...
E20230420 21:01:47.608011 1 grpc_link_context.cc:222] submitTask to Node [defalut:192.168.14.129:8050:0:default] rpc failed. 14: failed to connect to all addresses
I20230420 21:01:47.608525 1 cli.cc:396] SubmitTask time cost(ms): 20002
```

Can you reach the port with telnet?
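The telnet test can be scripted with a plain TCP connect. A successful connect only proves something is listening on the port; it says nothing about proxy settings, which `telnet` ignores but gRPC may honour, so a passing telnet test and a failing `submitTask` can coexist. The function name is hypothetical; the ports in the example are the node ports mentioned in this thread.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds, like `telnet host port`."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, DNS errors and "No route to host".
        return False


if __name__ == "__main__":
    # node0..node2 gRPC ports as described in this thread.
    for host, port in [("127.0.0.1", 50050), ("127.0.0.1", 50051), ("127.0.0.1", 50052)]:
        print(f"{host}:{port} -> {'open' if port_open(host, port) else 'closed'}")
```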

Both `telnet 127.0.0.1` and `telnet [machine IP address]` connect.

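When this problem came up before, there were two likely causes: either the 127.0.0.1 in the config had to be changed to the machine's IP, or the machine had http_proxy set and removing it fixed things. Neither works for you, so it is hard to tell what the cause is. Which version of Ubuntu are you on?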

Ubuntu 20.04.6. I am now launching an MPC logistic regression task directly with docker-compose. Where do I set the common parameters for primihub-cli manually? I can't find --task_config_file.

```
root@ubuntu:/home/mash/Desktop/primihub# docker exec -it primihub-node0 bash
root@1ce1ed164c35:/app# ./primihub-cli --task_lang=proto --task_type=0 --task_code="logistic_regression" --params="BatchSize:INT32:0:128,NumIters:INT32:0:100,Data_File:STRING:0:train_party_0;train_party_1;train_party_2,modelName:STRING:0:/data/result/lr_mode.csv" --input_datasets="Data_File"
I20230421 23:19:30.795068 110 cli.cc:373] use tls: 0
I20230421 23:19:30.795435 110 cli.cc:383] SDK SubmitTask to: 127.0.0.1:50050
I20230421 23:19:30.797150 110 cli.cc:246] SubmitTask...
I20230421 23:19:33.859876 110 cli.cc:396] SubmitTask time cost(ms): 3063
root@1ce1ed164c35:/app# ./primihub-cli --task_config_file="example/mpc_lr_task_conf.json"
ERROR: Unknown command line flag 'task_config_file'
```

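We will look into the task_config_file issue; the documentation is a bit off there. These two commands are two different ways of submitting a task, and either one works. Your MPC task has already run successfully.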
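For the first submission style, the `--params` string appears to follow a simple pattern, judging by the commands in this thread: entries separated by `,`, each written as `NAME:TYPE:0:VALUE`, with list values joined by `;`. The sketch below rebuilds the thread's logistic regression parameters from that inferred pattern. The meaning of the third field is not explained in the thread, so the helper just passes `0` through; `build_params` is a hypothetical name, not part of the PrimiHub SDK.

```python
import shlex
from typing import Iterable, List, Sequence, Tuple, Union

Value = Union[str, int, Sequence[str]]


def build_params(items: Iterable[Tuple[str, str, Value]], third_field: str = "0") -> str:
    """Join (name, type, value) triples into a primihub-cli --params string.

    Pattern inferred from the commands in this thread, not from documentation:
    entries are comma-separated, each is NAME:TYPE:<third field>:VALUE, and
    list values are joined with ';'. The third field is passed through as-is.
    """
    parts: List[str] = []
    for name, type_name, value in items:
        if isinstance(value, (list, tuple)):
            value = ";".join(value)
        parts.append(f"{name}:{type_name}:{third_field}:{value}")
    return ",".join(parts)


if __name__ == "__main__":
    params = build_params([
        ("BatchSize", "INT32", 128),
        ("NumIters", "INT32", 100),
        ("Data_File", "STRING", ["train_party_0", "train_party_1", "train_party_2"]),
        ("modelName", "STRING", "/data/result/lr_mode.csv"),
    ])
    # shlex.quote keeps ';' from being treated as a shell command separator.
    print("./primihub-cli --task_lang=proto --task_type=0 "
          "--task_code=logistic_regression "
          f"--params={shlex.quote(params)} --input_datasets=Data_File")
```

Quoting matters because an unquoted `;` would end the shell command; the original commands get the same effect by wrapping `--params` in double quotes.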

Make sure you build from the latest version of the code; this flag was only added in the last few releases.

What is the latest version? I checked, and my copy was cloned on April 13.

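The flag is available starting from this commit: 5372c93.

Check whether src/primihub/cli/cli.cc in your source tree contains the following definition:

```cpp
ABSL_FLAG(std::string, task_config_file, "", "path of task config file");
```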
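To check a checkout without reading the file by eye, a regular expression over `ABSL_FLAG(...)` declarations is enough. This is a rough sketch that only handles the single-line form shown above, and the function names are hypothetical. For a prebuilt binary such as the one in the Docker image, Abseil-based programs normally print their flags with `--helpfull`, which is a quicker way to see whether `task_config_file` was compiled in.

```python
import re
from pathlib import Path
from typing import Set

# Matches single-line declarations such as
# ABSL_FLAG(std::string, task_config_file, "", "path of task config file");
FLAG_RE = re.compile(r"ABSL_FLAG\s*\(\s*[^,]+,\s*(\w+)\s*,")


def defined_flags(source: str) -> Set[str]:
    """Return the names of all ABSL_FLAG declarations found in C++ source text."""
    return set(FLAG_RE.findall(source))


def has_flag(path: str, flag: str = "task_config_file") -> bool:
    return flag in defined_flags(Path(path).read_text(encoding="utf-8"))


if __name__ == "__main__":
    src = Path("src/primihub/cli/cli.cc")
    if src.exists():
        print("task_config_file defined:", has_flag(str(src)))
    else:
        print(f"{src} not found; run this from the repository root")
```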

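I cloned commit 5372c93 again and built from scratch. Launching the MPC task via docker still gives the `ERROR: Unknown command line flag 'task_config_file'` error above, and launching it from the locally built source still gives the `E20230420 21:01:47.608011 1 grpc_link_context.cc:222] submitTask to Node [defalut:192.168.14.129:8050:0:default] rpc failed. 14: failed to connect to all addresses` error above, even though I also changed the local IP and disabled the proxy.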

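Running via docker has nothing to do with your local build. You need to update to the latest image: run `docker pull primihub/primihub-node`, stop the old containers with `docker-compose down`, then start them again with `docker-compose up -d`.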

Looking at the log, the iterations stopped at Epochs=79, and lr_mode.csv was not found:

```
root@20a1abc4e976:/app/log# cat primihub-node.ERROR
Log file created at: 2023/04/24 00:23:00
Running on machine: 20a1abc4e976
Running duration (h:mm:ss): 0:00:00
Log line format: [IWEF]yyyymmdd hh:mm:ss.uuuuuu threadid file:line] msg
E20230424 00:23:00.089043 47 csv_driver.cc:112] Open file /data/result/lr_mode.csv failed.
E20230424 00:23:00.089270 47 logistic.cc:574] Save LR model to file /data/result/lr_mode.csv failed.
```

Then I went on to start nodes 2 and 3, and both reported this error: `submitTask to Node [defalut:192.168.14.129:8050:0:default] rpc failed. 14: failed to connect to all addresses`.

I did stop the old containers and pulled the latest image before trying.

https://docs.primihub.com/docs/advance-usage/create-tasks/mpc-task

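Take a look at the documentation. The path where the result file is saved can be customized; the save above failed because the result directory does not exist. What do you mean by "then I went on to start nodes 2 and 3"?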

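I created the data folder, but lr_mode.csv still cannot be found, so the LR model cannot be saved.

I started the first node with docker and it came up successfully, but the primihub-node.ERROR log file in the log/ folder shows the following: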

```
root@20a1abc4e976:/app/log# cat primihub-node.ERROR
Log file created at: 2023/04/24 00:23:00
Running on machine: 20a1abc4e976
Running duration (h:mm:ss): 0:00:00
Log line format: [IWEF]yyyymmdd hh:mm:ss.uuuuuu threadid file:line] msg
E20230424 00:23:00.089043 47 csv_driver.cc:112] Open file /data/result/lr_mode.csv failed.
E20230424 00:23:00.089270 47 logistic.cc:574] Save LR model to file /data/result/lr_mode.csv failed.
E20230424 22:05:51.407390 138 redis_helper.cc:26] Connect to redis server failed, No route to host.
E20230424 22:05:51.407670 138 service.cc:501] Connect to redis server redis:6379failed.
E20230424 22:05:51.408107 93 parser.cc:121] no scheduler created to dispatch task
E20230424 22:09:30.862849 168 redis_helper.cc:26] Connect to redis server failed, No route to host.
E20230424 22:09:30.863210 168 service.cc:501] Connect to redis server redis:6379failed.
E20230424 22:09:30.863487 93 parser.cc:121] no scheduler created to dispatch task
E20230424 22:10:15.982421 184 redis_helper.cc:26] Connect to redis server failed, No route to host.
E20230424 22:10:15.982550 184 service.cc:501] Connect to redis server redis:6379failed.
E20230424 22:10:15.982697 96 parser.cc:121] no scheduler created to dispatch task
E20230424 22:11:46.030390 200 redis_helper.cc:26] Connect to redis server failed, No route to host.
E20230424 22:11:46.030558 200 service.cc:501] Connect to redis server redis:6379failed.
E20230424 22:11:46.030736 93 parser.cc:121] no scheduler created to dispatch task
E20230424 22:14:35.598970 209 redis_helper.cc:26] Connect to redis server failed, No route to host.
E20230424 22:14:35.599139 209 service.cc:501] Connect to redis server redis:6379failed.
E20230424 22:14:35.599509 96 parser.cc:121] no scheduler created to dispatch task
```

Then I looked at the log of the primihub-node0 container with `docker logs -f primihub-node0`:

```
I20230424 22:14:32.530588 96 node.cc:665] start to schedule task, task_type: 0
I20230424 22:14:32.530601 96 node.cc:1183]
I20230424 22:14:32.530615 96 node.cc:1187]
I20230424 22:14:32.530701 96 proto_parser.cc:48] dataset: train_party_0
I20230424 22:14:32.530733 96 proto_parser.cc:48] dataset: train_party_1
I20230424 22:14:32.530746 96 proto_parser.cc:48] dataset: train_party_2
I20230424 22:14:32.530758 96 proto_parser.cc:48] dataset: test_party_0
I20230424 22:14:32.530766 96 proto_parser.cc:48] dataset: test_party_1
I20230424 22:14:32.530773 96 proto_parser.cc:48] dataset: test_party_2
I20230424 22:14:32.530946 209 parser.cc:76]
I20230424 22:14:32.531008 209 redis_helper.cc:20] Redis server: host redis, port 6379.
E20230424 22:14:35.598970 209 redis_helper.cc:26] Connect to redis server failed, No route to host.
E20230424 22:14:35.599139 209 service.cc:501] Connect to redis server redis:6379failed.
E20230424 22:14:35.599509 96 parser.cc:121] no scheduler created to dispatch task
```

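Just start everything with the docker-compose.yaml file in the project; it includes redis. Starting a single node on its own with docker won't work, because every task needs multiple nodes to run.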
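The `No route to host` errors above mean the node could not even open a TCP connection to `redis:6379`. Docker resolves a service name such as `redis` only for containers attached to the same user-defined network, so a node started by hand outside that network is unlikely to reach it. The hypothetical diagnostic below sends Redis's inline `PING` command and expects `+PONG`. It needs Python inside the node container, which the image is not guaranteed to provide, and it reports False for a password-protected server.

```python
import socket


def redis_ping(host: str = "redis", port: int = 6379, timeout: float = 3.0) -> bool:
    """Send Redis's inline PING command and report whether the reply is +PONG."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall(b"PING\r\n")
            reply = sock.recv(64)
    except OSError:
        # Name resolution failure, "No route to host", refusal or timeout.
        return False
    # A password-protected server answers -NOAUTH instead, counted as False here.
    return reply.startswith(b"+PONG")
```

Calling `redis_ping()` from inside a node container started by docker-compose should return True; a False there points at the network setup rather than at PrimiHub itself.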

Take another look at the docker-compose startup method in the documentation.

Stop everything you have started so far.

Got it. Node 0 started successfully, but nodes 1 and 2 still report the same error below.

```
root@284e36e672a8:/app# ./primihub-cli --task_lang=proto --task_type=0 --task_code="logistic_regression" --params="BatchSize:INT32:0:128,NumIters:INT32:0:100,Data_File:STRING:0:train_party_0;train_party_1;train_party_2,modelName:STRING:0:/data/result/lr_mode.csv" --input_datasets="Data_File"
I20230424 23:57:09.724027 46 cli.cc:468] use tls: 0
I20230424 23:57:09.724349 46 cli.cc:478] SDK SubmitTask to: 127.0.0.1:50050
I20230424 23:57:09.726305 46 cli.cc:382] SubmitTask...
E20230424 23:57:09.727294 46 grpc_link_context.cc:228] submitTask to Node [defalut:127.0.0.1:50050:0:default] rpc failed. 14: failed to connect to all addresses
```

Do you mean you get this error when running the command inside the node1 and node2 containers?

You only need to run the task in the node0 container. The ports of node1 and node2 are 50051 and 50052.

But according to node0's log, /data/result/lr_mode.csv was not found.

Run the task in the node0 container, run `docker logs -f primihub-node0` in another terminal, and paste the log here.

```
I20230424 23:54:07.436573 40 node.cc:854] start to create worker for task: job_id : 100 task_id: 200 request id: 84ca9770-2747-4fe5-a978-a38667b26226
I20230424 23:54:07.436586 40 node.cc:1183]
I20230424 23:54:07.436601 40 node.cc:1187]
I20230424 23:54:07.436733 40 node.cc:865] create worker thread future for task: job_id : 100 task_id: 200 request id: 84ca9770-2747-4fe5-a978-a38667b26226 finished
I20230424 23:54:07.436873 46 node.cc:831] begin to execute task
I20230424 23:54:07.439937 46 logistic.cc:108] 4
I20230424 23:54:07.440155 46 logistic.cc:127] Note party id of this node is 0.
I20230424 23:54:07.440519 46 worker.cc:54]
I20230424 23:54:07.440596 46 logistic.cc:180] Train data train_party_0, test data .
I20230424 23:54:07.440768 40 node.cc:882] create worker thread for task: job_id : 100 task_id: 200 request id: 84ca9770-2747-4fe5-a978-a38667b26226 finished
I20230424 23:54:07.448966 46 logistic.cc:300] [Next] Init server session, party 0, ip 172.28.1.10, port 12120, name sess_0_1.
I20230424 23:54:07.449196 46 logistic.cc:306] [Prev] Init server session, party 0, ip 172.28.1.10, port 12120, name sess_0_2.
I20230424 23:54:07.456758 46 runtime.h:44] Init Runtime : 0, 0x7f21f4014680
I20230424 23:54:07.480000 46 logistic.cc:382] Init party: 0 communication finish.
I20230424 23:54:07.531560 46 logistic.cc:521] Train dataset has 558 examples, dimension of each is 10.
I20230424 23:54:07.531641 46 logistic.cc:524] Test dataset has 141 examples, dimension of each is 10.
I20230424 23:54:07.531927 46 logistic.cc:62] (Learning rate):0.015625.
I20230424 23:54:07.532014 46 logistic.cc:63] (Epoch):100.
I20230424 23:54:07.532033 46 logistic.cc:64] (Batchsize) :128.
I20230424 23:54:07.532042 46 logistic.cc:65] (Train_loader size):4.
I20230424 23:54:07.532048 46 logistic.cc:68] Epochs : ( 0/100 )
I20230424 23:54:07.744144 46 logistic.cc:68] Epochs : ( 1/100 )
I20230424 23:54:07.962257 46 logistic.cc:68] Epochs : ( 2/100 )
I20230424 23:54:08.179220 46 logistic.cc:68] Epochs : ( 3/100 )
I20230424 23:54:08.393626 46 logistic.cc:68] Epochs : ( 4/100 )
I20230424 23:54:08.610514 46 logistic.cc:68] Epochs : ( 5/100 )
I20230424 23:54:08.833190 46 logistic.cc:68] Epochs : ( 6/100 )
I20230424 23:54:09.053086 46 logistic.cc:68] Epochs : ( 7/100 )
I20230424 23:54:09.265569 46 logistic.cc:68] Epochs : ( 8/100 )
I20230424 23:54:09.482378 46 logistic.cc:68] Epochs : ( 9/100 )
I20230424 23:54:09.698501 46 logistic.cc:68] Epochs : ( 10/100 )
I20230424 23:54:09.917053 46 logistic.cc:68] Epochs : ( 11/100 )
I20230424 23:54:10.133777 46 logistic.cc:68] Epochs : ( 12/100 )
I20230424 23:54:10.352149 46 logistic.cc:68] Epochs : ( 13/100 )
I20230424 23:54:10.566813 46 logistic.cc:68] Epochs : ( 14/100 )
I20230424 23:54:10.784725 46 logistic.cc:68] Epochs : ( 15/100 )
I20230424 23:54:11.005990 46 logistic.cc:68] Epochs : ( 16/100 )
I20230424 23:54:11.223676 46 logistic.cc:68] Epochs : ( 17/100 )
I20230424 23:54:11.441133 46 logistic.cc:68] Epochs : ( 18/100 )
I20230424 23:54:11.658236 46 logistic.cc:68] Epochs : ( 19/100 )
I20230424 23:54:11.878443 46 logistic.cc:68] Epochs : ( 20/100 )
I20230424 23:54:12.092494 46 logistic.cc:68] Epochs : ( 21/100 )
I20230424 23:54:12.309180 46 logistic.cc:68] Epochs : ( 22/100 )
I20230424 23:54:12.525719 46 logistic.cc:68] Epochs : ( 23/100 )
I20230424 23:54:12.744263 46 logistic.cc:68] Epochs : ( 24/100 )
I20230424 23:54:12.960798 46 logistic.cc:68] Epochs : ( 25/100 )
I20230424 23:54:13.172833 46 logistic.cc:68] Epochs : ( 26/100 )
I20230424 23:54:13.385586 46 logistic.cc:68] Epochs : ( 27/100 )
I20230424 23:54:13.601778 46 logistic.cc:68] Epochs : ( 28/100 )
I20230424 23:54:13.817140 46 logistic.cc:68] Epochs : ( 29/100 )
I20230424 23:54:14.033438 46 logistic.cc:68] Epochs : ( 30/100 )
I20230424 23:54:14.255892 46 logistic.cc:68] Epochs : ( 31/100 )
I20230424 23:54:14.474695 46 logistic.cc:68] Epochs : ( 32/100 )
I20230424 23:54:14.695394 46 logistic.cc:68] Epochs : ( 33/100 )
I20230424 23:54:14.916321 46 logistic.cc:68] Epochs : ( 34/100 )
I20230424 23:54:15.133008 46 logistic.cc:68] Epochs : ( 35/100 )
I20230424 23:54:15.345700 46 logistic.cc:68] Epochs : ( 36/100 )
I20230424 23:54:15.567945 46 logistic.cc:68] Epochs : ( 37/100 )
I20230424 23:54:15.782415 46 logistic.cc:68] Epochs : ( 38/100 )
I20230424 23:54:16.000154 46 logistic.cc:68] Epochs : ( 39/100 )
I20230424 23:54:16.214078 46 logistic.cc:68] Epochs : ( 40/100 )
I20230424 23:54:16.427541 46 logistic.cc:68] Epochs : ( 41/100 )
I20230424 23:54:16.640097 46 logistic.cc:68] Epochs : ( 42/100 )
I20230424 23:54:16.860771 46 logistic.cc:68] Epochs : ( 43/100 )
I20230424 23:54:17.076203 46 logistic.cc:68] Epochs : ( 44/100 )
I20230424 23:54:17.299577 46 logistic.cc:68] Epochs : ( 45/100 )
I20230424 23:54:17.516425 46 logistic.cc:68] Epochs : ( 46/100 )
I20230424 23:54:17.730170 46 logistic.cc:68] Epochs : ( 47/100 )
I20230424 23:54:17.945080 46 logistic.cc:68] Epochs : ( 48/100 )
I20230424 23:54:18.159049 46 logistic.cc:68] Epochs : ( 49/100 )
I20230424 23:54:18.375844 46 logistic.cc:68] Epochs : ( 50/100 )
I20230424 23:54:18.590790 46 logistic.cc:68] Epochs : ( 51/100 )
I20230424 23:54:18.810873 46 logistic.cc:68] Epochs : ( 52/100 )
I20230424 23:54:19.033954 46 logistic.cc:68] Epochs : ( 53/100 )
I20230424 23:54:19.247550 46 logistic.cc:68] Epochs : ( 54/100 )
I20230424 23:54:19.467563 46 logistic.cc:68] Epochs : ( 55/100 )
I20230424 23:54:19.684513 46 logistic.cc:68] Epochs : ( 56/100 )
I20230424 23:54:19.905318 46 logistic.cc:68] Epochs : ( 57/100 )
I20230424 23:54:20.122334 46 logistic.cc:68] Epochs : ( 58/100 )
I20230424 23:54:20.337607 46 logistic.cc:68] Epochs : ( 59/100 )
I20230424 23:54:20.553450 46 logistic.cc:68] Epochs : ( 60/100 )
I20230424 23:54:20.771946 46 logistic.cc:68] Epochs : ( 61/100 )
I20230424 23:54:20.987932 46 logistic.cc:68] Epochs : ( 62/100 )
I20230424 23:54:21.210723 46 logistic.cc:68] Epochs : ( 63/100 )
I20230424 23:54:21.427621 46 logistic.cc:68] Epochs : ( 64/100 )
I20230424 23:54:21.646024 46 logistic.cc:68] Epochs : ( 65/100 )
I20230424 23:54:21.863533 46 logistic.cc:68] Epochs : ( 66/100 )
I20230424 23:54:22.078302 46 logistic.cc:68] Epochs : ( 67/100 )
I20230424 23:54:22.295083 46 logistic.cc:68] Epochs : ( 68/100 )
I20230424 23:54:22.509812 46 logistic.cc:68] Epochs : ( 69/100 )
I20230424 23:54:22.721182 46 logistic.cc:68] Epochs : ( 70/100 )
I20230424 23:54:22.938562 46 logistic.cc:68] Epochs : ( 71/100 )
I20230424 23:54:23.155169 46 logistic.cc:68] Epochs : ( 72/100 )
I20230424 23:54:23.372893 46 logistic.cc:68] Epochs : ( 73/100 )
I20230424 23:54:23.592212 46 logistic.cc:68] Epochs : ( 74/100 )
I20230424 23:54:23.814303 46 logistic.cc:68] Epochs : ( 75/100 )
I20230424 23:54:24.033784 46 logistic.cc:68] Epochs : ( 76/100 )
I20230424 23:54:24.256633 46 logistic.cc:68] Epochs : ( 77/100 )
I20230424 23:54:24.472149 46 logistic.cc:68] Epochs : ( 78/100 )
I20230424 23:54:24.686939 46 logistic.cc:68] Epochs : ( 79/100 )
I20230424 23:54:24.908722 46 logistic.cc:68] Epochs : ( 80/100 )
I20230424 23:54:25.126327 46 logistic.cc:68] Epochs : ( 81/100 )
I20230424 23:54:25.342397 46 logistic.cc:68] Epochs : ( 82/100 )
I20230424 23:54:25.555570 46 logistic.cc:68] Epochs : ( 83/100 )
I20230424 23:54:25.769846 46 logistic.cc:68] Epochs : ( 84/100 )
I20230424 23:54:25.986382 46 logistic.cc:68] Epochs : ( 85/100 )
I20230424 23:54:26.204106 46 logistic.cc:68] Epochs : ( 86/100 )
I20230424 23:54:26.420639 46 logistic.cc:68] Epochs : ( 87/100 )
I20230424 23:54:26.636018 46 logistic.cc:68] Epochs : ( 88/100 )
I20230424 23:54:26.853946 46 logistic.cc:68] Epochs : ( 89/100 )
I20230424 23:54:27.069939 46 logistic.cc:68] Epochs : ( 90/100 )
I20230424 23:54:27.293044 46 logistic.cc:68] Epochs : ( 91/100 )
I20230424 23:54:27.509610 46 logistic.cc:68] Epochs : ( 92/100 )
I20230424 23:54:27.730319 46 logistic.cc:68] Epochs : ( 93/100 )
I20230424 23:54:27.947926 46 logistic.cc:68] Epochs : ( 94/100 )
I20230424 23:54:28.161187 46 logistic.cc:68] Epochs : ( 95/100 )
I20230424 23:54:28.374837 46 logistic.cc:68] Epochs : ( 96/100 )
I20230424 23:54:28.590337 46 logistic.cc:68] Epochs : ( 97/100 )
I20230424 23:54:28.803864 46 logistic.cc:68] Epochs : ( 98/100 )
I20230424 23:54:29.017604 46 logistic.cc:68] Epochs : ( 99/100 )
I20230424 23:54:29.235186 46 logistic.cc:548] Party 0 train finish.
E20230424 23:54:29.236776 46 csv_driver.cc:112] Open file /data/result/lr_mode.csv failed.
E20230424 23:54:29.236980 46 logistic.cc:574] Save LR model to file /data/result/lr_mode.csv failed.
```
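The log above counts epochs from 0 to 99 and then prints `Party 0 train finish.`, so in this run training completed and only the final save failed. A small parser can confirm that from a saved log instead of scrolling through it. This is a hypothetical helper whose regex is written against the `Epochs : ( N/100 )` lines shown here; it may not match other log formats.

```python
import re
from typing import Iterable, Optional, Tuple

# Matches progress lines such as "logistic.cc:68] Epochs : ( 79/100 )".
EPOCH_RE = re.compile(r"Epochs : \( (\d+)/(\d+) \)")


def training_progress(lines: Iterable[str]) -> Tuple[Optional[int], Optional[int], bool]:
    """Return (last epoch index logged, total epochs, whether 'train finish' appeared)."""
    last: Optional[int] = None
    total: Optional[int] = None
    finished = False
    for line in lines:
        match = EPOCH_RE.search(line)
        if match:
            last, total = int(match.group(1)), int(match.group(2))
        if "train finish" in line:
            finished = True
    return last, total, finished
```

For example, save the output of `docker logs primihub-node0` to a file and pass the open file to `training_progress`; a result of `(99, 100, True)` means all 100 epochs (indices 0 to 99) ran.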

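Run `mkdir /data/result -p` in the node0 container and then run the task again. As I said before, the directory is missing. It is mounted outside the container, at data/result under the project directory.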
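If tasks are submitted from a script, the same fix can be applied before each run by creating the model file's parent directory, the Python equivalent of `mkdir -p`. This is a hypothetical helper. It has to run where the node writes the file (inside the node0 container, or against the corresponding directory on the host mount), and the path is the `modelName` value from the `--params` string.

```python
import os


def ensure_parent_dir(file_path: str) -> str:
    """Create the directory that will contain file_path (like `mkdir -p`) and return it."""
    parent = os.path.dirname(os.path.abspath(file_path))
    # exist_ok=True makes repeated calls harmless, matching `mkdir -p`.
    os.makedirs(parent, exist_ok=True)
    return parent
```

For the task in this thread that would be `ensure_parent_dir("/data/result/lr_mode.csv")`, which creates `/data/result` if it does not exist yet.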

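It worked. So with docker, pulling the image, creating the directory, and starting the task all have to be done together. I hadn't realized the result directory was mounted outside the container, and after the first MPC task failed, rerunning it didn't succeed either. Thanks a lot!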
