Comments (55)

And when I get around to implementing #391 an obsolete as well as horrible hack

from kitty.

No not yet, I will close this bug when it is implemented. I'm rather busy with other things at the moment.

from kitty.

w3m does not use the graphics protocol. It uses an X11-specific, fragile hack that involves drawing a separate X11 window over the terminal window to display the image. I'm not surprised that does not work with an OpenGL-based terminal.

from kitty.

Tried with lsix but only characters came out.

from kitty.

In that case neither am I ;-) Are there any apps that do use your protocol?

from kitty.

Since the protocol spec has not even been finished yet, no. You can currently use the icat.py script included in kitty to cat images in the terminal, but that's about it.

from kitty.


I really love this idea.

So to put this into my own words:

A program asks kitty about the height and width of a character,

then it chops the image to display into fitting pieces and sends them piece by piece to kitty,

finally kitty draws these pixel-characters at the current cursor position.

Assuming I got it right, the first issue that comes to my mind would be the drawing at cursor position, some applications like ranger show some image preview like this.

So setting a position to render to could be useful.

But I have no clue how this ncurses shell rendering works, so maybe it's not an issue.

Next thought:

Define a header after the escape code which is extensible something like this:

<ESC>r x=100,y=100,format=ARGB\n

My main point here is to use named arguments so it's easy to add new arguments while older versions still work because they ignore unknown args.
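To make the forward-compatibility argument concrete, here is a minimal sketch of such a header parser. The key names (`x`, `y`, `format`) come from the example line above; the `KNOWN_KEYS` table and the function name are made up for illustration and are not part of any spec.

```python
# Hypothetical table of keys this (imaginary) terminal version understands.
KNOWN_KEYS = {"x": int, "y": int, "format": str}


def parse_header(header: str) -> dict:
    """Parse 'x=100,y=100,format=ARGB' and silently skip unknown keys."""
    parsed = {}
    for field in header.strip().split(","):
        key, sep, value = field.partition("=")
        if not sep or key not in KNOWN_KEYS:
            continue  # unknown or malformed field: ignore, do not fail
        try:
            parsed[key] = KNOWN_KEYS[key](value)
        except ValueError:
            continue  # e.g. x=abc: treat like an unknown field
    return parsed


if __name__ == "__main__":
    # 'zindex' is unknown to this version and is simply dropped.
    print(parse_header("x=100,y=100,zindex=4,format=ARGB\n"))
```

An older terminal running this parser keeps working when a newer client starts sending extra keys, which is the whole point of named arguments.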

Next:

Wouldn't it be possible to render whole images?

I guess it would not integrate into your char grid as nicely, but on the other hand simplify the creation of pixel data that could be displayed.

Also drawing pixelchar by pixelchar could lead to funny effects when the drawing or data producing is too slow, especially for videos or gifs.

from kitty.

Yes, there will be the possibility to specify formats for image data, probably something like:

f=1, f=8, f=24 or f=32 (for 1-bit, 8-bit, 24-bit RGB and 32-bit RGBA). Other formats such as compressed image data can also be added later.

And yes you can specify whole images at a time, they will simply span multiple cells, something like this:

<ESC code>f=24,x=2,y=3,w=500,h=300;image data

Then the terminal will render the 500x300 pixels image starting at position (2, 3) from the top of the current cell.

from kitty.

I have created a spec for the protocol here: https://github.com/kovidgoyal/kitty/blob/master/protocol-extensions.asciidoc#graphics-rendering

from kitty.

What were the issues/limitations you saw with sixel? Implementing that protocol would allow you to take advantage of projects that already support sixel graphics (including a replacement for w3mimgdisplay, allowing images to work in w3m and ranger) rather than adding support in those applications afterwards.

Would you consider adding sixel support in addition to this new graphics rendering protocol?

from kitty.

IIRC (and please correct me if I am wrong)

- sixel supports only 256 colors

- it has no way to optimize transfers in the case when the client program and the terminal emulator are running on the same computer and have access to a common filesystem/shared memory

- This may just be my fault, but I could not find a clear description of how it works when interspersed with text -- so, how does the client know what size (number of pixels) of images to send and how does it control their placement? What happens in a full screen application that wants to display an image and then overwrite it with text, and so on?

- There is no facility for persistence. Think of the case of displaying an animated GIF, for example. With the protocol I describe above the client has to send each frame to the terminal emulator only once. With sixels it has to be done repeatedly.

- A minor issue: The sixel encoding is IMO unnecessarily complex.

from kitty.

As for adding sixel support -- I have nothing against it, but, practically speaking, since I don't have any need for it either, it will not get done unless someone else contributes. However, if and when I implement some variation of this protocol in kitty, adding support for additional legacy schemes should be fairly easy to do on top of that. Basically all that has to be done is implement the parsing for the escape codes and then map the result onto this scheme (which is a strict superset of all the legacy schemes).

from kitty.

- Sixel only supports a 256 color palette, but it can be cycled. 24-bit color is technically possible, but the point still stands though, because libsixel itself only supports up to 15-bit color when encoding, though there's an open issue for 24-bit color support.

- Fair point, though I'd expect performance to be a lesser concern because you'd probably stop displaying in a terminal after a certain point.

- I'd expect that it acts like normal characters do, using escape sequences to reposition for animations. Text and graphics probably cannot overlap. I'm reasonably confident that's how it works, but I haven't read a real description myself.

- While interesting, I would expect such a feature at the toolkit level, and reuse would be something only gifs would use because the buffer would need to be very large otherwise.

- Probably true.

As an aside, the palette format and low color support of sixels might be a good thing when dealing with remote files if all someone wants is a preview. A 3.1 MB 2560x1600 jpeg encoded as a 15-bit sixel image can be around 11 MB, while with only 256 colors it is 4.5 MB, and base64 encoded it is 4.1 MB. After a certain point, it may be better to get the original image from the source and then display it because of how much data you'd be dealing with.

from kitty.

- My motivation for implementing graphics support at all is to port https://github.com/kovidgoyal/iv to run in a terminal. This can display full screens worth of image thumbnails, that can be animated. Performance, especially over a network is a definite concern.

- How does the client know:

- How many pixels to send to cover an mxn block of character cells. i.e. how many pixels is a single character cell?

- What happens if the client sends more or less pixels than the number of cells available?

- Is there a specification for how the cursor moves in case of overflow/underflow of pixels?

- I don't see how this can be implemented in a toolkit. In the general case, when running over a network, there is no way for the client program to implement persistence server side without co-operation from the terminal emulator. Also, it is not only for GIFs. Another application is sprite maps. If an application wants to use special characters/symbols in its rendering it can generate a sprite map of them and send it to the terminal emulator once, and then simply refer to them in the future, instead of resending the individual sprite data at each occurrence.

2 and 4 are designed precisely to work around the fact that images are very large as raw data. And it is trivial to add an indexed, 8-bit format to the new protocol if desired.

from kitty.

- Fair enough.

- ranger uses termios to get the size of a cell. With sixel, anything going past the horizontal limits is cut off. Everything past the vertical limit scrolls, just like with text.

- Having fully read your specification, the display of images is still completely controlled by the application and not handed off to the terminal in any way, so my concern here was unfounded.

Extending that last point, if the goal is remote image display, what you've got is probably the right way to do it. Locally though, w3m on X (when you're not using the sixel support) is drawing directly onto the terminal window, not having images decoded and drawn by the terminal at all. That route certainly has better performance, but is limited to applications running locally and is not display server agnostic.

Thanks for the discussion. I had only briefly looked at the sixel protocol before and now I know more about it and why you've determined it is not an ideal solution.

from kitty.

Interesting, I did not realize one could get cell width and height, and not just the number of cells per row/number of cells per column, using termios. At least according to http://man7.org/linux/man-pages/man4/tty_ioctl.4.html the ws_xpixel and ws_ypixel values are unused in the Linux console. I assume they are actually made use of in some X terminals. I should probably implement that in kitty. And once implemented remove the extra escape code to get cell size from this protocol, since it is not needed. Thanks, good to know.

On some investigation -- libvte based terminals and konsole both return 0 for those values. xterm returns the window size.
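For reference, a minimal sketch of how a client could read those values from Python on a Unix system. The helper names are made up for illustration; the zero check is there because, as noted above, some terminals report 0 for the pixel fields.

```python
import fcntl
import struct
import sys
import termios


def cell_size(rows, cols, xpixel, ypixel):
    """Return (cell_width, cell_height) in pixels, or None if not reported."""
    if rows <= 0 or cols <= 0 or xpixel <= 0 or ypixel <= 0:
        return None  # terminal left ws_xpixel/ws_ypixel unset
    return xpixel // cols, ypixel // rows


def query_winsize(fd=None):
    """Read struct winsize: (ws_row, ws_col, ws_xpixel, ws_ypixel)."""
    if fd is None:
        fd = sys.stdout.fileno()
    # struct winsize is four unsigned shorts, so 8 bytes.
    buf = fcntl.ioctl(fd, termios.TIOCGWINSZ, b"\0" * 8)
    return struct.unpack("HHHH", buf)


if __name__ == "__main__":
    try:
        rows, cols, xpixel, ypixel = query_winsize()
    except OSError:
        print("stdout is not a terminal")
    else:
        print(cell_size(rows, cols, xpixel, ypixel))
```

A client that gets `None` back would need some other way to learn the cell size, such as an escape code query.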

from kitty.

Is the spec implemented in master yet? I just tried it with no effect and am trying to determine whether it is user error.

from kitty.

OK, so some opinions about graphics rendering;

I think this could be simplified by making some assumptions;

- Reading PNG files is very easy to implement using libpng, lodepng, stb_image.h etc, so I think it's OK to send image data as PNG. It is also much more efficient for bandwidth.

- When you run locally, the speed loss of reading a PNG as a base64 text stream is not going to matter that much. It will especially not matter if you optimize for ssh usage anyway; since then local is so much faster already -- why would you need a complex shared memory solution for making it even faster?

So now we have a simpler case where the client sends image data as base64 encoded PNG:s, binding them to image ids, and then uses that id to render the image on screen.

Otherwise I think it's great.

from kitty.

Yeah but on the other hand the savings from PNG are not that large for arbitrary images (i.e. images with lots of colors). And then you have baked in dependence on a particular image format forever in the protocol. I don't think that's a good idea, and if we were going to do that, then why not opt for a more modern lossless format with better compression?

Shared memory is a performance optimization, particularly for displaying animated images. But it's optional, if you don't want to implement it, simply have your emulator reply that it does not support it when queried.

from kitty.

Just a heads up, I am starting work on implementing this in kitty. As I implement it, there will probably be changes/additions to the protocol, informed by the implementation. One change, as requested by sasq64, is the addition of a z-index so that graphics can be rendered below or above text and also alpha blended with each other.

from kitty.

I thought about this again and maybe you're right that png shouldn't be used for a generic protocol. Better to have optional support for generic zlib/deflate compression for any resource sent from the application.

from kitty.

Is that really needed though? Doesn't ssh already compress data? Double compression would just waste CPU and probably yield slightly larger data sizes because of zlib headers.
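This is easy to measure rather than argue about. A minimal sketch, using Python's zlib as a stand-in for both the application-level and the transport-level compressor (an assumption: ssh's real compressor settings may differ), and synthetic data rather than a real image:

```python
import random
import zlib

# Synthetic, compressible input: 20000 bytes drawn from a 4-letter alphabet.
rng = random.Random(0)
raw = bytes(rng.choice(b"abcd") for _ in range(20000))

once = zlib.compress(raw)    # application-level compression
twice = zlib.compress(once)  # a second layer, e.g. a compressing transport

# Print the three sizes so the effect of the second pass can be inspected.
print("raw:", len(raw), "once:", len(once), "twice:", len(twice))

# Both layers are lossless, so the data still round-trips.
assert zlib.decompress(zlib.decompress(twice)) == raw
```

The same script can be pointed at real pixel data to see how the numbers change for actual images.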

from kitty.

Compression is not on by default and not recommended unless in certain very low bandwidth situations afaik

from kitty.

It's not on by default because it actually slows things down on fast networks, which is also true in the case of compression of image data. I can't think of a scenario where it makes sense to turn on compression for transmission of image data, but not for the ssh connection in general.

from kitty.

Because you typically pre-compress resources for an application, so it will have no cost for the remote side, and decompression (on the local side) is much faster than compression.

Also gzip compression (like ssh uses) depends on enough data to scan for patterns and will have to become very laggy to achieve good compression.

from kitty.

Certainly for application assets that is the case, but surely the main cost will be displaying actual image content not assets, which cannot be pre-compressed. Even in the case of application assets, it is unlikely that the assets will be both stored and displayed in the exact same resolution, so chances are they will need to be compressed at least once on the client. For example, the most common asset class --- application icons -- are typically stored in the application bundle in a single resolution and then resized on the fly depending on runtime conditions.

I'm not familiar with how ssh does compression, but surely it only compresses data if there is a significant amount of it ready to send. At least, I have not noticed increased latency with compression over ssh on slow connections.

But let's not get into the weeds about this. It is a minor thing that can always be added later, by simply adding a compression field to the escape code metadata. Something like c=gzip. Once we have a working viable implementation, we can do some benchmarking to see what the effects of compression actually are in actual usage scenarios and decide if it makes sense to add.

from kitty.

Yes I think we imagine different scenarios of use. You mentioned that your primary goal was an image viewer which means large, uncompressed image data. I imagine it more for extending existing applications with graphics features, or porting graphics only applications to the terminal.

Either way, there needs to be a way to ask the terminal about these features. I suggest extending the normal Device Attributes command (which already can report features such as sixel graphics) with attributes related to this feature.

so sending "CSI c" should answer something like

CSI ? 64 ; 1 ; 4 ; 101 ; 102 ...

if we assume that we put new attributes on 100 and over.

The main benefit of extending an existing command is that the application knows it is getting a known format answer back, even on non supporting terminals.

Some suggested attributes:

- Can use graphics

- Can use shared memory

- Can handle Z compressed resources
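A minimal sketch of what the client side of this suggestion could look like. The attribute numbers 101 to 103 and their meanings are purely hypothetical, taken from the "100 and over" idea above; no terminal is known to report them.

```python
import re

# Primary Device Attributes reply: ESC [ ? Ps ; Ps ; ... c
DA_REPLY = re.compile(rb"\x1b\[\?([0-9;]*)c")

# Hypothetical attribute numbers for the three suggested features.
HYPOTHETICAL_ATTRS = {
    101: "graphics",
    102: "shared memory",
    103: "zlib-compressed resources",
}


def parse_da(reply: bytes) -> set:
    """Return the set of numeric attributes in a DA reply (empty if none)."""
    match = DA_REPLY.search(reply)
    if match is None:
        return set()
    return {int(part) for part in match.group(1).split(b";") if part}


def graphics_features(reply: bytes) -> list:
    """Names of the hypothetical graphics attributes present in the reply."""
    return sorted(HYPOTHETICAL_ATTRS[a] for a in parse_da(reply)
                  if a in HYPOTHETICAL_ATTRS)


if __name__ == "__main__":
    print(graphics_features(b"\x1b[?64;1;4;101;102c"))
```

A terminal that does not know the new attributes simply omits them, so the same parser yields an empty list there.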

from kitty.

There is no need for separate queries. The graphics protocol itself allows querying, see the q field in the command metadata. The client application simply sends dummy images with all the attributes it wants to test and the terminal replies with whether reading the images was successful or not.

from kitty.

It might make sense to add a DECRQM code for testing for the existence of graphics support itself, but only if there are widespread client programs that actually have trouble with interpreting APC codes.

from kitty.

DECRQM seems to be to check if a certain mode is on or off, not for checking features...

But good, details can be checked using the query commands so we only need one attribute. I still think Device Attributes is the place for it.

Regarding compression: how about allowing more formats in the "f=" key, i.e. a suffix for the type, where "z" means z-compressed? I could also imagine other bit depths being supported besides 24 and 32 -- for mono colored decoration it would really make sense with 1-bit data for instance. Also compressed textures would speed up things a lot in certain scenarios.

In short; basic formats are "32" and "24", but the terminal can optionally support things like "8", "32z" or "32PVRTC" ?

from kitty.

The reason I prefer DECRQM is because DA is used by lots of legacy terminals for lots and lots of things. The namespace in DECRQM is "cleaner".

To simplify parsing I am only allowing integers and single chars as values for keys. So f can only be an integer value. Makes more sense to have compression orthogonal to it, via a separate key.

As for mono formats, what's the use case? Remember that you can fill a region of the screen with a solid color using simple background colors in cells.

from kitty.

Oh and FYI I am developing this in the gr branch. I will make updates to the protocol-extensions document as well in that branch as the implementation matures.

from kitty.

A terminal is usually mono color. For fancy command prompts, headings, separators, custom font characters etc it would be handy with monochrome graphics that are drawn using the foreground color.

But as your query system allows you to check for that kind of support you don't need to implement it.

Personally I think that 1,4,8,16,24 & 32 bits are all valid options for those who want to implement them.

from kitty.

Makes sense, that is a use case I did not consider. Although, at least the way I am planning to implement it, using graphics support to draw characters will have poor performance. This is because each individual graphic will require a separate draw call. Not to mention the overhead of tracking when the graphics need to be released, moved to the history buf, etc.

So I am OK with adding f=8 to the spec, color being taken from current foreground color. The image being an alpha mask. I don't see why you'd want 1 and 4 though. I don't think the transmission space savings would be that significant.

from kitty.

I added both zlib based compression and PNG (the latter because it is an efficient way to send indexed images). Since PNG is supported there is no need for f=8 (it can just be sent as indexed PNG instead).

from kitty.

The documentation for the protocol has moved to the file graphics-protocol.asciidoc in the gr branch. I have finished implementing loading of image data. The remaining tasks are mentioned in the first post.

from kitty.

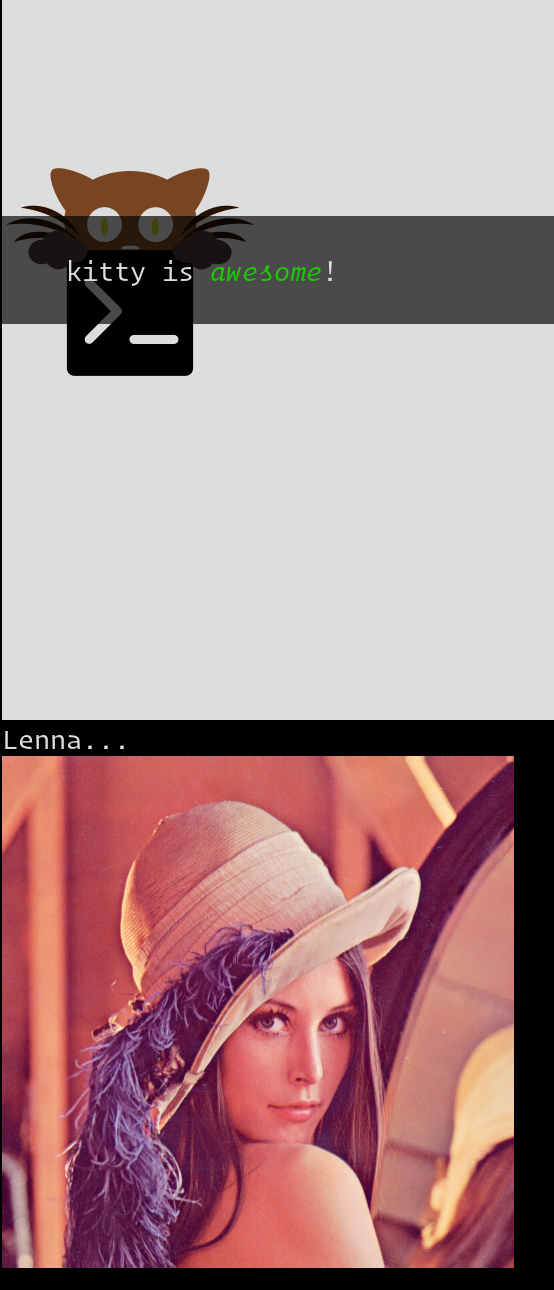

Displaying of images is done. See attached screen shot, which shows alpha blending of images as well as rendering text on top of images. Also shows a simple 200 line script with which you can implement an image-cat utility (see https://github.com/kovidgoyal/kitty/blob/gr/kitty/icat.py)

The above was rendered in kitty using the following simple fifty line script:

https://github.com/kovidgoyal/kitty/blob/gr/kitty_tests/gr.py

from kitty.

I'd like some opinions on the best way to handle screen resize/font size changes. Should images be scaled proportionately? Size preserved? Position adjusted to be on screen?

from kitty.

Image rendering is partly broken on my installation. Images are not visible, except when I scroll quickly, then they will briefly flicker into view. Should I open an issue on that or do you know about it?

from kitty.

How are you displaying the images? And what system is this?

from kitty.

Eg. in w3m in a Google Images search. It works as expected in xterm, but as described in kitty. BTW: I’m on ArchLinux on a laptop with Intel graphics.

from kitty.

The iTerm2 implementation is very useful when working through ssh. Do we have anything similar that can do [escape sequence + encoded image] -> image rendered in kitty?

from kitty.

Umm kitty's graphics protocol is far more powerful than iTerm2's and supports working over ssh just fine.

from kitty.

Since lsix uses the inferior sixel protocol for images, that is hardly surprising. As I've said before, I have no interest in implementing an inferior imaging solution in kitty, but patches are welcome.

from kitty.

@kovidgoyal Thanks for your swift reply - I'll try to convince the lsix maintainer to perhaps look into other options, perhaps there's an approach both projects can bridge to each other.

Understand your rationale, though.

from kitty.

commented on May 14, 2024


Hello, I have a terminal widget library here that has support for images in both its terminal widget (parsing sixel) and its terminal and Swing backends (rendering to sixel or images). I am curious about adding Kitty image support to my terminal widget.

Main question: Is the Kitty image protocol specification here considered complete? Is it at a particular version number now?

Next issue: My terminal treats image data as just "image stuff in an otherwise normal text cell". I.e. I do not have image IDs, z order, or storage. This has the nice property that all other VT100/Xterm sequences behave as one would expect. One could for example display image, and then delete text cells in the middle of the image, and parts of the image would move over just as text would. But I am not keen on the idea of an application ordering its terminal to do image stacking, clipping, etc. -- there is very little chance xterm would ever support that based on how its sixel images work. How am I supposed to tell a Kitty-supporting application which subset of functions I do support? Or is it expected that one must support all of it?

Just curious, thanks for your time.

from kitty.

The protocol specification is complete, has not had any additions in years. If there are additions, they will be compatible. All current keys and actions will not change their meanings (the only exception is to correct security issues, if any). You only need to support the spec as it is now, there are no prior versions to worry about. The spec includes techniques for detecting if a terminal supports it.

The fact that when you delete text unrelated images get distorted is one of the major warts of sixel, so kitty images are never going to behave like that.

You can support whatever subset of the full protocol makes sense for you. But note that there are fundamental semantic differences between the kitty protocol and sixel, not sure how you would paper over those in a single API. You mention one, with text erase distorting images. Wrapping behavior is another. And of course the kitty protocol does far more than sixel will ever be able to.

from kitty.

commented on May 14, 2024


The protocol specification is complete, has not had any additions in years.

Looking at the specification, some comments:

General:

- It would be nice if the specification web page was available as raw text or markdown.

- You should define your expectation for C0/C1 control characters received during an APC sequence. I think most people are now familiar with Williams' state machine, where C0/C1 for ESCAPE, CSI, OSC, DCS, SOS, PM, and APC will always go to another state, and SOS/PM/APC can still see the other C0/C1 without acting on them, but you never know.

- Is there a required codepage for this protocol? Unicode already defines encoding as beneath the VT100 emulation layer, and your error message text says ASCII, but there might be other reasons to insist on e.g. UTF-8.

- How does the application determine the terminal's support for images without actually trying to display an image and waiting for timeout or seeing if the cursor moved or not? Why not have a DA flag for this, same as sixel does?

In order to know what size of images to display and how to position them, the client must be able to get the window size in pixels and the number of cells per row and column. This can be done by using the TIOCGWINSZ ioctl. ... CSI 14 t

Some other methods for row/column count: ioctl (or in my case 'stty size'), CSI 18 t, the underlying protocol window size option (e.g. telnet NAWS option, rlogin window size), or move the cursor to something very large and use DSR 6 (still used by a lot of things).
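For the escape-code route, a minimal sketch of turning the two xterm-style reports into a cell size. It assumes the usual reply formats, `CSI 4 ; height ; width t` for `CSI 14 t` and `CSI 8 ; rows ; cols t` for `CSI 18 t`; reading the reply from the tty is left out and the function names are made up for illustration.

```python
import re

# Replies to CSI 14 t (window size in pixels) and CSI 18 t (size in cells).
REPORT = re.compile(r"\x1b\[([48]);(\d+);(\d+)t")


def parse_size_report(reply: str):
    """Return ('pixels' | 'cells', height, width), or None if unrecognised."""
    match = REPORT.fullmatch(reply)
    if match is None:
        return None
    kind = "pixels" if match.group(1) == "4" else "cells"
    return kind, int(match.group(2)), int(match.group(3))


def cell_size_from_reports(pixel_reply: str, cell_reply: str):
    """Return (cell_width, cell_height) in pixels, or None if unusable."""
    pixels = parse_size_report(pixel_reply)
    cells = parse_size_report(cell_reply)
    if not pixels or not cells or pixels[0] != "pixels" or cells[0] != "cells":
        return None
    _, px_h, px_w = pixels
    _, rows, cols = cells
    if rows == 0 or cols == 0:
        return None
    return px_w // cols, px_h // rows


if __name__ == "__main__":
    print(cell_size_from_reports("\x1b[4;480;800t", "\x1b[8;24;80t"))
```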

<ESC>_G<control data>;<payload><ESC>\

What should the terminal do when control data/payload don't work, i.e. when some other application uses APC for its own needs that happens to start with 'G'? Silently ignore it?
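One possible answer, sketched below, is "silently ignore": pull complete APC sequences out of the stream, look only at those starting with 'G', and drop any whose control data is not a comma-separated list of key=value pairs. This is an illustration of that policy, not what the spec mandates, and the function names are hypothetical.

```python
ESC = "\x1b"
APC_START = ESC + "_"
ST = ESC + "\\"


def split_apc(stream: str):
    """Yield ('text', s) and ('apc', body) items in stream order.

    An APC with no terminating ST is dropped in this sketch.
    """
    pos = 0
    while True:
        start = stream.find(APC_START, pos)
        if start < 0:
            if pos < len(stream):
                yield ("text", stream[pos:])
            return
        if start > pos:
            yield ("text", stream[pos:start])
        end = stream.find(ST, start + 2)
        if end < 0:
            return
        yield ("apc", stream[start + 2:end])
        pos = end + 2


def graphics_commands(stream: str) -> list:
    """Return (control, payload) pairs for well-formed 'G' APC codes only."""
    commands = []
    for kind, body in split_apc(stream):
        if kind != "apc" or not body.startswith("G"):
            continue  # some other application's APC: not ours
        control, _, payload = body[1:].partition(";")
        if not all("=" in field for field in control.split(",")):
            continue  # starts with 'G' but is not key=value data: ignore
        commands.append((control, payload))
    return commands
```

With this policy a foreign APC that happens to begin with 'G' produces neither output nor an error reply.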

The terminal emulator must understand pixel data in three formats, 24-bit RGB, 32-bit RGBA and PNG.

PNG is not a pixel data format, it is a file format. Why limit to PNG? Why not JPG, TIFF, BMP, etc? For that matter, why not AVI or MP4?

What should the terminal do if it cannot understand the pixel format provided (e.g. 24-bit RGB but not 32-bit RGBA)? Not display an image at all? Not reserve an image ID? Tell the application somehow that the image did not display?

Here the width and height are specified using the s and v keys respectively. Since f=24 there are three bytes per pixel and therefore the pixel data must be 3 * 10 * 20 = 600 bytes.

Could consider adding a link to the summary table here.
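The arithmetic in the quoted passage generalises directly. A minimal sketch of the size check a terminal (or a client, before sending) could run on raw pixel data; the function names are made up for illustration and only the two raw formats named in the spec are covered:

```python
import base64

# Bytes per pixel for the raw formats: f=24 is RGB, f=32 is RGBA.
BYTES_PER_PIXEL = {24: 3, 32: 4}


def expected_size(fmt: int, width: int, height: int) -> int:
    """Number of raw bytes for a width x height image in format fmt."""
    return BYTES_PER_PIXEL[fmt] * width * height


def payload_matches(fmt: int, width: int, height: int, b64_payload: bytes) -> bool:
    """True if the decoded payload has exactly the expected byte count."""
    data = base64.standard_b64decode(b64_payload)
    return len(data) == expected_size(fmt, width, height)


if __name__ == "__main__":
    # The example from the spec: f=24, s=10, v=20.
    print(expected_size(24, 10, 20))
```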

Where does the text cursor position end up after an image is successfully displayed? Does it move at all? Does it end up on the row below, or column to the right of the image? Should the screen scroll if the image is too tall? What if the image is wider than the screen?

Currently, only zlib based deflate compression is supported, which is specified using o=z.

Which deflate-based program are you referring to? Can I just run the pixel data through gzip?
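The distinction matters in practice because zlib and gzip wrap the same deflate data in different containers. A small sketch using Python's standard library shows that a decoder expecting a zlib stream rejects gzip output; whether a given terminal accepts gzip framing is for the spec to say, and nothing here settles that.

```python
import gzip
import zlib

pixels = bytes(range(256)) * 4  # stand-in for raw pixel data

zlib_stream = zlib.compress(pixels)  # zlib container (RFC 1950)
gzip_stream = gzip.compress(pixels)  # gzip container (RFC 1952)

# The containers start with different header bytes.
print(zlib_stream[:1].hex(), gzip_stream[:2].hex())


def inflate_zlib(data: bytes):
    """Decompress a zlib stream; return None if the framing is wrong."""
    try:
        return zlib.decompress(data)
    except zlib.error:
        return None


assert inflate_zlib(zlib_stream) == pixels
assert inflate_zlib(gzip_stream) is None  # gzip header is not a zlib header
```

So piping pixel data through the `gzip` command line tool would not produce what a zlib-only decoder expects.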

Transmission Medium

t | A temporary file, the terminal emulator will delete the file after reading the pixel data. For security reasons the terminal emulator should only delete the file if it is in a known temporary directory, such as /tmp, /dev/shm, TMPDIR env var if present and any platform specific temporary directories.

How does the temporary file get there in the first place? The application puts it there, then sends the sequence to display it? This seems a security risk: it wouldn't be hard to fool the terminal into deleting the wrong file via clever use of symlinks and relative paths inside a tmp directory.
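To make the concern concrete, here is a minimal sketch of the kind of check a terminal would need before deleting: refuse symlinks and only accept files whose fully resolved path lies under an allowed directory. The directory list mirrors the one quoted from the spec; the function names are hypothetical, and a check-then-delete race remains, so this is an illustration rather than a complete defence.

```python
import os
import tempfile


def allowed_temp_dirs() -> set:
    """Resolved paths of the directories in which deletion is permitted."""
    dirs = {"/tmp", "/dev/shm", tempfile.gettempdir()}
    env = os.environ.get("TMPDIR")
    if env:
        dirs.add(env)
    return {os.path.realpath(d) for d in dirs}


def may_delete(path: str, allowed=None) -> bool:
    """True only for a regular, non-symlink file inside an allowed directory.

    'allowed' is expected to contain already-resolved absolute paths.
    """
    if allowed is None:
        allowed = allowed_temp_dirs()
    if os.path.islink(path):
        return False  # never follow a symlink to its target
    real = os.path.realpath(path)  # also resolves '..' and symlinked parents
    if not os.path.isfile(real):
        return False
    return any(os.path.commonpath([real, d]) == d for d in allowed)
```

A path such as `/tmp/x/../../etc/passwd` resolves outside the allowed set and is rejected.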

s | A POSIX shared memory object. The terminal emulator will delete it after reading the pixel data

What about Windows users? They have a different shared memory model. Should a Windows-based terminal silently ignore this image, or respond somehow that it could not display it?

This tells the terminal emulator to read 80 bytes starting from the offset 10 inside the specified shared memory buffer.

This is screaming "security hole."

Since escape codes are of limited maximum length, the data will need to be chunked up for transfer.

Why this particular maximum length? According to Dickey: "string parameters (such as setting the title on a window) do not have a predefined limit on their length."

The client then sends the graphics escape code as usual, with the addition of an m key that must have the value 1 for all but the last chunk, where it must be 0.

After the chunked data has been received and reassembled, if the resulting image data is not a valid PNG (or future file format) or RGB/RGBA, what should the terminal do? Silently ignore the whole thing? Display a partial image, since it knows how many rows/columns the image was supposed to be shown in?

Since each chunk is a whole APC sequence, what should happen when printable characters or other VT100/Xterm sequences come in between chunks? Should the image be displayed where the cursor was at the first chunk received, or the final chunk? Or should anything that comes in between chunks cause this image to be treated as corrupt/discarded?
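For readers following along, a minimal sketch of the client side of chunking as described in the quoted text: base64 the data, split it, and set m=1 on every chunk except the last. The 4096 chunk size is an assumption borrowed from the published spec rather than from this thread, and putting the other control keys only on the first chunk is likewise an assumption of this sketch.

```python
import base64

ESC = "\x1b"


def chunked_commands(control: str, data: bytes, chunk_size: int = 4096) -> list:
    """Return the list of APC escape codes needed to send data in chunks."""
    if chunk_size % 4:
        raise ValueError("chunk_size must be a multiple of 4 for base64")
    payload = base64.standard_b64encode(data).decode("ascii")
    chunks = [payload[i:i + chunk_size]
              for i in range(0, len(payload), chunk_size)] or [""]
    commands = []
    for n, chunk in enumerate(chunks):
        more = 0 if n == len(chunks) - 1 else 1  # m=0 marks the last chunk
        keys = f"{control},m={more}" if n == 0 else f"m={more}"
        commands.append(f"{ESC}_G{keys};{chunk}{ESC}\\")
    return commands


if __name__ == "__main__":
    # 100 x 33 pixels of 24-bit RGB = 9900 bytes of raw data.
    for cmd in chunked_commands("f=24,s=100,v=33", bytes(9900)):
        print(len(cmd))
```

What a terminal should do with text that arrives between two of these chunks is exactly the open question above; the sketch does not answer it.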

to which the terminal emulator will reply (after trying to load the data):

<ESC>_Gi=31;error message or OK<ESC>\

What about localization? Is the error message expected to be in English only? What kind of scrubbing/sanitation must be performed on the error message (ASCII includes C0 control characters after all)?
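Until the spec answers this, a client can defend itself. A minimal sketch of parsing the reply and stripping everything outside printable ASCII from the message before showing it; the function name and the example error text are hypothetical.

```python
import re

# Reply shape from the spec: ESC _ G <keys> ; <message> ESC \
REPLY = re.compile(r"\x1b_G([^;\x1b]*);([^\x1b]*)\x1b\\")


def parse_reply(reply: str):
    """Return {'id', 'ok', 'message'} for a graphics reply, else None."""
    match = REPLY.fullmatch(reply)
    if match is None:
        return None
    keys = dict(field.split("=", 1)
                for field in match.group(1).split(",") if "=" in field)
    # Keep printable ASCII only, dropping C0 controls and anything else.
    message = "".join(ch for ch in match.group(2) if " " <= ch <= "~")
    image_id = keys.get("i", "")
    return {
        "id": int(image_id) if image_id.isdigit() else None,
        "ok": message == "OK",
        "message": message,
    }


if __name__ == "__main__":
    print(parse_reply("\x1b_Gi=31;OK\x1b\\"))
```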

or if you are sending a dummy image and do not want it stored by the terminal emulator

What is the difference between a dummy image and a not-dummy image?

then display it with a=p,i=10 which will display the previously transmitted image at the current cursor position.

Repeating the question earlier: where does the cursor go after the image is displayed?

Why bother drawing at the actual cursor at all? Add keys to pick the text row/column, and say that the cursor does not move: then there would be no further ambiguity.

Note that the offsets must be smaller than the size of the cell.

What happens if they are not? Is the image not displayed at all? Why not allow negative offsets (imagine an application mouse-dragging a window pixel-by-pixel all over the screen)?

The image will be scaled (enlarged/shrunk) as needed to fit the specified area.

There are three scaling possibilities: stretch to fix X only and stretch/shrink Y, stretch to fix Y only and stretch/shrink X, and stretch/shrink both. How does the application select which option it wants?

You can specify z-index values using the z key. Negative z-index values mean that the images will be drawn under the text. This allows rendering of text on top of images.

What if the application wants the text background color to cover the image (no blending, no overlay: text is text)? Does it have to display a background-color image over that cell first, then text?

If z is defined for images, why not text too? Add a sequence to select the text z order and current alpha blending value, then write text under or over existing.
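As I read the quoted rule, text behaves like a fixed layer between the negative and the non-negative z values. A tiny sketch of that painting order; treating z = 0 as "above text" is my assumption, and the names are made up for illustration.

```python
def draw_order(images: list) -> list:
    """Given (name, z) pairs, return names in painting order.

    'TEXT' marks where the text layer is painted: after every image with a
    negative z-index and before the rest.
    """
    ordered = sorted(images, key=lambda image: image[1])  # stable sort by z
    below = [name for name, z in ordered if z < 0]
    above = [name for name, z in ordered if z >= 0]
    return below + ["TEXT"] + above


if __name__ == "__main__":
    print(draw_order([("logo", 1), ("bg", -1), ("photo", 0), ("deep", -5)]))
```

Under this model there is no z value that lets a cell's background color hide an image placed above the text, which is the gap the two questions above point at.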

The uppercase variants will delete the image data as well, provided that the image is not referenced elsewhere, such as in the scrollback buffer.

How should the terminal tell the application that the image data was not actually deleted because it was used in the scrollback buffer?

When adding a new image, if the total size exceeds the quota, the terminal emulator should delete older images to make space for the new one.

How should the terminal tell the application that an image somewhere was deleted because it exceeded the quota? Someday we will all be using retina displays where 320MB will feel quaint.
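A minimal sketch of the eviction rule in the quoted sentence, written so that the store at least knows which ids it dropped. Returning that list is my illustration of what a terminal could report back; the spec does not describe such a notification, and the class name is hypothetical.

```python
from collections import OrderedDict


class ImageStore:
    """Oldest-first eviction of image data under a byte quota."""

    def __init__(self, quota_bytes: int):
        self.quota = quota_bytes
        self.images = OrderedDict()  # image id -> data, oldest first
        self.used = 0

    def add(self, image_id: int, data: bytes) -> list:
        """Store an image; return the ids evicted to make room for it."""
        evicted = []
        if image_id in self.images:  # re-sending an id replaces the old data
            self.used -= len(self.images.pop(image_id))
        while self.images and self.used + len(data) > self.quota:
            old_id, old_data = self.images.popitem(last=False)
            self.used -= len(old_data)
            evicted.append(old_id)
        # An image larger than the whole quota is still stored in this sketch.
        self.images[image_id] = data
        self.used += len(data)
        return evicted


if __name__ == "__main__":
    store = ImageStore(100)
    for image_id in (1, 2, 3):
        print(image_id, store.add(image_id, bytes(40)))
```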

The other commands to erase text must have no effect on graphics. The dedicated delete graphics commands must be used for those.

Going back to the question on text completely covering images: this spec requires that covering an image with text means either making numerous calls to display pieces of an image around where the text will go, or having snippets of text background color as images to cover up the cells that the text will draw on. Not a problem for Jexer -- it already displays images as strips of text-cell-sized image pieces -- but could be a headache for less advanced systems.

When switching from the main screen to the alternate screen buffer (1049 private mode) all images in the alternate screen must be cleared, just as all text is cleared.

Does clearing them on screen also delete them?

The clear screen escape code (usually <ESC>[2J) should also clear all images. This is so that the clear command works.

I don't see why clear screen is more important than erase line.

Interaction with other terminal actions

What about:

- Reverse video (DECSCNM)? Should images be reverse-video too?

- Double-width / double-height lines (DECSWL, DECDWL, DECDHL). Should images also stretch like the text above them?

- Copy-paste: should the terminal be able to copy images to the system clipboard? Should the application be able to request whatever is on the system clipboard to be displayed on screen if it is an image?

- Image memory management: How can an application find out which image IDs are in use? And how much memory they consume?

Summary thoughts:

- This spec seems good for displaying thumbnails and supporting tiling window managers. The inability for text to fully cover images will make it less convenient for cascading / floating window managers. But the ability to draw offsets from the same image might make up for that inconvenience.

- The focus here seems to be general-purpose mapping of rectangular text cell regions to 2-dimensional pixel-based file data. PNG and RGB/RGBA feel like too little gain for this, it should be both expanded to include animations (meaning time offsets and looping), and not married to PNG format (use something like generic mime-type discussed at https://gitlab.freedesktop.org/terminal-wg/specifications/issues/12 ).

- There is not enough consideration for failure modes. Sixel is pretty simple: if it is malformed, nothing is displayed and the cursor does not move, otherwise the text cursor moves with the sixel "print head"; if artifacts are left on screen, so be it, the application has to figure it out. Through multiple sections the cursor is mentioned, but where the cursor ends up at the end of an image render is not defined.

from kitty.


commented on May 14, 2024

On Tue, Aug 27, 2019 at 05:46:20AM -0700, Kevin Lamonte wrote:

> The protocol specification is complete, has not had any additions in years.

Looking at the specification, some comments:

General:

- It would be nice if the specification web page was available as raw text or markdown.

It is, look in the kitty repo.

OK, I will. Is there more to the spec than the web page? You say in several other responses "it is in the spec", but I am not seeing it in the web page.

- You should define your expectation for C0/C1 control characters received during an APC sequence. I think most people are now familiar with Williams' state machine, where C0/C1 for ESCAPE, CSI, OSC, DCS, SOS, PM, and APC will always go to another state, and SOS/PM/APC can still see the other C0/C1 without acting on them, but you never know.

This has nothing to do with this spec. C0 and C1 escape codes inside APC codes are invalid and cause the entire APC code to not be parsed.

Looking at the spec, we are both incorrect. C0 and C1 are acceptable inside APC codes:

Application program command | APC 9/15 | Introduces an application program command.*

*The VT510 ignores all following characters until it receives a SUB, ST, or any other C1 control character.

Same with PM and OSC. I am wrong in that C1 control codes are processed within APC/PM/OSC. You are wrong in that C0 (except SUB and ST) are valid data inside APC.

- Is there a required codepage for this protocol? Unicode already defines encoding as beneath the VT100 emulation layer, and your error message text says ASCII, but there might be other reasons to insist on e.g. UTF-8.

Look carefully and you will see that it uses only a-zA-Z0-9=/, so no. And pretty much all modern terminals/terminal applications use UTF-8.

OK.

- How does the application determine the terminal's support for images without actually trying to display an image and waiting for timeout or seeing if the cursor moved or not? Why not have a DA flag for this, same as sixel does?

This is addressed in detail in the spec.

I did not find this spelled out in the web page, but I think I see what you are getting at now. More below.

Huh? The spec explicitly mentions the ioctl and CSI t. I have no interest in specifying any other methods. If a client application wants to use other methods, it is free to do so, the spec does not care.

Fine.

<ESC>_G<control data>;<payload><ESC>\ What should the terminal do when control data/payload don't work, i.e. when some other application uses APC for its own needs that happens to start with 'G'? Silently ignore it?

Yes, this is what terminals are supposed to do with all malformed escape codes in general. Has nothing to do with this spec.

OK.

The terminal emulator must understand pixel data in three formats, 24-bit RGB, 32-bit RGBA and PNG. PNG is not a pixel data format, it is a file format. Why limit to PNG? Why not JPG, TIFF, BMP, etc? For that matter, why not AVI or MP4?

No, PNG is a pixel data format.

If you want to assert that all of this just means pixels, more power to you.

What should the terminal do if it cannot understand the pixel format provided (e.g. 24-bit RGB but not 32-bit RGBA)? Not display an image at all? Not reserve an image ID? Tell the application somehow that the image did not display?

If a terminal does not understand one of these extremely simple formats, then it is not in spec compliance.

OK.

Here the width and height are specified using the s and v keys respectively. Since f=24 there are three bytes per pixel and therefore the pixel data must be 3 * 10 * 20 = 600 bytes. Could consider adding a link to the summary table here. Where does the text cursor position end up after an image is successfully displayed? Does it move at all? Does it end up on the row below, or column to the right of the image? Should the screen scroll if the image is too tall? What if the image is wider than the screen?

The cursor position is not well defined, so applications should not rely on it. They can simply reposition it wherever they like. And yes, screen will scroll if image is too tall. If the image is wider than the screen behavior is again undefined, so well designed applications which already know the screen size, should not rely on it.
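The byte count quoted above (3 * 10 * 20 = 600 for f=24) can be checked before anything is sent. The following is a minimal sketch, not kitty's own code: the function names are made up for illustration, and it assumes the payload is base64 encoded and that the image is sent in a single escape code.

```python
import base64


def rgb_payload_size(width: int, height: int, bits_per_pixel: int = 24) -> int:
    """Raw pixel bytes expected for f=24 (RGB) or f=32 (RGBA)."""
    if bits_per_pixel not in (24, 32):
        raise ValueError("only f=24 and f=32 are raw pixel formats")
    return (bits_per_pixel // 8) * width * height


def transmit_rgb(width: int, height: int, pixels: bytes) -> bytes:
    """Build one graphics escape code carrying 24-bit RGB data (hypothetical helper)."""
    expected = rgb_payload_size(width, height, 24)
    if len(pixels) != expected:
        raise ValueError(f"expected {expected} bytes, got {len(pixels)}")
    payload = base64.standard_b64encode(pixels)  # assumption: payload is base64
    control = f"f=24,s={width},v={height}".encode("ascii")
    return b"\x1b_G" + control + b";" + payload + b"\x1b\\"


code = transmit_rgb(10, 20, bytes(600))  # 600 zero bytes: a black 10x20 image
```

Rejecting a mismatched length on the application side avoids relying on whatever the terminal does with a short or oversized payload.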

Regarding scrolling: you define the need to clip to scroll regions, why not also define the need to clip to screen size?

Also one inconvenience with sixel support in xterm is that sixel images drawn on the bottom row causes xterm to scroll (even when the "print head" does not require it). Does this spec expect the same behavior?

This is addressed in the spec, the terminal is only allowed to delete files inside well known temp directories. And obv it will realpath() before deleting things. If it does not do that, that is a bug in its implementation.
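The realpath() check described above might look roughly like this. It is only a sketch of the idea, not what kitty does internally: the function name and the default list of temp directories are assumptions.

```python
import os
import tempfile


def is_deletable(path, temp_dirs=None):
    """Return True only if path, after resolving symlinks, lies inside a temp directory."""
    if temp_dirs is None:
        temp_dirs = [tempfile.gettempdir()]  # assumption: the "well known" directories
    real = os.path.realpath(path)
    for directory in temp_dirs:
        root = os.path.realpath(directory)
        try:
            inside = os.path.commonpath([real, root]) == root
        except ValueError:
            inside = False  # e.g. paths on different drives
        if inside and real != root:
            return True
    return False
```

Resolving the path first means a symlink placed in /tmp that points elsewhere is judged by its target, so it is refused.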

What happens if the file in /tmp is not an image? Should the terminal's behavior be: don't display it, report error, and delete it anyway?

s | A POSIX shared memory object. The terminal emulator will delete it after reading the pixel data What about Windows users? They have a different shared memory model. Should a Windows-based terminal silently ignore this image, or respond somehow that it could not display it?

No, Windows supports named shared memory as well. However, I have not looked into it, but since an application can only use shared memory if running on the same machine as the terminal, an application running on Windows can simply use files.

But what should the Windows-based terminal do right now if they are to be deemed "in compliance"? They don't have POSIX shared memory objects, so obviously cannot display the image. If they notify the application that the image failed to display, are they in compliance?

This tells the terminal emulator to read 80 bytes starting from the offset 10 inside the specified shared memory buffer. This is screaming "security hole."

To you maybe. When I write software that receives data that specifies that a sub-region of a buffer needs to be processed, I bounds check the sub-region.

Good to know that your secure coding skills exceed those of most major vendors.

But let's say that us less skilled developers decide not to support shared memory buffers because we are unsure our skills are up to it. Will a terminal that refuses to support shared memory buffers be deemed in compliance with the spec or not?
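For reference, the bounds check being argued about is small. A minimal sketch, with a plain bytes object standing in for the shared memory buffer and a hypothetical function name:

```python
def read_subregion(buffer: bytes, offset: int, size: int) -> bytes:
    """Return size bytes starting at offset, refusing anything outside the buffer."""
    if offset < 0 or size < 0:
        raise ValueError("offset and size must be non-negative")
    if offset > len(buffer) or size > len(buffer) - offset:
        raise ValueError("requested region extends past the end of the buffer")
    return buffer[offset:offset + size]


# The case from the spec text: 80 bytes starting from offset 10.
region = read_subregion(bytes(range(100)), 10, 80)
```

Comparing size against len(buffer) - offset rather than adding offset + size matters in languages with fixed-width integers, where the sum can overflow; in Python it is just a habit worth keeping.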

Since escape codes are of limited maximum length, the data will need to be chunked up for transfer. Why this particular maximum length? According to Dickey: "string parameters (such as setting the title on a window) do not have a predefined limit on their length."

The idea of infinite length escape sequences is absurd, Thomas Dickey notwithstanding. Anybody that has ever written an escape code parser will know that. Infinite length escape codes actually do scream security holes, unlike offsets into buffers.

A limit clearly must exist. The question is why 4096? Did you do some kind of tests to come to this value?

The client then sends the graphics escape code as usual, with the addition of an m key that must have the value 1 for all but the last chunk, where it must be 0. After the chunked data has been received and reassembled, if the resulting image data is not a valid PNG (or future file format) or RGB/RGBA, what should the terminal do? Silently ignore the whole thing? Display a partial image, since it knows how many rows/columns the image was supposed to be shown in?

It returns an error, as specified in the protocol.
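The chunking scheme with the m key can be sketched as follows. This is an illustration under assumptions, not a reference implementation: it assumes a base64 payload, the 4096 limit mentioned above, and that only the first chunk carries the full control data while later chunks carry just m. The function name is hypothetical.

```python
import base64

CHUNK_SIZE = 4096  # limit discussed above; must be a multiple of 4 for base64


def chunked_escape_codes(control: str, data: bytes, chunk_size: int = CHUNK_SIZE):
    """Split data into graphics escape codes with m=1 on all but the last, m=0 on the last."""
    if chunk_size <= 0 or chunk_size % 4:
        raise ValueError("chunk_size must be a positive multiple of 4")
    payload = base64.standard_b64encode(data)
    chunks = [payload[i:i + chunk_size] for i in range(0, len(payload), chunk_size)] or [b""]
    codes = []
    for index, chunk in enumerate(chunks):
        more = 0 if index == len(chunks) - 1 else 1
        # Assumption: control keys only need to appear in the first chunk.
        parts = ([control] if index == 0 and control else []) + [f"m={more}"]
        codes.append(b"\x1b_G" + ",".join(parts).encode("ascii") + b";" + chunk + b"\x1b\\")
    return codes


codes = chunked_escape_codes("f=24,s=10,v=20", bytes(7000))
```

Keeping every non-final chunk a multiple of 4 base64 characters means each chunk decodes on its own, so the receiver does not have to buffer partial base64 groups.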

The only error reporting I see in the web page is in the "Detecting available transmission mediums" section and "Display images on screen". These two error reports are a) different in structure, and b) not clear if they are intended to be general-purpose and apply to the rest of the protocol actions.

Since each chunk is a whole APC sequence, what should happen when printable characters or other VT100/Xterm sequences come in between chunks? Should the image be displayed where the cursor was at the first chunk received, or the final chunk? Or should anything that comes in between chunks cause this image to be treated as corrupt/discarded?

No image is displayed until the sequence is complete. It is up to the terminal emulator to implement whatever policy it likes on how long to wait for partial sequences to be completed.

I guess I didn't make my question clear. If an "in compliance" terminal receives the following:

- CUP(0, 0).

- Chunk 1.

- Chunk 2.

- CUP(10, 10).

- Chunk 3, and final.

Will the image be displayed? If not, what is the kind of error to report to the application ("incomplete image", "out of sequence", "bad data", ...)? If yes, where on screen will it be?

to which the terminal emulator will reply (after trying to load the data): <ESC>_Gi=31;error message or OK<ESC>\ What about localization? Is the error message expected to be in English only? What kind of scrubbing/sanitation must be performed on the error message (ASCII includes C0 control characters after all)?

The OK message is the only defined thing. Anything else is an ERROR. I am not going to list all the various possible errors in the spec. Terminal developers can be as helpful or not with their error messages.

That's fine, but since error messages have no structure or defined numeric codes to refer to it will be harder for applications to perform graceful fallback. Is graceful fallback something you want this standard to support? I could see applications trying several different strategies depending on the error they might get: if an image exceeds memory, try to downsample it or use a smaller amount of screen space; if shared memory won't work, try local disk, and if that fails resort to chunking the image data.
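The fallback strategy described above (shared memory, then local disk, then chunked data) could be driven like this. It is a sketch only: probe is a hypothetical callable that sends a query for one transmission medium and returns the terminal's message, and the medium letters other than s are assumptions here.

```python
def first_working_medium(probe, order=("s", "f", "d")):
    """Try each medium in order; return (medium, failures) for the first that answers OK."""
    failures = {}
    for medium in order:
        message = probe(medium)
        if message == "OK":
            return medium, failures
        # Only OK is defined; anything else is kept as an opaque error string.
        failures[medium] = message
    return None, failures


# Example with canned replies instead of a real terminal.
replies = {"s": "EBADF:no shared memory", "f": "OK", "d": "OK"}
result = first_working_medium(replies.get)
```

Because the error text is free-form, the loop can only distinguish OK from not OK; choosing a smarter next step (for example downsampling on a memory error) would need structured error codes.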

or if you are sending a dummy image and do not want it stored by the terminal emulator What is the difference between a dummy image and a not-dummy image?

A name.

So do you really mean something like this at this part of the spec?

"An application can determine image support from the terminal by attempting to load, but not store or display, an image using one of the transmission mediums defined above. To do this, it can use the query action, set a=q. Then the terminal emulator will try to load the image and respond with either OK or an error. If OK, then the application can assume that images of this format and medium are both storable and displayable. If error, the application can try different mediums until one comes back OK."

If so, can 'a=q' be used to query the other capabilities of the terminal?
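A sketch of the query round trip, assuming the reply has the form shown earlier (<ESC>_Gi=31;error message or OK<ESC>\). The helper names are hypothetical, and the query uses a 1x1 RGB dummy image sent directly in the escape code.

```python
import re

# ESC _ G <keys> ; <message> ESC \
RESPONSE = re.compile(rb"\x1b_G([^;\x1b]*);([^\x1b]*)\x1b\\")


def build_direct_query(image_id: int) -> bytes:
    """Query (a=q) with a 1x1 24-bit dummy image; AAAA is base64 for three zero bytes."""
    return b"\x1b_Gi=%d,s=1,v=1,a=q,t=d,f=24;AAAA\x1b\\" % image_id


def parse_response(raw: bytes):
    """Return (image_id, ok, message), or None if no graphics reply is present."""
    match = RESPONSE.search(raw)
    if match is None:
        return None
    keys = dict(item.split(b"=", 1) for item in match.group(1).split(b",") if b"=" in item)
    id_text = keys.get(b"i", b"")
    image_id = int(id_text.decode("ascii")) if id_text.isdigit() else None
    message = match.group(2).decode("ascii", "replace")
    return image_id, message == "OK", message
```

Decoding the message with errors="replace" and never echoing it back to the terminal is one way to sidestep the C0 scrubbing question for error text.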

then display it with a=p,i=10 which will display the previously transmitted image at the current cursor position. Repeating the question earlier: where does the cursor go after the image is displayed? Why bother drawing at the actual cursor at all? Add keys to pick the text row/column, and say that the cursor does not move: then there would be no further ambiguity.

You can say that the cursor does not move in either case, do not have to use extra keys for it. Again, not defined, applications should not rely on any particular cursor position.

If applications can't deterministically know where the cursor position should be, then libraries such as ncurses might never be able to support this protocol. Dickey waited for a de facto standard before doing it, yet here we are now at ncurses 6.1 supporting 24-bit RGB. If this spec could be ironed out then it could be pretty cool for ncurses 8.x or whatever to be able to do images, and even optimize transparently to the application for local/remote.

Note that the offsets must be smaller than the size of the cell. What happens if they are not? Is the image not displayed at all? Why not allow negative offsets (imagine an application mouse-dragging a window pixel-by-pixel all over the screen)?

A negative offset is the same as positive offset in a prev cell. And if they are not, the terminal is free to do whatever it wants, once again, applications sending invalid things can have no expectations with regard to the result.

Up top you say "invalid sequences should be ignored", yet here you say "invalid sequences can put whatever they want on the screen, or nothing". Which one do you want?

The image will be scaled (enlarged/shrunk) as needed to fit the specified area. There are three scaling possibilities: stretch to fix X only and stretch/shrink Y, stretch to fix Y only and stretch/shrink X, and stretch/shrink both. How does the application select which option it wants?

By resizing the image itself before sending it.

If the application has to scale the image, why does the spec say the terminal will scale it? Conversely, if the terminal is scaling the image, which (or both) axis is getting altered?

You can specify z-index values using the z key. Negative z-index values mean that the images will be drawn under the text. This allows rendering of text on top of images. What if the application wants the text background color to cover the image (no blending, no overlay: text is text)? Does it have to display a background-color image over that cell first, then text?

yes.

OK.

If z is defined for images, why not text too? Add a sequence to select the text z order and current alpha blending value, then write text under or over existing.

because that is needless complication.

Seems a bit arbitrary where the "needless complication" line is, but OK.

The uppercase variants will delete the image data as well, provided that the image is not referenced elsewhere, such as in the scrollback buffer. How should the terminal tell the application that the image data was not actually deleted because it was used in the scrollback buffer?

Why does the application care? If it wants to explicitly manage images it should use ids and delete using those ids.

It doesn't matter to you (the terminal) why the application cares. You have an error condition, with no defined error action. If the application requests the terminal do something, and the terminal doesn't do it, shouldn't the application have some way of knowing that it needs to try something else?

When adding a new image, if the total size exceeds the quota, the terminal emulator should delete older images to make space for the new one. How should the terminal tell the application that an image somewhere was deleted because it exceeded the quota? Someday we will all be using retina displays where 320MB will feel quaint.

It should not. There is no guarantee that an application currently running is the application that originally sent the image. If an application wants to guarantee an image is displayed then it should use the querying facilities for that purpose.

So the terminal manages memory, applications will have no notification of memory actions, if they step on each other they won't know. But inside each application's screen they can get some guarantee that their bitmaps are or are not on the screen. OK.

The other commands to erase text must have no effect on graphics. The dedicated delete graphics commands must be used for those. Going back to the question on text completely covering images: this spec requires that covering an image with text means either making numerous calls to display pieces of an image around where the text will go, or having snippets of text background color as images to cover up the cells that the text will draw on. Not a problem for Jexer -- it already displays images as strips of text-cell-sized image pieces -- but could be a headache for less advanced systems.

What? If you want to place text on top of an image you simply send the image with a negative z-index, that is all. If for some odd reason you also want a block of solid color on which to write the text, you send that block with a higher but still negative z-index. In actual fact, you would use a semi-transparent block for best results, since opaque blocks covering images look fairly ugly.
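The layering described above can be written out as two display commands. This is a sketch under assumptions: both images have already been transmitted, the IDs 10 and 11 are made up, and place_image is a hypothetical helper.

```python
def place_image(image_id: int, z_index: int = 0) -> bytes:
    """Build a display command (a=p) for an already transmitted image."""
    control = f"a=p,i={image_id},z={z_index}"
    return b"\x1b_G" + control.encode("ascii") + b"\x1b\\"


# Picture at the bottom, semi-transparent block above it, both below the text.
picture = place_image(10, z_index=-2)
backdrop = place_image(11, z_index=-1)
```

Text written afterwards at the same cells is then drawn over both, since both z-index values are negative.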

What I'm getting at is this and this: text window borders overlapping images. (I am unaware of anyone pulling off the trick before Jexer, but would be excited to be corrected.) I am hoping that new multiplexers come up with this capability in the future.

The clear screen escape code (usually <ESC>[2J) should also clear all images. This is so that the clear command works. I don't see why clear screen is more important than erase line.

Because images can span more than a single line, commonly, while spanning more than a single screen is rare. Besides which users can trigger screen clears easily via the clear command. It would be extremely surprising if clear cleared text but not images.

But why only for a full screen clear? Why can't a program like tmux/screen clear say only the top half of a screen, clipping the image, because the bottom half is another terminal? Right now their only means of doing that is either drawing a bunch more background color images on top of what they need, or clearing/redrawing the bits of the image that should remain visible.

Also: If the screen is cleared, are the images that were on screen deleted in the ID cache too (i.e. the application has to reload them to display them again)?

- Image memory management: How can an application find out which image IDs are in use? And how much memory they consume?

It cannot. It simply uses its own ids. If they conflict with ids from a previous application, they will overwrite.

Do you envision this spec being used by multiple different applications on the same physical screen, or is it targeted to applications that can assume they have the entire physical screen? Is it supposed to fit into the same ecosystem as tilix, terminator, and tmux/screen?

Example: for tmux/screen, sixel is currently hacked by way of bypassing the window manager and sending the DCS sequence directly to the host terminal; if one had a tmux session with multiple terminals on screen, and ran neofetch or icat or something, and they all used ID 1, ID 2, etc. then they will step on each other because all of those APC sequences would be routed to the same shared terminal. If an application could guarantee they had unique IDs, then all of these terminals could look good. But if this is not a use case you care about, then OK.

Summary thoughts: * This spec seems good for displaying thumbnails and supporting tiling window managers. The inability for text to fully cover images will make it less convenient for cascading / floating window managers. But the ability to draw offsets from the same image might make up for that inconvenience.

I have no idea what window management has to do with this spec?

Again, this. Terminal window managers like tmux/screen are often asked to support images because people like 'lsix' and such, but they haven't really jumped on it because breaking up images into text cell pieces and putting them back together again is hard, niche, and there aren't enough users asking for it. If this spec makes it easy to chop up images from multiple terminal windows and put them back onto a text-based tiling WM, it might make inroads that sixel hasn't gotten to.

Up to you if that is something you are interested in.

- The focus here seems to be general-purpose mapping of rectangular text cell regions to 2-dimensional pixel-based file data. PNG and RGB/RGBA feel like too little gain for this, it should be both expanded to include animations (meaning time offsets and looping), and not married to PNG format (use something like generic mime-type discussed at https://gitlab.freedesktop.org/terminal-wg/specifications/issues/12 ).

One of the core goals of this spec, as mentioned in its motivations, is to not force terminals to support an arbitrary and ever-growing set of image formats. That is not going to change.

OK.

And animations can be driven purely by the application, there is no need to have the terminal support it specially.

I suppose animations should wait for a different spec. The CPU hit from taking each frame of a mpg, converting to PNG, putting in shared memory, and asking the terminal to display it; vs putting the avi/mp4 in shared memory and asking the terminal to animate it will be very different. But it is premature to optimize for a use case with no users yet.

- There is not enough consideration for failure modes. Sixel is pretty simple: if it is malformed, nothing is displayed and the cursor does not move, otherwise the text cursor moves with the sixel "print head"; if artifacts are left on screen, so be it, the application has to figure it out. Through multiple sections the cursor is mentioned, but where the cursor ends up at the end of an image render is not defined.

The situation is much better here. If the image data/escape codes is invalid, nothing is displayed and the cursor does not move.

OK, thank you for clarifying what happens on the error case (no cursor movement).

There is no need to worry about artifacts or partial images or absurdities like deleting unrelated text distorting displayed images.

For a terminal multiplexer, what the terminal sees has little to do with the underlying images. Being able to treat cells as "parts of the image" and shuffle them around is very useful. Should ncurses (or termbox?) ever get image support, I think it likely that they would optimize the image cell updates just like the text cells. Things like: move down 3 rows, over 2, display 7 cells of image data, up 2 rows, 2 cells of text data, etc. So they would be overwriting image cells very frequently (which is hard to do nicely in this spec), and redisplaying small pieces of the image (which is a nice part of this spec).

from kitty.

Oh and regarding scaling, if you specify an area for display and the image does not fit in it, the terminal emulator will scale the image to make it fit. There is no control over how the scaling is done, if the application needs this control, it should scale the image itself.
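If the application wants control over scaling, it has to compute the target size itself before sending. A minimal sketch of one policy (fit inside the area while keeping the aspect ratio); the function name is hypothetical, and the pixel size of the area is assumed to be known already, for example from the cell size times the number of rows and columns.

```python
def fit_within(width: int, height: int, max_width: int, max_height: int):
    """Largest size with the same aspect ratio that fits in max_width x max_height."""
    if min(width, height, max_width, max_height) <= 0:
        raise ValueError("all dimensions must be positive")
    # Compare width/max_width with height/max_height using integers only.
    if width * max_height >= height * max_width:
        # Width is the limiting dimension.
        return max_width, max(1, height * max_width // width)
    # Height is the limiting dimension.
    return max(1, width * max_height // height), max_height


size = fit_within(800, 600, 400, 400)
```

Note that this also enlarges images smaller than the area; an application that only wants to shrink can skip the call when the image already fits.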

from kitty.

There is a reason tmux and its ilk are horrible hacks.

At a time only a single application can control a given tty, having multiple applications simultaneously write to the same tty is chaos and will not work for pretty much anything, even printing out simple text.

IMO terminal multiplexers are horrible hacks

I think our conversation has reached its end then.

from kitty.

It cannot. It simply uses its own ids. If they conflict with ids from a previous application, they will overwrite.

Do you envision this spec being used by multiple different applications on the same physical screen, or is it targeted to applications that can assume they have the entire physical screen? Is it supposed to fit into the same ecosystem as tilix, terminator, and tmux/screen?

Example: for tmux/screen, sixel is currently hacked by way of bypassing the window manager and sending the DCS sequence directly to the host terminal; if one had a tmux session with multiple terminals on screen, and ran neofetch or icat or something, and they all used ID 1, ID 2, etc. then they will step on each other because all of those APC sequences would be routed to the same shared terminal. If an application could guarantee they had unique IDs, then all of these terminals could look good. But if this is not a use case you care about, then OK.

to help reduce the likelihood of this happening, i start at a random ID each time my library is initialized. any attempt to discover what other IDs are in use seems fundamentally racy (in the same way that it's difficult for non-cooperating threads to discover what file descriptors are in use), and with a 24-bit space, collisions are relatively rare (assuming a reasonably-seeded PRNG) outside of pathological cases. IMHO, an ideal solution would be the ability to supply i=-1, at which point kitty would assign you an id (and return that information). practically, i'm not sure i would bother using such functionality, due to my dislike of terminal results and user input being multiplexed onto the same channel [shrug].
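The random starting ID approach can be sketched like this. It is not the library's actual code: the class name is made up, and it assumes IDs run from 1 to 2^24 - 1 with 0 left unused.

```python
import secrets

ID_SPACE = 1 << 24  # the 24-bit space mentioned above


class ImageIdAllocator:
    """Hand out sequential image IDs starting from a random point in 1..2**24-1."""

    def __init__(self, start=None):
        if start is None:
            start = secrets.randbelow(ID_SPACE - 1) + 1
        if not 1 <= start < ID_SPACE:
            raise ValueError("start must be in 1..2**24-1")
        self._next = start

    def allocate(self) -> int:
        image_id = self._next
        # Wrap from 2**24-1 back to 1, skipping 0.
        self._next = self._next % (ID_SPACE - 1) + 1
        return image_id


allocator = ImageIdAllocator()
first_id = allocator.allocate()
```

Two applications sharing one terminal can still collide, just rarely; this lowers the odds without any coordination between them.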

from kitty.
